Happy Thursday, and welcome to CIO Upside.

Today: AI is a double-edged sword in cybersecurity. There are ways to put it to use – and tactics to steer clear of, one expert said. Plus: Big tech and investors are making it rain; and Google is making its data a little fuzzier.

Let’s jump in.

Fighting Fire with Fire: Can AI Be a Cyber-Defense Tool as Well as a Threat?

AI is making cybersecurity tougher, but it can be an ally as well as a dangerous opponent.

As with most technological advances, AI’s capabilities are boons to both legitimate businesses and so-called black hats, threat actors who infiltrate proprietary databases and networks for nefarious reasons, said Joshua McKenty, co-founder and CEO of Polyguard and former chief cloud architect at NASA.

“There are two major effects to AI in cyberattacks right now,” said McKenty. “One, it’s lowered the bar for who can attack … Two, it’s increased the amount of attacks – the surface area, the number of attacks per minute – because of automation.”

In a report published Monday, CrowdStrike found that generative models are helping threat actors with sophisticated social engineering, vulnerability exploitation and reconnaissance. Large language models can now even carry out cyberattacks on their own: Recent research from Carnegie Mellon University found that AI could replicate the 2017 cyberattack on Equifax, autonomously exploiting vulnerabilities, installing malware and stealing data.

With AI increasing the pace and surface area of attacks, “the time to respond with a human in the loop on the enterprise side is disappearing,” said McKenty. “The window of opportunity doesn’t need to be very large for AI systems to be taking advantage of it … everything is being tried at once.”

But enterprises can fight fire with fire: There are a few methods that work and one common one that doesn’t, said McKenty.

- One tactic is doing as the attackers do, he said: automating. Enterprises can use AI to collect and synthesize data that needs to be managed, monitor the dark web for breaches of company data, and track in real time whether someone’s registering lookalike domain names. “Everything that you periodically do for good hygiene … you can now do continuously,” he said.

- Another is triaging and prioritizing what needs the most attention. While AI could be used to automate software patching, “most enterprises are not in a position to (do so),” he said. But the tech can also automate the review of vulnerabilities, picking out which ones should be addressed first.
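The continuous lookalike-domain check described above can be illustrated with a small sketch. It is not McKenty's or any vendor's method: it assumes a feed of newly registered domain names is already available (the `candidates` list here is hypothetical, as is the brand domain `example.com`) and flags names within a small edit distance of the company's own domain. A real monitor would likely also handle homoglyphs, alternate top-level domains and far larger feeds.

```python
def edit_distance(a: str, b: str) -> int:
    """Levenshtein distance: minimum single-character edits to turn a into b."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete from a
                            curr[j - 1] + 1,      # insert into a
                            prev[j - 1] + cost))  # substitute
        prev = curr
    return prev[-1]


def flag_lookalikes(brand: str, candidates: list[str], max_distance: int = 2) -> list[str]:
    """Return candidates that are close to, but not identical to, the brand domain."""
    brand = brand.lower()
    flagged = []
    for domain in candidates:
        distance = edit_distance(brand, domain.lower())
        if 0 < distance <= max_distance:
            flagged.append(domain)
    return flagged


# Hypothetical feed of newly registered domains.
candidates = ["examp1e.com", "example.com", "exarnple.com", "unrelated.org"]
print(flag_lookalikes("example.com", candidates))
```

Run on a schedule against a live registration feed, a check like this turns a periodic hygiene task into a continuous one. The threshold of two edits is an arbitrary choice for illustration.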

The thing that generally doesn’t work, however, is detection of AI-generated content, he said. Security is always chasing the newest threat: As soon as you develop a 10-foot wall, a 12-foot ladder is there to surpass it. And the walls always take far longer to build than the ladders.

That’s why using AI to detect deepfakes doesn’t always work, he said. As soon as a model can detect that content is AI-generated, a threat actor can create new AI-generated content that tricks it. “Every time you make a detector, you might spend a year on it,” he said. “The attackers can make a new deep fake using your detector in a day, not a year.”

“Anything you can build as an AI can be used by a different AI,” said McKenty. “Every tool you build today, you’re putting into the hands of an attacker.”

Secure BYOD Without Sacrificing Productivity

Traditional security approaches to BYOD policies often improve business productivity, but leave the door open to major threats that admins must manually manage.

What if BYOD policies no longer required the choice between security and productivity? It’s possible.

Watch the on-demand webinar from 1Password and DataScan to learn how businesses can simplify and secure BYOD management without impacting employee productivity. You’ll discover practical insights into how to:

- Enable admins to manage and secure BYOD access efficiently.

- Automate credential policies to scale security effortlessly.

- Empower employees to self-remediate and adhere to compliance.

Big Tech’s Bigger AI Investments May Lead to a ‘Winner Takes All’

Big tech, smaller rivals and investors of all sizes are dropping big bucks on their AI visions, but not all of them will be winners.

That makes it important for businesses with less cash to spare to develop their AI strategy upfront rather than funneling cash into a variety of initiatives to see which works. Doing so will help them avoid heavy losses in a game in which the winners take all and the playing field is tilted heavily toward those with the biggest budgets.

According to data from Bloomberg, four of the world’s largest tech firms – Amazon, Google, Microsoft and Meta – are expected to funnel more than $344 billion into capital expenditures this year, much of which will go toward data centers that feed their lofty AI ambitions.

They aren’t alone:

- According to data from PitchBook, reported by CNBC in late July, AI startups raised more than $104 billion in the first half of 2025, nearly matching the total amount invested in AI startups in 2024. Around two-thirds of all venture funding during that period went to AI.

- And investors are devising their own AI infrastructure strategies, too. On Wednesday, Apollo Global Management agreed to acquire a majority stake in Stream Data Centers, and Brookfield Asset Management announced a strategy dedicated to developing AI infrastructure, aiming to capitalize on a “significant pipeline of opportunities.”

The firms are all vying for a piece of a market that’s estimated to be worth multiple trillions, said Brian Sathianathan, CTO and co-founder of Iterate.ai. Some are already starting to see the payoff. Industry darling Nvidia has been able to “sell and move servers with all the data center buildouts,” he said, and the three biggest cloud providers – Google, Amazon and Microsoft – are seeing significant growth. “It’s already working,” he said.

Those that manage to win have a shot at massive returns. “This market’s got the potential to do that,” Sathianathan said. But with all the cash flowing into developments, some companies may be digging themselves into a hole that they’re not able to get out of, he said.

“This is a winner takes all,” said Sathianathan. “If 20 players are vying for a spot that five can fill, then 15 are going to fail. That’s just the nature of free markets.”

So where does this leave the average enterprise? The best place to start is determining what “core competencies” your business has and how AI fits into them, Sathianathan said.

When choosing an AI vendor, he added, it’s best not to put all your eggs in one basket. “I would warn enterprises from fully betting on one Big Tech firm,” he said. Doing so could create a “lock-in” that ends up making it more difficult to switch if better options present themselves. “Bet on a few, and also bet on startups,” he recommended.

“Big companies have invested so much in this,” Sathianathan said. “They’re going to try and put you into a place where you are stuck with their software or their infrastructure.”

Google’s Differential Privacy Patent Highlights AI Training Risks

Google wants to make its data a little bit noisier.

The company is seeking to patent a system for “efficient generation of differential privacy noise.” For reference, differential privacy is a technique that protects the privacy of individual data points within a dataset by adding “noise,” or randomness that obscures the features of the data.

“Differential privacy can be used to generate aggregate reports and/or statistical information while preserving the privacy of users for which data is included in the reports and/or statistical information,” Google said in the filing.

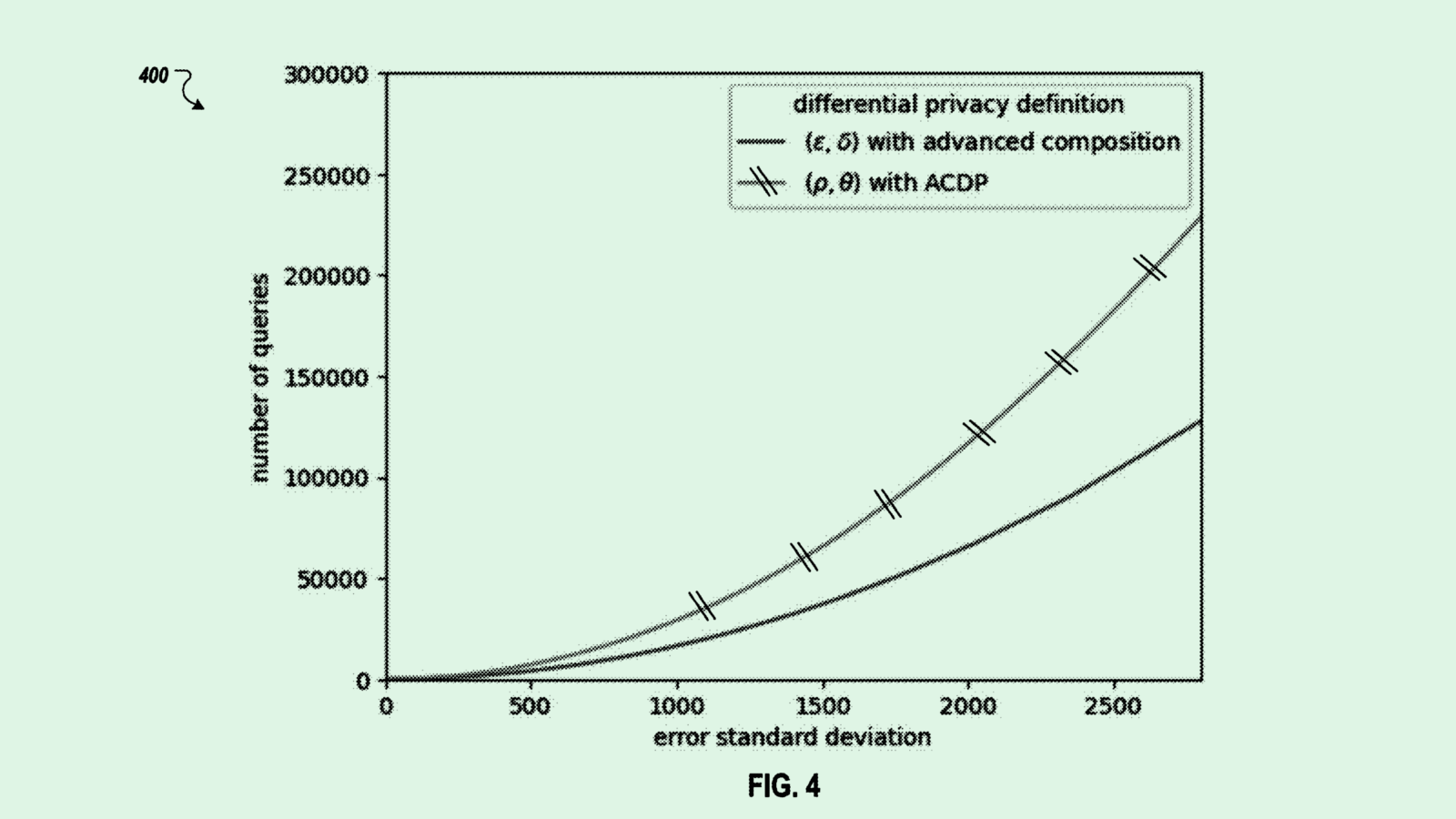

Differential privacy lives on a spectrum. Too little noise, and the data isn’t obscured enough, making it a privacy risk. Too much noise, on the other hand, and the features become overly obscured, making the data useless.
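To make the trade-off concrete, here is a minimal sketch of the textbook Laplace mechanism applied to a simple count. This is not the method in Google's filing, whose details are not described here. The function names, the count of 1,000 and the epsilon values are all illustrative assumptions. Epsilon is the standard privacy parameter: smaller values mean more noise and stronger privacy, larger values mean less noise and more accurate results.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw Laplace(0, scale) noise as the difference of two exponential draws."""
    # expovariate takes a rate, so rate 1/scale gives each draw a mean of `scale`.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def private_count(true_count: int, epsilon: float, rng: random.Random) -> float:
    """Release a count with Laplace noise calibrated to epsilon."""
    # Adding or removing one person changes a count by at most 1 (sensitivity 1).
    sensitivity = 1.0
    return true_count + laplace_noise(sensitivity / epsilon, rng)


def mean_abs_error(true_count: int, epsilon: float, trials: int = 2000, seed: int = 0) -> float:
    """Average distance between the noisy and true count over many releases."""
    rng = random.Random(seed)
    total = sum(abs(private_count(true_count, epsilon, rng) - true_count)
                for _ in range(trials))
    return total / trials


# Illustrative values only: compare noise levels across the privacy spectrum.
for epsilon in (0.1, 1.0, 10.0):
    print(f"epsilon={epsilon}: average error {mean_abs_error(1000, epsilon):.2f}")
```

The average error shrinks as epsilon grows, which is the spectrum in miniature: heavy noise protects individuals but blurs the statistic, while light noise keeps the statistic sharp at the cost of weaker privacy.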

Google’s patent seeks to strike a balance. To put it simply, the system uses statistical modeling to find the maximum amount of noise that can be added to keep a dataset private without drowning out too many of the data’s integral features. The method offers precise control over the differential privacy of data, while providing a scalable solution that’s suited to larger datasets.

With data becoming increasingly valuable in the pursuit of bigger and better AI, privacy is a constant concern. Some large model developers scrape everything they can from the web to feed their massive foundation models.

But for many enterprises, it’s not always so simple. Using enterprise data to train AI can be a privacy minefield. And the consequences of a slip-up can be dire: IBM’s recent Cost of a Data Breach report found that 60% of AI-related security incidents led to compromised data and 31% led to operational disruption. Still, 97% of organizations surveyed reported not having AI access controls in place.

Differential privacy, as Google’s patent proposes, could provide a layer of security for a model’s core data before it can get into the wrong hands.

Extra Upside

- Heading North: Cohere launched its new AI agent platform, North, aiming to increase AI adoption while keeping enterprise data more secure.

- Opening Up: OpenAI launched two new open-weight models, gpt-oss-120b and gpt-oss-20b, which can run locally on devices and be fine-tuned.

- Don’t Let Traditional Security Tools Ruin Productivity. Instead, reduce admin burden with automated access and credential policies, enforcing security best practices without slowing down your business. Register for the webinar to learn how.*

* Partner

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.