Happy Monday, and welcome to CIO Upside.

Today: Why enterprise-scale open source models may be more trouble than they’re worth. Plus: Zero trust could help your security protocols evolve alongside your AI deployment; and Microsoft’s patent makes machine learning explain itself.

Let’s jump in.

Why Open Source AI May Be a ‘Marginal Player’

How useful is open source AI?

As Chinese companies like DeepSeek, Baidu and Alibaba open their models to all, other firms have made their own open source plays in recent months. Elon Musk-owned xAI made the base model for Grok open source in March. OpenAI announced (and delayed) the release of its open weights model in June with the promise of something “unexpected and quite amazing” instead, according to CEO Sam Altman. And Meta’s Llama family of models has been open source from the jump.

While the concept of open source AI may seem rosy and altruistic, it might be difficult for enterprises to get use out of the technology, said Aaron Bockelie, senior principal consultant and AI solutions lead at Cprime.

Without the computing power necessary to run and scale open source models, the average enterprise is unlikely to reap as many benefits as it might expect, said Bockelie. The “open source model is only one piece of the puzzle,” he said, and it requires a proper framework and infrastructure to implement.

“The more you get into it, the more you realize that you need a full engineering team and development team to be able to really leverage that investment, which turns you towards looking at commercial offerings instead because they’re all just turnkey,” said Bockelie.

Open source AI does have its benefits, said Bockelie, including democratized access, opening the door for faster and more collaborative innovation. But with those benefits comes risk:

- It’s harder to vet questions related to data security and ethics of these models because their open nature creates a lack of traceability, he said. Additionally, closed commercial models are often better funded, and “have way deeper pockets for being able to produce the training data set.”

- “Who’s validating the safety of the models? Who’s validating alignment, who’s understanding what its capabilities are, what’s the training set?” Bockelie said. “In some cases, you’re kind of just going on a guess or a hedge that an open model was based on this or was based on that.”

As it stands, these difficulties that open source faces may make it just a “marginal player” in the broader AI space, he said. “You don’t have access to the type of scale of compute that’s necessary to carry the big commercial models.”

But that doesn’t mean open source is a complete loss, he said. The best way to implement open source just requires enterprises to think smaller: using open models with fewer parameters that can run locally on a device and serve specific purposes.

“Local models are going to end up being smaller by their nature, and therefore way more accessible to average people,” said Bockelie.
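
As a rough illustration of why parameter count matters for running a model locally, the sketch below estimates the memory needed just to hold a model’s weights. The model sizes and bit widths are illustrative assumptions, not figures from Bockelie, and the estimate ignores runtime overhead such as activations and caches.

```python
def weight_memory_gb(num_parameters: float, bits_per_parameter: int) -> float:
    """Approximate memory needed to store model weights, in gigabytes (10^9 bytes)."""
    total_bytes = num_parameters * bits_per_parameter / 8
    return total_bytes / 1e9


# Illustrative sizes only (assumptions for this sketch).
for label, params in [("7B", 7e9), ("70B", 70e9)]:
    for bits in (16, 4):
        print(f"{label} model at {bits}-bit: ~{weight_memory_gb(params, bits):.1f} GB")
```

Under these assumptions, a 7-billion-parameter model stored at 4 bits per parameter needs about 3.5 GB for its weights, while a 70-billion-parameter model at 16 bits needs about 140 GB.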

Why Security and AI Need to Grow Hand in Hand

Enterprises are letting cybersecurity fall by the wayside in favor of their AI ambitions.

Recent data from Accenture found that 77% of organizations lack the foundational AI security necessary to keep models, data pipelines and cloud infrastructure safe. Meanwhile, only 42% of companies say they have struck the right balance between AI deployment and security.

Data is the core of AI. If security strategies don’t grow in tandem with AI development and adoption, your enterprise is putting anything that goes into a model at risk, said Stephen Gorham, chief strategy officer at security firm OPSWAT.

“That data is often sensitive data, that data is often proprietary data, that data is often regulated data,” said Gorham. “If it doesn’t evolve alongside, you’re going to risk data leakage.”

But as AI continues to take budget and priority within organizations, enterprises may struggle to find a balance. According to Accenture, only 28% of organizations embed security protocols into tech transformation initiatives from the jump.

Plus, AI is often used outside of the view of organizations entirely. A survey from Zoho IT subsidiary ManageEngine found that 60% of employees increased their use of shadow AI, or unapproved AI tools, over the last year. Around 91% believe that shadow AI poses little to no risk. Though shadow IT has been around for years, “the issue with AI is it kind of exacerbates this problem,” said Gorham.

The best place to start leveling up your security protocols, Gorham said, is by implementing principles of zero trust:

- In case you’re unaware, zero trust is a framework that assumes nothing is inherently safe – no user, device or application – even if it’s within your organization’s network.

- Grounding AI development and deployment in zero trust principles as a “standard bearer” could help prevent your data from ending up in the wrong hands, he said.

- This could look like creating an inventory to classify data that’s being fed to models, doing security and compliance scans through the model development lifecycle, and only allowing minimum necessary permissions for access.
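
To make the inventory and minimum-permissions ideas above concrete, here is a minimal sketch of a deny-by-default access check. Every name in it (`Dataset`, `Principal`, `is_allowed`, the classification labels) is hypothetical and chosen for illustration; it is not a description of any vendor’s product or of Gorham’s specific recommendations.

```python
from dataclasses import dataclass, field

# Hypothetical classification labels for a data inventory (an assumption).
CLASSIFICATIONS = {"public", "internal", "regulated"}


@dataclass(frozen=True)
class Dataset:
    name: str
    classification: str  # expected to be one of CLASSIFICATIONS


@dataclass
class Principal:
    name: str
    # Explicit grants: dataset name -> set of allowed actions. Nothing is implied.
    grants: dict = field(default_factory=dict)


def is_allowed(principal: Principal, dataset_name: str, action: str, inventory: dict) -> bool:
    """Deny by default: allow only inventoried, classified data with an explicit grant."""
    dataset = inventory.get(dataset_name)
    if dataset is None:
        return False  # data that is not in the inventory is never fed to a model
    if dataset.classification not in CLASSIFICATIONS:
        return False  # unclassified data is treated as untrusted
    return action in principal.grants.get(dataset_name, set())


inventory = {"support_tickets": Dataset("support_tickets", "internal")}
pipeline = Principal("training-pipeline", {"support_tickets": {"read"}})

print(is_allowed(pipeline, "support_tickets", "read", inventory))   # True
print(is_allowed(pipeline, "support_tickets", "write", inventory))  # False
print(is_allowed(pipeline, "payroll", "read", inventory))           # False
```

The design choice worth noting is that every path returns `False` unless an explicit grant exists, which mirrors the zero trust assumption that nothing is inherently safe.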

“I know I sound like a broken record, but zero trust, zero trust, zero trust,” said Gorham. “Strict identity controls solve a lot of this stuff. Visibility and monitoring solve a lot of this stuff. Policy enforcement and education solves a lot of these things.”

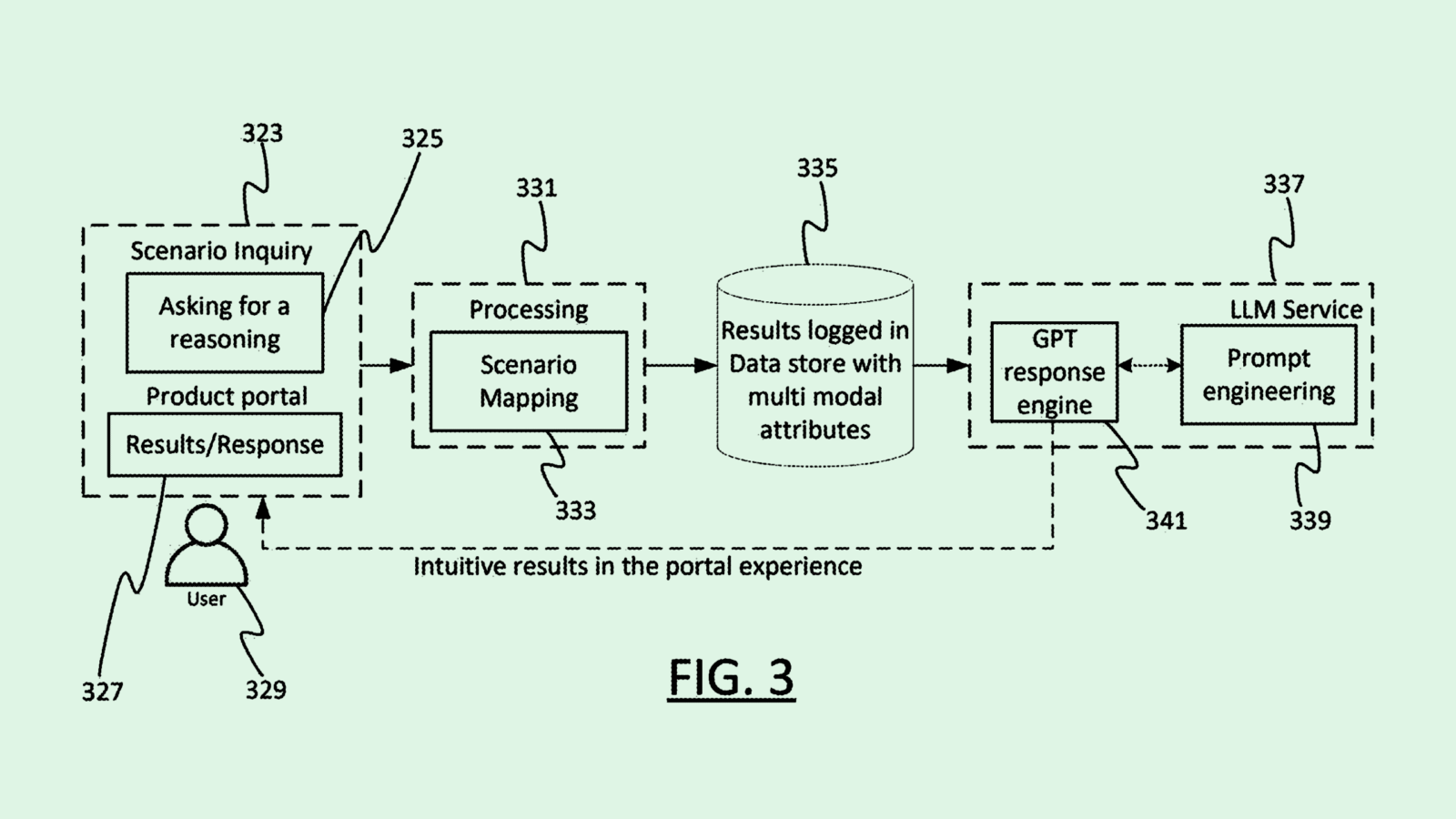

Microsoft Explainability Patent Highlights Need for AI Trustworthiness

Are two models better than one?

Microsoft might think so: The company is seeking to patent a “generative AI for explainable AI,” which uses other generative models to explain a machine learning output, helping users better understand where a model is getting its answers.

When the system is asked to explain a machine learning prediction, the system analyzes features of the input data and its respective output. Microsoft’s tech may also pull additional content, such as user preferences, previous explanations or subject matter knowledge.

The system will then come up with multiple possible explanations for the output. For example, if a person wants to know why a loan was approved or denied, this system may analyze the loan application as well as historical data to discover contributing factors. Finally, the system uses a second generative model to rank those possible explanations based on relevance.
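
The generate-then-rank flow described above can be sketched in a few lines. This is only an illustration of the general pattern, not Microsoft’s implementation: the filing’s actual models are not reproduced here, so `toy_generate` and `toy_score` are hypothetical stand-ins for the two generative models, and the relevance weights are made up.

```python
from typing import Callable


def explain(features: dict, prediction: str,
            generate: Callable[[dict, str], list],
            score: Callable[[str, dict], float]) -> list:
    """Generate candidate explanations, then return them ranked by relevance."""
    candidates = generate(features, prediction)
    return sorted(candidates, key=lambda c: score(c, features), reverse=True)


def toy_generate(features: dict, prediction: str) -> list:
    # Stand-in for the first generative model: one candidate per input feature.
    return [f"The {name} of {value} contributed to the loan being {prediction}."
            for name, value in features.items()]


def toy_score(candidate: str, features: dict) -> float:
    # Stand-in for the second, ranking model. The weights are hypothetical.
    weights = {"credit score": 0.9, "income": 0.6, "loan amount": 0.3}
    return max((w for name, w in weights.items() if name in candidate), default=0.0)


application = {"income": 42000, "credit score": 580, "loan amount": 250000}
ranked = explain(application, "denied", toy_generate, toy_score)
for explanation in ranked:
    print(explanation)
```

With these made-up weights, the credit score explanation is ranked first and the loan amount explanation last.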

“(Explainable AI) can be used by an AI system to provide explanations to users for decisions or predictions of the AI system,” Microsoft said in the filing. “This helps the system to be more transparent and interpretable to the user, and also helps troubleshooting of the AI system to be performed.”

While tech giants have been keen on building and deploying AI, these technologies still have some trustworthiness issues. Without proper monitoring, problems like bias and hallucination continue to pose threats to the accuracy of machine learning outputs.

As a result, some enterprises are taking an interest in responsible AI frameworks: A report from McKinsey found that the majority of companies surveyed planned to invest more than $1 million in responsible AI. Those that already had implemented these frameworks have seen benefits including consumer trust, brand reputation and fewer AI incidents.

As Microsoft seeks to elbow its way to the front of the AI race, researching explainable and responsible AI technologies could give it a reputational boost and help protect it from liability.

Extra Upside

- Gone with the Wind(surf): Google struck a $2.4 billion licensing deal with AI programming platform Windsurf, and will hire Varun Mohan, its co-founder and CEO.

- Meta Gets Playful: Meta has completed a deal to acquire PlayAI, a voice AI startup, and will bring on the entire team in the acquisition.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.