Happy Monday and welcome to CIO Upside.

Today: As AI agents take on more tasks and enterprises start to take their hands off the wheel, just how far the tech can be trusted remains uncertain. Plus: How enterprises can keep themselves safe from cyberattacks by foreign adversaries; and Salesforce’s recent patent could keep a closer eye on your group chat.

Let’s jump in.

AI May Never Completely Conquer Hallucination, Bias

How hands-off can we really be with AI?

Models from two major AI developers went haywire in separate incidents this week. Grok, the chatbot from Elon Musk’s xAI, responded to a variety of queries on X with commentary on “white genocide in South Africa” — something the company blamed on “unauthorized modification” of the model. Meanwhile, Anthropic’s lawyers admitted to using an erroneous citation hallucinated by the company’s Claude chatbot in its legal battle with music publishers.

Such incidents highlight fundamental problems with AI – ones that developers can limit but may never be able to fully eliminate, said Trevor Morgan, senior vice president of operations at OpenDrives.

Hallucination and bias are core elements of AI, he said. Even if rates of inaccuracies and bias are reduced to minimal levels, AI learns from a “human-centered world,” said Morgan. Often, enterprises utilizing AI don’t know exactly what it learned from, or what is missing from its training datasets. Whether the result of negligence or intent, biased data can leak in and become amplified, he said, or models can simply make up answers for queries they weren’t trained to respond to.

That makes taking human hands off the reins incredibly tricky. “These things, unsupervised, they still are acting like 4-year-olds,” said Morgan. “These things can still run around the pool and fall in.”

While such issues aren’t new, they aren’t always fully considered as enterprises move full steam ahead with AI deployments, Morgan said. Failure to create adequate safeguards up front may create massive risk later, especially as autonomous AI agents continue to sweep the industry. “AI – more and more – is doing the doing within companies,” he said:

- Tech giants are more than eager to push the agentic narrative, with the likes of Microsoft, Salesforce, Google, Nvidia and more throwing their hats into the ring.

- And AI agents are already taking over processes that were once human-dominated: According to Neon, an open source database startup recently acquired by Databricks, 80% of the databases created on its platform were produced by AI agents as of April.

- When platforms begin to be optimized for usage by AI “rather than human beings,” said Morgan, “what do human beings do then?”

The first step in avoiding risk is reckoning with it. As much as we want to let AI Jesus take the wheel, Morgan said, the initial deployments need to be “very intentional and first-level.” The best-case scenario for working side-by-side with AI is that it drives ideation, but “human beings are still in control of the ultimate decision-making,” he said. In the event that something slips through the cracks, these systems also need guardrails and monitoring, he added.

Amid the speed of adoption and the pressure to keep up, though, the conversation about necessary guardrails, safety and governance often gets lost, said Morgan. “But are we dealing with something that, when we realize we need guardrails, are we too late?”

In essence, it’s the question underpinning the first Jurassic Park movie, Morgan said. “They went so fast to see if they could do it that they didn’t stop to think, ‘should we do it?’”

Combating Cyber-Espionage Requires Enterprises to Keep Their Eyes on the Endgame

The tectonic plates of the nation-state threat landscape have shifted significantly in 2025.

Cyberattacks from foreign adversaries have evolved and are now impacting enterprises in new ways. CrowdStrike’s 2025 Threat Report found that Chinese cyber-espionage — what the Department of State has called the Chinese Communist Party’s Military-Civil Fusion Policy — surged by 150% from the previous year. Sectors like finance, media, and manufacturing have seen security incidents spike as much as 300%, according to the report.

“In 2025, China has emerged as the most active nation-state cyber actor targeting American and European companies,” Martin Vigo, lead security researcher at AppOmni, told us.

While determining a specific percentage is difficult, cyber-espionage constitutes a significant portion of the cyber-threat activity targeting the US private sector, said Michael J. Driscoll, senior managing director at FTI Consulting:

- Sectors that are likely to be impacted include energy, technology, aerospace, biotechnology, robotics, EV and automotive, and advanced manufacturing.

- In 2019, the government calculated that US companies were losing up to $600 billion to Chinese IP theft. Today, that number is expected to be much higher, but hard to estimate because such attacks often go undetected and are not reported, experts said.

- CISOs should be on the lookout for highly tailored spear-phishing emails aimed at executives and engineers, exploitation of zero-day or unpatched vulnerabilities, dark web leaks, infostealer malware, and living-off-the-land techniques.

Today, a ransomware attack may be used as a smokescreen to distract security teams while threat actors silently exfiltrate sensitive corporate data, says Mike Logan, CEO of C2 Data Technology. “China’s state-sponsored hackers have dramatically scaled their operations, focusing on both economic espionage and strategic intelligence collection,” Logan added.

Other novel techniques in use this year include breaching supply chain partners, SaaS and third-party service providers, and using stolen credentials for malware-free exploitation, said Nic Adams, founder and CEO at Orcus. Attackers are also increasingly using AI to compromise business emails and mobile devices, said Driscoll.

While China and the US have struck a 90-day tariff war truce, uncertainty remains. Future cyberattacks in retaliation for tariffs are a “highly real” danger moving forward, said Adams.

Given the unpredictable nature of global politics, US enterprises must take proactive steps to harden and strengthen their defenses, improve threat detection, and ensure rapid-response capabilities.

“Executives must recognize how the endgame equates to competitive displacement,” Adams said. “Always assume your blueprints are being harvested or monitored in real-time, because somewhere in the world, they most likely already are.”

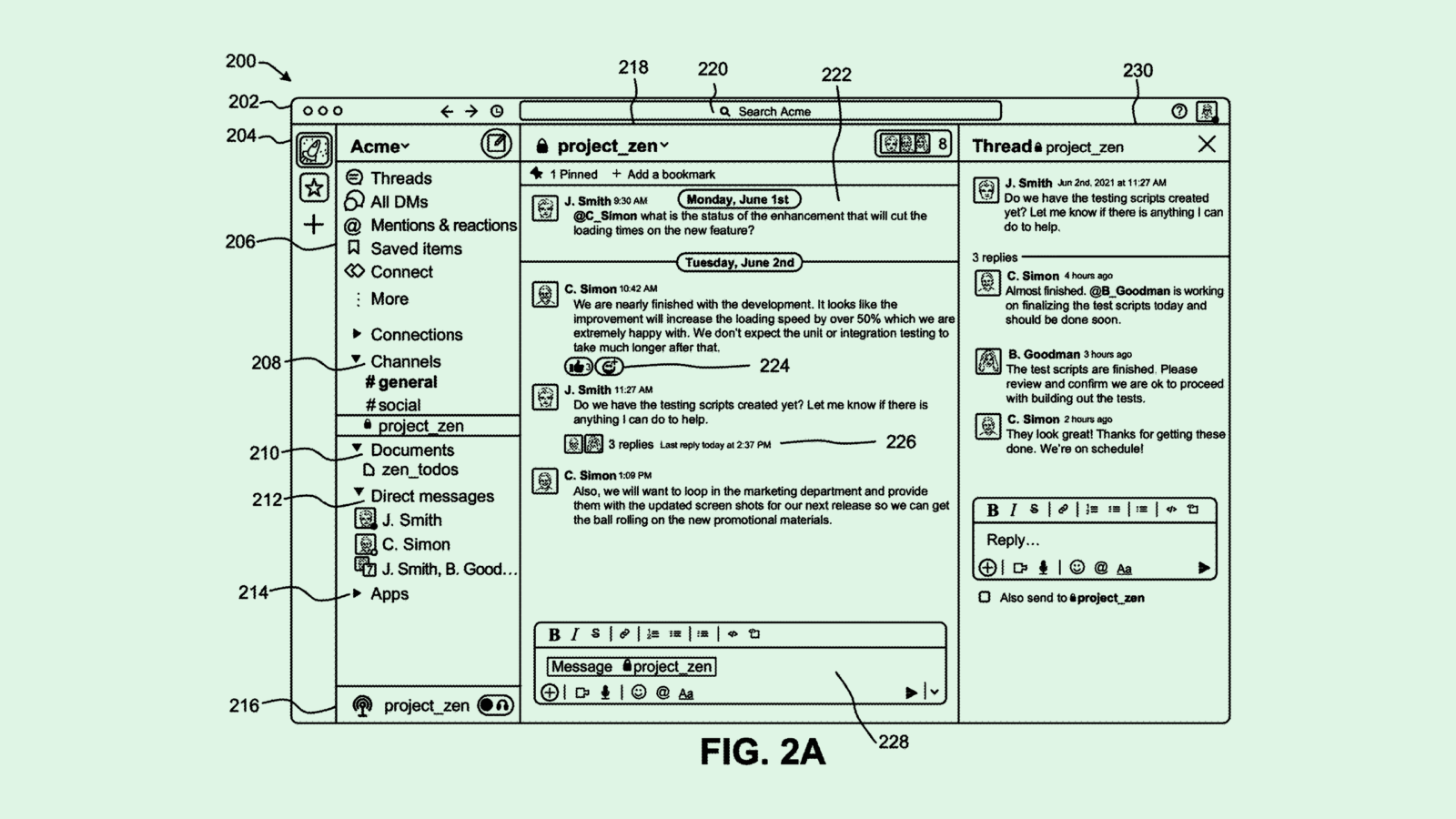

Salesforce Patent May Double-Check Your Slack Chats

You might need to watch your tone in the company group chat – or Slack might catch you slipping.

Salesforce, which acquired Slack in 2021, is seeking to patent a system for “updating communications with machine learning and platform context.” The filing details a system, likely for Slack, that leverages machine learning to understand the way users communicate in order to help them craft messages.

“Oftentimes, users may want to (convey) a certain tone or voice associated with a message but may not be able to find the words to convey such,” Salesforce said in the filing.

Salesforce’s tech tracks and learns from your personal communication style, watching for sentiment markers like exclamation points or emojis along with text. The system will also learn and mimic high-engagement characteristics, or those from messages that got a lot of likes and reactions. All of the data is then used to suggest messages that convey the intent of the user’s original text but better fit the communication style of an organization.

For example, if a message beginning with “Howdy y’all” garnered more reactions or responses than a message starting with “Hello team,” then the system may recommend one greeting over another.
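
To make the greeting example concrete, here is a minimal Python sketch of engagement-based ranking. It is an illustration only, not the method described in Salesforce’s filing: the function name `recommend_greeting`, the sample data, and the choice of average reaction count as the engagement signal are all assumptions.

```python
from collections import defaultdict


def recommend_greeting(history):
    """Return the greeting with the highest average reaction count.

    `history` is a list of (greeting, reaction_count) pairs. The data shape
    and the averaging rule are hypothetical, chosen only for illustration.
    """
    totals = defaultdict(lambda: [0, 0])  # greeting -> [reaction sum, message count]
    for greeting, reactions in history:
        totals[greeting][0] += reactions
        totals[greeting][1] += 1
    if not totals:
        return None  # no past messages to learn from
    return max(totals, key=lambda g: totals[g][0] / totals[g][1])


# Made-up sample data, not drawn from the filing.
sample = [
    ("Howdy y'all", 12),
    ("Hello team", 3),
    ("Howdy y'all", 8),
    ("Hello team", 5),
]
print(recommend_greeting(sample))
```

A real system would presumably weigh far more than one signal, including the sentiment markers and organizational style the filing mentions.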

This isn’t the first time tech firms have brought AI and machine learning into workplace monitoring. Microsoft has sought to patent a similar tool for “automatic tone detection and suggestion” in emails and chats, as well as tech that changes users’ facial expressions to match their tone in virtual meetings.

Patents like this make sense for the likes of Salesforce and Microsoft: Both firms offer widely popular workplace messaging platforms (Microsoft’s is Teams) and are seeking to cement their places in the broader enterprise AI race.

But amid growing workplace AI adoption, the question of “how much is too much” remains. A survey from McKinsey found that 43% of workers are concerned about personal privacy in the workplace adoption of generative AI. At a certain point, employees may be uncomfortable with AI-based tracking and monitoring, even if it provides them a certain amount of convenience.

Extra Upside

- Data Center Dreaming: OpenAI is set to develop a 5-gigawatt data center campus in Abu Dhabi, one of the world’s largest AI infrastructure projects yet.

- Go Go Google: Leaks suggest that Google will debut additions to its Gemini family of models at its annual developer conference.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.