Happy Thursday, and welcome to CIO Upside.

Today: We learn more about emotion AI’s role in customer service. Plus: A look at which executives are primarily handling AI security decisions; and why Nvidia is seeking a patent on checking data label accuracy.

Let’s jump in.

Why Customer Service Needs Less Emotionally Savvy AI

Emotion AI wants to meet people where they are. But sometimes, people aren’t being so honest.

When a customer wants better service and is talking to a chatbot, they might act more upset than they actually are in order to get better treatment. Then, the AI is doling out limited resources, like support or refunds, to the wrong people.

And that makes the AI a less efficient helper.

“AI has become central to customer service because of its scalability and emerging emotional intelligence. Traditional customer care, through call centers or in-person interactions, faces limits in cost, speed and capacity,” said Yifan Yu, an assistant professor of information, risk and operations management at the McCombs School of Business at the University of Texas-Austin. “AI systems, by contrast, can process millions of customer messages or reviews in real time.”

To explore emotion AI’s efficacy, Yu created a model with McCombs postdoctoral researcher Wendao Xue that’s “focused on how companies, especially in customer service, use emotion-based AI to decide who gets what.”

The study looked into the fallibility of emotion AI, including whether it can be gamed by savvy users and whether it makes errors of its own.

A Matter of Nuance

“Firms shouldn’t just plug in emotion AI and assume it will make the service more empathetic,” Yu said. “They have to plan for how people will react to it.”

What good deployment looks like for an enterprise:

- Combines technology with smart policy. Users should build transparent systems, and make sure any of the AI’s decisions “are fair and easy to explain.”

- Regulates reliance on emotional data. In the “emotion AI paradox,” people believe that a stronger and more precise AI is better, but for customer service, a weaker AI that “doesn’t overreact” might provide fairer treatment.

The “weaker AI” benefit came as a surprise to Yu: The researchers found it “can sometimes increase social welfare” because it discourages customers from exaggerating their feelings, which would otherwise distract the AI from efficiently addressing their actual level of concern.

“A moderate level of algorithmic noise can dampen these incentives of emotional misrepresentation, making emotional signaling less manipulative and reducing distortions in the market equilibrium,” Yu said. “‘Weak’ AI can serve as a natural regulator in emotionally charged digital interactions.”

A human agent can then come in to assess the actual tone and concern at hand. “While AI ensures consistency,” Yu said, “humans excel at contextual and empathetic judgment, recognizing subtleties in tone, irony or complex emotional states that algorithms still struggle with.”

The study sees a human-AI collaboration as the most effective approach, with AI augmenting the experience. That way, Yu said, AI can be the first layer and humans can give more attention to situations that call for “empathy, negotiation or creative problem-solving.”

“Emotion AI should be viewed as a socio-technical system, not just a technology. Its success depends on how organizations design incentives, regulate data use and account for human strategic behavior,” said Yu. “AI doesn’t operate in isolation. It changes how people express emotions and compete for attention. Recognizing these feedback loops is essential for responsible deployment.”

You’ve Invested Both Time and Capital Into Your Data Architecture

So why does it feel like you are working for your data, instead of it working for you?

You are not alone. For many enterprises, the promise of the cloud has turned into a cage. Data is locked into insecure silos, egress fees punish you for growth and success, and every month you are paying more to do more (although it feels like you understand your data less).

Many are slowly waking up to this reality: cloud computing comes with both obvious and even hidden costs. Data that isn’t accessible isn’t exactly useful. And the cloud isn’t exactly known for simplifying workflows or making data actionable for you.

OpenDrives’ Astraeus cloud-native data services platform helps solve for just that — providing you with all the cloud benefits, just minus the bad stuff:

- Slash cloud waste with cost-predictable, dynamic resource optimization and intelligent orchestration.

- Break vendor lock-in by unifying your data stores (whether they are in the cloud or on-prem) into one, centralized platform.

- Streamline collaboration and increase productivity with improved performance and powerful storage management capabilities.

If any of these items were brought up at your last sprint meeting — data sprawl, lack of security & compliance, inability to scale, idle systems, high complexity — it’s time to explore Astraeus.

Stop storing and start using your data to solve real business outcomes.

CIOs Outrank CISOs as AI Security Leaders

Enterprises’ spending is shifting markedly, trending toward AI supply chain security.

In fact, that’s now their No. 1 investment priority (at 31%), according to Acuvity’s 2025 State of AI Security report, making for one of the biggest changes in spending patterns in decades.

The move is being led overwhelmingly by CIOs, who have taken first place in security ownership (29%), well above company CISOs, who now rank fourth (14.5%).

“The CIO generally, in an enterprise, holds a bigger responsibility across multiple different facets,” said Acuvity CEO Satyam Sinha, while the chief security officer evaluates risks from application use and security attacks.

So why this positioning for who handles AI security? Mostly because it’s still so new.

“The adoption is ahead of the strategy,” he said. “We’ve seen disruptive technologies in the past. We’ve seen cloud come out, we’ve seen SaaS [software as a service] come out, but there was a slight bit of a difference there.”

A company would adopt cloud technology as a team decision, Sinha said, but AI is more of a prosumer approach: “Your friend told you about a tool, you go try out that tool, you get instant gratification.”

“If you look at the adoption of AI itself, it comes under the purview of CIO,” Sinha said. “There are two facets almost every time we adopt a new technology: One is that of governance, and the other one is of security.”

Companies have to ask themselves how they want to adopt AI, their safety policies, and all the other governance questions; usually, security implementations come later. That, Sinha said, is why CIOs are typically helming AI at the beginning of adoption.

“I still do believe that as the organizations mature, as they understand these risks better, the security implementation will end up residing with the CISOs,” said Sinha. “Right now, there’s a lot of research going on: What AI are we using? How are we going to do this? What are the policies around it? That’s why it’s finding a place with the CIO at the moment, from an ownership standpoint, but we do expect that to transition in the future.”

But no matter where a company puts its AI ownership, Sinha pointed out, it’s important to note that AI poses more risks than ever. And enterprises aren’t necessarily ready to handle that: Only 30% of companies have optimized AI governance, and almost 40% don’t have any managed or optimized governance at all.

At the same time, 50% expect to lose data through their AI tools.

“Even when there’s a lot of adoption happening with AI and there are risks associated with it, it’s not very clear to people: What are these new risks?” Sinha said. “There’s a massive gap between the adoption and having the right guardrails in place … Out of the top five security priorities, we believe AI security will start rising up and become one of the more important things in the years to come.”

Nvidia Wants to Ensure Machine-Learning Data Is Labeled Properly

Bad information can be misleading, confusing and annoying — if not to machine learning models themselves, at least to their users.

That’s why Nvidia is looking to patent systems and methods that evaluate “labeled training data for machine learning systems and applications.”

When a company’s labeled data is wrong or inconsistent, the model isn’t able to learn properly.

“If these errors are not corrected before generating training data that includes the labeled sensor data, the training data may be inadequate for its intended purpose, such as training a machine learning model,” the patent says.

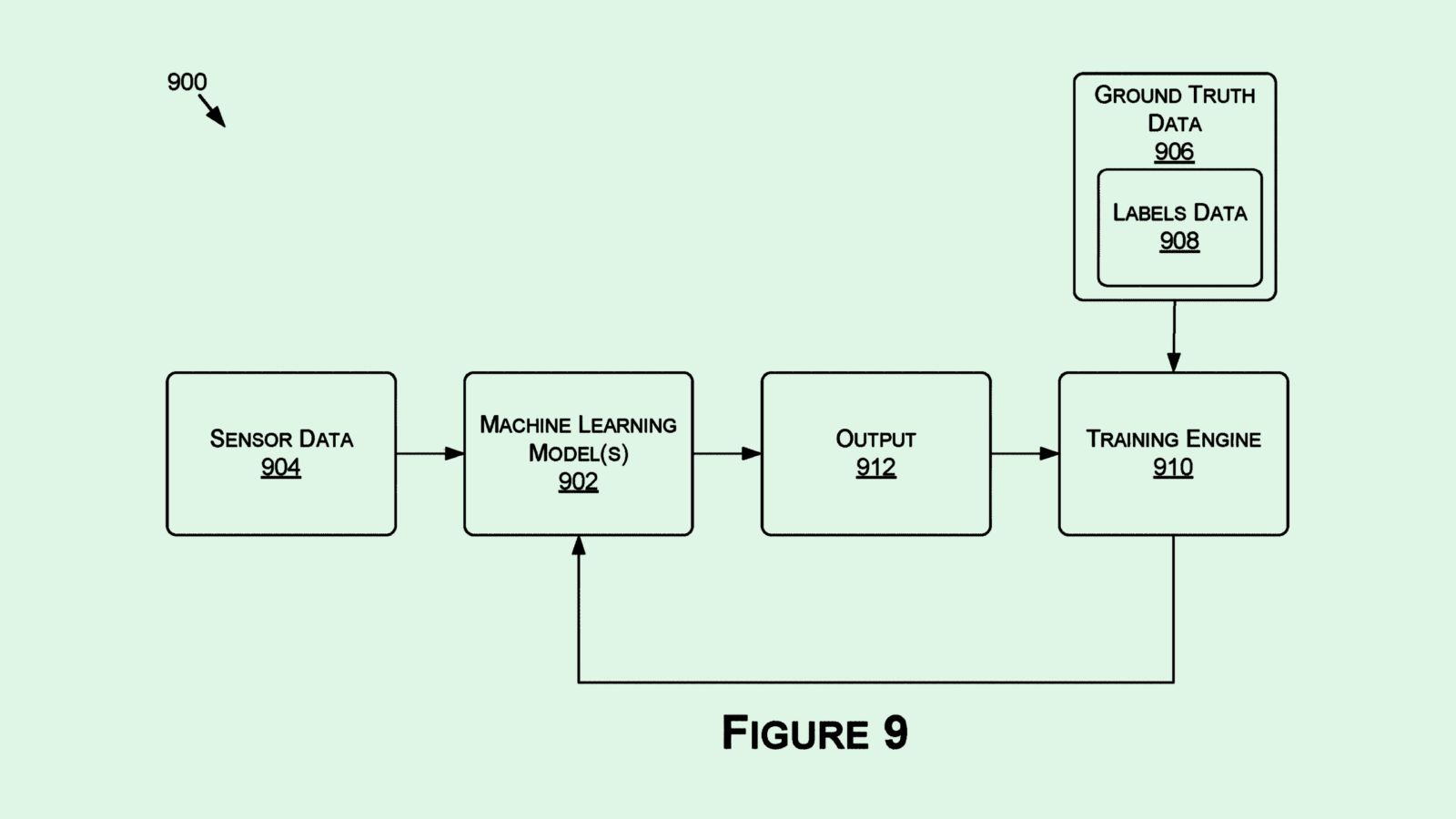

The system Nvidia seeks to patent uses “consensus labels” to check whether the labels are accurate, according to its application.

First, AI or algorithms label data from sensors (like images or 3D point clouds) automatically. A human then reviews those automated labels and fixes them where necessary, producing the first set of labels.

But “even by having these users manually verify and/or update the initial labels, at least a portion of the labels may still be inaccurate based on user error,” the patent says.

To double-check, additional people are shown that data, and they label it separately, creating a second set of labels. The system evaluates the data for consensus, figuring out where people seem to agree.

Afterward, the system compares the first labels with the consensus labels to check how accurate the first labels were.
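For readers who want to see the shape of that check, here is a minimal Python sketch. It is not Nvidia's method: the filing as described here does not say how consensus is computed, so the majority vote, the `min_agreement` threshold and every function and variable name below are assumptions made for illustration.

```python
from collections import Counter


def consensus_label(votes, min_agreement=0.5):
    """Return the most common label if its share of votes exceeds
    min_agreement; otherwise return None (no consensus).
    Majority voting is an assumption, not a detail from the patent."""
    if not votes:
        return None
    label, count = Counter(votes).most_common(1)[0]
    return label if count / len(votes) > min_agreement else None


def evaluate_first_labels(first_labels, reviewer_labels, min_agreement=0.5):
    """Compare the first set of labels with the reviewers' consensus.

    first_labels:    dict of item id -> label from the first pass
    reviewer_labels: dict of item id -> list of independent second-pass labels
    Returns (accuracy, disputed_ids). Items without a consensus are skipped.
    """
    compared = 0
    agreed = 0
    disputed = []
    for item_id, first in first_labels.items():
        consensus = consensus_label(reviewer_labels.get(item_id, []), min_agreement)
        if consensus is None:
            continue  # reviewers did not agree, so there is nothing to compare against
        compared += 1
        if first == consensus:
            agreed += 1
        else:
            disputed.append(item_id)
    accuracy = agreed / compared if compared else None
    return accuracy, disputed


# Hypothetical example data: three sensor images, three extra reviewers each.
first_labels = {"img1": "car", "img2": "truck", "img3": "pedestrian"}
reviewer_labels = {
    "img1": ["car", "car", "truck"],
    "img2": ["car", "car", "car"],
    "img3": ["pedestrian", "cyclist", "sign"],
}

accuracy, disputed = evaluate_first_labels(first_labels, reviewer_labels)
print(accuracy, disputed)
```

In this made-up data, reviewers reach a consensus on two of the three images, and the first label matches the consensus on one of them. The third image is left out of the score because no label wins a majority, which is one possible way to handle disagreement among reviewers.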

AI systems whose data is accurate and reliable will then be able to perform better.

Right now, a lot of money is going into startups dedicated to data labeling: Meta recently invested $14.3 billion in Scale AI. And that’s because good data matters. It’s key to training your models to be as efficient and accurate as they can be.

Extra Upside

- Stock Surge: Quantum is moving up in the world with JPMorgan’s cosign.

- Cut the Chatter: California is the first state to regulate AI “companion” chatbots.

- Picture Perfect: Microsoft has developed its first text-to-image generator.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.