Happy Thursday, and welcome to CIO Upside.

Today: AI steps into HR roles as layoffs hit. Plus: how cybersecurity education is changing, and two Google patents that could make meetings look and sound better.

Let’s jump in.

Is AI Ready for the HR Jobs It’s Taking Over?

As enterprise AI use becomes more pervasive, it’s starting to replace human staff.

It’s clear across tech: IBM replaced hundreds of people with AI (94% of its HR questions are now being answered by AI agents), and Amazon has said it plans to replace people with AI.

But that doesn’t mean the entire organization’s always on board.

New research from G-P shows that not all execs are as pumped about AI as their company lines might imply. Some 51% said they don’t completely trust AI with financial decision-making. Additionally, 22% are concerned about the quality of data going into AI models, and 20% fret over the accuracy and trustworthiness of AI outputs.

“This dynamic is characteristic of early-stage transformation: contradictions and experimentation,” said Laura Maffucci, vice president and head of HR at G-P.

When mistrust of AI coexists with layoffs in favor of AI, financial pressures also come into play, according to Amy Loomis, group vice president of workplace solutions at IDC.

Return on AInvestment?

“First, there is the pressure that senior leaders feel to show return on their AI investments,” Loomis said. “They may not have the means to fully measure the value of these AI costs, but they do have the means to improve their bottom-line results by reducing headcount in key areas that lend themselves to the use of AI tools.”

She added that leaders often know that relying on AI without implementing governance or guidelines can be a big risk, “yet the pressure to accelerate adoption means they are either A) willing to accept the risks or B) unaware of the downstream consequences, such as impacts on brand reputation, data privacy or security.”

But not everyone’s cutting back on staff. According to G-P’s research, 11% of execs are doubling down on human talent to make their companies stand out.

“It speaks to the different opinions, values and leadership philosophies that execs have in view of their workforce,” said Tim Flower, vice president of DEX Strategy at Nexthink. “Those that see AI as a force multiplier will leverage it as a collaborative partner to increase the value, productivity and output of their staff.”

But treating AI like a digital colleague creates the false narrative that it has agency and emotions like a person. That’s a path to misplaced trust and bigger blind spots.

“The ideal balance is humans with AI, not humans versus AI,” Maffucci said. “Our research shows 31% of employees worry using AI will accelerate their replacement, and 62% fear their skills aren’t future-proof. That’s real anxiety, and HR needs to address it head-on.”

AI works best as a “collaborative partner” in HR, Flower added, taking on more of the resume and application analysis and explaining basic workplace policies, all under close human oversight.

The oversight is crucial, he said: “Believing that AI, even with trained agentic capabilities, is a suitable replacement for human counterparts is not only misguided, but risky from a business execution standpoint as well as from a legal perspective. Imagine employees being given incorrect or improper advice that is contrary to either company policy, legal guidelines or just plain human intellect?”

The hazards aren’t limited to occasional instances of bad policy advice, either. They can be existential.

“While [AI] may be the catalyst for the first one-person billion-dollar company, it could also be the downfall of an existing billion-dollar company,” Flower said. “I predict significant turbulence in the coming few years as AI advances … [and] as people continue to put false expectations and trust in technology over humans in the quest for greater financial value.”

Are Your AI Agents A Data Breach Waiting To Happen?

Teams are deploying AI agents with broad API access and minimal oversight. But what starts as helpful automation can quickly become a security incident.

The problem isn’t the AI: it’s something called the “infrastructure gap.” Most teams simply bolt agents onto existing systems without proper boundaries, audit trails, or access controls. A misconfigured agent can delete vital files, trigger unwarranted financial transactions, or expose sensitive data, all because no one defined what it should or shouldn’t be able to do.

WorkOS addresses this systematically with:

- Machine-to-machine authentication that verifies every agent request.

- Token-based permissions that restrict what agents can access.

- Real-time monitoring that logs and audits agent activity.

Fast-moving teams use WorkOS to build secure agent workflows without having to rebuild security infrastructure.

How Next-Gen Cybersecurity Workers Are Adapting to the Age of AI

Cybersecurity is evolving by the day. What does that mean for how it’s taught and what the next generation of students is bringing into the workforce?

When Chris Simpson, the director of National University’s Center for Cybersecurity, began teaching 15 years ago, it would take students days to set up labs; now they can simply click a link and be ready to go.

Over time, “the shift toward workforce readiness has been the most significant change” in the classroom, Simpson said. “Universities have moved beyond purely theoretical frameworks to embrace practical, hands-on learning.”

And that click-a-link ease is great for democratizing cybersecurity education: It means there’s lower technical overhead, and universities have one less massive bill to contend with.

But even as the field itself and levels of access change, Simpson said, the fundamentals still matter.

Building on the CIA Triad

“Confidentiality, integrity and availability (the CIA triad), along with a solid understanding of the network stack, remain the bedrock of everything we do,” he said.

Beyond those basics, however, the skills employers demand keep expanding as cyber threats grow in both number and complexity.

“In the past, companies mainly looked for IT professionals with strong technical skills like network security and coding,” Simpson said. “Now, there is a much greater demand for a wider range of skills, including cloud security, threat intelligence, risk management and even soft skills like communication and teamwork.”

With companies leaning into artificial intelligence, educators are treating AI use as a skill in its own right, making sure new workers use the tech to augment their abilities rather than depend on it. Critical thinking and problem-solving are vital; running prompts alone won’t suffice.

“Organizations need more specialists in areas like cloud computing, incident response, and data privacy,” Simpson said. As a result, colleges are creating more programs to meet future employers’ needs. The goal is to educate workers who “are more flexible, better trained, and ready to adapt to fast-changing threats while using AI responsibly and strategically.”

Google Wants to Fix a Lot About Video

Video calls have transformed the workplace, but they’re not without their problems: connection issues, equipment malfunctions and more.

Google is expressing interest in making calls a little clearer with two new patent applications.

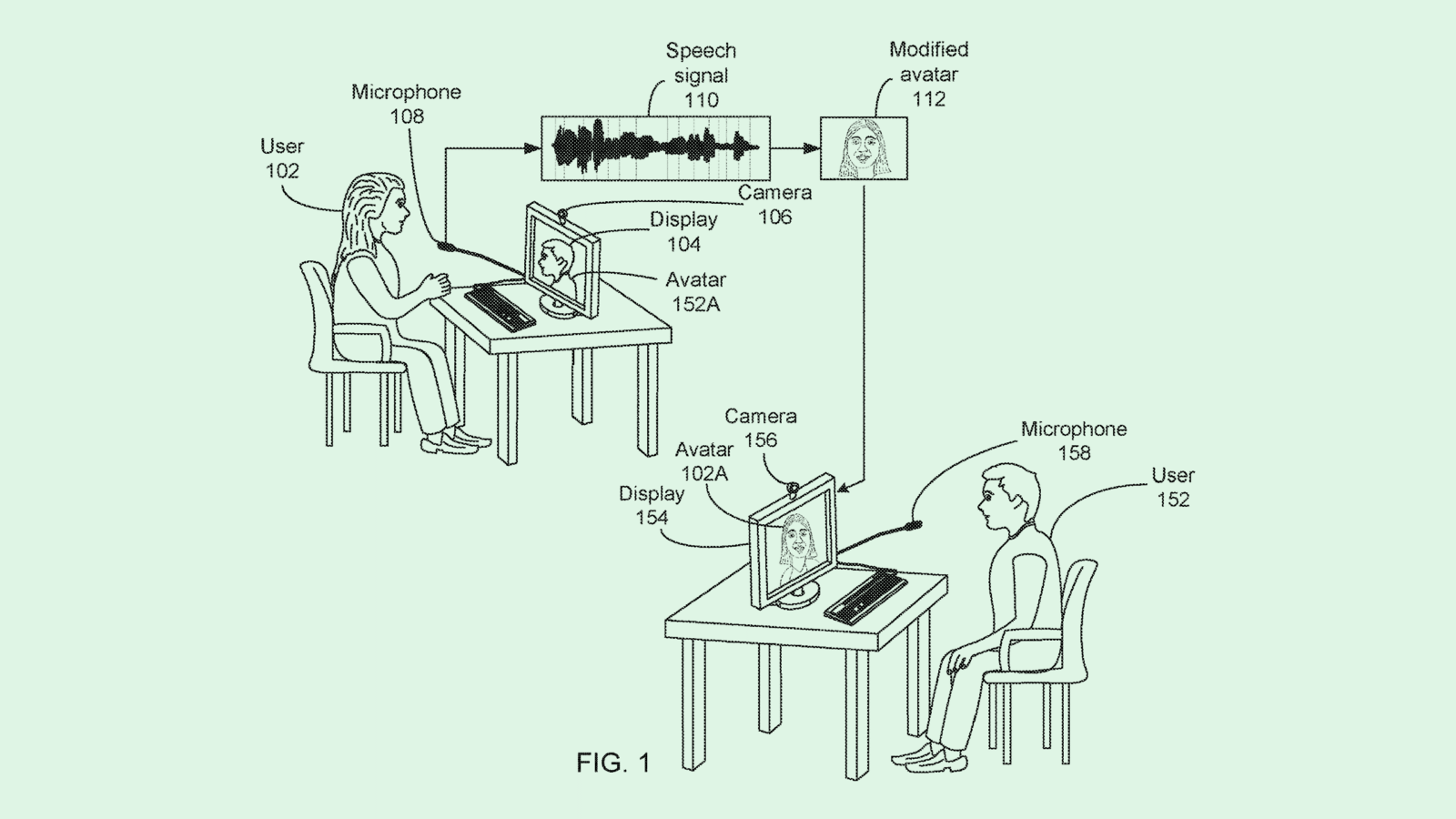

In one, the Mountain View, California-based company is seeking to patent a method for “modifying a facial feature of an avatar” to make its speech look more realistic when callers rely on animated representations of themselves instead of live video.

First, the system analyzes a voice recording and identifies moments where the sounds change because of a new word or syllable. Then, it determines what an avatar’s mouth should be doing at the moment any of those variations occur: opening, closing or changing shape. There’s a particular focus on vowel sounds, because they usually have smoother transitions.

The Natural Look

Based on all of that collected information, the system updates the avatar’s face, including its lip shapes and mouth positions, so that it more convincingly appears to be saying the words.

That enables digital characters to speak in a way that looks more natural, with their facial movements better synced with the actual sounds coming from their mouths.
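The patent application doesn’t spell out an implementation, but the general idea (placing mouth keyframes where the sound changes, and easing into vowels more gradually than consonants) can be sketched in a few lines of Python. This is a hypothetical illustration, not Google’s method: it assumes the voice recording has already been turned into timed phonemes sorted by start time (using ARPAbet-style labels such as “AA” and “IY”), and the mouth-shape table and blend times are invented values.

```python
from dataclasses import dataclass

# Hypothetical mouth shapes as (openness, width), each from 0.0 to 1.0.
# Labels follow ARPAbet-style phoneme names; the values are invented.
VISEMES = {
    "rest": (0.0, 0.5),
    "M": (0.0, 0.5),    # lips pressed together
    "F": (0.1, 0.6),    # lower lip against upper teeth
    "AA": (0.9, 0.6),   # "father": jaw wide open
    "IY": (0.3, 0.9),   # "see": lips spread
    "UW": (0.3, 0.2),   # "blue": lips rounded
}
VOWELS = {"AA", "IY", "UW"}


@dataclass
class Keyframe:
    time: float                 # seconds from the start of the clip
    shape: tuple[float, float]  # (openness, width)


def keyframes_from_segments(segments, vowel_blend=0.08, consonant_blend=0.03):
    """Turn (start_seconds, phoneme) segments, sorted by time, into keyframes.

    Each point where the sound changes gets a pair of keyframes: the previous
    shape is held until shortly before the change, then the mouth moves to
    the new shape. Vowels get a longer blend window than consonants, so the
    mouth eases into them more smoothly.
    """
    frames = [Keyframe(0.0, VISEMES["rest"])]
    for start, phoneme in segments:
        blend = vowel_blend if phoneme in VOWELS else consonant_blend
        previous = frames[-1]
        # Hold the old shape until the blend window opens (never going back in time).
        frames.append(Keyframe(max(start - blend, previous.time), previous.shape))
        # Reach the new shape as the new sound begins; unknown sounds fall back to rest.
        frames.append(Keyframe(start, VISEMES.get(phoneme, VISEMES["rest"])))
    return frames


def mouth_shape_at(frames, t):
    """Linearly interpolate the mouth shape at time t (seconds)."""
    if t <= frames[0].time:
        return frames[0].shape
    for a, b in zip(frames, frames[1:]):
        if a.time <= t <= b.time:
            if b.time == a.time:
                return b.shape
            f = (t - a.time) / (b.time - a.time)
            return tuple(x + f * (y - x) for x, y in zip(a.shape, b.shape))
    return frames[-1].shape


# Example: "M" at 0.10 s, "AA" at 0.20 s, "IY" at 0.45 s.
frames = keyframes_from_segments([(0.10, "M"), (0.20, "AA"), (0.45, "IY")])
print(mouth_shape_at(frames, 0.16))  # halfway through the blend into "AA"
```

Calling `mouth_shape_at` once per rendered video frame (say, every 1/30 of a second) would give the lip positions to draw; a production system would presumably learn these shapes from data rather than use a hand-written table.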

Whether you’re using an avatar or putting your own face front and center, Google is also looking to patent a method to alter a video’s audio “to increase understandability.” The aim is to improve how people sound on calls so that there’s no trouble understanding them.

During a video call, the speaker’s voice is transmitted through the meeting software as audio. Under the method Google wants to patent, the system would recognize when a speaker is hard to understand (whether because of background noise, poor audio quality or unclear speech) and run the audio through an AI model.

The model then modifies the audio to make it easier to understand, whether by clarifying speech, adjusting the volume or removing background noise. Afterward, the improved audio is transmitted to other meeting participants in real time.
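Google’s filing describes an AI model doing the cleanup, but the detect-then-enhance flow itself can be sketched without one. The Python below is a hypothetical illustration, not Google’s method: it swaps the AI model for two simple signal-processing steps (a noise gate and loudness normalization), assumes audio arrives as lists of float samples between -1.0 and 1.0, and uses made-up thresholds.

```python
import math

# Hypothetical sketch: simple DSP heuristics stand in for Google's AI model.


def rms(samples):
    """Root-mean-square level of float samples in the range -1.0 to 1.0."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def split_frames(samples, size=160):
    """Cut audio into fixed-size frames (160 samples = 10 ms at 16 kHz)."""
    return [samples[i:i + size] for i in range(0, len(samples), size)]


def estimate_snr_db(samples):
    """Crude signal-to-noise estimate: the loudest 10% of frames stand in for
    speech, the quietest 10% for background noise."""
    levels = sorted(rms(f) for f in split_frames(samples))
    if not levels:
        return 0.0
    n = max(1, len(levels) // 10)
    noise = sum(levels[:n]) / n
    speech = sum(levels[-n:]) / n
    if noise == 0.0:
        return math.inf
    return 20 * math.log10(speech / noise)


def is_hard_to_understand(samples, min_snr_db=15.0, min_level=0.05):
    """Detection step: flag audio that is noisy (low SNR) or too quiet overall."""
    return estimate_snr_db(samples) < min_snr_db or rms(samples) < min_level


def enhance(samples, gate_ratio=2.0, target_rms=0.1):
    """Enhancement step, standing in for the AI model: silence frames near the
    noise floor (a noise gate), then scale what remains to a target loudness."""
    frames = split_frames(samples)
    levels = sorted(rms(f) for f in frames)
    noise_floor = levels[len(levels) // 10] if levels else 0.0
    gated = []
    for f in frames:
        gated.extend(f if rms(f) > gate_ratio * noise_floor else [0.0] * len(f))
    level = rms(gated)
    if level == 0.0:
        return gated
    gain = target_rms / level
    # Clamp to the valid sample range after applying gain.
    return [max(-1.0, min(1.0, s * gain)) for s in gated]


def process_chunk(samples):
    """Enhance only when needed; the result is what other participants would hear."""
    return enhance(samples) if is_hard_to_understand(samples) else samples


# Ten loud "speech" frames followed by ten frames of steady background hum.
speech = [0.5, -0.5] * 800
hum = [0.1, -0.1] * 800
noisy = speech + hum
print(is_hard_to_understand(noisy))  # prints True: SNR is about 14 dB, under 15 dB
cleaned = process_chunk(noisy)
```

Checking first and enhancing only when needed mirrors the two stages described above; a real system would run this on short streaming chunks to keep latency low enough for live calls.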

The two patents are part of Google’s push into productivity tech, and meeting tech in particular, as it competes with Zoom and Microsoft. Microsoft, in fact, is already seeking to read facial expressions during meetings, and Zoom is working on a work-life balancer.

With numerous meeting options available in a more remote world, the productivity race is on.

Extra Upside

- Keep Talking: Conversational AI startup Sesame raised $250M and launched its beta model.

- Unpinning Preferences: Pinterest is making it possible to see less (not no) AI in your feed.

- Bring Structure to AI Agent Access: WorkOS delivers enterprise-ready access controls, audit logs, and permission limits built to secure AI agent workflows. See how teams limit what agents can access without slowing down their work. Make your agents secure and scalable.*

* Partner

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.