Happy Monday, and welcome to CIO Upside.

Today: We had the opportunity to sit down with Heather Ceylan, CISO of cloud storage firm Box, to discuss the emerging threat landscape, where AI fits into security strategies and the importance of remembering the basics. Plus: What Oracle and OpenAI’s $300 billion partnership means for the AI industry; and Salesforce’s recent patent lets language models recycle prompts.

Before we jump in, we have a quick announcement: Today marks lead reporter Nat Rubio-Licht’s final newsletter with CIO Upside. On Monday, September 22, Rubio-Licht will be joining The Deep View, a newsletter focused on the evolving landscape of AI, as its senior reporter.

Now, let’s jump in.

In Security, ‘There’s Two Sides to AI,’ Box CISO Says

AI can be a double-edged sword in security. That doesn’t mean you shouldn’t leverage it.

While the technology has created a new set of risks, it also has the capacity to strengthen the weakest link in security: humans, Heather Ceylan, chief information security officer at cloud storage company Box, told CIO Upside.

“There’s two sides to AI,” said Ceylan. “It’s great from a security perspective, because it brings us so many new opportunities that maybe didn’t even exist before. But it also brings about a new set of risks.”

It’s no secret that AI has turned the threat landscape on its head, Ceylan said:

- The tech has given bad actors a far wider arsenal of tools for discovering and exploiting vulnerabilities. Plus, users who leverage AI without understanding that models can easily be reverse-engineered to spill the beans create a wider attack surface for hackers.

- The risks become even more pronounced in the age of AI agents, said Ceylan, which can create vulnerabilities as they act on our behalf “with varying degrees of independence.” Additionally, “adversary-controlled agents” present a threat, she noted.

The biggest vulnerability in an organization, however, is always going to be personnel, Ceylan said: “The number one cause of breaches today still is the human element.” Ceylan, who began her role at Box in May, was previously the deputy CISO at Zoom, shepherding the company through the security challenge of its skyrocketing popularity early in the pandemic.

The company’s “overnight” pivot from B2B to B2C forced her to quickly rethink its approach to security: “Before you had IT admin setting the security controls of the software, and now you have grandma that you’re Zooming with.”

That lesson in adaptability has helped her understand and manage the role humans play in creating vulnerabilities at Box, finding a balance between the good, the bad and the ugly of AI. Examples might include offering training on how to use AI safely or implementing “real-time” agents that monitor cyber-safety practices in your workforce’s day-to-day.

In more advanced cases, she said, agents may execute actions for security teams or perform design reviews to check for vulnerabilities in products prior to releases, while keeping a human in the loop for “sensitive actions.”

For enterprises struggling with their security posture in the face of AI, ignoring the tech isn’t an option, but you “can’t let it distract you from the basics” like consistent patch management, identity controls and risk monitoring, Ceylan said.

“AI amplifies both capabilities and risks,” said Ceylan. “But if you have the right defense posture, you’re combining identity controls, data governance, observability, human oversight … you shouldn’t let the risks slow you down. You just have to manage them as you always have been.”

User Access Shouldn’t Break As You Scale

Every B2B software company faces the same problem: customers want to control which users can access what inside their app. Most teams start simple, then spend months building custom role systems that break every time they add new features.

WorkOS handles customer access control so you don’t have to:

- Define and manage roles and permissions that scale with your app.

- Sync role assignments from identity providers for consistent access.

- Manage users in a centralized, enterprise-ready dashboard.

Your engineering team stays focused on your actual product instead of rebuilding the same access systems every other B2B company needs. Whether you have 50 users or 50,000, the system grows with you without falling apart.

How OpenAI’s Partnership With Oracle Could Alter the AI Landscape

![September 12, 2025, Indonesia: In this photo illustration, the OpenAI logo is displayed on a smartphone with an ORACLE logo in the background. (Credit Image: © Algi Febri Sugita/SOPA Images via ZUMA Press Wire) (Newscom TagID: zumaglobalsixteen607294.jpg) [Photo via Newscom]](https://www.thedailyupside.com/wp-content/uploads/2025/09/zumaglobalsixteen607294-scaled-1600x1067.jpg)

In the age of rapid AI expansion, everyone has their eyes on the sky – or, at least on the cloud.

Last week, Oracle and OpenAI inked a deal in which the AI model developer will purchase an eye-popping $300 billion in compute power from the cloud provider over the span of five years, beginning in 2027, according to The Wall Street Journal.

The deal marks yet another massive investment by tech giants to develop the infrastructure needed to meet lofty AI goals. For enterprises, it should be a signal that AI isn’t something that can be ignored, said Trevor Morgan, COO of OpenDrives. “When you are talking about investments to that magnitude, this is not going away.”

In fact, if enterprises have any shot at achieving their sky-high goals, Morgan added, partnerships like these are necessary. “You can’t do it alone,” he said:

- Deals between massive firms like OpenAI and Oracle may force smaller companies to band together to stake their claim in the industry, he added.

- For any enterprise seeking to carve out their own “bit part” in the AI industry, “you’re going to need to find the right partners.” That means identifying what your niche is and where your weaknesses lie in the market.

- “These are partnerships that are super important to getting new technologies out to the consumers, because each one wouldn’t be able to touch the breadth without going in together,” he said.

If partnerships on the scale of OpenAI’s with Oracle don’t stall innovation, they may still force it within the parameters set by hyperscalers. The fact of the matter is that practically no enterprise will be able to compete with the likes of these businesses. Instead, “watch what they’re doing and ride in their wake,” he said.

“If you’re going to innovate, you’re going to innovate according to the way that Oracle and OpenAI want you to,” he said. “You’re going to play in our playground? Well, then these are the rules.”

How the massive investment will actually generate returns, however, remains to be seen, said Morgan. He compared massive AI models to an engine: While the possibilities seem endless, figuring out how to actually drive value is still a question mark.

“You just marvel at the amount of investment into this, and you ask ‘How are you going to recoup that?’” Morgan said. “This is underscoring the speculative nature of AI.”

Salesforce Patent Could Recycle AI Model Outputs

Salesforce wants to make sure its models aren’t repeating themselves.

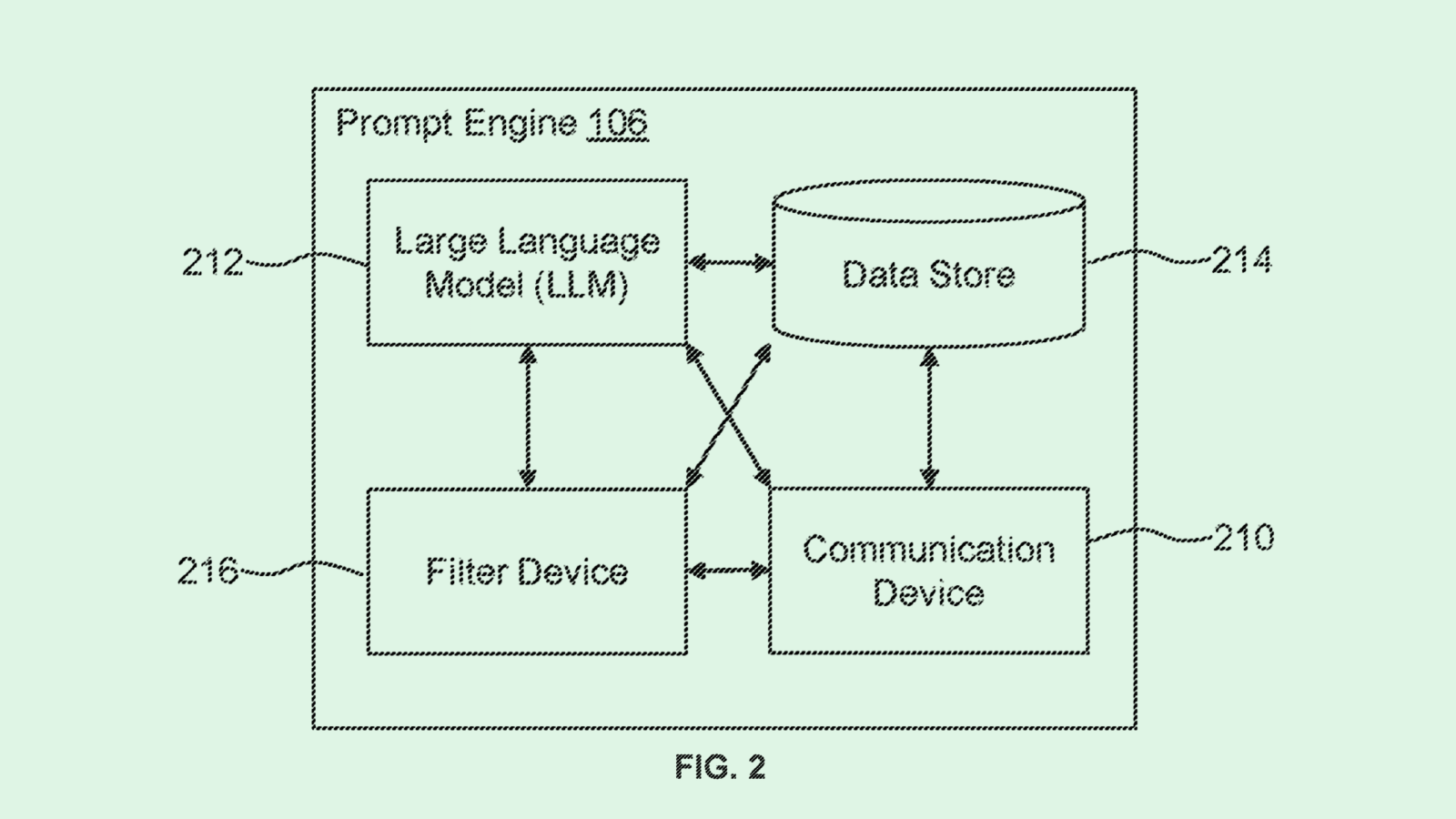

The company is seeking to patent a system for “pre-generative artificial intelligence prompt comparison,” which will essentially compare a user’s query to ones that have been previously sent to make sure the system isn’t starting from scratch to generate answers when asked a question it has handled before.

“(A large language model) may use any of its training data to formulate a response,” Salesforce said in the filing. “This presents a problem in certain environments, where an LLM is used, but precise language is required.”

As the title of the patent suggests, the system will take an input prompt and compare it to previous prompts, which are all stored by the LLM, grading the new prompt by whether it meets a threshold of similarity to previous queries. If it meets or surpasses the similarity threshold, the system will reuse an old response, no generation necessary. If it doesn’t, the model will craft a new response.

When reusing old responses, the model will apply certain rules to what the output can show. For example, it may censor “banned phrases,” or ones that are inappropriate or confidential, as well as insert “selected phrases,” or precise language that’s necessary depending on the field.
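The flow the filing describes (compare, reuse above a threshold, then apply phrase rules) can be sketched in a few lines of Python. This is a minimal illustration, not Salesforce's method: the filing as quoted here does not say how similarity is measured, so the sketch assumes a simple character-level ratio from Python's standard `difflib`, and the class name `PromptCache`, the `0.9` threshold and the `[redacted]` placeholder are all hypothetical.

```python
from difflib import SequenceMatcher


class PromptCache:
    """Reuse a stored response when a new prompt is similar enough to an old one.

    Hypothetical sketch: the similarity measure and all names are assumptions.
    """

    def __init__(self, threshold=0.9, banned=(), selected=()):
        self.threshold = threshold      # minimum similarity needed to reuse
        self.banned = list(banned)      # phrases to censor in reused output
        self.selected = list(selected)  # precise language that must appear
        self.entries = []               # stored (prompt, response) pairs

    @staticmethod
    def similarity(a, b):
        # Assumed metric: character-level ratio between 0.0 and 1.0.
        return SequenceMatcher(None, a.lower().strip(), b.lower().strip()).ratio()

    def apply_rules(self, text):
        # Censor banned phrases, then append any missing selected phrases.
        for phrase in self.banned:
            text = text.replace(phrase, "[redacted]")
        for phrase in self.selected:
            if phrase not in text:
                text = f"{text} {phrase}"
        return text

    def answer(self, prompt, generate):
        """Return (response, reused). `generate` stands in for the LLM call."""
        best = max(
            self.entries,
            key=lambda entry: self.similarity(prompt, entry[0]),
            default=None,
        )
        if best is not None and self.similarity(prompt, best[0]) >= self.threshold:
            # Threshold met: reuse the old response, no generation needed.
            return self.apply_rules(best[1]), True
        # Below threshold: generate a new response and store it for next time.
        response = generate(prompt)
        self.entries.append((prompt, response))
        return response, False


# Usage with a stand-in generator instead of a real model.
cache = PromptCache(
    banned=["Project Falcon"],
    selected=["Past performance does not guarantee future results."],
)
first, reused_first = cache.answer(
    "What did the fund return last year?",
    lambda p: "Project Falcon returned 5% last year.",
)
second, reused_second = cache.answer(
    "what did the fund return last year? ",
    lambda p: "This generator should not be called.",
)
```

In a production system the comparison would more likely run over embeddings than raw characters, but the control flow stays the same: the expensive generation step only happens when no stored prompt clears the threshold.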

Salesforce’s application identified a few benefits of the tech. For starters, reusing old responses could help save the model energy that it would have expended in generating new phrasing. Applying content rules also could prevent inaccurate responses or data security slip-ups. For example, “in certain fields, such as finance or wealth management, precise language is required and there is a need to ensure that LLM responses are consistent,” the filing noted.

Tech that saves energy and ensures accuracy is increasingly important as companies seek to figure out where AI fits in without breaking the bank or causing a security meltdown. Given that Salesforce has staked a lot of its future on agentic AI – which security experts have expressed concern over – making sure they’re getting things right is crucial as enterprises seek to give these systems more autonomy.

Extra Upside

- Budding Talent: OpenAI announced a new mentorship program, called “OpenAI Grove,” to support early tech entrepreneurs.

- Overseas Investment: Nvidia and OpenAI are reportedly in talks on a deal to support data center development in the U.K.

- Stop Rebuilding Access Controls. Customers expect to control which users access what inside your app. WorkOS handles role and permission management so your engineering team can stay focused on building core features. Integrate WorkOS RBAC into your app today.*

* Partner

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.