Microsoft Explainability Patent Highlights Need for AI Trustworthiness

These technologies still have some trustworthiness issues.


Are two models better than one?

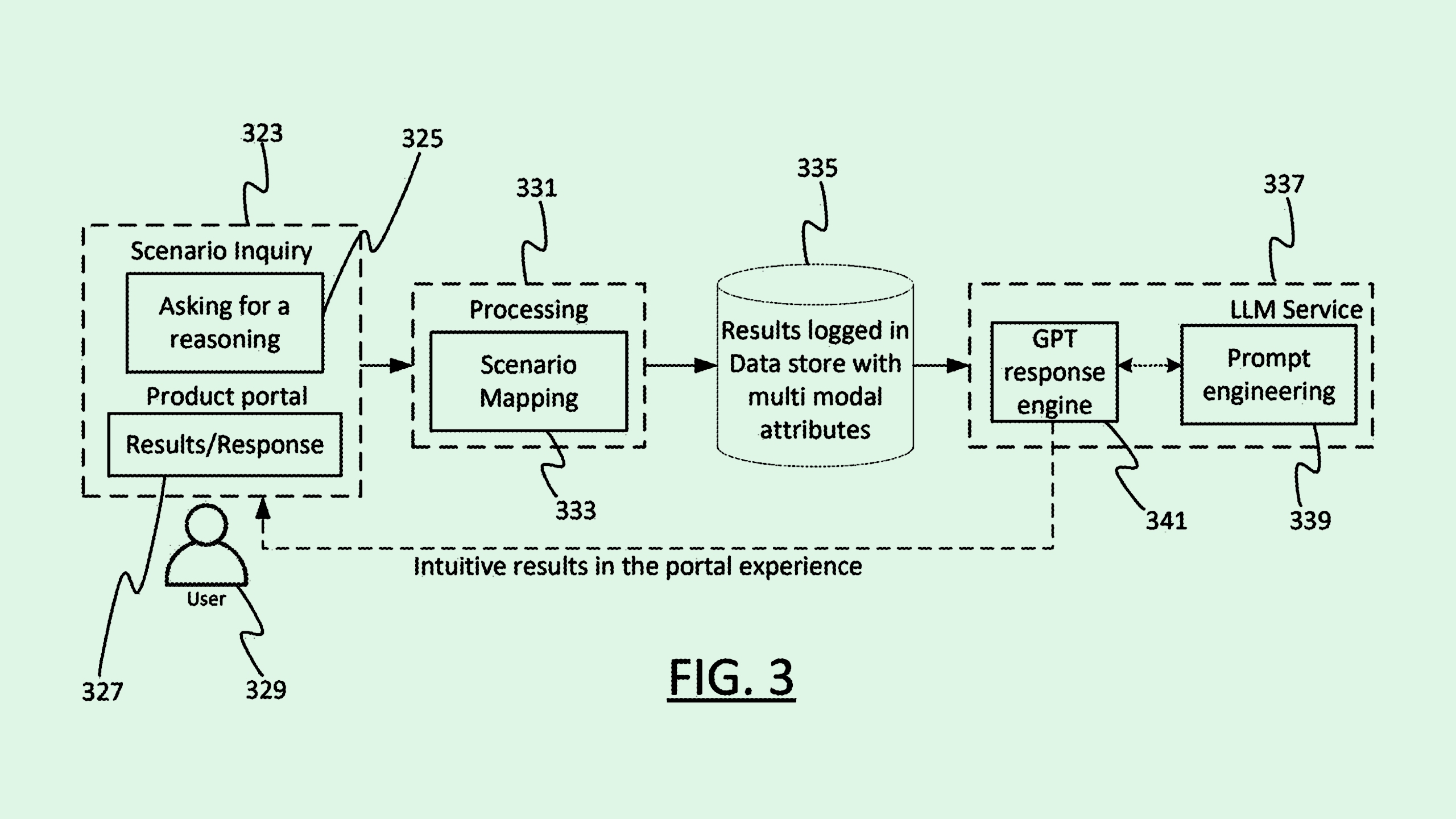

Microsoft might think so: The company is seeking to patent a “generative AI for explainable AI,” which uses other generative models to explain a machine learning output, helping users better understand where a model is getting its answers.

When asked to explain a machine learning prediction, the system analyzes features of the input data and the corresponding output. Microsoft’s tech may also pull additional content, such as user preferences, previous explanations or subject matter knowledge.

The system will then come up with multiple possible explanations for the output. For example, if a person wants to know why a loan was approved or denied, the system may analyze the loan application as well as historical data to discover contributing factors. Finally, the system uses a second generative model to rank those possible explanations based on relevance.
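For readers who think in code, the generate-then-rank flow described above might look roughly like the sketch below. It is only an illustration, not the method in Microsoft's filing: the names `Prediction`, `Context`, `generate_candidates`, `score_relevance` and `explain` are hypothetical, and both generative models are replaced with simple stand-in functions so the example runs on its own.

```python
from dataclasses import dataclass, field


@dataclass
class Prediction:
    features: dict  # input features the ML model saw
    output: str     # the model's decision, e.g. "denied"


@dataclass
class Context:
    # Hypothetical container for the optional extra content the article mentions.
    user_preferences: dict = field(default_factory=dict)
    domain_notes: dict = field(default_factory=dict)  # feature name -> importance hint


def generate_candidates(pred, ctx):
    """Stand-in for the first generative model: one candidate per input feature."""
    return [
        f"The application was {pred.output} partly because {name} was {value}."
        for name, value in pred.features.items()
    ]


def score_relevance(explanation, ctx):
    """Stand-in for the second generative model: sum hints for features mentioned."""
    return sum(w for name, w in ctx.domain_notes.items() if name in explanation)


def explain(pred, ctx, top_k=2):
    """Generate several candidate explanations, then keep the most relevant ones."""
    candidates = generate_candidates(pred, ctx)
    ranked = sorted(candidates, key=lambda e: score_relevance(e, ctx), reverse=True)
    return ranked[:top_k]


# Made-up loan application and importance hints, purely for illustration.
loan = Prediction(
    features={"credit_score": 580, "income": 42000, "loan_amount": 30000},
    output="denied",
)
ctx = Context(domain_notes={"credit_score": 0.7, "loan_amount": 0.2, "income": 0.1})
top = explain(loan, ctx)
for line in top:
    print(line)
```

In a real system, both stand-in functions would presumably be calls to generative models; the point here is only the two-stage shape, with candidates produced first and ranked second.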

“(Explainable AI) can be used by an AI system to provide explanations to users for decisions or predictions of the AI system,” Microsoft said in the filing. “This helps the system to be more transparent and interpretable to the user, and also helps troubleshooting of the AI system to be performed.”

While tech giants have been keen on building and deploying AI, these technologies still have some trustworthiness issues. Without proper monitoring, problems like bias and hallucination continue to pose threats to the accuracy of machine learning outputs.

As a result, some enterprises are taking an interest in responsible AI frameworks: A report from McKinsey found that the majority of companies surveyed planned to invest more than $1 million in responsible AI. Those that had already implemented these frameworks have seen benefits including consumer trust, brand reputation and fewer AI incidents.

As Microsoft seeks to elbow its way to the front of the AI race, researching explainable and responsible AI technologies could give it a reputational boost and help protect it from liability.