The Time and Place for AI Code Generators

Though chatbots do code’s heavy lifting, humans need to be in charge.


When a chatbot can generate code in the blink of an eye, where do developers fit in?

The AI revolution has brought new ways to automate and augment just about every task, and code-writing is a major one. Several tech giants, including Microsoft, Amazon, IBM, and Google, have introduced code-generation tools in the past year or so.

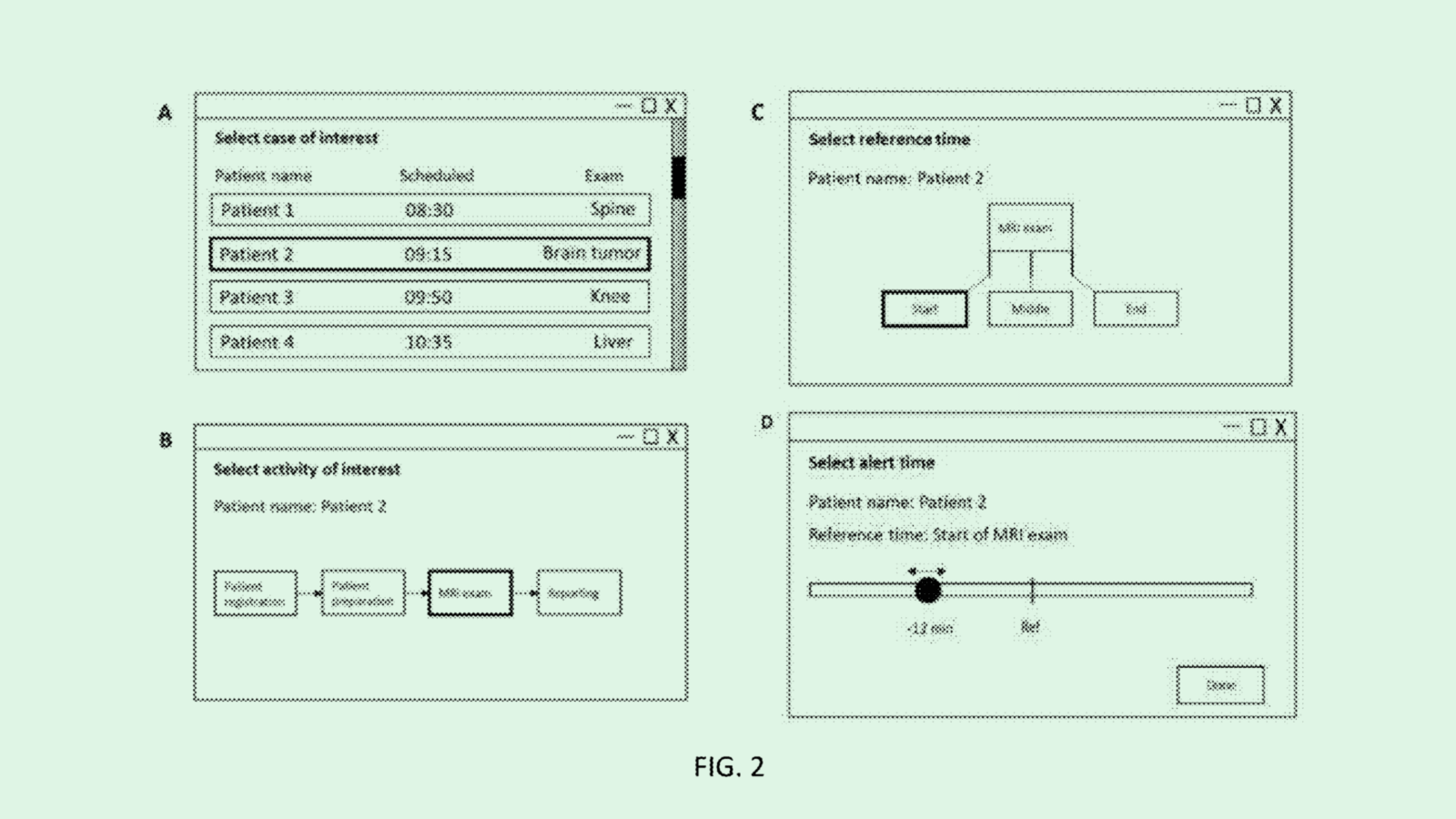

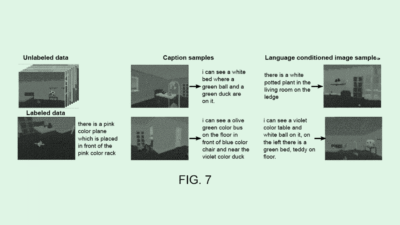

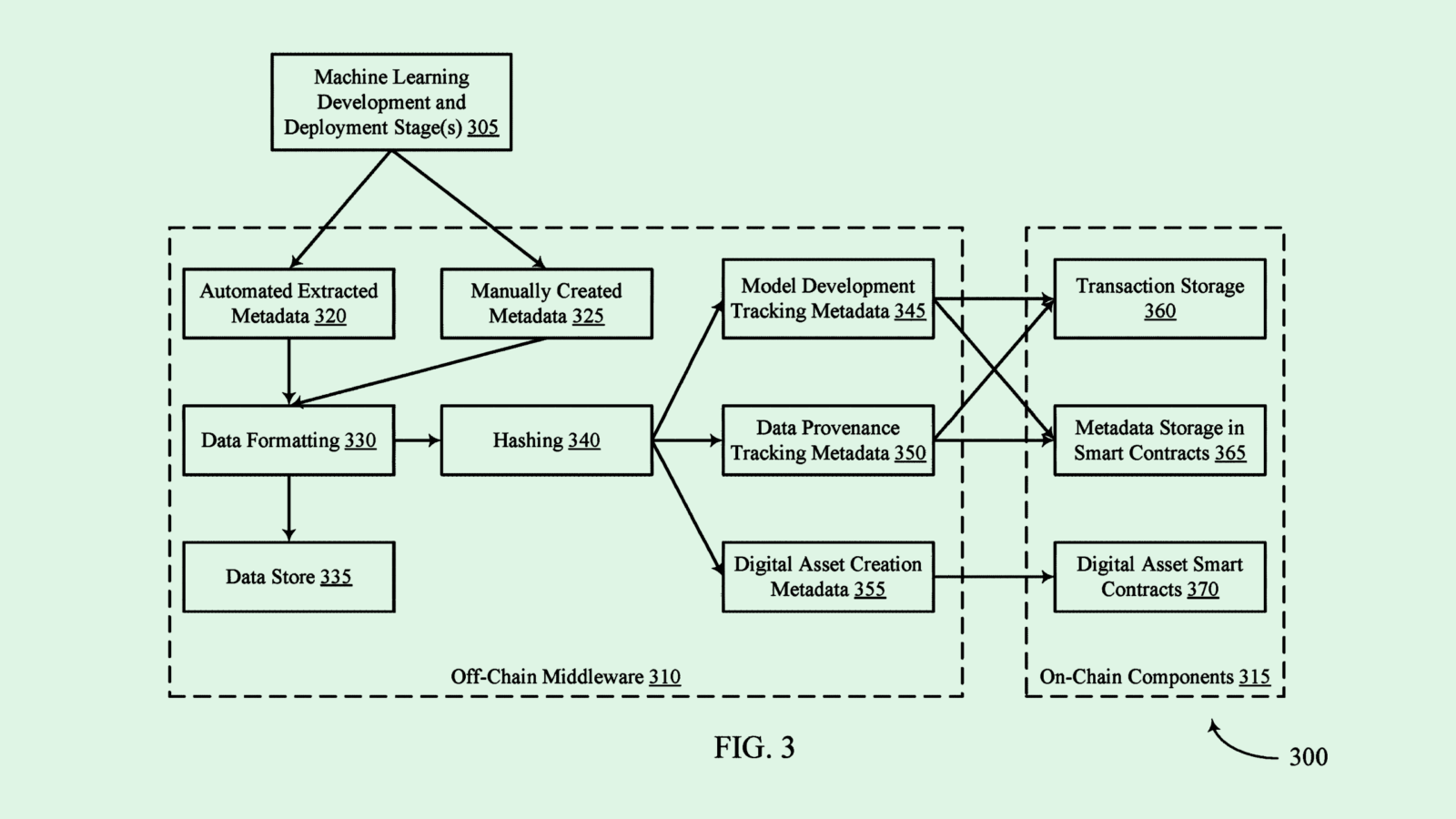

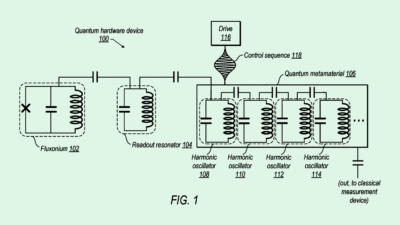

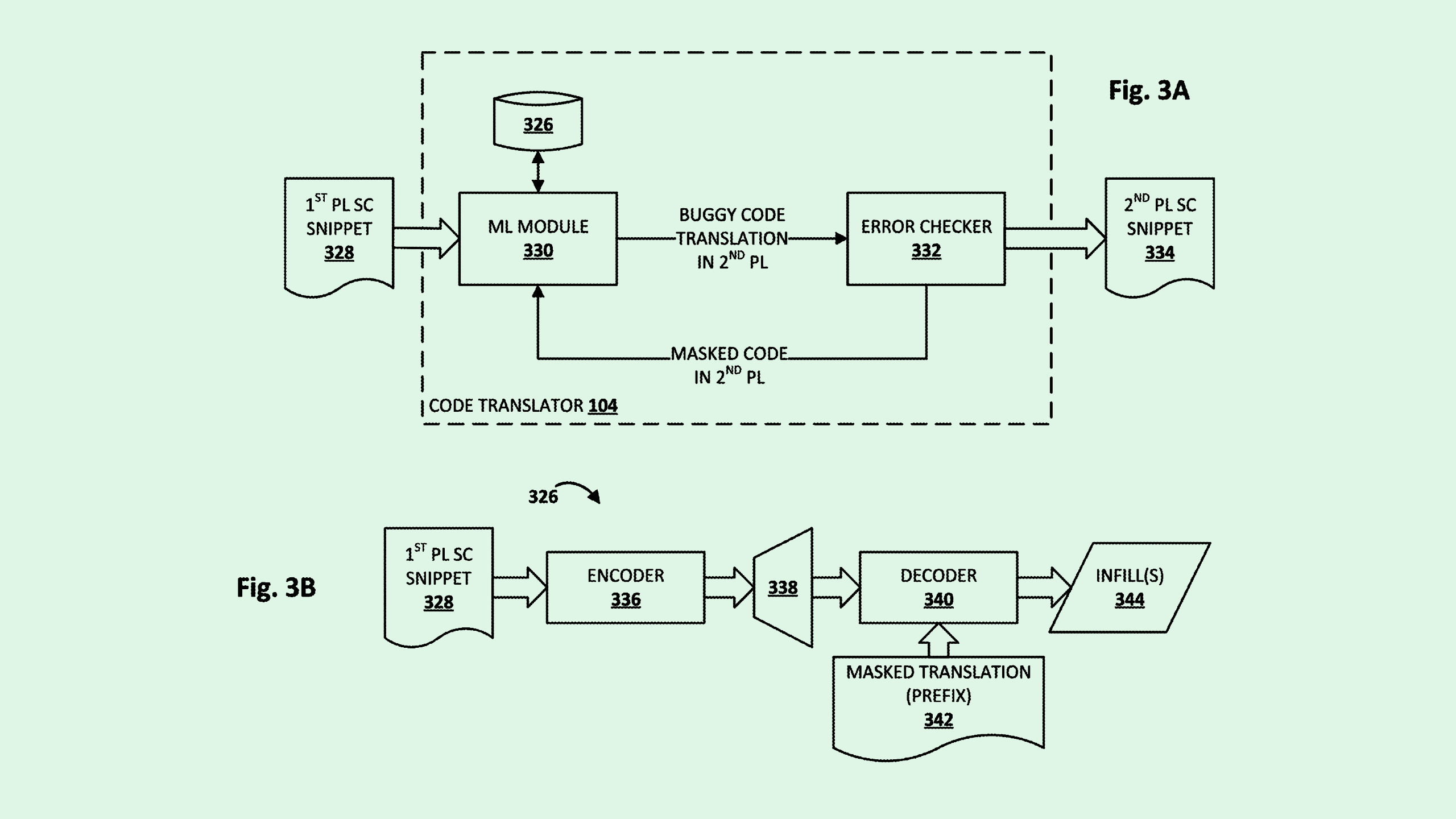

An examination of recent patent applications also reveals an interest in making AI code generation smoother. Google, for example, is seeking to patent a system for “iterative neural code translation,” which improves the quality of automatic translation between programming languages by identifying bugs in the translated code and fixing them over several tries. This tech uses neural networks to make sure things don’t get lost in translation – literally.
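To make the translate, check, and retry idea concrete, here is a minimal Python sketch of such a loop. It is an illustration under stated assumptions, not the method described in Google's filing: `iterative_translate`, `toy_translate`, and `toy_check` are hypothetical names, and the "model" is a stub that returns a buggy draft first and a corrected one once it receives feedback.

```python
from typing import Callable, Optional, Tuple

# Hypothetical signatures for illustration; neither comes from the patent application.
Translator = Callable[[str, Optional[str]], str]  # (source, feedback) -> candidate code
Checker = Callable[[str], Optional[str]]          # candidate -> error message, or None if it passes


def iterative_translate(
    source: str, translate: Translator, check: Checker, max_tries: int = 3
) -> Tuple[str, int, bool]:
    """Translate, check the result, and retry with the error as feedback."""
    feedback: Optional[str] = None
    candidate = ""
    for attempt in range(1, max_tries + 1):
        candidate = translate(source, feedback)
        feedback = check(candidate)
        if feedback is None:
            return candidate, attempt, True  # passed the check
    return candidate, max_tries, False  # out of tries; a human should take over


def toy_translate(source: str, feedback: Optional[str]) -> str:
    """Stand-in for a model: the first draft has a bug, the retry fixes it."""
    if feedback is None:
        return "def add(a, b):\n    return a - b\n"
    return "def add(a, b):\n    return a + b\n"


def toy_check(candidate: str) -> Optional[str]:
    """Run the candidate against one test case and report any failure."""
    namespace: dict = {}
    try:
        exec(candidate, namespace)
        result = namespace["add"](2, 3)
    except Exception as exc:
        return f"error: {exc!r}"
    return None if result == 5 else f"add(2, 3) returned {result}, expected 5"


code, tries, ok = iterative_translate(
    "int add(int a, int b) { return a + b; }", toy_translate, toy_check
)
print(tries, ok)  # prints "2 True"
```

The cap on attempts matters as much as the retry itself: when the loop runs out of tries, it returns a failure flag rather than unverified code, which leaves the final call to a person.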

It also falls in line with a number of other code generation patents that Google has sought in the past, as well as Google’s own internal push to create more code with AI: CEO Sundar Pichai said in the company’s third-quarter earnings call that more than a quarter of new code for its products is initially generated by AI before being reviewed by engineers. “This helps our engineers do more and move faster,” Pichai said.

This strategy raises the question: What is the right time and place to use an AI code generator? Though these tools are generally reliable, they still occasionally hallucinate and make mistakes, as every generative AI product does, said Kaj van de Loo, chief product and technology officer and chief innovation officer at UserTesting.

For enterprises, it’s important to think about what that code may be used for, he said. For instance, if you need to write code that’s “super mission-critical and needs to perform flawlessly,” it may not be the best use of code-generation software. But for everyday code-writing, he said, “There are some areas that are so right for this, where I think anybody not doing it is missing out big time.”

But in any case, it’s vital that a human remains in the loop, said van de Loo. Though we may soon get to a place where humans and machines can reliably work together to check code, these models can’t replace developers: Researchers in China recently discovered that ChatGPT is overconfident in the quality of its code when checking its own work.

“In the AI tooling space, there are two approaches to delivering value: One is to augment humans, and one is to replace humans,” said van de Loo. “With our code-generation, we’re still pretty firmly in the augmentation camp. A human needs to take responsibility and own the code.”