Google’s Differential Privacy Patent Highlights AI Training Risks

With data becoming increasingly valuable, keeping it safe is a constant concern.


Google wants to make its data a little bit noisier.

The company is seeking to patent a system for “efficient generation of differential privacy noise.” For reference, differential privacy is a technique that protects individual data points within a dataset by adding “noise,” or randomness, to the data or to the results computed from it, obscuring the features of any one record.

“Differential privacy can be used to generate aggregate reports and/or statistical information while preserving the privacy of users for which data is included in the reports and/or statistical information,” Google said in the filing.
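To make the idea concrete, below is a minimal sketch of the classic Laplace mechanism applied to an aggregate count, the kind of statistic the filing describes. It is the textbook technique, not the method claimed in Google’s patent. The function names `laplace_noise` and `private_count`, and the use of a seeded `random.Random` generator, are illustrative assumptions.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Draw one sample from a Laplace distribution centered at 0.

    The difference of two independent exponential draws, each with mean
    `scale`, is Laplace-distributed with that same scale parameter.
    """
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(records: list[bool], epsilon: float, rng: random.Random) -> float:
    """Release a count under epsilon-differential privacy (Laplace mechanism).

    Adding or removing one user's record changes the true count by at most 1,
    so the query's sensitivity is 1 and the noise scale is 1 / epsilon.
    Smaller epsilon means stronger privacy and more noise.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    sensitivity = 1.0  # max change in the count from any single user
    true_count = sum(records)  # True counts as 1, False as 0
    return true_count + laplace_noise(sensitivity / epsilon, rng)
```

For example, `private_count([True] * 40 + [False] * 60, 1.0, random.Random(0))` returns a value scattered around the true count of 40; lowering `epsilon` widens that scatter, which is the privacy-versus-usefulness trade-off in miniature.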

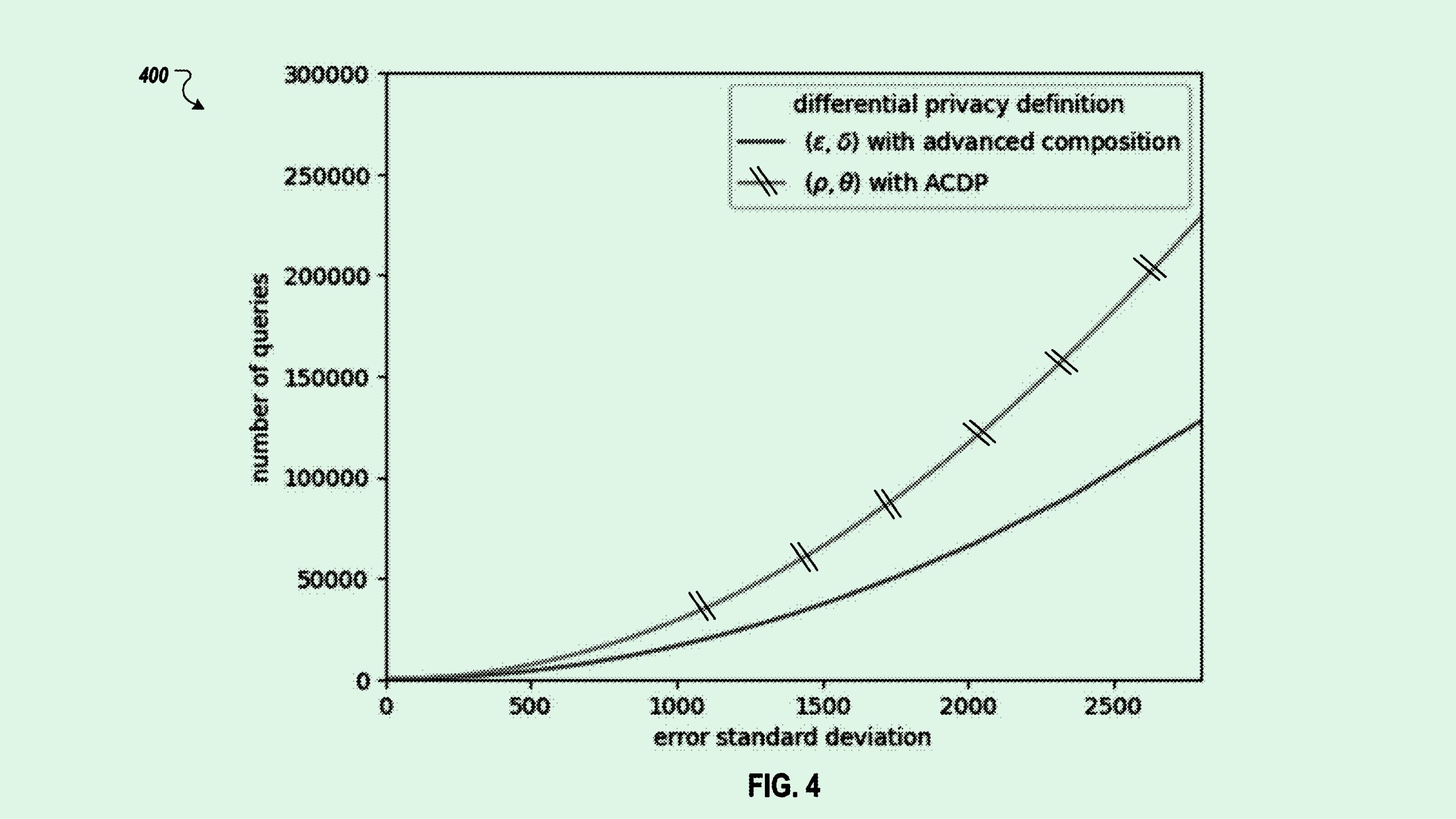

Differential privacy lives on a spectrum. Too little noise, and the data isn’t obscured enough, making it a privacy risk. Too much noise, on the other hand, and the features become overly obscured, making the data useless.
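That spectrum is usually formalized with a privacy parameter ε. The textbook definition and the standard Laplace mechanism appear below; this is the general formulation from the differential privacy literature, not necessarily the calibration Google’s filing uses.

```latex
% A randomized mechanism M is \varepsilon-differentially private if, for all
% datasets D, D' that differ in one person's record and every set of outputs S:
\Pr[M(D) \in S] \le e^{\varepsilon} \, \Pr[M(D') \in S]

% Laplace mechanism for a numeric query f with sensitivity \Delta f:
\Delta f = \max_{D, D'} \lvert f(D) - f(D') \rvert, \qquad
M(D) = f(D) + \mathrm{Lap}\!\left(\frac{\Delta f}{\varepsilon}\right)

% Expected absolute error of the released value:
\mathbb{E}\,\lvert M(D) - f(D) \rvert = \frac{\Delta f}{\varepsilon}
```

A smaller ε gives a stronger privacy guarantee but a larger expected error, so the too-little-noise versus too-much-noise tension reduces to choosing ε.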

Google’s patent seeks to strike a balance. Put simply, the system uses statistical modeling to find the most noise that can be added to keep a dataset private without drowning out too many of the data’s integral features. The method offers precise control over the differential privacy of data while scaling to larger datasets.

With data becoming increasingly valuable in the pursuit of bigger and better AI, privacy is a constant concern. Some large model developers scrape everything they can from the web to feed their massive foundation models.

But for many enterprises, it’s not so simple. Using enterprise data to train AI can be a privacy minefield, and the consequences of a slip-up can be dire: IBM’s recent Cost of a Data Breach report found that 60% of AI-related security incidents led to compromised data and 31% led to operational disruption. And of the organizations that reported an AI-related breach, 97% said they had no AI access controls in place.

Differential privacy of the kind Google’s patent proposes could add a layer of protection to a model’s core data before it falls into the wrong hands.