IBM Patent Adds Guardrails for Agentic AI

Its recent filing could dynamically keep models in check, adjusting to the environment over time.

How can you make sure your AI assistants don’t go off the rails?

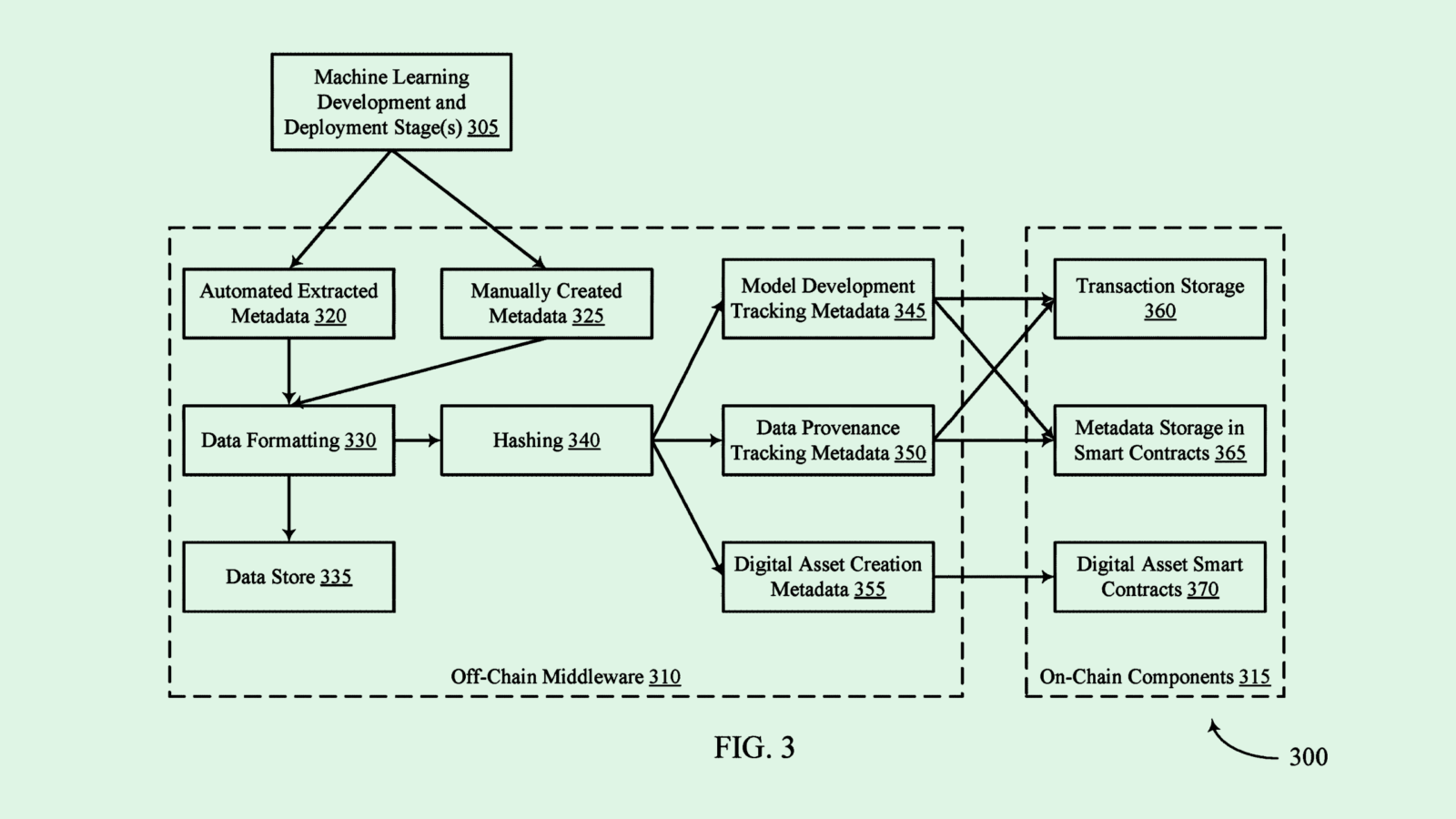

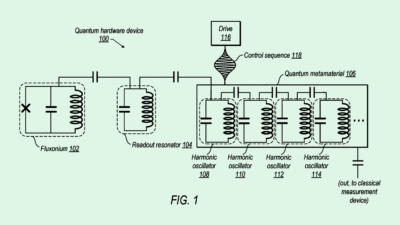

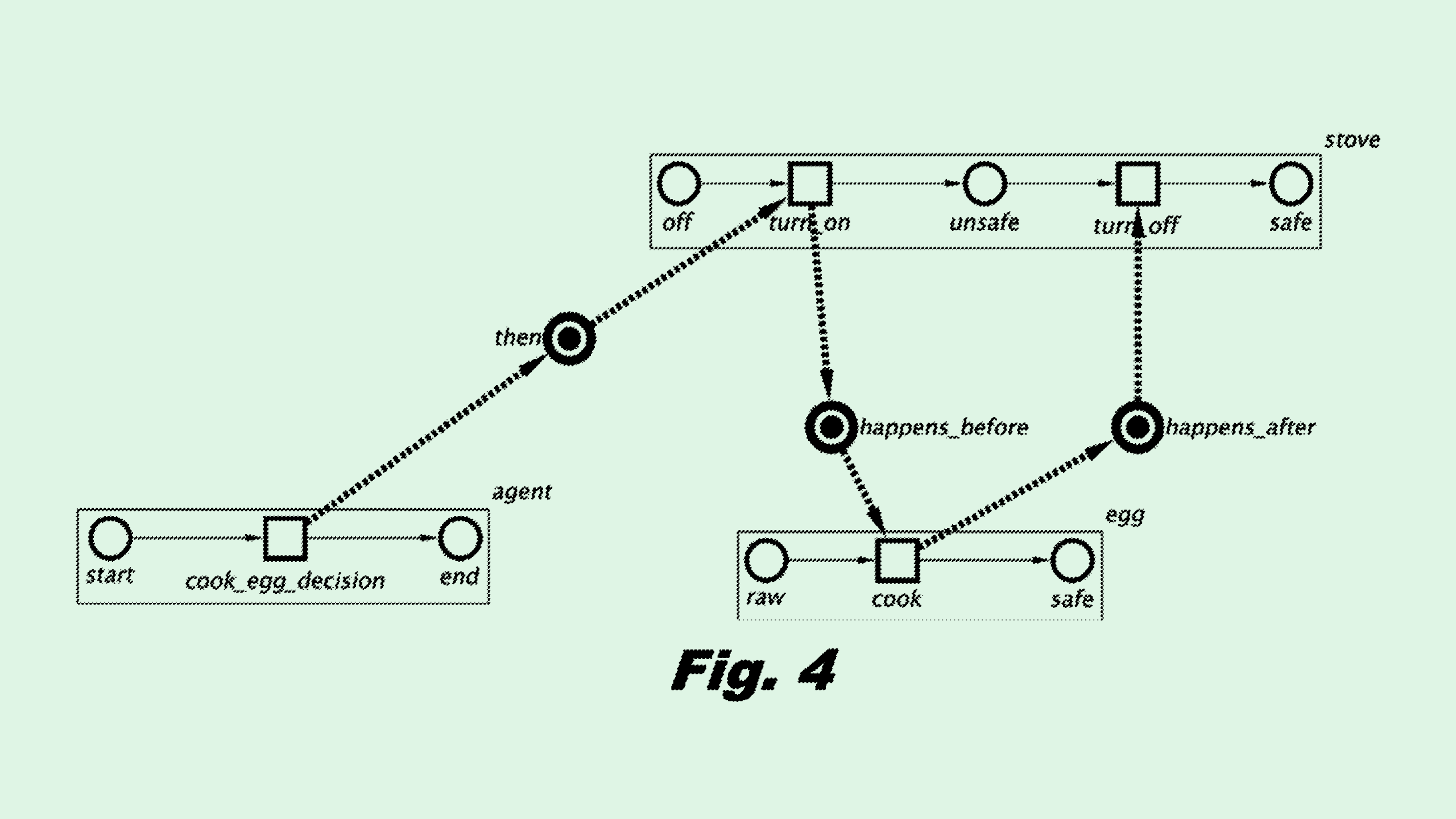

IBM might have something for that: The tech firm is seeking to patent a system for “constrained policy optimization for safe reinforcement learning.” The technology builds constraints into language models to keep them from picking up risky behaviors during reinforcement learning.

For reference, reinforcement learning is a training technique that teaches a model to operate in an environment through rewards and penalties; the goal of a reinforcement learning agent is to “maximize a cumulative reward over time.” IBM’s tech extracts “safety hints” from a model’s behavior that indicate potential risks, then uses those hints to assign a cost to the corresponding actions.
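To make the split between reward and cost more concrete, here is a minimal sketch of how a text agent's outputs might be scored. The filing, as described here, does not say how safety hints are detected, so the pattern list, the `extract_safety_hints` and `score_step` helpers, and the per-hint cost are all hypothetical; a simple keyword match stands in for whatever detector a real system would use.

```python
import re
from dataclasses import dataclass

# Hypothetical risk patterns standing in for a real "safety hint" detector.
RISK_PATTERNS = {
    "credential_leak": re.compile(r"\b(password|api[_ ]key)\b", re.IGNORECASE),
    "destructive_command": re.compile(r"\brm\s+-rf\b"),
}


@dataclass
class StepScore:
    reward: float      # how well the output did the task
    cost: float        # how risky the output was, tracked separately
    hints: list[str]   # which (hypothetical) safety hints fired


def extract_safety_hints(text: str) -> list[str]:
    """Return the names of all risk patterns found in a model output."""
    return [name for name, pattern in RISK_PATTERNS.items() if pattern.search(text)]


def score_step(text: str, task_reward: float, cost_per_hint: float = 1.0) -> StepScore:
    """Keep reward and cost as separate signals instead of folding them together.

    A constrained optimizer can then cap the expected cost directly rather
    than relying on a hand-tuned penalty baked into the reward.
    """
    hints = extract_safety_hints(text)
    return StepScore(reward=task_reward, cost=cost_per_hint * len(hints), hints=hints)


if __name__ == "__main__":
    safe = score_step("Here is a summary of the quarterly report.", task_reward=0.9)
    risky = score_step("Sure, run `rm -rf /` and paste your password here.", task_reward=0.9)
    print(safe)
    print(risky)
```

Both outputs earn the same task reward here; only the cost differs. Keeping the two signals apart is what lets a constrained method limit risky behavior without redesigning the reward itself.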

“If the reward signal is not properly designed, the agent may learn unintended or even potentially dangerous behavior,” IBM said in the filing.

IBM’s system is dynamic, meaning it adjusts over time to better understand the needs and constraints of its environment. The tech is also designed specifically for text-based models, and could help keep chatbots and automated AI assistants in check.
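One standard way constrained policy optimization methods adapt over time is to treat the penalty weight on cost as a Lagrange multiplier that is itself learned: it grows while the agent's average cost exceeds a budget and shrinks once the agent complies. The sketch below shows only that update, using made-up per-batch costs. The budget, learning rate, and helper names are illustrative, and nothing here is confirmed as IBM's actual mechanism.

```python
def update_multiplier(lam: float, avg_cost: float, budget: float, lr: float) -> float:
    """Projected dual-ascent step: raise lam when cost exceeds budget, never below 0."""
    return max(0.0, lam + lr * (avg_cost - budget))


def penalized_objective(avg_reward: float, avg_cost: float, lam: float, budget: float) -> float:
    """Lagrangian the policy would maximize: reward minus lam times the constraint violation."""
    return avg_reward - lam * (avg_cost - budget)


def run(batch_costs: list[float], budget: float = 0.1, lr: float = 0.5) -> list[float]:
    """Apply one multiplier update per batch and record lam after each step."""
    lam = 0.0
    history = []
    for cost in batch_costs:
        lam = update_multiplier(lam, cost, budget, lr)
        history.append(lam)
    return history


if __name__ == "__main__":
    # Hypothetical per-batch average costs: the agent misbehaves early, then complies.
    costs = [0.5, 0.4, 0.3, 0.05, 0.0, 0.0]
    for step, lam in enumerate(run(costs)):
        print(f"batch {step}: lambda = {lam:.3f}")
```

With these numbers the multiplier climbs to 0.45 while costs exceed the 0.1 budget, then decays to 0.325 once the agent stays under it, so the penalty tracks how the agent is actually behaving rather than being fixed in advance.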

This filing comes as agentic AI is all the rage. Beyond call-and-response chatbots, enterprises are looking for ways to get more out of their AI, and autonomous agents that can handle tasks on their own appear to be the answer.

Interest from Big Tech could signal that this trend is more than just a buzzword: Nvidia, Microsoft, Google and Salesforce have all staked their claim in agentic AI with new enterprise products. IBM has released its own agentic tooling, including a system to “supervise” how AI agents get a task done.

But even if this new wave of AI is capable of handling more, it still comes with the same challenges as any AI program, including data security, hallucination, and a tendency toward bias. Tech that keeps these agents in line, as IBM proposes in its patent application, could become increasingly important as enterprises continue to adopt them.