Salesforce Patent Could Recycle AI Model Outputs

Reusing old responses could help save the model energy.


Salesforce wants to make sure its models aren’t repeating themselves.

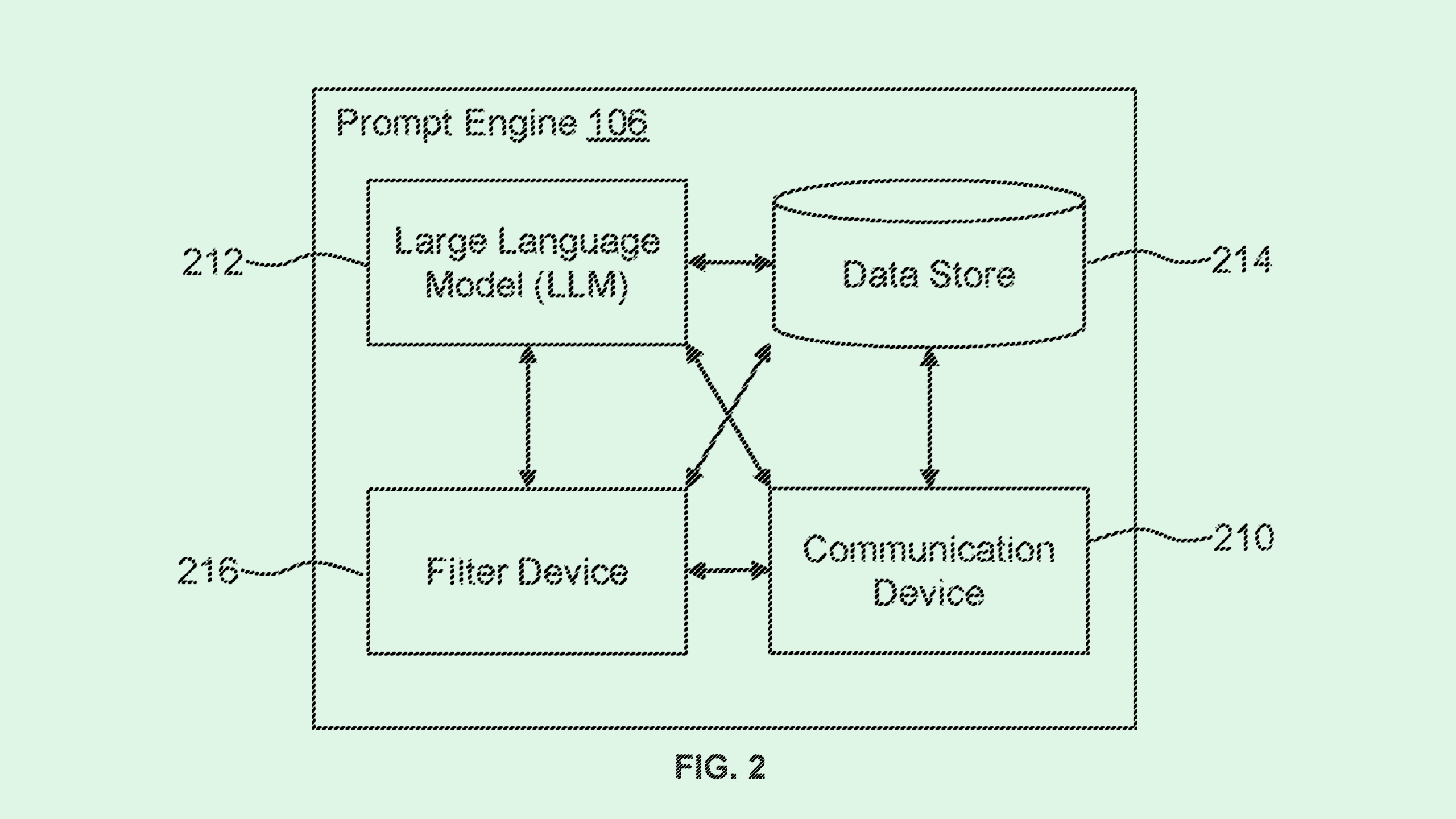

The company is seeking to patent a system for “pre-generative artificial intelligence prompt comparison,” which essentially compares a user’s query with previously submitted queries so the system doesn’t have to generate an answer from scratch when it’s asked a question it has already handled.

“[A large language model] may use any of its training data to formulate a response,” Salesforce said in the filing. “This presents a problem in certain environments, where an LLM is used, but precise language is required.”

As the title of the patent suggests, the system takes an input prompt, compares it with previous prompts stored by the LLM, and scores how similar the new prompt is to those earlier queries. If the score meets or exceeds a similarity threshold, the system reuses an old response, with no generation necessary. If it falls short, the model crafts a new response.
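To make the threshold logic concrete, here is a minimal sketch of a prompt store that reuses a cached response when a new prompt is similar enough to an old one. It is not Salesforce’s implementation: the filing’s similarity measure isn’t described here, so this example assumes a simple bag-of-words cosine similarity as a stand-in for whatever the patent actually uses (a real system would more likely compare embeddings). The names `PromptCache`, `cosine_similarity`, `generate`, and the default `threshold` of 0.8 are all hypothetical.

```python
import math
import re
from collections import Counter


def _vectorize(text: str) -> Counter:
    """Lowercase bag-of-words term counts (an assumed stand-in for a real embedding)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine_similarity(a: str, b: str) -> float:
    """Cosine similarity between two texts' term-count vectors, in [0, 1]."""
    va, vb = _vectorize(a), _vectorize(b)
    dot = sum(va[t] * vb[t] for t in va.keys() & vb.keys())
    norm = math.sqrt(sum(c * c for c in va.values())) * math.sqrt(
        sum(c * c for c in vb.values())
    )
    return dot / norm if norm else 0.0


class PromptCache:
    """Hypothetical prompt store: reuse a stored response if similarity >= threshold."""

    def __init__(self, generate, threshold: float = 0.8):
        self.generate = generate  # callable that actually invokes the model
        self.threshold = threshold
        self.entries: list[tuple[str, str]] = []  # (prompt, response) pairs

    def respond(self, prompt: str) -> tuple[str, bool]:
        """Return (response, reused_flag)."""
        # Find the most similar previously seen prompt.
        best_score, best_response = 0.0, None
        for old_prompt, old_response in self.entries:
            score = cosine_similarity(prompt, old_prompt)
            if score > best_score:
                best_score, best_response = score, old_response
        # Meets or exceeds the threshold: reuse, no generation needed.
        if best_response is not None and best_score >= self.threshold:
            return best_response, True
        # Otherwise generate a fresh response and remember it.
        response = self.generate(prompt)
        self.entries.append((prompt, response))
        return response, False
```

In use, asking “What is my account balance?” and then “what is my account balance” would call the model once and return the cached answer the second time, while an unrelated prompt would fall below the threshold and trigger new generation. The threshold is the key tuning knob: set it too low and the system may return answers to questions that only look alike; set it too high and it rarely saves any work.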

When reusing old responses, the model will apply rules governing what the output can show. For example, it may censor “banned phrases,” such as inappropriate or confidential language, and insert “selected phrases,” meaning precise language that a given field requires.
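A rough sketch of that rule step might look like the following. The article doesn’t say how the filing censors or inserts phrases, so this example makes two labeled assumptions: banned phrases are masked with a placeholder (case-insensitively), and any required phrase that is missing is simply appended to the end of the response. The function name `apply_content_rules` and the `[REDACTED]` mask are hypothetical.

```python
import re


def apply_content_rules(
    response: str,
    banned_phrases: list[str],
    selected_phrases: list[str],
    mask: str = "[REDACTED]",
) -> str:
    """Hypothetical post-processing for a reused response.

    Assumption: banned phrases are masked, and required ("selected")
    phrases are appended if the response doesn't already contain them.
    """
    # Mask every banned phrase, ignoring case; re.escape treats it literally.
    for phrase in banned_phrases:
        response = re.sub(re.escape(phrase), mask, response, flags=re.IGNORECASE)
    # Ensure each required phrase appears at least once.
    for phrase in selected_phrases:
        if phrase.lower() not in response.lower():
            response = f"{response} {phrase}"
    return response
```

For a wealth-management response such as “Your account 12345 is guaranteed to grow.”, banning “guaranteed to grow” and “12345” and requiring “Past performance does not guarantee future results.” would yield “Your account [REDACTED] is [REDACTED]. Past performance does not guarantee future results.” Because the required phrase is only appended when absent, running the rules twice leaves the output unchanged.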

Salesforce’s application identified a few benefits of the tech. For starters, reusing old responses could save the energy the model would otherwise spend generating new phrasing. Applying content rules could also prevent inaccurate responses or data security slip-ups. For example, “in certain fields, such as finance or wealth management, precise language is required and there is a need to ensure that LLM responses are consistent,” the filing noted.

Tech that saves energy and ensures accuracy is increasingly important as companies try to figure out where AI fits without breaking the bank or causing a security meltdown. Salesforce has staked much of its future on agentic AI, which security experts have raised concerns about, so getting things right is crucial as enterprises give these systems more autonomy.