The Drawbacks of Synthetic Data in AI Development

Using fake data is “not a panacea” for the security woes these models face.


There’s no question that AI models are data hungry. But think twice before using synthetics to satiate them.

Synthetic data can be an incredibly helpful tool in the development of AI models, if used in the appropriate context. Because this data isn’t based on real users or situations, it can ease the data privacy issues that AI models often face. But it has its drawbacks.

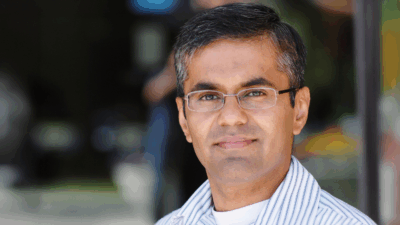

Let’s start with the good stuff. For one, it’s really easy to create good synthetic data that captures many aspects of the authentic data it’s based on, said Bob Rogers, Ph.D., the co-founder of BeeKeeperAI and CEO of Oii.ai. That ease can be particularly helpful in heavily regulated fields such as finance, where the hurdles to using real data slow AI development, he said.

“A synthetic set is a great way to make sure all the pieces connect,” said Rogers. “It’s not a panacea, but it certainly gets you past certain kinds of hurdles.”

However, this data is only “secure to a certain point,” and it takes a “certain amount of sophistication to make a good synthetic set that captures the elements you need,” said Rogers. The catch is that the more sophisticated this data gets, the more closely it mimics authentic data.

- Think of it like a sliding scale: On one end, you have synthetic data that’s not detailed and doesn’t closely reflect the features of the authentic data. While this synthetic data is safer to use, it’s usually not particularly useful, said Rogers.

- The other end of the scale, however, is synthetic data that so closely mimics its origins that it “basically exposes everything,” said Rogers. Though this is far more useful than less-detailed data, it raises security risks.

“It’s either not expressive enough to build good AI, or it’s so expressive that it could actually be risking security and it’s basically no better than anonymized data – which is also very easily re-identified,” said Rogers.
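To make the sliding scale concrete, here is a toy Python sketch. It assumes a deliberately naive, hypothetical generator (`make_synthetic`) that simply adds Gaussian noise to real rows, with the noise level standing in for where a data set sits on the scale. It uses the distance from synthetic rows to the closest real record as a rough exposure signal and drift in column means as a crude utility signal; both are illustrative choices, not Rogers’ method or a production privacy test.

```python
import math
import random


def make_synthetic(real_rows, noise_scale, seed=0):
    """Hypothetical, deliberately naive generator: add Gaussian noise to each real row.

    noise_scale plays the role of the 'sliding scale': small values produce rows
    that sit almost on top of real records, large values produce rows that are
    safer but less faithful.
    """
    rng = random.Random(seed)
    return [tuple(x + rng.gauss(0.0, noise_scale) for x in row) for row in real_rows]


def min_distance_to_real(synthetic_rows, real_rows):
    """Smallest Euclidean distance between any synthetic row and any real row.

    A value near zero means at least one synthetic record is nearly a copy of a
    real one: the 'basically exposes everything' end of the scale.
    """
    return min(math.dist(s, r) for s in synthetic_rows for r in real_rows)


def mean_error(synthetic_rows, real_rows):
    """Crude utility proxy: average absolute drift of the column means."""
    n_cols = len(real_rows[0])
    error = 0.0
    for c in range(n_cols):
        real_mean = sum(r[c] for r in real_rows) / len(real_rows)
        syn_mean = sum(s[c] for s in synthetic_rows) / len(synthetic_rows)
        error += abs(real_mean - syn_mean)
    return error / n_cols


if __name__ == "__main__":
    # Toy "real" data: 50 two-column records drawn at random.
    rng = random.Random(42)
    real = [(rng.uniform(0, 100), rng.uniform(0, 100)) for _ in range(50)]
    for noise in (0.01, 1.0, 20.0):
        synthetic = make_synthetic(real, noise)
        print(f"noise={noise:>5}: closest-record distance="
              f"{min_distance_to_real(synthetic, real):.3f}, "
              f"mean drift={mean_error(synthetic, real):.3f}")
```

At the lowest noise setting, some synthetic rows land almost exactly on real records (a closest-record distance near zero), while the highest setting drifts furthest from the real column means. Real generators are far more sophisticated, but the same tension applies.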

Even with these pain points, synthetic data has use cases. One big one is testing AI systems, said Rogers. For example, using synthetic data to “pressure test” how a model may behave at scale before it is trained on real data could save a lot of time and effort if the setup ends up crashing, he said.

“Check the plumbing. Once the plumbing works, then you go into the real data and you build the models off that in a secure way,” he said.
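A minimal sketch of “checking the plumbing” in Python, assuming a hypothetical finance-style schema (`Transaction`) and hypothetical pipeline steps (`featurize`, `train_stub`). Fake records flow through the same steps real data would later take, so schema mismatches or crashes at volume show up before any sensitive data is loaded.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Transaction:
    # Hypothetical schema for a finance-style data set.
    account_id: str
    amount: float
    is_fraud: bool


def synthetic_transactions(n, seed=0):
    """Generate schema-valid fake transactions; no real customer appears in them."""
    rng = random.Random(seed)
    return [
        Transaction(
            account_id=f"ACCT-{rng.randrange(10_000):05d}",
            amount=round(rng.lognormvariate(3.0, 1.0), 2),
            is_fraud=rng.random() < 0.02,
        )
        for _ in range(n)
    ]


def featurize(txn):
    """Hypothetical pipeline step: turn one record into a numeric feature vector."""
    return [txn.amount, math.log1p(txn.amount)]


def train_stub(features, labels):
    """Stand-in for model training: verifies inputs line up and returns summary stats."""
    if len(features) != len(labels):
        raise ValueError("features and labels are misaligned")
    return {"n_rows": len(features), "fraud_rate": sum(labels) / len(labels)}


def check_plumbing(n_rows=100_000):
    """Run every pipeline step end to end on synthetic data before touching real data."""
    rows = synthetic_transactions(n_rows)
    features = [featurize(t) for t in rows]
    labels = [t.is_fraud for t in rows]
    summary = train_stub(features, labels)
    if summary["n_rows"] != n_rows:
        raise RuntimeError("rows were lost somewhere in the pipeline")
    return summary


if __name__ == "__main__":
    print(check_plumbing())
```

Once a run like this passes at the volumes you expect, the same pipeline can be pointed at real data under the usual security controls.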

But just because this data is fake doesn’t mean security can be any less rigorous, said Rogers. A chief data officer, a chief AI officer or just “someone who understands synthetic data” needs to monitor the outputs of models built on this data for security slip-ups. Differential privacy, a technique that adds carefully calibrated noise so that the presence or absence of any one person’s record has little effect on the results, can add another layer of security to synthetic data, he said.
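One common way to combine the two ideas is to generate synthetic values from differentially private statistics rather than from the raw data. The Python sketch below does this for a single categorical column using the Laplace mechanism; the function names and parameters are hypothetical, and it is a teaching sketch rather than a hardened implementation (naive floating-point Laplace sampling has known weaknesses, so production systems should rely on a vetted differential privacy library).

```python
import random
from collections import Counter


def laplace(scale, rng):
    # The difference of two i.i.d. exponential draws with rate 1/scale
    # is Laplace-distributed with mean 0 and the given scale.
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def dp_synthetic_column(values, categories, epsilon, n_out, seed=None):
    """Sample n_out synthetic values from an epsilon-DP noisy histogram.

    `categories` must be fixed in advance (public), not derived from the data.
    Adding or removing one record changes exactly one bin count by 1, so the
    histogram's sensitivity is 1 and Laplace noise with scale 1/epsilon per bin
    gives epsilon-differential privacy. Clipping and sampling afterwards are
    post-processing and do not weaken that guarantee.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    rng = random.Random(seed)
    counts = Counter(values)
    noisy = [max(0.0, counts[c] + laplace(1.0 / epsilon, rng)) for c in categories]
    if sum(noisy) == 0:
        noisy = [1.0] * len(categories)  # fall back to uniform if noise zeroed every bin
    return rng.choices(categories, weights=noisy, k=n_out)


if __name__ == "__main__":
    real_plans = ["basic"] * 70 + ["premium"] * 25 + ["enterprise"] * 5
    fake = dp_synthetic_column(
        real_plans, ["basic", "premium", "enterprise"], epsilon=0.5, n_out=100, seed=7
    )
    print(Counter(fake))
```

A smaller `epsilon` means more noise and stronger privacy but a less faithful synthetic column, which is the same sliding-scale trade-off Rogers describes.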

“Train your people to at least know where the hot spots are,” said Rogers. “Not everybody has to be an expert in data security, but everyone should know when they’re treading on thin ice.”