Workplaces Face a Balancing Act with Generative AI Monitoring

Where should businesses draw the line with large language model utilization?


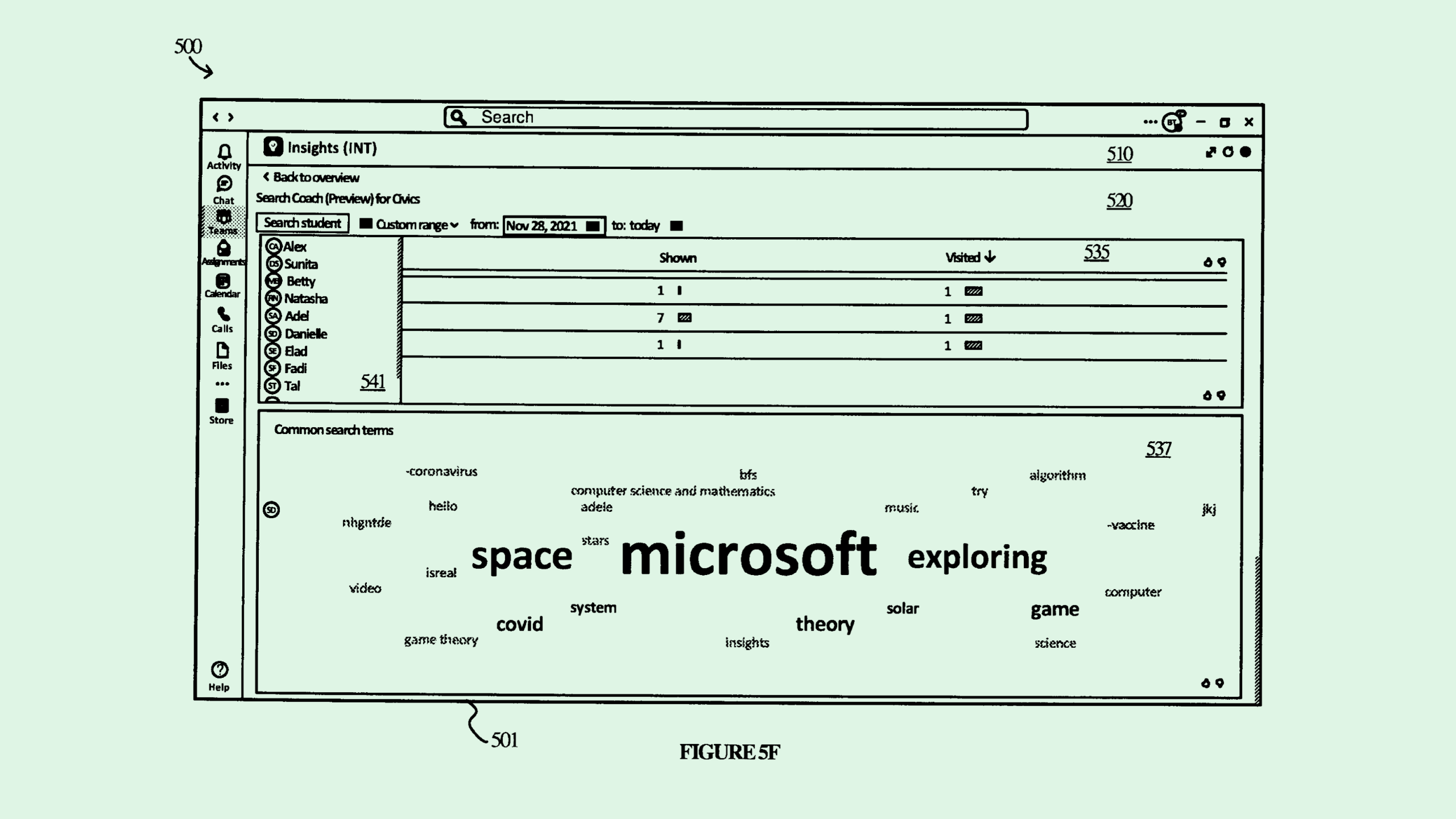

How can you know whether your employees are using AI the right way? Microsoft may have an answer for that.

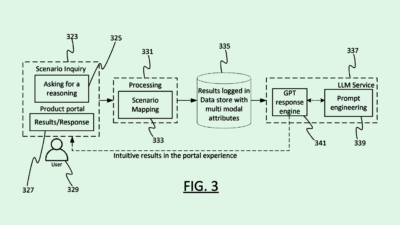

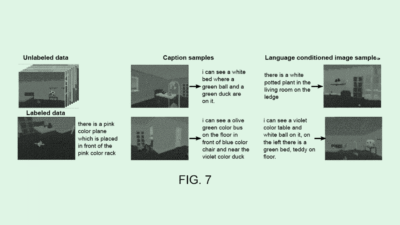

The company is seeking to patent an “insights service for large language model prompting.” Microsoft’s tech is intended to observe and understand how a group of people, such as a business, prompt and utilize large language models. “Users who lack proficiency with respect to prompting are at risk of falling behind in their careers and education,” Microsoft said in the filing.

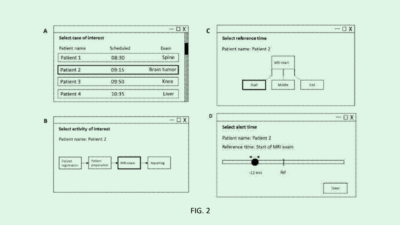

The system would monitor an “observed group” on a “per-user basis,” tracking prompts and replies as well as behavioral metrics such as dwell time, when users end a conversation and whether they click links provided by the language model. It may categorize user prompts as on-task, off-task or inappropriate, and rate their quality.
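To make the description concrete, here is a minimal Python sketch of what a per-user record and summary could look like. It is an illustration only, not the method in Microsoft's filing: the names `PromptEvent`, `Category` and `summarize_by_user`, the field layout and the 0-to-1 quality scale are all assumptions.

```python
from collections import defaultdict
from dataclasses import dataclass
from enum import Enum


class Category(Enum):
    # The three labels named in the filing, as reported above.
    ON_TASK = "on-task"
    OFF_TASK = "off-task"
    INAPPROPRIATE = "inappropriate"


@dataclass
class PromptEvent:
    """One prompt/reply exchange for one user (hypothetical schema)."""
    user_id: str
    prompt: str
    reply: str
    dwell_seconds: float      # time spent on the reply
    clicked_link: bool        # whether a link in the reply was clicked
    ended_conversation: bool  # whether the user stopped after this reply
    category: Category
    quality: float            # assumed scale: 0.0 (poor) to 1.0 (excellent)


def summarize_by_user(events):
    """Group events by user and compute simple per-user metrics."""
    grouped = defaultdict(list)
    for event in events:
        grouped[event.user_id].append(event)

    summary = {}
    for user_id, items in grouped.items():
        count = len(items)
        summary[user_id] = {
            "prompts": count,
            "on_task": sum(e.category is Category.ON_TASK for e in items),
            "link_clicks": sum(e.clicked_link for e in items),
            "avg_dwell_seconds": sum(e.dwell_seconds for e in items) / count,
            "avg_quality": sum(e.quality for e in items) / count,
        }
    return summary
```

In a real system, the category and quality score would come from a classifier or a human reviewer. Here they are simply supplied with each event.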

There are several reasons why an enterprise might want to track how its employees are using AI, said Thomas Randall, advisory director at Info-Tech Research Group. Tracking AI usage could help improve infrastructure and software, and help ensure that model usage “aligns with organizational objectives” and complies with legal requirements, he said.

Monitoring can also help workforces figure out if they’re overutilizing – or underutilizing – the AI at their disposal, he said. While that balance differs between companies and sectors, AI generally shouldn’t be doing workers’ jobs entirely, Randall said.

“If it augments your position so that it gets rid of the drudge work so that you can focus on being more attentive to the delivery of service, that’s going to be the best use of AI,” he said.

But enterprises may walk a fine line in monitoring how employees use these models, Randall said. Given that these models often struggle with data security flaws, understanding who owns which data is important in enterprise AI strategies.

Another major factor is employee consent: “Are they aware that they’re being monitored, and are they empowered to have a say in what kind of data they’re happy with being tracked?” Randall said. “Especially if specific individuals can be called out, versus if it’s aggregated and anonymized to the extent possible.”
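The distinction Randall draws between calling out individuals and reporting aggregated data can be sketched in a few lines of Python. This is a hypothetical illustration, not a technique described in the filing or by Randall: the function name `aggregate_by_team`, the `(user_id, team, category)` record shape and the minimum group size of five are assumptions, and suppressing small groups reduces the risk of re-identification without guaranteeing anonymity.

```python
from collections import Counter, defaultdict

# Assumed threshold: teams with fewer distinct users than this are not
# reported, because small groups make individuals easier to identify.
MIN_GROUP_SIZE = 5


def aggregate_by_team(records, min_group_size=MIN_GROUP_SIZE):
    """Count prompt categories per team and drop user identifiers.

    `records` is an iterable of (user_id, team, category) tuples.
    The result maps each reportable team to its category counts.
    """
    users_per_team = defaultdict(set)
    counts_per_team = defaultdict(Counter)

    for user_id, team, category in records:
        users_per_team[team].add(user_id)
        counts_per_team[team][category] += 1

    # Keep only teams large enough to report; user IDs never leave here.
    return {
        team: dict(counts)
        for team, counts in counts_per_team.items()
        if len(users_per_team[team]) >= min_group_size
    }
```

The report contains only team-level counts, so no single employee's prompts appear in the output.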