Happy Thursday, and welcome to CIO Upside.

Today: How much software is too much software? A glut of SaaS applications, combined with a weak security culture, could be putting your enterprise at risk. Plus: Tech talent wars may be fueling innovation at the expense of safety; and Microsoft’s recent patent filing could let users DIY machine learning models.

Let’s jump in.

‘SaaS Sprawl’ Heightens Data Security Risks for Enterprises

The bigger the tech stack, the wider the attack surface.

In recent months, several enterprises have faced attacks on Salesforce-hosted databases, leading to the exposure of a wealth of personal data. The latest victim is HR technology firm Workday, which confirmed on Friday that an unspecified amount of personal information was stolen from its database, including names, email addresses and phone numbers.

The breach, in which hackers posed as IT and HR personnel to get employees to share their credentials, follows similar social engineering attacks on Google, Cisco, Allianz Life and airline Qantas.

The string of incidents underscores the need to tackle security issues from two sides: the technology itself and the workforce using it, said Dave Meister, global head of managed services at security firm Check Point.

- From the technology side, enterprises are falling prey to “SaaS sprawl,” Meister said: having so many applications and platforms that they can’t properly track and monitor them. “There’s an assumption by a lot of organizations that just because it’s SaaS, that it’s protected, and that’s just not the case,” he said.

- From the workforce side, the point of entry for hackers tends to be employees falling for phishing emails or robocalls, he said, and many organizations haven’t cultivated a strong enough “culture of security” to prevent this.

“There’s not really a lot of tech that’s going to stop this type of attack,” said Meister. “If somebody’s calling up, requesting to get access to something, it comes down to process and procedure and company policy that’s going to prevent this type of social engineering from being successful.”

Meanwhile, AI stands to make both challenges worse, Meister said: As companies deploy models without strong policies governing the data that can go into them, the attack surface widens and the data gets further from their control.

But enterprises can avoid that fate, said Meister. The first step is understanding your organization’s overall “SaaS posture” by taking stock of how many apps your company uses – and how many it actually needs on a regular basis. Then, find a platform that allows for continuous monitoring and auditing of all the data going in and out. “Organizations need to have a vetting process for any SaaS tools that they’re taking on,” he said.
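For teams that want to see what “taking stock” could look like in practice, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `SaasApp` record, the 90-day staleness window, the app names and the dates are hypothetical, and none of it comes from Meister or Check Point. It simply sorts an inventory into apps to keep, apps that look unused and apps that have never been vetted.

```python
from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class SaasApp:
    """Hypothetical inventory record for one SaaS application."""
    name: str
    last_used: date  # most recent login seen anywhere in the company
    vetted: bool     # True if the app has passed a security review


def review_inventory(apps, today, stale_after_days=90):
    """Sort apps into keep / retire / needs_vetting buckets.

    The 90-day default is an arbitrary illustrative threshold.
    """
    cutoff = today - timedelta(days=stale_after_days)
    report = {"keep": [], "retire": [], "needs_vetting": []}
    for app in apps:
        if app.last_used < cutoff:
            # Nobody has logged in recently: candidate for removal.
            report["retire"].append(app.name)
        elif not app.vetted:
            # In active use but never reviewed: send through vetting.
            report["needs_vetting"].append(app.name)
        else:
            report["keep"].append(app.name)
    return report


# Made-up sample data; the review date is fixed so the result is repeatable.
INVENTORY = [
    SaasApp("crm", date(2025, 1, 20), vetted=True),
    SaasApp("old-survey-tool", date(2024, 6, 1), vetted=True),
    SaasApp("ai-notetaker", date(2025, 1, 29), vetted=False),
]
REPORT = review_inventory(INVENTORY, today=date(2025, 1, 31))
print(REPORT)
```

A real inventory would pull login and usage data from an identity provider or expense system rather than a hand-written list, and it would feed the continuous monitoring described above rather than replace it.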

The next step is ensuring that security is more than a “tick-box exercise,” Meister said. While most enterprises have some kind of security training in place for employees, the programs are often not taken seriously and have little impact. Organizations need to have security baked into their policies, culture and procedures from the jump to keep information from getting into the wrong hands.

“There needs to be a cultural shift from an individual level and from an organizational level,” said Meister. “We need to establish cultures of protection saying ‘I’m not going to share this unless I explicitly need to.’”

Ensure Compliance Without Sacrificing Productivity

Compliance can feel like a constant battle. Manual monitoring tasks for users, devices, and applications already overwhelm admins; tedious approvals and constant updates worsen this, leading to user frustration and lost productivity.

The solution is to empower your employees to help with compliance, not make it more difficult.

Watch this on-demand webinar from 1Password and DataScan to see how businesses can ensure compliance without sacrificing productivity. You’ll gain practical insights to:

- Empower employees to self-remediate and maintain compliance standards.

- Automate credential and access policies, simplifying compliance.

- Enable admins to easily manage and secure BYOD access.

How the AI Talent War is Worsening an Ethical Dilemma

With the war for AI talent reaching a fever pitch, safety might be getting lost in translation.

A recent study from KnownHost found that fewer than half of AI jobs on the market require employees to follow compliance, responsible governance, or ethical AI practices. As enterprises seek to keep up with the rapid pace of AI development and deployment, some tech developers may view responsible and ethical AI as something holding them back, said Valence Howden, principal advisory director at Info-Tech Research Group.

“Governance should be taught, but isn’t. Ethics should be applied, but aren’t,” said Howden.

Tech giants are continually seeking to one-up each other, pursuing market dominance by stacking their teams. Meta is a prime example, having reorganized its team several times in recent months as it homes in on superintelligence.

- Alongside Microsoft, Amazon and Google, however, Meta ditched its team focused on responsible and ethical AI years ago.

- Enterprises, meanwhile, are feeling the pressure, said Howden. With many “trying so hard to be first” in adopting the tech and nabbing talent, governance and ethics are falling by the wayside.

- “When profit is on the line, people ignore their ethics and values,” said Howden. “It doesn’t matter what you have up in the wall if you never follow it. And they know what you care about by what you do.”

With the AI talent pool slim as it stands – and the technology itself constantly evolving – finding employees with a strong understanding of tech ethics and responsibility isn’t always easy, said Howden. But to build and adopt the tech safely, these skills and values need to be taught and applied internally, he said.

The tech industry has long had a ‘move fast, break things’ mentality, Howden acknowledged, but that may not be applicable to AI given the extent of its capabilities and the gravity of the harm it can cause. Repairing the damage from failing to interlace governance and ethics into technology and workforce development likely won’t be easy.

So how can enterprises keep from steering their AI efforts the wrong way? They have to stop thinking of ethics and innovation as “two opposite things that they have to balance out,” Howden said. Considering governance as something that works in concert with adoption, rather than against it, can help companies spot biased, inaccurate or harmful AI before they scale it.

“There’s this belief that they can come back and fix that later,” he said. “The reality is, some of this isn’t fixable later, but there’s a lot of overconfidence around the ability to do that.”

Microsoft Patent Could Enable Enterprises to DIY AI Models

Do you need to be an AI expert to make your own models?

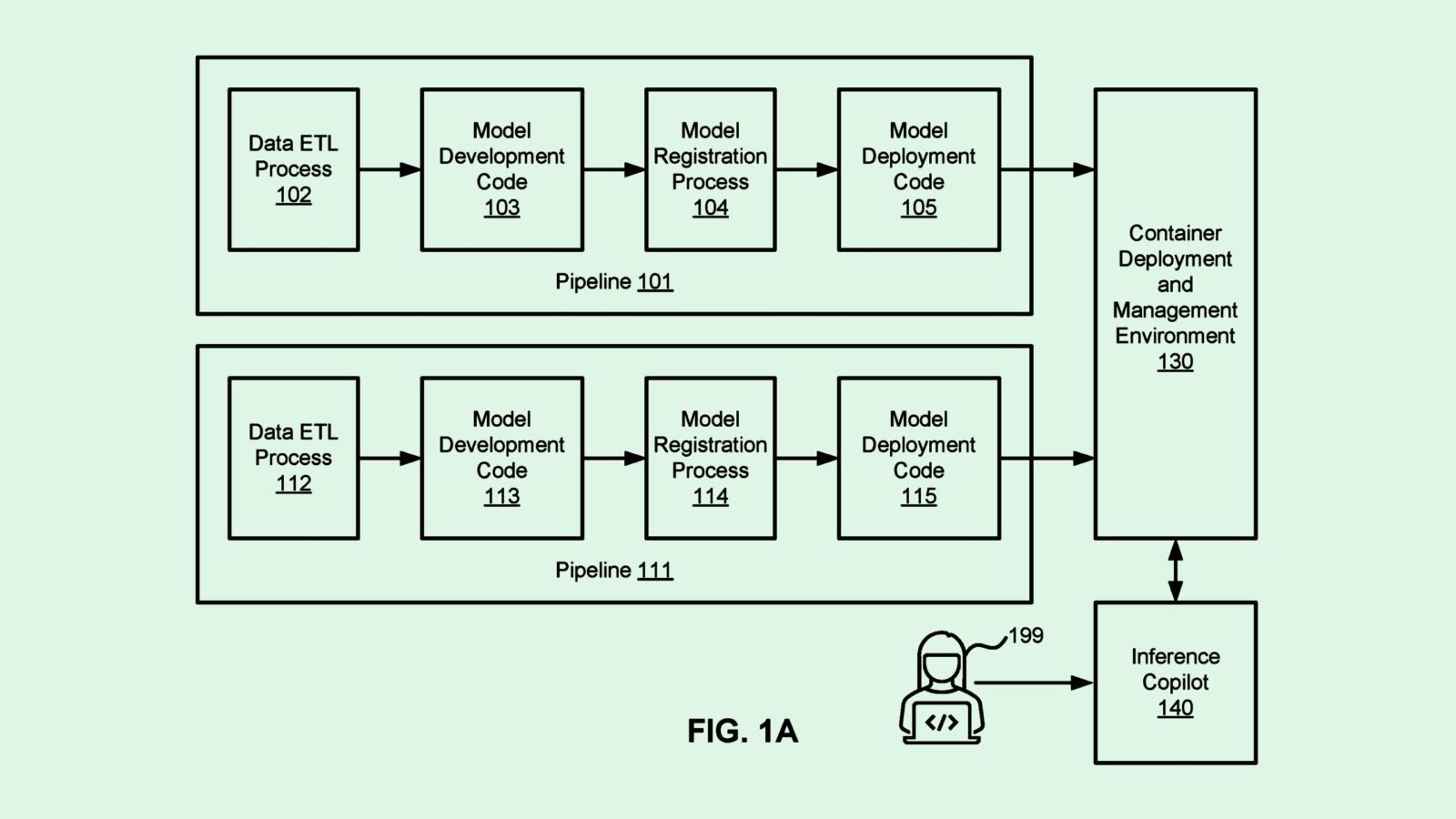

Microsoft may want to lower the barrier to entry. The company is seeking to patent a system for “machine learning development using language models” that basically makes model development as simple as using a chatbot, relying on a natural language processing assistant and reusable machine learning components to help users DIY their own models.

To start, a user would describe in plain language a task that they want completed. The assistant would then parse that to automatically configure a pipeline of machine learning components. Once the pipeline is set up, the assistant tests different variations of it before presenting it to the user.
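The flow described above (describe a task, assemble a pipeline from reusable parts, try variations, surface the best one) can be sketched in a few lines of Python. This is a toy illustration only, not Microsoft’s method: the keyword matcher in `parse_task` stands in for the language-model assistant, and the component names and scores are made up for the example.

```python
from itertools import product

# Hypothetical registry of reusable components, grouped by pipeline stage.
COMPONENTS = {
    "preprocess": ["lowercase", "strip_punctuation"],
    "model": ["keyword_rules", "bag_of_words"],
}


def parse_task(description):
    """Stand-in for the assistant: infer a task type from plain language."""
    text = description.lower()
    if "classify" in text or "categorize" in text:
        return "classification"
    if "summarize" in text:
        return "summarization"
    return "unknown"


def candidate_pipelines(task):
    """Build every preprocess/model combination as a pipeline variation."""
    return [
        {"task": task, "preprocess": pre, "model": model}
        for pre, model in product(COMPONENTS["preprocess"], COMPONENTS["model"])
    ]


def pick_best(pipelines, score):
    """Return the variation with the highest score."""
    return max(pipelines, key=score)


# Made-up scores standing in for a real evaluation run on held-out data.
TOY_SCORES = {
    ("lowercase", "keyword_rules"): 0.61,
    ("lowercase", "bag_of_words"): 0.74,
    ("strip_punctuation", "keyword_rules"): 0.58,
    ("strip_punctuation", "bag_of_words"): 0.70,
}

TASK = parse_task("Classify incoming support tickets by urgency")
PIPELINES = candidate_pipelines(TASK)
BEST = pick_best(PIPELINES, lambda p: TOY_SCORES[(p["preprocess"], p["model"])])
print(TASK, len(PIPELINES), BEST)
```

In a system like the one the filing describes, a language model would handle the parsing step and actual training and testing would replace the lookup table of scores. The sketch only shows how the stages hand off to one another.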

The company noted a number of benefits that the tech could provide, such as cutting development time, lowering compute and storage costs and making development more accessible. “The number of people needed to develop, train, and test machine learning components may be significantly reduced,” Microsoft said in the filing.

Microsoft isn’t the only firm that wants to put AI development into everyone’s hands. Salesforce previously sought to patent a similar invention: a system for “building a customized generative artificial intelligence program,” which details a framework to fine-tune generative models to personalized needs with little technical know-how.

Such tools may become increasingly relevant as the AI talent war heats up. In recent months, tech giants have doled out eye-popping pay packages to poach talent from one another. While Meta has made headlines for continuously shaking up its AI division this summer, Microsoft, too, has been building its arsenal, seeking to poach talent from both Meta and Google DeepMind.

But not all enterprises can afford to score their own AI expertise. As many seek to keep up with the broader AI race, tools like these that help less technically inclined users leverage AI could become a lifeline.

Extra Upside

- Here Comes the Sun: Meta signed a deal to develop a $100 million, 100-megawatt solar farm in South Carolina to power its AI data center in the state.

- Robotic Raise: Field AI, a robotics startup backed by Bill Gates and Nvidia, raised $405 million between two funding rounds.

- Code Monkey: Anthropic debuted a new subscription option that bundles Claude Code into its enterprise offering.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.