Happy Thursday, and welcome to CIO Upside.

Today: A lack of federal rules has left AI regulation in states’ hands, and the resulting patchwork of rules could create roadblocks for enterprises while also inspiring safer AI. Plus: IBM and Moderna’s mRNA research highlights quantum’s capabilities here and now; and Okta’s recent patent application aims to prevent phishing in multifactor authentication.

Let’s take a look.

States Take the Lead in Regulating American AI Development

Before President Trump signed his “One Big Beautiful Bill” into law on July 4, the Senate unexpectedly removed a 10-year prohibition on states enacting or enforcing their own AI laws.

Cutting the AI regulation moratorium from the bill opened the floodgates for states to set their own rules, a movement that has picked up speed across the country. The volume of laws and potential laws, however, makes it difficult for enterprises to keep up, presenting challenges with fragmentation, duplication and governance, experts said.

“A fragmented AI regulatory map across the US will emerge fast,” said Daniel Gorlovetsky, CEO at TLVTech. “States like California, Colorado, Texas and Utah are already pushing forward.”

AI legislation from states is tackling a wide range of issues, from high-risk AI applications to digital replicas, deepfakes, and public sector AI use, said Andrew Pery, ethics evangelist at ABBYY.

Colorado’s AI Act targets “high-risk” systems to prevent algorithmic discrimination in different sectors, with penalties of up to $20,000 per violation. California, meanwhile, is moving forward with myriad AI bills that focus on data transparency, impact assessments and AI safety, especially around consumer-facing applications.

Texas is zeroing in on AI-generated content and safety standards in public services, such as with HB 149, known as the Texas Responsible AI Governance Act. Utah’s SB 149, the Artificial Intelligence Policy Act, requires companies to disclose the use of AI when they interact with consumers, said Gorlovetsky.

Additionally, Tennessee’s Ensuring Likeness, Voice, and Image Security (ELVIS) Act, passed in 2024, “imposes strict limits on the use of AI to replicate a person’s voice or image without consent, targeting unauthorized digital reproductions,” Pery said.

New York is expected to take the lead on financial sector regulations and push for mandatory risk disclosures from large AI developers, said Patrick Murphy, founder and CEO at Togal.AI. Additionally, the state’s legislature passed the Responsible AI Safety and Education Act, which requires large AI developers to prevent widespread harm.

So what does this patchwork of legislation mean for enterprises? The impact will depend on size and AI maturity levels.

- Small businesses will need to start thinking about compliance before using off-the-shelf AI tools in customer-facing roles, said Gorlovetsky, while midsized companies will need to consider legal and data governance strategies state by state.

- Large enterprises will be forced to build compliance into their architecture and devise modular AI deployments that can toggle features depending on local laws, Gorlovetsky said.

- “Bottom line, if you’re building or deploying AI in the US, you need a flexible, state-aware compliance plan — now,” Gorlovetsky said.
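For teams wondering what a "state-aware" toggle might look like in practice, here is a minimal Python sketch. Everything in it is an assumption made for illustration: the `STATE_RULES` table, the control names and the `Deployment` type are hypothetical, and the entries loosely paraphrase the descriptions above rather than any statutory text.

```python
from dataclasses import dataclass

# Hypothetical rule table. Control names are invented for illustration and
# only paraphrase how the article describes each state's law.
STATE_RULES = {
    "UT": {"disclose_ai_to_consumers"},       # disclosure when AI interacts with consumers
    "CO": {"high_risk_discrimination_review"},  # "high-risk" systems, algorithmic discrimination
    "TN": {"consent_for_voice_or_likeness"},  # ELVIS Act, voice or image replication
}


@dataclass(frozen=True)
class Deployment:
    """One AI feature set as deployed in a single state (hypothetical type)."""
    state: str
    enabled_controls: frozenset


def required_controls(state: str) -> set:
    """Return the controls this sketch associates with a state (empty if unlisted)."""
    return set(STATE_RULES.get(state.upper(), set()))


def missing_controls(deployment: Deployment) -> set:
    """Return required controls that the deployment has not switched on."""
    return required_controls(deployment.state) - set(deployment.enabled_controls)


if __name__ == "__main__":
    utah = Deployment(state="UT", enabled_controls=frozenset())
    print(missing_controls(utah))
```

Keeping the rules in one table, separate from the product code, is the point of the design: when a state changes its requirements, the table changes and the deployment check stays the same.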

Despite the challenges, the regulations do not necessarily translate into innovation loss. Rather, they can be leveraged to build safer and better AI. Enterprises can stay compliant by keeping inventories of all components involved in the development, training and deployment of AI systems.

“If the US wants to stay ahead in the global AI race, it’s not just about building smart tools,” said Murphy. “It’s about proving we can govern AI responsibly without holding back innovation and building confidence in AI without crushing startups or overwhelming smaller firms.”

mRNA Research by IBM, Moderna Shows Quantum’s Present-Day Uses

The timeline for scaling quantum might be murky, but the tech is being put to use in the here and now.

IBM and Moderna released research on Thursday advancing the use of quantum computing in the development of mRNA medicines, a rapidly growing field whose technology was used in the development of the Covid-19 vaccine. The research uses both classical computing and quantum computing methods to tackle increasingly complex problems.

Quantum computing was used to complement and extend the capabilities of classical algorithms in identifying “biological mechanisms involved in a disease” as a means of developing mRNA medicines, according to the report. That involved “mapping” the astronomical number of ways that mRNA molecules can fold, Sarah Sheldon, senior manager of applied quantum science at IBM, told CIO Upside.

“While they have very good solutions for this today with classical computers, it’s something that gets harder and harder as you look at more complex problems,” Sheldon said. “As this mRNA sequence link gets longer, it gets exponentially harder.”

The work, she said, plays well to quantum’s strength in optimization:

- In the context of medicine, this means that quantum can more rapidly tackle exponentially complex research in drug discovery. “We’re pushing the boundaries of the size of the problems we can look at with quantum,” said Sheldon.

- And quantum is an “interdisciplinary field,” she added. The next step is figuring out how to translate these optimization and efficiency gains into other domains. The tech is a natural fit for things like chemistry, materials science and high-energy physics, she noted.

- “It’s really about finding the right problems for the algorithms that we have,” she said.

There are still plenty of barriers to scaling this tech, including its sensitivity to environmental factors such as temperature and “noise” that can knock it out of the delicate superposition on which it depends. But as the tech develops at an increasingly fast rate with the attention of tech giants like Google, Amazon, Microsoft and IBM, the job now is to figure out how to best put it to use.

“We work in parallel,” she said. “We always are trying to build bigger and better hardware, improving the quality as well as the size of our systems … And then, at the same time, we keep working on algorithm development.”

Okta Patent Disrupts AI-Fueled Phishing Attacks

Hackers love to go phishing.

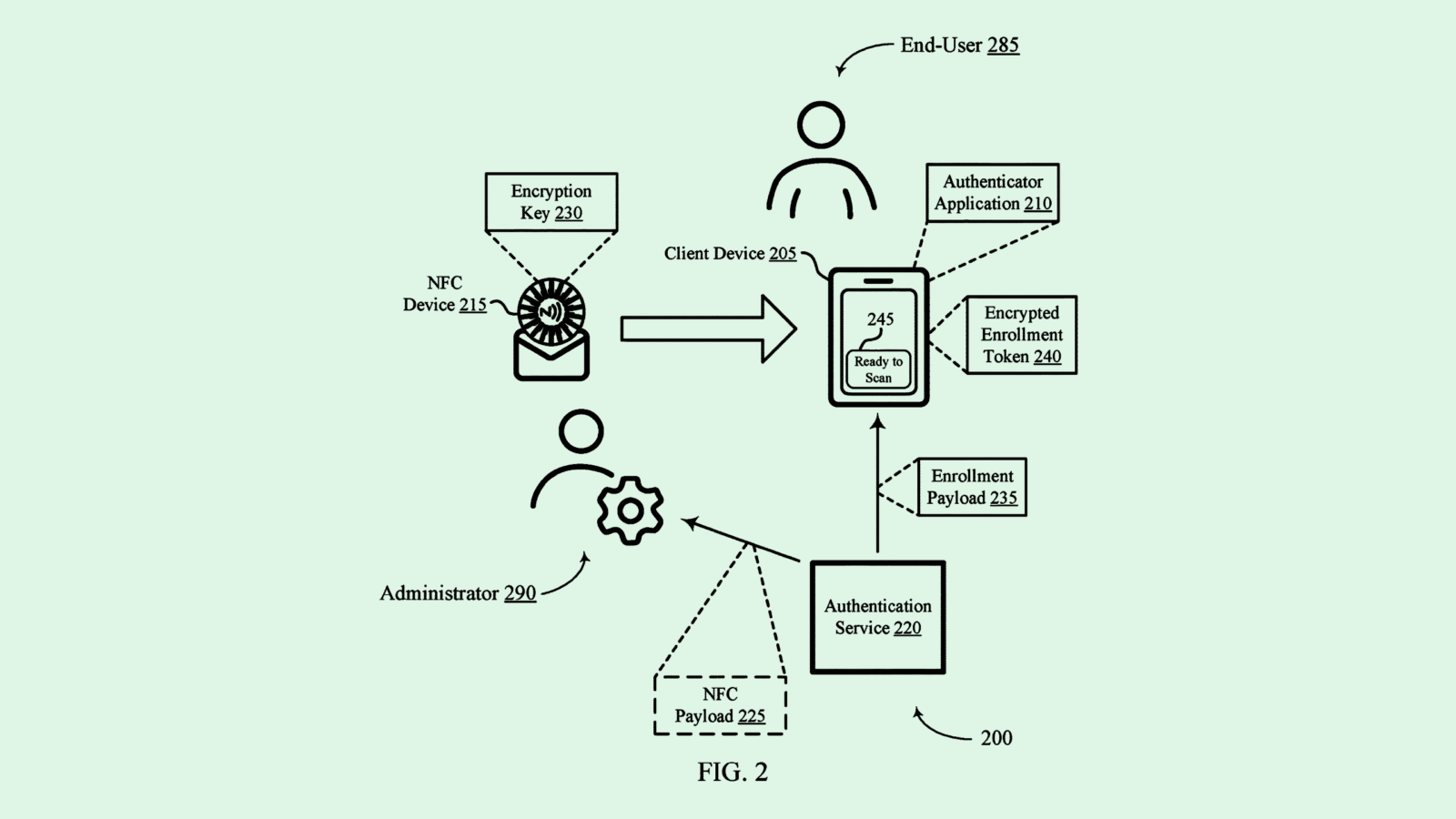

Okta wants to keep you from taking the bait. The company filed a patent application for “techniques for phishing-resistant enrollment and on-device authentication” that, to put it simply, fortifies a user’s enrollment into authentication apps such as Okta Verify against phishing attempts.

“Some enrollment channels may be susceptible to phishing attacks in which an attacker may intercept sensitive data,” said Okta. If a hacker intercepts a user’s enrollment into an authentication app, the hacker may be able to register their own device as a trusted one, intercepting multifactor authentication and quietly compromising the user’s account.

Okta’s patent seeks to limit that: First, when a user signs up for an authentication service, Okta’s tech encrypts the “token,” or the special code used for verification of a device. That encryption ensures that a hacker can’t use that token if it’s stolen.

Additionally, the system relies on a secure encryption key stored on an “NFC device,” or a piece of hardware that needs to be physically close to the enrolling device to verify it. The physical hardware element prevents a hacker from remotely compromising device enrollment.

Okta’s patent highlights a growing trend in cybersecurity: Phishing attacks are getting more sophisticated. AI has offered an expanded tool chest that can help threat actors create convincing bait. While setting up multifactor authentication is vital in preventing phishing attacks, security professionals prefer certain methods of authentication to others.

According to the 2025 Customer Identity Trends Report by Auth0, a subsidiary of Okta, biometric methods, like fingerprinting and face ID, were ranked as the most secure means of login by professionals surveyed. Authenticator apps, meanwhile, ranked third. Okta’s patent could add a layer of safety to authentication apps as threat actors become smarter.

“While legacy authentication techniques often imposed a tradeoff, modern approaches combine phishing-resistant security with the convenience of a fingerprint or facial scan, or the tap of a button on an authenticator app,” the report notes.

Extra Upside

- Chips Abroad: After the US reversed its ban on Nvidia selling its H20 chips in China, the company wants to sell more advanced chips in the country.

- Shipping to Space: Amazon has launched more of its Project Kuiper internet satellites into space, with help from a SpaceX Falcon 9 rocket.

- Hiring Spree: Meta has reportedly courted two more prominent OpenAI researchers to its Superintelligence lab.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.