Google’s Liveness Detectors Aim to Combat Deepfakes

The company is seeking two patents to detect whether biometrics have been faked, one for fingerprint data and one for voice data.


Google wants to make sure you’re the real deal: The company is seeking two patents to detect whether biometrics have been faked, one for fingerprint data and one for voice data.

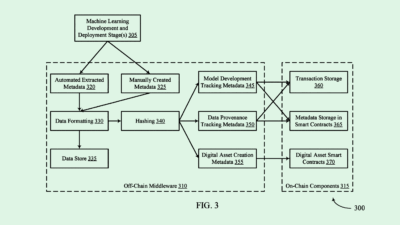

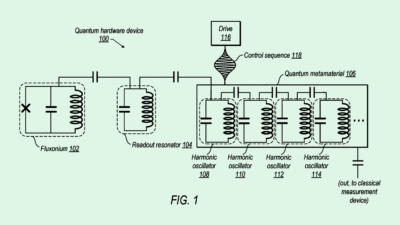

To check whether your fingerprints are legit, Google is looking to use “localized illumination” as part of its biometric authentication systems. This tech adjusts the brightness and color of the light shining on a user’s fingerprint to create “illumination patterns,” which highlight the ridges and valleys on a finger. The system then captures the fingerprint’s reflection of this light.

That reflection is then analyzed for authenticity, and the fingertip itself may be analyzed for “liveness characteristics,” such as “skin spectroscopy, blood oxygen saturation analysis, (or) heart rate analysis,” Google noted. In addition to checking whether the print was made by a real finger, the system checks if it matches an “enrolled print” to decide whether or not to grant access.
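The two checks described above, a liveness test plus a match against the enrolled print, can be illustrated with a minimal Python sketch of the final access decision. It assumes, hypothetically, that the reflected-light image has already been reduced to a numeric embedding and that each liveness signal (heart rate, blood oxygen and so on) has been turned into a score between 0 and 1. The class, function names, similarity measure and thresholds are all illustrative and are not taken from Google’s filing.

```python
import math
from dataclasses import dataclass


@dataclass
class FingerprintCapture:
    # HYPOTHETICAL inputs: an embedding of the reflected-light image and
    # liveness scores in [0, 1] (e.g. skin spectroscopy, SpO2, heart rate).
    embedding: list[float]
    liveness_scores: dict[str, float]


def cosine_similarity(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def grant_access(
    capture: FingerprintCapture,
    enrolled_embedding: list[float],
    liveness_threshold: float = 0.5,  # illustrative value
    match_threshold: float = 0.9,     # illustrative value
) -> bool:
    # Check 1: at least one liveness signal exists and every signal looks live.
    scores = capture.liveness_scores.values()
    is_live = bool(capture.liveness_scores) and all(s >= liveness_threshold for s in scores)
    # Check 2: the print matches the enrolled template.
    matches = cosine_similarity(capture.embedding, enrolled_embedding) >= match_threshold
    return is_live and matches


# Example usage with made-up numbers.
enrolled = [0.2, 0.9, 0.4]
live_finger = FingerprintCapture([0.21, 0.88, 0.41], {"heart_rate": 0.8, "spo2": 0.7})
silicone_copy = FingerprintCapture([0.21, 0.88, 0.41], {"heart_rate": 0.1, "spo2": 0.2})
print(grant_access(live_finger, enrolled))    # True
print(grant_access(silicone_copy, enrolled))  # False
```

Requiring both checks means a perfect replica of an enrolled print still fails if it shows no signs of life, and a live finger still fails if it isn’t the enrolled one.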

Meanwhile, Google also wants to check that your voice is really you. The company wants to patent “self-supervised speech representations” to help detect fake audio. Essentially, this system uses multiple AI models to detect whether speech it hears is synthetic or real.

First, a self-supervised model, trained only on samples of “human-originated speech,” extracts features from a user’s audio input, making it easier for the next model to interpret. That data is then sent to a “shallow discriminator model,” which is trained on synthetic speech. The second model scores the speech data, and if the score crosses a threshold, the speech is deemed synthetic.
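The two-stage design can be sketched as a toy in Python, under loudly stated assumptions. `toy_encoder` is a hypothetical stand-in for the frozen self-supervised model (a real one would be a large neural network producing rich embeddings). The shallow discriminator is assumed here to be plain logistic regression trained on labeled examples of both human and synthetic clips, and the four-sample “clips” are invented. None of the names or numbers come from Google’s filing; the sketch only shows how features flow from a fixed encoder into a small scoring head and then through a threshold.

```python
import math
from typing import Callable, Sequence


def sigmoid(z: float) -> float:
    # Numerically stable logistic function.
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def toy_encoder(waveform: Sequence[float]) -> list[float]:
    # HYPOTHETICAL stand-in for a frozen self-supervised speech encoder.
    # Returns a single feature: the mean absolute change between samples.
    diffs = [abs(b - a) for a, b in zip(waveform, waveform[1:])]
    return [sum(diffs) / len(diffs)]


def train_shallow_discriminator(
    features: Sequence[list[float]], labels: Sequence[int],
    lr: float = 0.5, epochs: int = 500,
) -> tuple[list[float], float]:
    # Logistic regression (label 1 = synthetic) fit with plain SGD.
    # Only this small head is trained; the encoder stays frozen.
    w = [0.0] * len(features[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            p = sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)
            err = p - y  # gradient of the log loss w.r.t. the logit
            w = [wi - lr * err * xi for wi, xi in zip(w, x)]
            b -= lr * err
    return w, b


def synthetic_score(waveform: Sequence[float], encoder: Callable, w: list[float], b: float) -> float:
    x = encoder(waveform)
    return sigmoid(sum(wi * xi for wi, xi in zip(w, x)) + b)


def is_synthetic(waveform, encoder, w, b, threshold: float = 0.5) -> bool:
    # Flag the utterance when its score crosses the threshold.
    return synthetic_score(waveform, encoder, w, b) >= threshold


# Invented toy data: "human" clips are jagged, "synthetic" clips are smooth.
human_clips = [[1.0, -1.0, 1.0, -1.0], [0.9, -0.9, 0.9, -0.9]]
synthetic_clips = [[0.1, 0.1, 0.1, 0.1], [0.1, 0.2, 0.1, 0.2]]
feats = [toy_encoder(c) for c in human_clips + synthetic_clips]
w, b = train_shallow_discriminator(feats, [0, 0, 1, 1])
print(is_synthetic([1.0, -1.0, 1.0, -1.0, 1.0], toy_encoder, w, b))  # False
print(is_synthetic([0.1, 0.12, 0.1, 0.12], toy_encoder, w, b))       # True
```

In this sketch, keeping the encoder fixed means only the tiny scoring head has to be retrained when new kinds of synthetic speech show up; whether Google’s system is updated that way is not stated in the filing as described here.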

“Speech biometrics is commonly used for speaker verification,” Google said in its filing. “However, with the advent of synthetic media (e.g., ‘deepfakes’), it is critically important for these systems to accurately determine when a speech utterance includes synthetic speech.”

Biometrics are safer than your average password, but having them faked or stolen can put a user in a far more dangerous situation than a password hack. A password can easily be changed, but a fingerprint, a face scan or a voice biometric can’t be replaced, said Jason Oeltjen, VP of product management at Ping Identity.

Voice biometrics in particular are a growing concern as deepfake audio tools become more popular and realistic, said Oeltjen. An August study from University College London found that many people struggle to tell AI voices from real ones: participants correctly identified deepfaked voices only 73% of the time.

Because AI is also being used to fight this problem, in cybersecurity “AI is both like a weapon for good and evil,” said Oeltjen. “(Security companies) are trying to figure out how to weaponize AI for the positive side of these things, to do better at things like what Google is proposing here.”

The way to fight this kind of vulnerability is to add layers of security, said Oeltjen. That means taking a “multi-modal” approach to security by asking for multiple kinds of biometrics, such as a voice print and a facial scan, he said. “That’s one of the things that I think our most security-sensitive customers are doing,” he added.
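A multi-modal policy like the one Oeltjen describes can be reduced to a simple rule: every required biometric must both match the enrolled user and pass its own spoof check. The sketch below assumes each modality has already produced a match score and a spoof score. The data class, modality names and thresholds are hypothetical and not drawn from any particular vendor’s product.

```python
from dataclasses import dataclass
from typing import Iterable


@dataclass(frozen=True)
class ModalityResult:
    name: str           # illustrative labels such as "voice" or "face"
    match_score: float  # similarity to the enrolled template, 0..1
    spoof_score: float  # estimated probability the sample is fake, 0..1


def authenticate(
    results: Iterable[ModalityResult],
    required: tuple[str, ...] = ("voice", "face"),
    match_threshold: float = 0.8,  # illustrative value
    spoof_threshold: float = 0.5,  # illustrative value
) -> bool:
    by_name = {r.name: r for r in results}
    for name in required:
        r = by_name.get(name)
        if r is None:
            return False  # a required modality was not provided
        if r.match_score < match_threshold or r.spoof_score >= spoof_threshold:
            return False  # wrong person, or the sample looks fake
    return True


genuine = [ModalityResult("voice", 0.93, 0.10), ModalityResult("face", 0.90, 0.05)]
# An undetected voice deepfake paired with the attacker's own face.
voice_only_attack = [ModalityResult("voice", 0.95, 0.20), ModalityResult("face", 0.30, 0.10)]
print(authenticate(genuine))            # True
print(authenticate(voice_only_attack))  # False
```

Under this rule, a deepfaked voice that slips past the voice check still fails unless the attacker can also present a face that matches the enrolled user.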

And while Google likely won’t monetize this kind of tech, adding security layers and tools that catch these attacks will only make the company look better, especially to high-value enterprise customers.