IBM Patent Seeks to Help AI Models ‘Unlearn’

Enterprises are still grappling with balancing innovation and risk as the AI race rages on.


IBM wants to help AI models pick up good habits.

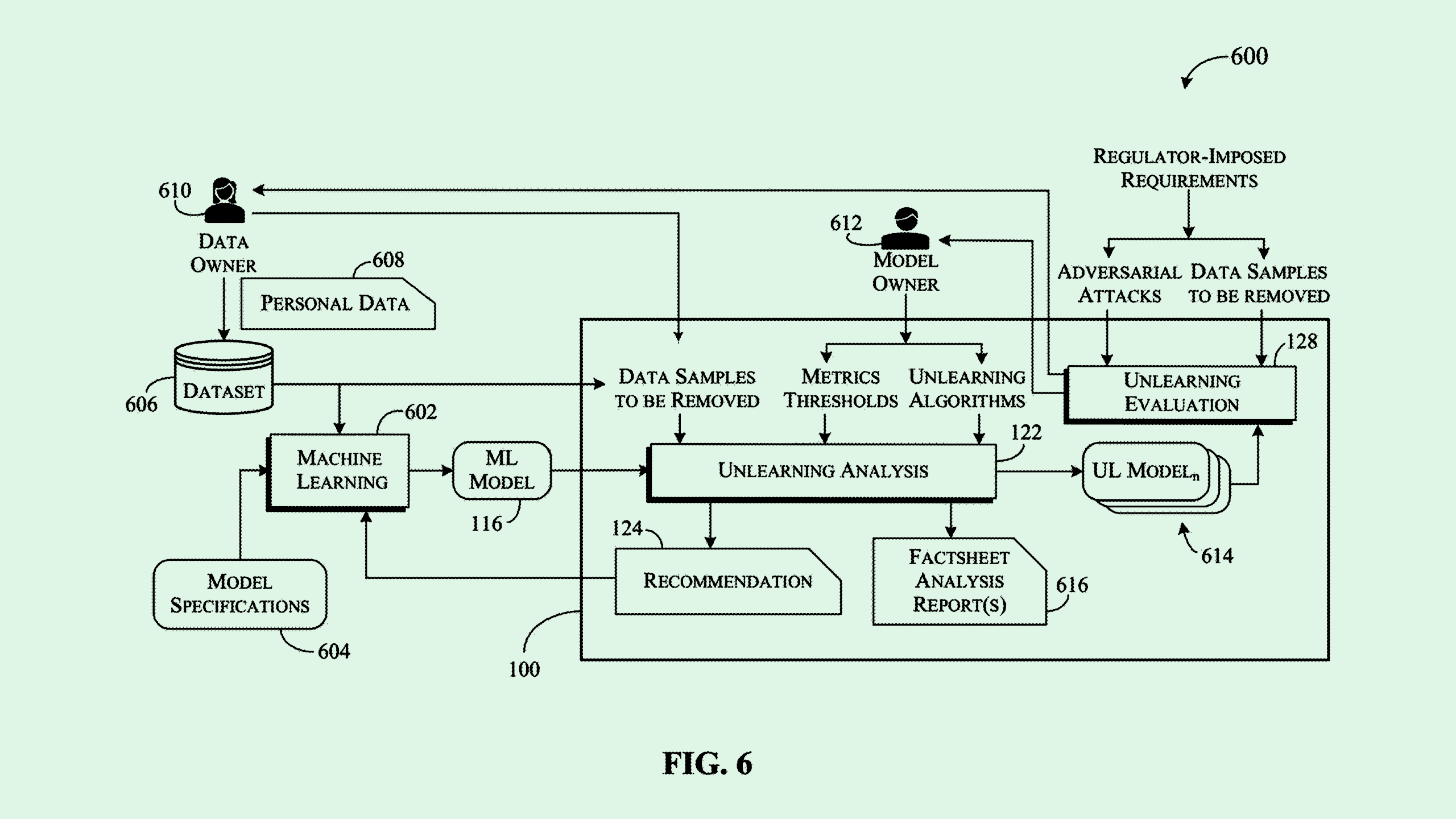

The tech firm is seeking to patent a system for “automated guidance for machine unlearning,” which essentially helps AI models discard learned behavior that deviates from their intended objectives and suggests how to retrain them.

First, IBM’s tech removes data that may be influencing specific outputs, such as those exhibiting bias or accuracy issues, from a trained machine learning model. Then it collects and compares the performance of the so-called “unlearned” model and the original trained model.
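The filing, as described here, does not say how the removal step works. As a rough illustration only, the sketch below uses the simplest exact-unlearning baseline: drop the flagged training records and retrain from scratch. It does this on a toy nearest-centroid classifier, then collects the same accuracy metric for both the original and the unlearned model. The classifier, the data, and names like `unlearn_by_retraining` and `forget_ids` are hypothetical and are not drawn from IBM’s patent.

```python
from statistics import mean

def train_centroids(data):
    """Fit a toy nearest-centroid classifier: one mean feature vector per label."""
    by_label = {}
    for features, label in data:
        by_label.setdefault(label, []).append(features)
    return {
        label: tuple(mean(column) for column in zip(*rows))
        for label, rows in by_label.items()
    }

def predict(model, features):
    """Return the label whose centroid is closest in squared Euclidean distance."""
    return min(
        model,
        key=lambda label: sum((a - b) ** 2 for a, b in zip(features, model[label])),
    )

def accuracy(model, data):
    """Fraction of (features, label) pairs the model classifies correctly."""
    return sum(predict(model, x) == y for x, y in data) / len(data)

def unlearn_by_retraining(train_data, forget_ids):
    """Exact-unlearning baseline (hypothetical stand-in for the patented step):
    drop the flagged records and retrain from scratch, so they leave no trace."""
    retained = [row for i, row in enumerate(train_data) if i not in forget_ids]
    return train_centroids(retained)

def compare(original, unlearned, eval_data):
    """Collect the same metric for both models; gap > 0 means unlearning hurt accuracy."""
    before = accuracy(original, eval_data)
    after = accuracy(unlearned, eval_data)
    return {"original": before, "unlearned": after, "gap": before - after}

# Toy data: index 6 is a mislabeled record flagged for removal.
train = [
    ((0.0, 0.0), "a"), ((0.2, 0.1), "a"), ((0.1, 0.3), "a"),
    ((1.0, 1.0), "b"), ((0.9, 1.1), "b"), ((1.2, 0.8), "b"),
    ((0.1, 0.2), "b"),
]
evaluation = [((0.05, 0.1), "a"), ((1.1, 1.0), "b"), ((0.15, 0.2), "a"), ((0.5, 0.5), "a")]

original = train_centroids(train)
unlearned = unlearn_by_retraining(train, forget_ids={6})
print(compare(original, unlearned, evaluation))
# {'original': 0.75, 'unlearned': 1.0, 'gap': -0.25}
```

Here, removing the flagged record pulls the “b” centroid back toward its genuine examples, so the unlearned model classifies the borderline point (0.5, 0.5) correctly. A positive gap would instead signal that unlearning cost accuracy. Retraining from scratch is exact but expensive for large models, which is why approximate unlearning methods are an active area of work.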

If the unlearned model’s performance or outputs differ significantly from those of the trained model, IBM’s system suggests how to fix the problem. For example, it may recommend using alternative training data or adjusting certain parameters.
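The comparison-and-suggestion step can be pictured as a simple threshold check over metrics collected for both models. This is a hypothetical sketch, not IBM’s method. The `Comparison` record, the `max_drop` threshold of 0.05, and the wording of the suggestions are assumptions chosen to mirror the two examples the article mentions: alternative training data and adjusted parameters.

```python
from dataclasses import dataclass

@dataclass
class Comparison:
    """One metric measured on both the original and the unlearned model (hypothetical)."""
    metric: str
    original: float
    unlearned: float

def suggest_fixes(results, max_drop=0.05):
    """Return suggestions for every metric whose value fell by more than max_drop.

    max_drop is an illustrative threshold; a real system would tune it per metric.
    """
    suggestions = []
    for r in results:
        drop = r.original - r.unlearned
        if drop > max_drop:
            suggestions.append(
                f"{r.metric} fell by {drop:.2f}: consider retraining on alternative "
                "training data or adjusting parameters such as learning rate or "
                "regularization strength"
            )
    return suggestions

results = [Comparison("accuracy", 0.91, 0.82), Comparison("f1", 0.88, 0.86)]
for suggestion in suggest_fixes(results):
    print(suggestion)
```

Only the accuracy drop (0.09) exceeds the threshold here, so one suggestion is printed; the 0.02 drop in F1 is treated as acceptable.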

Along with helping address problems that pop up in model training, IBM’s tech can also assist with privacy compliance by helping a model forget certain data used in training, such as potentially personal or sensitive information.

“Regulatory compliance creates the need for technological solutions that allow a model owner or user (to) not only remove sensitive data from a machine-learning training dataset but also ensure that the influence of the sensitive data on a previously trained model is also eliminated,” IBM said in the filing.

Enterprises are still grappling with how to balance innovation and risk as the AI race rages on. IBM’s patent seeks to tackle several of those risks, including data privacy, accuracy, reliability and bias.

Often, however, AI’s risks and ethical implications aren’t considered early enough in adoption and deployment; instead, they’re treated as a secondary concern, one that users assume will be simple to address. “We have this wonderful arrogance that we can understand ethics and put it into a little capsule and inject it into a system,” Valence Howden, principal advisory director at Info-Tech Research Group, told CIO Upside at Info-Tech Live last week.

While IBM’s patent could help developers backtrack on their mistakes, it raises the question of how certain data, biases or problems get introduced into a model’s DNA in the first place.