How Enterprises Can Fit Ethics Into Their AI Strategies

When it comes to ethics, “the tone is set at the top.”


Amid the AI frenzy, a critical question often gets lost: What are the consequences of building and using these models?

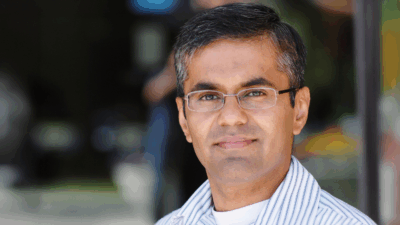

The ethical implications of AI often fall by the wayside in favor of faster, more efficient deployment, especially as competition in the space remains fierce, said Brian Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University. But enterprises that build ethics into their own AI strategies could help prevent lasting reputational damage, both to themselves and to the industry at large, Green said.

“Ultimately, these technologies are world-shaping technologies, and if they’re done wrong, they’re going to turn the world into a bad place,” said Green.

So what does it mean to use AI ethically? The main consideration is figuring out where AI fits into your organization, said Green: who is using it, what it’s used for, who benefits from it and who could be harmed by it.

- “They should make sure that the AI that they’re making or using is producing the effects that they actually wanted,” Green said.

- There are many areas of risk to consider, such as data privacy, biases that models pick up in training, copyright issues, environmental considerations and how the AI is put to use.

- When introducing new AI, enterprises can perform cultural assessments to ensure that everyone in their business is on the same page about AI and its risks, said Green. But while ethics should be considered by everyone within an enterprise, “the tone is set at the top,” he said.

“The leadership has to decide whether they’re actually going to take ethics seriously,” said Green. “If the leadership is not on board, everything is going to look like a cost instead of a benefit.”

The last step is implementation, he said: moving past the “theoretical phase” and putting practical AI monitoring and guardrails in place for privacy, safety and accountability. “It’s one thing to have a set of principles … it’s a completely different thing to actually implement those in a company and measure them and make sure that you’re making progress on them.”

Building ethics into enterprise AI strategies could help companies steer clear of incidents that inflict long-term damage on their reputations, said Green. The fallout from such problems can extend beyond the responsible enterprise and affect the industry as a whole.

“If you are making mistakes in the deployment of AI technology, it is going to damage your reputation by making you look untrustworthy, incompetent or uncaring,” he said. And depending on the magnitude of harm, “it’s not something that can be recovered from very easily.”

But in a market as hot as AI is right now, the downsides tend to get lost. The more competition there is, the less people think about ethics, said Green. They tend to focus on the short-term payout rather than the long-term impacts.

Many tech giants shifted their focus away from ethics and responsibility when the AI boom began, with the likes of Meta, OpenAI and Microsoft cutting responsible AI staff in recent years. And with the Trump administration slicing the already-feeble government oversight that was in place, the onus for responsibility and ethics falls on users themselves.

For tech giants, ignoring ethics “hasn’t affected their money enough” so far, Green said. “This is an experiment that’s run over time. Over a certain period of time, people will start realizing that the companies that are protecting their reputations better are getting more business.”