Microsoft Wants to Improve AI-Powered Code Generation

Microsoft wants to patent a system to improve how an LLM writes code in response to requests. One step is learning to recognize good code.


AI can code based on prompts, but there’s always room for improvement.

Microsoft wants to patent a system that can help language models create better code in response to natural language requests using “symbolic property information.”

First, the system learns to recognize examples of good code.

Using a “symbolic property mining pipeline,” it analyzes a training dataset of code examples in which only a single example is given. From that analysis, the system determines the code’s “symbolic properties”: patterns or features that help describe how the code works or what makes it correct.
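The article doesn’t say how the mining pipeline works. As a toy illustration only, the Python sketch below uses the standard-library `ast` module to pull a few structural facts out of a code snippet. The function `mine_properties` and the property labels (`uses_loop`, `returns_value`, `sorts`, `recursive`) are hypothetical and are not taken from Microsoft’s filing.

```python
import ast


def mine_properties(source: str) -> set[str]:
    """Return hypothetical symbolic property labels found in `source`.

    Toy illustration only; the property names are assumptions, not the patent's.
    """
    tree = ast.parse(source)
    props: set[str] = set()
    # Names of functions defined in this snippet (used as a rough recursion proxy).
    defined = {n.name for n in ast.walk(tree) if isinstance(n, ast.FunctionDef)}
    for node in ast.walk(tree):
        if isinstance(node, (ast.For, ast.While)):
            props.add("uses_loop")
        elif isinstance(node, ast.Return) and node.value is not None:
            props.add("returns_value")
        elif isinstance(node, ast.Call):
            func = node.func
            # Plain calls like sorted(x) vs. method calls like xs.sort().
            if isinstance(func, ast.Name):
                name = func.id
            elif isinstance(func, ast.Attribute):
                name = func.attr
            else:
                name = None
            if name in ("sorted", "sort"):
                props.add("sorts")
            # Calling a function defined in the same snippet: a crude stand-in
            # for detecting recursion.
            if name in defined:
                props.add("recursive")
    return props


example = """
def fact(n):
    if n <= 1:
        return 1
    return n * fact(n - 1)
"""
print(sorted(mine_properties(example)))  # ['recursive', 'returns_value']
```

A real pipeline would presumably extract far richer properties (types, invariants, test behavior); syntax-tree checks are used here only because they are easy to show.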

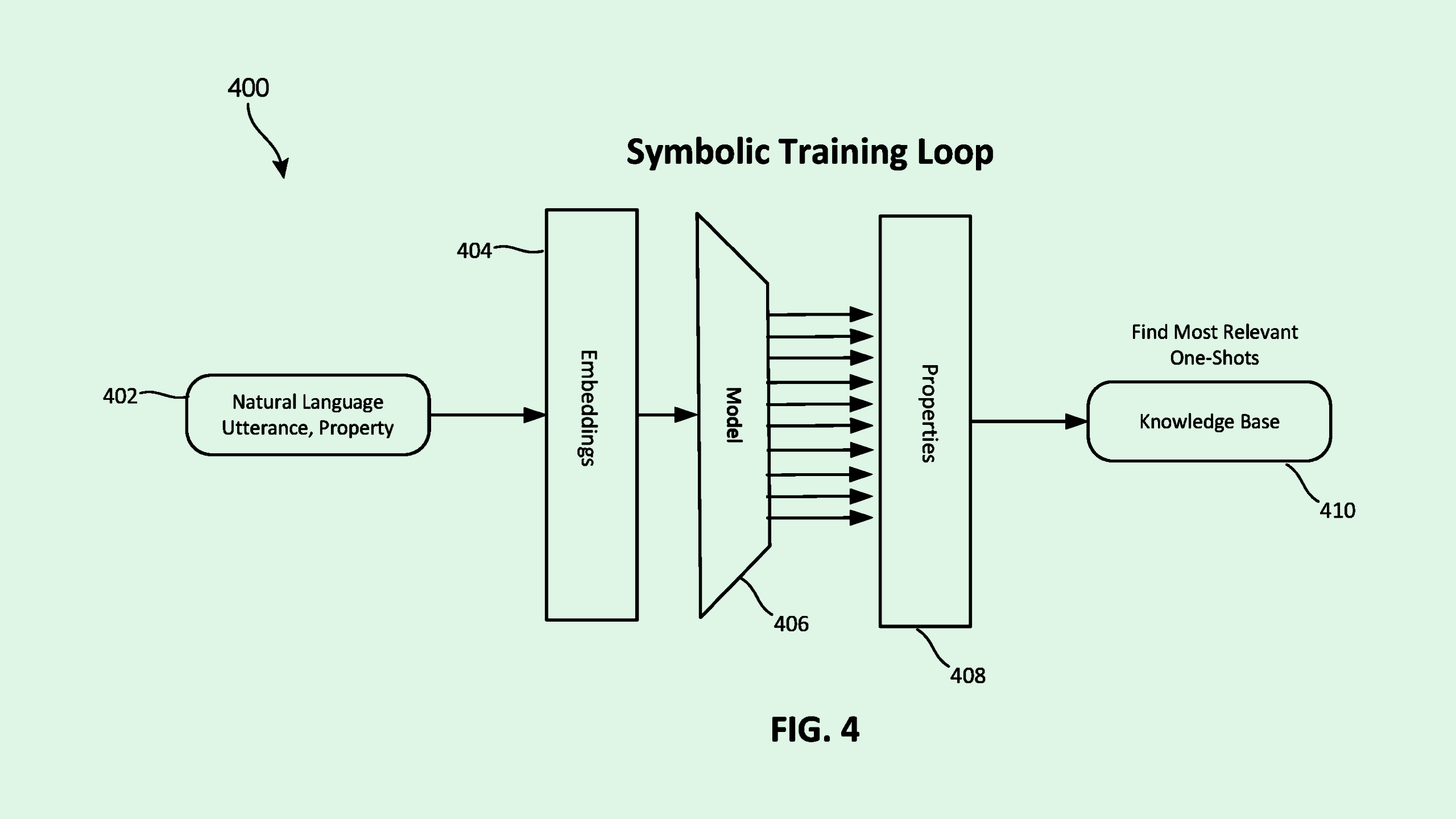

Then the system uses those properties to train a second model, a “property recognition model,” which can take any natural-language prompt and identify the code features or patterns a response to it is expected to have.

All of this serves to guide the LLM’s code generation so that its output is more accurate and reliable.
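The article also gives no detail on the property recognition model or on how its output steers the LLM. The sketch below is a hypothetical stand-in: instead of a trained neural model, it builds a lookup table mapping each prompt word to the properties that appeared in every training example containing that word, then uses the predicted properties to choose among candidate completions. `train_recognizer`, `predict_properties`, `pick_best`, the training pairs, and the reranking step are all assumptions for illustration, not Microsoft’s method.

```python
def train_recognizer(examples: list[tuple[str, set[str]]]) -> dict[str, set[str]]:
    """Map each prompt word to the properties present in EVERY training
    example whose prompt contains that word (a toy stand-in for a trained model)."""
    table: dict[str, set[str]] = {}
    for prompt, props in examples:
        for word in set(prompt.lower().split()):
            table[word] = table[word] & props if word in table else set(props)
    return table


def predict_properties(table: dict[str, set[str]], prompt: str) -> set[str]:
    """Predict expected properties as the union of each known word's properties."""
    predicted: set[str] = set()
    for word in set(prompt.lower().split()):
        predicted |= table.get(word, set())
    return predicted


def pick_best(candidates: list[tuple[str, set[str]]], expected: set[str]) -> str:
    """Hypothetical guidance step: keep the candidate whose properties overlap
    most with the expected ones (ties go to the earliest candidate)."""
    return max(candidates, key=lambda c: len(c[1] & expected))[0]


training = [
    ("sort a list of numbers", {"sorts", "returns_value"}),
    ("compute factorial recursively", {"recursive", "returns_value"}),
    ("print each item in a list", {"uses_loop"}),
]
table = train_recognizer(training)
expected = predict_properties(table, "compute fibonacci recursively")
print(sorted(expected))  # ['recursive', 'returns_value']

# Candidate LLM outputs paired with their properties. In practice these would
# come from a property-mining step; here they are written by hand.
candidates = [
    ("def fib(n):\n    a, b = 0, 1\n    for _ in range(n):\n        a, b = b, a + b\n    return a",
     {"uses_loop", "returns_value"}),
    ("def fib(n):\n    return n if n < 2 else fib(n - 1) + fib(n - 2)",
     {"recursive", "returns_value"}),
]
best = pick_best(candidates, expected)
print(best.splitlines()[1].strip())  # return n if n < 2 else fib(n - 1) + fib(n - 2)
```

Common words such as “a” and “list” end up mapped to no properties, because they appear in training prompts with conflicting properties. A real system would need far more data and a learned model rather than a word table.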

It’s no surprise this is an area of focus for Microsoft. The company previously filed a patent that pointed out code generators’ weaknesses. It’s not alone, either. Improving AI-powered code generation is a priority for major firms including OpenAI, Anthropic and Google as they build out their own offerings.

Other companies are jumping on the code-generation train as well: Amazon wants to make suggestions to coders, JPMorgan is showing interest in making sure its code passes muster, and Oracle has expressed interest in cleaning up potential errors.