Happy Monday.

Welcome back to our sneak peek of CIO Upside. Today, we’re discussing the importance of quantum resistance in security plans as quantum computers continue to beat out supercomputers. Plus: Patents from IBM and Intel highlight the move towards data minimization.

Let’s jump in.

How CIOs Can Prepare for the Not-So-Distant Quantum Security Threat

Quantum computing is coming faster than we think. IT leaders need to prepare.

That’s in no small part because these promising, powerful devices present a threat to modern cryptography, which is a pillar of most cybersecurity practices. But the movement toward quantum-resistant cryptography – that is, building cryptographic systems that can’t be broken by the immense computational power of quantum computers – may help enterprises get ahead of threats before they happen, said Karl Holmqvist, founder and CEO of cybersecurity firm Lastwall.

So what exactly does it mean to be “quantum-resistant”? Cracking today’s cryptographic algorithms would take a supercomputer roughly until the end of the universe, but a large enough quantum computer could work through the underlying math with ease, Holmqvist said. Quantum-resistant encryption, by contrast, replaces those traditional mathematical problems with new, more difficult ones that a quantum computer (so far) can’t crack.

“What quantum resistance is doing is saying, ‘Let’s stop using this thing that we know – when we have a large enough quantum computer – will just be able to break cryptography,’” said Holmqvist.

The potential capabilities of quantum computing threaten to upend security and cryptography as we know it.

And even though quantum computers are still nascent, breakthroughs are happening at an increasingly rapid clip: Earlier this month, Google unveiled a quantum chip called Willow, which performed a benchmark calculation in under five minutes that would take a supercomputer 10 septillion years, a span far longer than the age of the universe. Holmqvist added that Google’s Willow “paved the way for larger-scale systems,” and removed the “major roadblocks” to adoption.

As researchers continue to make breakthroughs, malicious actors are also preparing for a quantum reality with “harvest now, decrypt later” attacks, Holmqvist said. In these attacks, hackers steal encrypted data and store it in case quantum computing later gives them the capability to crack it.

“The risk here to enterprise leaders and CIOs is if somebody can capture your secrets now while they are encrypted, and those secrets are actually relevant five years from now, that’s a big risk,” Holmqvist said.

But teaching an old dog new tricks is never easy. A lot of enterprises are so focused on more imminent threats, like ransomware or phishing attacks, that they don’t have the bandwidth to handle the problems that are a mile away, Holmqvist said.

“It makes it really hard to look at this longer term threat that has an unknown timeline,” he added. And like adopting any tech, many firms have reservations about quantum resistance.

- A lot of enterprises are nervous about the overhead costs of new hardware or routers incurred in making the switch, he said. Some worry that the complexity of this robust cryptography will have a “detrimental” effect on the latency of their networks. “The reality from our experience has been that’s not really the case – it’s not as bad as people think,” he said.

- Some enterprises have bided their time waiting for quantum-resistant algorithms to be standardized by the National Institute of Standards and Technology, Holmqvist noted, a milestone that came in August of this year.

What security teams should be doing now is “getting past the unknown,” he said, by starting to test these standardized algorithms in the field. Figuring out what data, information or secrets are most important to protect can help enterprises decide where it’s most vital to deploy quantum-resistant security.
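One way to start that triage is a rule of thumb often called Mosca's inequality: data is exposed if the years it must stay secret, plus the years needed to migrate it to quantum-resistant cryptography, exceed the years until a capable quantum computer arrives. The Python sketch below is purely illustrative and is not something Holmqvist described; the asset names, the year estimates and the `years_until_quantum` value are all hypothetical assumptions.

```python
from dataclasses import dataclass


@dataclass
class DataAsset:
    name: str
    shelf_life_years: float  # how long the data must stay secret (assumed)
    migration_years: float   # estimated time to move it to quantum-resistant crypto (assumed)


def exposure_years(asset: DataAsset, years_until_quantum: float) -> float:
    """Years the data could be decryptable while it is still sensitive.

    A positive value means the asset is at risk under the rule of thumb.
    """
    return asset.shelf_life_years + asset.migration_years - years_until_quantum


def prioritize(assets: list[DataAsset], years_until_quantum: float) -> list[DataAsset]:
    """Return only at-risk assets, most exposed first."""
    at_risk = [a for a in assets if exposure_years(a, years_until_quantum) > 0]
    return sorted(
        at_risk,
        key=lambda a: exposure_years(a, years_until_quantum),
        reverse=True,
    )


# Hypothetical inventory; every number here is a placeholder guess.
assets = [
    DataAsset("marketing-site-logs", shelf_life_years=1, migration_years=1),
    DataAsset("customer-health-records", shelf_life_years=25, migration_years=3),
    DataAsset("merger-negotiation-emails", shelf_life_years=7, migration_years=2),
]

for asset in prioritize(assets, years_until_quantum=8):
    print(asset.name, exposure_years(asset, years_until_quantum=8))
```

Assets with a positive exposure are the ones a “harvest now, decrypt later” attacker would find worth storing, which makes them natural first candidates for testing the standardized algorithms.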

“You can start making these plans part of your normal business operation, instead of having to do them urgently later,” Holmqvist added. “There will be cases where there are some performance implications. But what’s more concerning is that people are just waiting and doing nothing.”

The Less You Know, the Better: Tech Moves Toward Data Minimization

For a long time, the ethos of the tech industry was the more data, the better. Now some are beginning to realize that less is more.

Recent patents from IBM and Intel both highlight an increased focus on data minimization, or the concept that the collection, storage, and utilization of user data should be kept to a bare minimum.

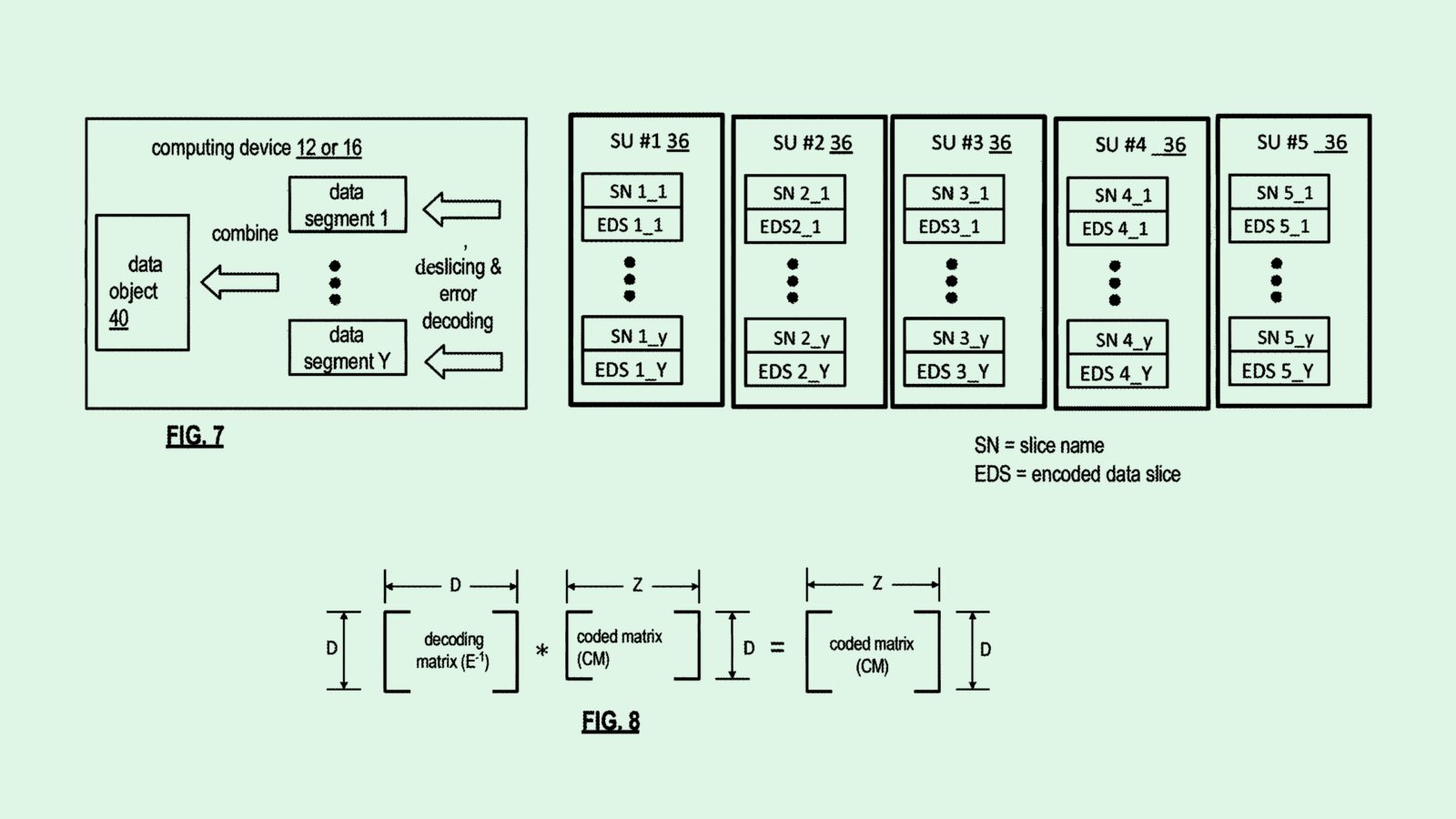

- IBM is seeking to patent a system for the “efficient deletion of data in dispersed storage systems,” meaning systems that store data across multiple cloud locations. This tech basically aims to get rid of out-of-date and unneeded data, which is helpful for data security, cutting costs, and improving the performance of cloud environments.

- Intel, meanwhile, is seeking to patent a system for “verification of data erasure,” which uses digital signatures and private keys to verify that “programmable circuits,” or hardware that’s customized to perform specific tasks, have been securely erased. This is important for maintaining the data security of hardware that’s commonly used in things like AI training.
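As a rough illustration of the idea (and not the method in IBM's or Intel's filings), data minimization often starts with a retention rule that flags records past their useful life. In this minimal Python sketch, the record kinds, the retention windows and the record layout are all hypothetical assumptions.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical retention windows per record kind.
RETENTION = {
    "session_log": timedelta(days=30),
    "invoice": timedelta(days=365 * 7),
}


def split_expired(records, now):
    """Split records into (keep, purge) based on their retention window."""
    keep, purge = [], []
    for record in records:
        limit = RETENTION.get(record["kind"])
        # Kinds without a policy are kept for manual review, never silently deleted.
        if limit is not None and now - record["created"] > limit:
            purge.append(record)
        else:
            keep.append(record)
    return keep, purge


# Fixed timestamp so the example behaves the same on every run.
now = datetime(2025, 1, 1, tzinfo=timezone.utc)
records = [
    {"id": 1, "kind": "session_log", "created": now - timedelta(days=45)},
    {"id": 2, "kind": "session_log", "created": now - timedelta(days=5)},
    {"id": 3, "kind": "invoice", "created": now - timedelta(days=400)},
    {"id": 4, "kind": "unknown_export", "created": now - timedelta(days=900)},
]

keep, purge = split_expired(records, now)
print("purge:", [r["id"] for r in purge])
print("keep:", [r["id"] for r in keep])
```

Keeping unclassified records rather than deleting them is a deliberate choice in this sketch: it surfaces data nobody has a policy for, which is often where the minimization work needs to begin.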

We’ve seen patents with a similar goal of data minimization in the past, such as Google’s filing for anonymizing large-scale datasets and Twilio’s patent for personal information redaction. And it makes sense that these companies are tackling data minimization in their security practices: the more data your enterprise has, the more your enterprise needs to protect, said Clyde Williamson, senior product security architect at Protegrity.

“To have the data in the fewest places possible is really necessary, because security isn’t an infinite thing that we can just constantly add more of,” said Williamson. “And at the end of the day, wherever that data is, it has to be protected, it has to be monitored, we have to be able to audit it.”

But the drive to develop and grow data-hungry AI models is making it increasingly difficult for tech companies to reconcile that growth with their data-minimization needs, said Williamson. Not only do these models often come with their own data security vulnerabilities, but they also add to what security protocols need to cover.

Plus, data security slip-ups aren’t always the fault of the company that collected the data in the first place, Williamson noted. Often they trace back to the third-party companies and vendors that have access to that data by extension. A company’s data-security strategy is only as strong as that of its weakest vendor. “That is where we see a lot of the vulnerabilities and compromises that we see in the news right now,” he said.

Extra Upside

- President-elect Donald Trump appointed Sriram Krishnan, former general partner at a16z, as senior policy advisor for AI for the White House Office of Science and Technology Policy.

- Palantir and Anduril are in talks with competitors to form a consortium that will jointly bid for US government work.

- OpenAI announced a new family of AI models called o3, which it claims can “think” about the company’s safety policy during inference.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.