Happy Thursday, and welcome to CIO Upside.

Today: As OpenAI cements ChatGPT as the king of LLMs, the safety of off-the-shelf tools for enterprises remains uncertain. Plus: How to avoid accidentally hiring a North Korean hacker; and Microsoft keeps its head in the clouds.

Let’s jump in.

What Businesses Should Consider Before Using Off-the-Shelf AI

OpenAI may be the darling of large language models, but is it ready for the enterprise big leagues?

Last week, Bloomberg reported that the company’s pioneering ChatGPT model has been downloaded onto devices by 900 million users, far outpacing Google’s Gemini at 200 million, DeepSeek at 127 million and Microsoft’s Copilot at 79 million.

To build on the chatbot’s broad popularity, OpenAI introduced a ChatGPT-backed general purpose agent last week that can automatically navigate a user’s calendar, create slideshows and run code.

Using off-the-shelf AI tools like OpenAI’s, however, exposes an enterprise to strategic risks, said Valence Howden, principal advisory director at Info-Tech Research Group. For one, without appropriate governance, usage can undermine data security and erode safeguards for your business’s intellectual property:

- Agentic tooling presents an even greater surface area for data collection and surveillance, he explained. Giving an off-the-shelf agent access to your calendar, personal information or company data is “way more problematic from a risk perspective,” he said.

- “Do I trust it to grab and hold corporate information and not expose it to any other form? No, I don’t,” said Howden. “The idea of putting critical business information into the hands of a third party that can use that information and aggregate it with other things is a competitive disadvantage,” he added.

“The ability to scrape information … is way more advanced than people realize,” Howden said.

Additionally, bias needs to be considered carefully, said Howden. While some bias is obvious, many enterprises don’t understand the nuanced ways that an AI model can exhibit prejudice.

For example, bias doesn’t just occur in the datasets themselves but “in the language we train on,” he said. “The English language tends to have a Western bias by its nature. So if you’re an enterprise that is not Western-based, you’re going to absorb the bias of the West through the language used to train the model.”

The alternatives to off-the-shelf tools, however, may be out of reach for many businesses. While building custom AI for internal use can assuage concerns about privacy, security and intellectual property, doing so costs more than some can afford, Howden said. When considering the talent, money and resources, “I just don’t know that anyone but large companies has the ability to do that,” he said.

The safety of ChatGPT and similar models for enterprises depends on how they’re being used, Howden said.

Setting boundaries for usage can help in theory, but the challenge is that “the stewardship and the governance is not flexible or fast or adaptive enough,” he said.

“We run very far ahead of what we can control,” Howden said. “AI moves at the speed of innovation. Governance and regulation move at the speed of paperwork.”

How to Avoid Hiring a North Korean Spy as a ‘Remote Employee’

Do you really know who you’re hiring?

For the past decade, North Korean operatives have sought to infiltrate US companies, posing as remote employees to spy on operations, install malware and steal corporate and personal data. Last month, in two operations, the Department of Justice and the FBI executed coordinated search warrants on 50 suspected “laptop farms” spread across more than a dozen states. About 400 laptops, 29 financial accounts, 21 fraudulent websites and remote-controlled devices were seized.

To avoid hiring foreign saboteurs, the FBI advises US companies to adopt identity verification processes when hiring new workers, but even that provides no guarantee of security.

“We advertised for a remote employee through our normal hiring processes and, unbeknownst to us at the time, several North Korean fake employees responded,” said Roger Grimes, chief evangelist at KnowBe4, a company that unknowingly hired a North Korean hacker.

KnowBe4 interviewed the operative via Zoom and spoke to him over the phone, said Grimes, asking him for a valid ID and work references. The ID, which was stolen, didn’t raise flags, said Grimes. The work references, fabricated by threat actors, initially appeared legitimate.

Though KnowBe4 quickly caught the operative, not all companies are as lucky, he said. “Once (the laptop) was picked up, the person tried to install malware on the device,” Grimes said. “Our software detected it and alerted our (security operations) team immediately, which froze the employee’s accounts and the laptop.”

KnowBe4’s situation is far from singular. Security firm HYPR nearly hired a North Korean hacker, said Bojan Simic, CEO and co-founder. The operative was exposed during the first day of onboarding after triggering the company’s identification system through discrepancies in biometrics and geolocation.

“A blend of multi-factor authentication, continuous biometric and location verification is the future of secure workforce access,” Simic said.

Every enterprise with remote employees needs to be aware of this threat and adapt hiring processes to detect hostile foreign operatives masquerading as legitimate applicants before they’re hired, Grimes said:

- Red flags include inconsistent time-zone activity and reuse of the same virtual machine across multiple accounts, said Nic Adams, co-founder and CEO of 0rcus.

- For companies in software development, code submissions should only be done from managed workstations with hardware attestation to detect virtual machine misuse, Adams added.

- Monitoring traffic patterns for lateral movement and unusual host jumps, automating alerts for new accounts that request high-privilege access, and double-checking open- or closed-source resources that lack rigorous vetting are also a must, he said.

“Enterprises must treat remote hires like external threat feeds: integrate their profiles into threat intelligence platforms, run continuous validation of their digital presence, and rotate credentials aggressively to limit any undetected foothold,” Adams noted.
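Two of the red flags Adams describes, reuse of one virtual machine across accounts and activity that doesn't match a claimed time zone, can be sketched in a few lines of Python. This is a minimal illustration, not a description of any vendor's tooling: the `LoginEvent` record, the `vm_fingerprint` field, the 7 a.m. to 9 p.m. working window and the sample data are all assumptions made for the example.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class LoginEvent:
    account: str
    vm_fingerprint: str  # hypothetical identifier for the device or VM
    timestamp: datetime  # timezone-aware, stored in UTC


def shared_vm_accounts(events):
    """Return each VM fingerprint used by more than one account."""
    by_vm = defaultdict(set)
    for event in events:
        by_vm[event.vm_fingerprint].add(event.account)
    return {vm: sorted(accts) for vm, accts in by_vm.items() if len(accts) > 1}


def off_hours_ratio(events, account, utc_offset_hours, start_hour=7, end_hour=21):
    """Fraction of an account's logins outside working hours in its claimed time zone."""
    claimed_tz = timezone(timedelta(hours=utc_offset_hours))
    own = [e for e in events if e.account == account]
    if not own:
        return 0.0
    off = sum(
        1 for e in own
        if not start_hour <= e.timestamp.astimezone(claimed_tz).hour < end_hour
    )
    return off / len(own)


# Invented sample data: both accounts claim to work from UTC-5.
SAMPLE_EVENTS = [
    LoginEvent("alice", "vm-1", datetime(2025, 1, 6, 15, 0, tzinfo=timezone.utc)),
    LoginEvent("bob", "vm-1", datetime(2025, 1, 6, 8, 0, tzinfo=timezone.utc)),
    LoginEvent("bob", "vm-2", datetime(2025, 1, 7, 7, 30, tzinfo=timezone.utc)),
    LoginEvent("bob", "vm-2", datetime(2025, 1, 7, 16, 0, tzinfo=timezone.utc)),
]

if __name__ == "__main__":
    print(shared_vm_accounts(SAMPLE_EVENTS))
    print(off_hours_ratio(SAMPLE_EVENTS, "bob", utc_offset_hours=-5))
```

In practice, signals like these would be one input among many; a high off-hours ratio alone may simply describe a night owl.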

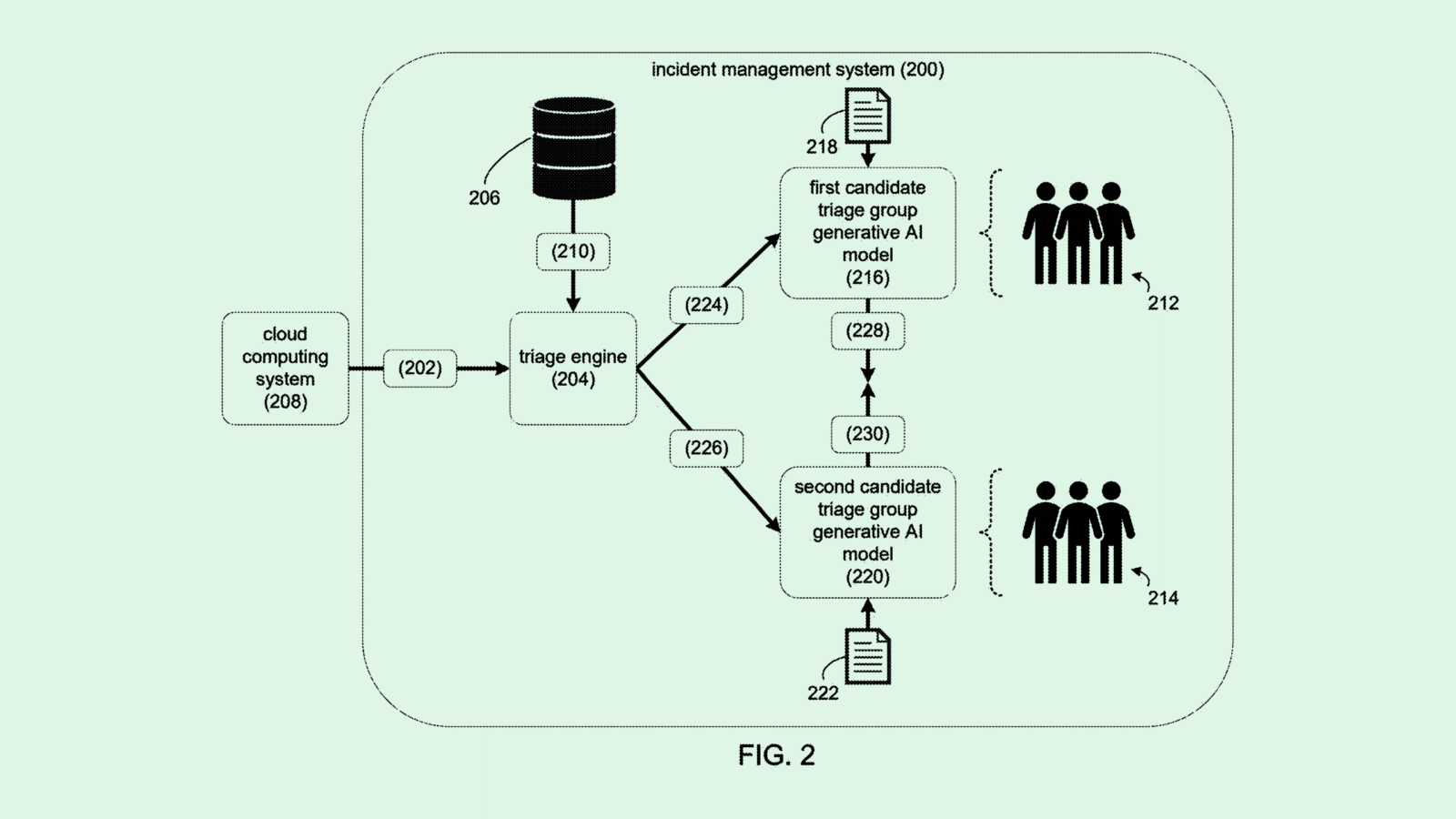

Microsoft Patent Automates Cloud Error Fixes

Microsoft wants to keep things running smoothly in the cloud.

The company is seeking to patent a system for “automated incident triage” in cloud computing environments using generative AI to automatically track, route and handle any errors.

“Conventional approaches leverage general machine learning models to aid in triage and diagnosis,” the patent notes. “However, the performance of these approaches is limited due to a lack of domain knowledge in general machine learning models from various triage teams.”

Cloud “incidents” come in many forms that can undermine the health, performance and security of a cloud system, including bugs, outages, service errors and vulnerabilities. When an incident is detected, a triage engine will sort it into the right category to troubleshoot it properly.

Each potential category has its own dedicated generative AI model trained on past data to properly handle such incidents. The models digest information related to the incident and make recommendations on which team should tackle it.
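The two-step flow described in the filing, categorize first and then hand off to a category-specific model that recommends a team, might look roughly like the Python sketch below. It is not Microsoft's implementation: the keyword rules stand in for the triage engine, the plain functions stand in for the per-category generative models, and every category, keyword and team name is hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Incident:
    title: str
    description: str


# Hypothetical keyword rules standing in for the triage engine's classifier.
CATEGORY_KEYWORDS = {
    "outage": ("unavailable", "down", "timeout"),
    "vulnerability": ("cve", "exploit", "unauthorized"),
}


def categorize(incident):
    """Sort an incident into a category; anything unmatched is treated as a bug."""
    text = f"{incident.title} {incident.description}".lower()
    for category, keywords in CATEGORY_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return category
    return "bug"


# Stand-ins for the dedicated per-category models. A real system would call
# a model trained on that category's past incidents instead.
def outage_model(incident):
    return "site-reliability"


def vulnerability_model(incident):
    return "security-response"


def bug_model(incident):
    return "service-owners"


CATEGORY_MODELS = {
    "outage": outage_model,
    "vulnerability": vulnerability_model,
    "bug": bug_model,
}


def triage(incident):
    """Categorize the incident, then ask that category's model which team should own it."""
    category = categorize(incident)
    team = CATEGORY_MODELS[category](incident)
    return category, team


if __name__ == "__main__":
    print(triage(Incident("Storage API down", "Requests timeout in one region")))
```

Keeping the category lookup separate from the per-category models mirrors the patent's premise that each triage team's domain knowledge lives in its own model.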

Cybersecurity has long been Microsoft’s bread and butter. The company’s patent history includes tech to weed out anomalies in cloud environments, prevent AI “jailbreaks” and evaluate data health. It’s only natural that security extends to Azure, which has become one of the company’s biggest moneymakers as AI drives up demand.

In the most recent quarter, the company’s cloud unit made $42.4 billion, up 20% from the previous year’s quarter. Azure holds 22% of global cloud market share, according to CRN, second only to Amazon Web Services.

But its security oversight extends only so far: Earlier this month, hackers exploited a vulnerability in Microsoft’s SharePoint document management software, creating a breach impacting organizations worldwide. Microsoft said that hackers are targeting clients running SharePoint services from on-premises networks rather than services hosted by the company itself.

That underscores the stakes for enterprises weighing whether to repatriate their data and systems to on-premises servers, rather than storing them with cloud hyperscalers: The relative security capabilities of each option should be evaluated carefully.

Extra Upside

- Vanta Victory: Compliance firm Vanta, backed by Atlassian and CrowdStrike, is valued at $4 billion after its latest funding round.

- AI Makes History: Google DeepMind revealed Aeneas, a model to help contextualize ancient inscriptions.

- Action Plan: The White House unveiled the AI Action Plan, aiming to loosen regulations on permitting for data centers.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.