Happy Thursday, and welcome to CIO Upside.

Today: AI is an energy hog with an endless appetite. What can be done to appease it? Plus: How bots are creating a big cybersecurity problem for enterprises; and DeepMind’s recent patent wants to give agents more context.

But first, a quick programming note: CIO Upside will be taking a break on Monday, July 7, in observance of Independence Day.

Let’s jump in.

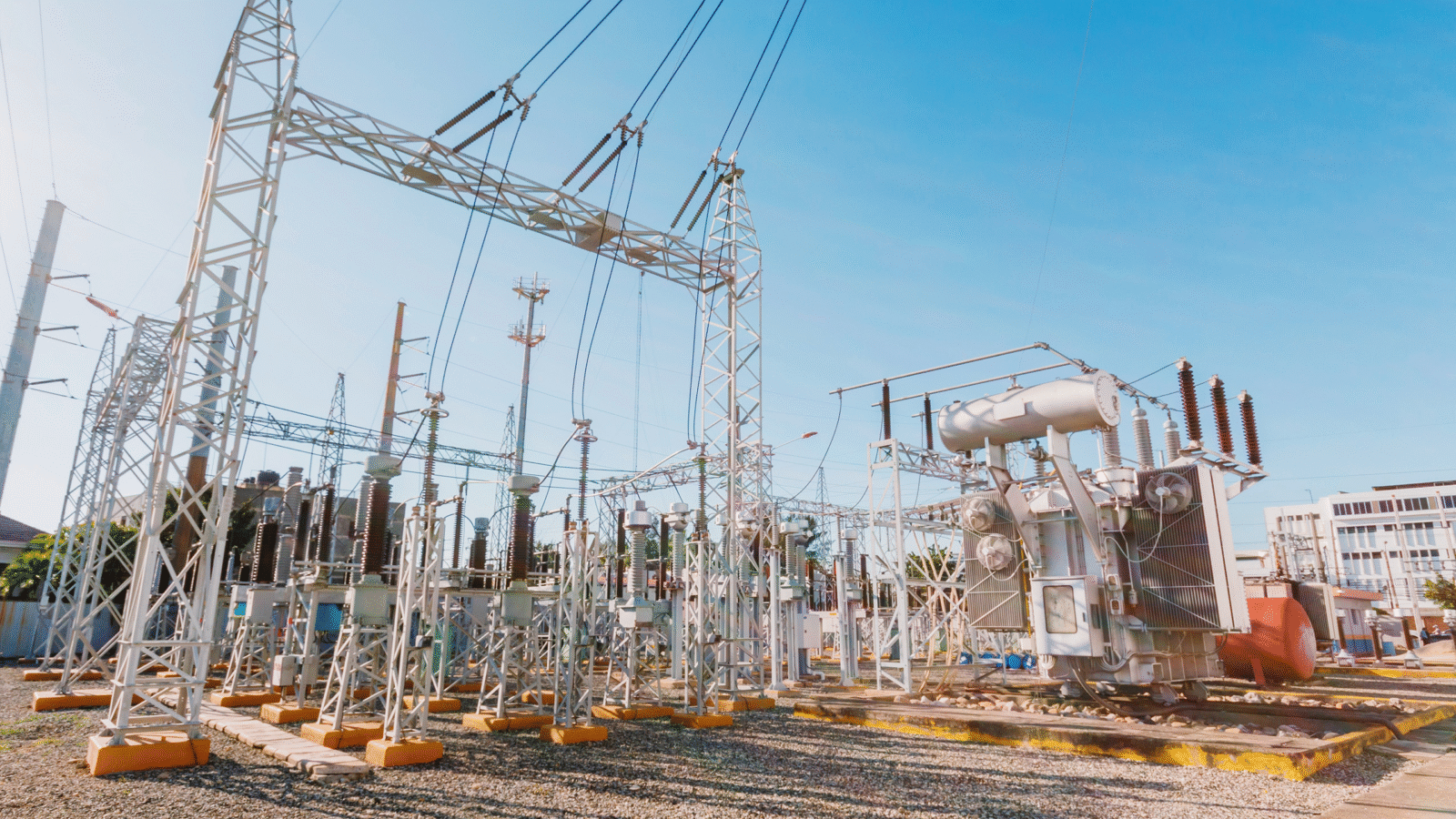

Big Tech Is Still Grappling With AI’s Energy Problem

What will it take to reckon with AI’s energy problem?

As AI development drives an explosion in data center demand, two questions remain open: how the industry will be powered as it grows, and what the environmental consequences of powering it may be. A Harvard University study found that the carbon intensity of electricity used by data centers is 48% higher than the US average.

Though their AI ambitions show no signs of abating, tech giants are seemingly reading the tea leaves on this issue. On Monday, Google announced a deal to purchase 200 megawatts of power from Commonwealth Fusion Systems’ first commercial plant, which aims to begin delivering energy to the grid in the early 2030s:

- The search-engine giant isn’t alone in its maneuvering. Meta signed a deal last week to source nearly 800 megawatts of solar and wind energy from Invenergy. Microsoft has signed a number of clean energy procurement deals over the past year, and Amazon was named the top corporate purchaser of renewable energy in Europe earlier this year.

- Their efforts may not be in vain. In Google’s most recent sustainability report, released last week, the company claims it has “successfully decoupled our operational energy growth from its associated carbon emissions.” Though its power demand from data centers grew 27%, its data center energy emissions dropped 12%.
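Taken together, Google's two figures imply a sharp drop in the carbon emitted per unit of energy its data centers use. The short Python calculation below is a back-of-the-envelope check using only the two percentages quoted above; it assumes carbon intensity is simply emissions divided by energy, which is a simplification of how Google reports its numbers.

```python
# Back-of-the-envelope check using only the two year-over-year figures
# quoted from Google's sustainability report (assumed inputs, not raw data).
energy_growth = 0.27       # data center electricity use: +27%
emissions_change = -0.12   # data center energy emissions: -12%

# Carbon intensity = emissions / energy, so its relative change is the
# ratio of the two growth factors, minus one.
intensity_ratio = (1 + emissions_change) / (1 + energy_growth)
intensity_change = intensity_ratio - 1

print(f"Implied change in carbon per unit of energy: {intensity_change:.1%}")
```

On these inputs, the implied carbon intensity falls by roughly 31% (0.88 / 1.27 ≈ 0.69), which is what "decoupling" means in practice: energy use and emissions moving in opposite directions.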

Demand, however, may be outpacing the buildout of clean energy, said Hannah Bascom, chief market innovation officer at Uplight. According to MIT Technology Review, AI could consume as much electricity as 22% of all US households by 2028.

“I think that we are definitely going to see a crunch in terms of the ability to get enough energy to supply these data centers,” said Bascom. “The reality is that you can’t build it fast enough in a lot of instances.”

And with AI adding pressure, the clean energy transition is going to rely on support from major tech firms, both in rethinking currently available technology and in developing new options such as fusion and modular nuclear plants, said Jon Guidroz, senior vice president of commercialization and strategy for Aalo Atomics.

“From where I’m sitting, it feels like a really sincere commitment to the long term around this,” Guidroz said. “That’s just so critical for innovation to be pulled into mainstream commercialization.”

Though scaling nuclear energy can be a slow process, a lot can be done with the energy assets and infrastructure that are currently available, Bascom said. As it stands, meeting current power demands may require a “fundamental rethink of how we run the grid,” she said.

Load flexibility, or shifting when energy is used, can have a major impact on a power grid's ability to keep up with demand, Bascom added. A Duke University study released in February found that if large new loads cut back their grid power usage for just half a percent of their annual uptime, the grid could make room for roughly 100 gigawatts of them.
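To put "half a percent of annual uptime" in perspective, the Python snippet below converts it into hours. It assumes a standard 8,760-hour year (365 days, ignoring leap years) and a load that would otherwise run around the clock; the study's own modeling is more detailed than this.

```python
HOURS_PER_YEAR = 8_760     # 365 days x 24 hours; leap years ignored
curtailment_share = 0.005  # half a percent of annual uptime

# Hours per year during which a large load would need to reduce usage.
curtailed_hours = HOURS_PER_YEAR * curtailment_share
print(f"{curtailed_hours:.1f} hours of reduced usage per year")
```

That works out to about 43.8 hours a year, just under two days' worth of hours, spread across the periods when the grid is most strained.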

“We have to figure out how to get more out of the grid that we have and invest only incrementally, really where we need it,” said Bascom.

How the ‘Bot Epidemic’ Threatens Enterprise Security

After decades of working to deploy effective human identity cybersecurity, organizations now face a bigger problem: Non-human identities.

Driven by digital transformation, AI, agents and automated workspaces, non-human identities, commonly known as bots, now outnumber human identities by as much as 100 to one, according to GitGuardian.

Non-human identities can include anything from credential-stuffing, click-fraud and fake form-filling bots to advanced AI agents and APIs. When leveraged by cybercriminals, they can pose major risks, as recent breaches of the US Treasury Department and The New York Times have shown.

Even for smaller businesses, 30% to 50% of traffic can be non-human, said Steve Zisk, senior product marketing manager at Redpoint Global. In some sectors, the ratio is even higher.

Non-human identities often slip past security guardrails by mimicking human users, using evasion techniques such as residential proxies to blend their traffic with human activity. The key risks they pose are security threats and signal contamination, Zisk said:

- From a security standpoint, bots are often reconnaissance tools looking for vulnerabilities in login portals, APIs, or checkout flows.

- The subtler threat is data distortion. Marketing teams use engagement metrics to power segmentation, and when AI models and bots pollute that data, the result can be poor decision-making, inaccurate personalization, and a degradation of trust in business systems.

“The Internet was built with humans in mind; thus, this can create a lot of security vulnerabilities when over half of all traffic [according to the 2025 Imperva Bad Bot Report] is now automated,” said IEEE Senior Member Shaila Rana. “This bot epidemic creates significant operational challenges.”

Beyond cybercriminal activity, non-human identities strain infrastructure through massive volumes of automated requests, skewed analytics and business intelligence data, increased bandwidth costs, and degraded user experience due to server overload.

Enterprises can tackle the challenge by proactively differentiating between legitimate and malicious automated traffic in real time, Rana said.

To curb the risks, companies should implement AI-driven bot protection that adapts to evolving threats, establish rate-limiting and traffic-throttling mechanisms, and build scalable infrastructure that can handle traffic spikes. Regular stress testing, chaos engineering and automated failover systems are essential for maintaining uptime during bot-driven traffic surges, Rana added.
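Rate limiting is the most concrete of these recommendations. One widely used technique is the token bucket: each client gets a bucket that refills at a steady rate, and every request spends one token. The Python sketch below is a hedged illustration only; the `TokenBucket` class, the `is_allowed` helper and the capacity and refill numbers are hypothetical and not drawn from any product Rana mentioned.

```python
import time


class TokenBucket:
    """Hypothetical per-client rate limiter using the token-bucket algorithm.

    A client may burst up to `capacity` requests, after which it is held
    to `refill_rate` requests per second on average.
    """

    def __init__(self, capacity: float, refill_rate: float, clock=time.monotonic):
        self.capacity = capacity
        self.refill_rate = refill_rate
        self.clock = clock               # injectable so the logic can be tested
        self.tokens = capacity           # start full so normal users aren't throttled
        self.last_refill = clock()

    def allow(self) -> bool:
        now = self.clock()
        # Add tokens for the time elapsed since the last check, capped at capacity.
        elapsed = now - self.last_refill
        self.tokens = min(self.capacity, self.tokens + elapsed * self.refill_rate)
        self.last_refill = now
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False  # caller should reject or delay, e.g. with HTTP 429


# One bucket per client (keyed here by an assumed client ID such as an IP or API key).
buckets: dict[str, TokenBucket] = {}


def is_allowed(client_id: str) -> bool:
    if client_id not in buckets:
        buckets[client_id] = TokenBucket(capacity=10, refill_rate=2.0)
    return buckets[client_id].allow()
```

The design lets legitimate users burst briefly while capping the sustained request rate that credential-stuffing or scraping bots depend on. In a real deployment the buckets would usually live in a shared store rather than in one process's memory.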

As AI and online bots continue to proliferate, regular security assessments that focus on bot detection and API vulnerabilities need to become standard practice, Rana said.

“CIOs should implement comprehensive bot-protection solutions, secure APIs with proper authentication and monitoring, deploy MFA to prevent account takeovers, and establish continuous monitoring for unusual traffic patterns,” Rana said.
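For the "continuous monitoring for unusual traffic patterns" Rana describes, one simple starting point is a rolling z-score over request counts per time interval. The Python sketch below is an illustration under stated assumptions, not a recommended product: `flag_traffic_spikes`, the five-interval window and the threshold of 3 are all hypothetical choices.

```python
from statistics import mean, stdev


def flag_traffic_spikes(counts: list[int], window: int = 5, threshold: float = 3.0) -> list[int]:
    """Return indices of intervals whose request count is anomalously high.

    Each count is compared with the mean and standard deviation of the
    preceding `window` intervals (a rolling z-score). `window` must be >= 2
    because stdev needs at least two data points.
    """
    flagged = []
    for i in range(window, len(counts)):
        baseline = counts[i - window:i]
        mu = mean(baseline)
        # Floor the deviation so perfectly flat traffic doesn't divide by zero.
        sigma = max(stdev(baseline), 1.0)
        if (counts[i] - mu) / sigma > threshold:
            flagged.append(i)
    return flagged


# Requests per minute, with a sudden surge at index 6.
print(flag_traffic_spikes([100, 102, 98, 101, 99, 100, 450, 103]))
```

This sketch prints `[6]`, flagging the surge. One caveat of the simple approach is that a flagged spike then inflates the baselines that follow it; a more careful monitor might exclude flagged intervals or use more robust statistics.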

DeepMind Patent Gives AI Robots ‘Inner Speech’

DeepMind wants to give its agents an inner monologue.

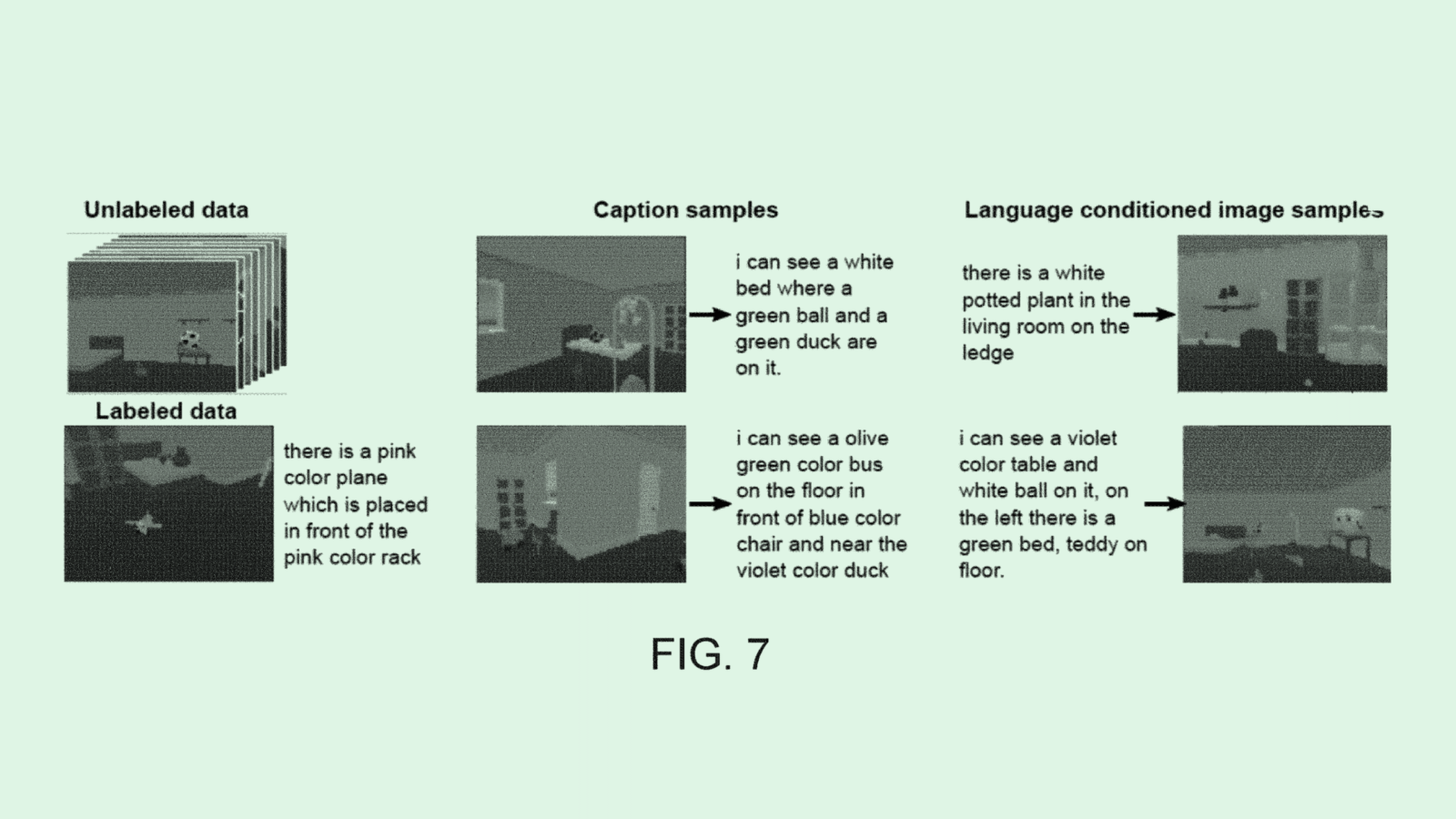

Google’s AI lab is seeking to patent “intra-agent speech to facilitate task learning,” a tool that would help AI agents or robots understand the world around them.

The system would take in images and videos of someone performing a task and generate natural language to describe what’s happening using a language model. For example, a robot might watch a video of someone picking up a cup, while receiving the input “the person picks up the cup.”

That allows it to take in what it “sees” and pair it with inner speech, or something it might “think.” The inner speech would reinforce which actions need to be taken when faced with certain objects.

The system’s key benefit is what’s termed “zero-shot” learning: It allows the agent or robot to interact with objects it hasn’t encountered before. Intra-agent speech can “facilitate efficient learning by using language to help understand the world, and can thus reduce the memory and compute resources needed to train a system used to control an agent,” DeepMind said in the filing.

This isn’t the first time DeepMind has made its robotics goals known: Last week, the firm rolled out an on-device version of its vision-language robotics model that can run without internet access. Google said it’s “small and efficient enough to run directly on a robot.” Like the patent’s design, the flagship model is engineered to help robots generalize to tasks they may not have been trained on.

For AI-powered robotics and agents, more context is always better. Giving a robot or agent an inner monologue provides more data to train on and work with as well as better tools to understand unfamiliar situations.

The unpredictability of AI-powered robots remains a barrier to adoption – one that many firms are trying to solve, with Google, Nvidia and Intel all seeking patents targeting similar objectives.

Extra Upside

- Microsoft Cuts: Microsoft will lay off 4% of its global workforce across teams, or roughly 9,000 employees.

- Stargate Expansion: OpenAI will rent additional data center capacity from Oracle for its Stargate project, totaling about 4.5 gigawatts of power.

- Tesla Tumble: Tesla sales dropped again this quarter, putting the company on track for its second straight year of falling sales.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.