Happy Monday and welcome to CIO Upside.

Today: Though AI adoption is being pushed in practically every industry, the tech’s usage in the legal field presents complications and can have major consequences when it makes mistakes. Plus: Why getting in the weeds on AI contracts could be your enterprise’s saving grace; and Oracle’s recent patent keeps large language models from running their mouths.

Let’s take a look.

Hallucinations Create Complications When AI Goes to Court

Judges are losing their patience as AI mistakes pile up in US courts.

The number of cases in which the tech has generated incorrect information or cited nonexistent cases to support lawyers’ arguments is both alarming and increasing. In early June, the Utah Court of Appeals found that two lawyers had breached procedural rules by submitting a legal document citing multiple cases that did not exist.

That wasn’t an isolated incident. The Washington Post reported that AI-generated court documents containing hallucinations are on the rise across the country. These incidents are also tripping up large tech firms, such as model provider Anthropic, which admitted in mid-May to using an erroneous citation generated by its Claude AI chatbot in its legal battle with Universal Music Group and other publishers. One researcher found more than 150 incidents of hallucination filed in US courts since 2023, with 103 of those occurring this year.

And using AI incorrectly in this context has consequences, including being referred to professional bodies and responsibility boards, fines of up to 1% of case value, court warnings, class action petitions, and sanctions costing thousands of dollars.

AI is not a legal gray area anymore, and regulators are starting to enforce disclosures, transparency, and bias mitigation requirements, Mark G. McCreary, partner and chief AI and information security officer at Fox Rothschild, told CIO Upside.

Clarifying where attorney-client privilege or trade secret risks arise when using external tools is a must, according to McCreary. That involves determining what data is being put into the AI tools that legal teams use.

“As CIO, I’d focus the conversation (with compliance officers and lawyers) on clarity, boundaries, and accountability,” he said.

Innovation vs. Risks

A 2024 Thomson Reuters survey found that US lawyers using AI can save up to 266 million hours. That would translate into $100,000 in new, billable time per lawyer each year. The study also found that only 16% of lawyers think that using AI to draft documents is “going too far.”

Even if a company has AI legal policies in place, workers could be ignoring them, Wyatt Mayham, lead AI consultant at Northwest AI Consulting, told CIO Upside. “If policies exist, but aren’t enforced or tracked, they’re worthless,” he said.

McCreary advised companies to establish a light but structured governance framework. “The point is not to restrict innovation, but to track and guide usage,” McCreary said:

- Having legal teams self-register the AI apps they use creates logs that reveal who used AI, for what purpose, and what type of data was involved, forming a system of record. Logs can also be used to ensure no sensitive data is being fed to non-compliant tools, or used in a way that violates ethics rules or client contracts, McCreary said.

- Legal teams need to be continually updated on how AI tool capabilities – and risks – evolve, he said. “An AI feature that’s benign today might add model training next quarter.”

- McCreary noted that an AI recognition program can also incentivize transparency and caution in using new tools, while reinforcing positive behavior. These programs can create a culture of AI literacy, not just compliance.
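The self-registration log described in the first point above could look something like the following. This is only a minimal sketch in Python, not a tool McCreary or Fox Rothschild described: the class names, the approved-tool list and the data-type labels are all hypothetical, and a real system of record would need persistent storage and access controls.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone

# Hypothetical values for illustration only; a real firm would define its own.
APPROVED_TOOLS = {"internal-drafting-assistant"}
SENSITIVE_DATA_TYPES = {"privileged", "client-confidential", "trade-secret"}


@dataclass(frozen=True)
class UsageRecord:
    """One self-registered use of an AI tool: who, which tool, why, what data."""
    user: str
    tool: str
    purpose: str
    data_type: str
    timestamp: datetime = field(
        default_factory=lambda: datetime.now(timezone.utc)
    )


class UsageLog:
    """In-memory system of record for AI usage (a sketch, not production code)."""

    def __init__(self):
        self._records = []

    def register(self, user, tool, purpose, data_type):
        """Record a use of an AI tool and return the stored entry."""
        record = UsageRecord(user, tool, purpose, data_type)
        self._records.append(record)
        return record

    def flagged(self):
        """Return entries where sensitive data went to a non-approved tool."""
        return [
            r for r in self._records
            if r.data_type in SENSITIVE_DATA_TYPES
            and r.tool not in APPROVED_TOOLS
        ]


log = UsageLog()
log.register("associate-a", "internal-drafting-assistant",
             "draft engagement letter", "privileged")
log.register("associate-b", "public-chatbot",
             "summarize deposition", "client-confidential")

for entry in log.flagged():
    print(f"Review needed: {entry.user} used {entry.tool} "
          f"with {entry.data_type} data")
```

In this sketch, only the second entry is flagged, because it pairs sensitive data with a tool that is not on the approved list.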

As flawed AI inputs in legal cases continue to emerge, the reputations of firms, companies and clients are at stake. Courts have already made it clear that tolerance for the improper use of AI and AI hallucinations is low. Despite the push across industries to adopt AI, the damage may outweigh the benefits.

“Waiting for the law to ‘catch up’ is no longer an excuse; enterprise AI governance is not just an IT issue, it’s a legal, reputational and strategic issue,” said McCreary.

Struggling to Navigate Thorny AI Contracts? Question Everything

No one wants to read the terms and conditions. But as AI changes the tech landscape, it’s also changing the way enterprises navigate contracts.

The ever-changing nature of AI has made negotiating contracts with model vendors a tricky business. The key to getting it right may be tapping into “your inner 2-year-old,” said Phil Bode, research director in the vendor management practice at Info-Tech Research Group. In short, ask more questions.

Though several pieces of these contracts are similar to those of conventional software, “AI means a lot of things,” said Bode. “Whatever AI contract you’re looking at is going to be full of nuances regarding that particular AI solution – not just a broad, plug and play, one-size-fits-all approach.”

The pressure to adopt AI quickly has made it more difficult to be shrewd with such contracts. And because laws and regulations haven’t kept up, enterprises often don’t know how to protect themselves, said Bode. “What you knew was reality last week has either been clarified or it’s no longer reality,” he said.

Getting in the Weeds

Covering yourself, Bode said, is all about asking more questions:

- Start with looking inward: First, question why your enterprise needs to work with an AI vendor in the first place, how you plan to use the AI model, who will use it and what you hope to get from it.

- Then, question the vendors themselves. With AI vendors, it’s particularly important to ask about data, such as how their models were trained, whether they have access to your data and how quality of outputs is monitored over time. “Due diligence is not one and done,” Bode said. “Especially with AI vendors, things will change.”

- It’s also vital to question usage and ownership, he said. Ask whether there are restrictions to how a model can be used, and whether your enterprise has exclusive license to use the model’s outputs for whatever you please.

One of the most important pieces of the puzzle is definitions, said Bode. Because AI is constantly evolving, the meanings of terms associated with it are changing, too. Users should ask how their vendor defines things like inputs, outputs, training data or fine-tuning data.

“I would recommend proceeding slowly,” said Bode. “With the introduction of new definitions and other implications, definitions keep taking on greater and greater meaning as we evolve from software to software-as-a-service to AI solutions. Those definitions keep growing and growing in importance, and the implications and risks keep growing too.”

Oracle Patent Keeps Large Language Models From Spilling Secrets

What goes into a chatbot eventually comes out.

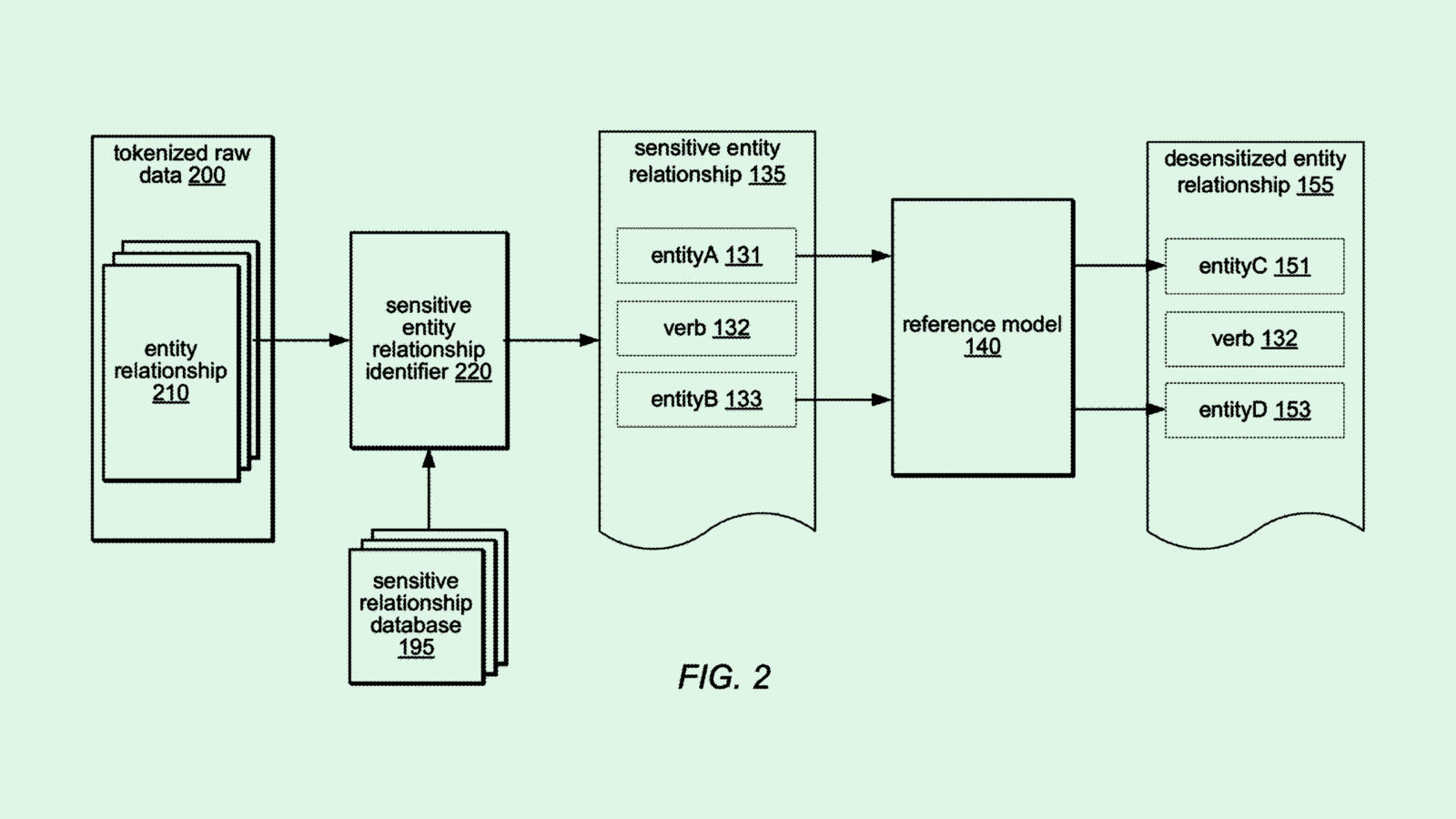

Oracle wants to make those inputs and outputs a little more confidential. The company is seeking to patent a system providing “entity relationship privacy for large language models” that would protect confidential information in datasets used in AI training.

Starting with raw data, Oracle’s tech scans for “sensitive entity relationships.” For example, it may check whether a name is connected to things like medical conditions if the data is healthcare related, or transaction information if the data is financial.

For each sensitive link that’s identified, the system will swap out one piece of information for something that isn’t sensitive, with the new information generated by an AI model. For instance, if a person named “John Smith” is connected to the condition “Type 2 diabetes,” it may switch out the person’s name for a fake one, preserving the structure of the data as well as the person’s privacy. That dataset can then be used to fine-tune a large language model without exposing people’s personal information.
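To make the idea concrete, here is a rough sketch in Python of swapping out names that are linked to sensitive values. It is not Oracle’s method: the filing describes replacements generated by an AI model, while this example substitutes simple numbered placeholders, and the function name, field names and sample records are hypothetical.

```python
from itertools import count


def pseudonymize(records, name_key="name", sensitive_key="condition"):
    """Replace names that appear alongside a sensitive value.

    Assumption: each record is a dict, and a "sensitive link" simply means
    both the name field and the sensitive field are filled in. The same real
    name always maps to the same placeholder, so the structure of the data
    is preserved.
    """
    aliases = {}
    counter = count(1)
    cleaned = []
    for record in records:
        if record.get(name_key) and record.get(sensitive_key):
            real_name = record[name_key]
            if real_name not in aliases:
                # Stand-in for the AI-generated replacement in the filing.
                aliases[real_name] = f"Person {next(counter)}"
            # Build a new dict so the raw input is left unmodified.
            record = {**record, name_key: aliases[real_name]}
        cleaned.append(record)
    return cleaned


raw = [
    {"name": "John Smith", "condition": "Type 2 diabetes"},
    {"name": "Jane Doe", "condition": "Asthma"},
    {"name": "John Smith", "condition": "Hypertension"},
]

for row in pseudonymize(raw):
    print(row)
```

Both “John Smith” rows end up with the same placeholder, so a model fine-tuned on the cleaned data would still see that one person has two conditions without seeing who that person is.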

Oracle’s patent highlights a key issue that AI developers are still reckoning with: data privacy. “[The] reproduction of training data is also at the heart of privacy concerns in LLMs as LLMs may leak training data at inference time,” Oracle said in the filing.

AI models are like parrots. They don’t actually learn facts; they repeat what they hear and infer what the next word in a given phrase will be. With the right poking and prodding, a threat actor could extract training data that was used to build them.

This presents a major problem with deploying AI into certain contexts where data regulations are tighter. Healthcare, for example, faces strict data-security protocols related to the Health Insurance Portability and Accountability Act, or HIPAA. Financial firms similarly grapple with strict legal requirements.

Oracle isn’t the only tech firm seeking to crack the data security problem. Microsoft, JPMorgan Chase and IBM have similarly sought patents for ways to redact and limit personal data in training datasets. But while guardrails exist, given the pressure to adopt quickly, enterprises are often more concerned with quick innovation and deployment than data protection.

Extra Upside

- AI Break Up: Google, the largest customer of Scale AI, is splitting with the startup following the announcement that Meta is taking a 49% stake in the company.

- Cloud Overseas: Amazon is planning to invest $13 billion in data centers in Australia.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.