Happy Monday, and welcome to CIO Upside.

Today: A conversation with Brian Chess, the SVP of Technology and AI at Oracle NetSuite, about how you can deploy (and use) AI in a responsible way that’s right for your organization. Plus: JPMorgan wants to make sure that the code an LLM writes is actually, well, right.

Let’s jump in.

Defining the ‘Why’ of Your AI

AI is infiltrating organizations from both the top down and the bottom up: CEOs are crafting big-picture strategies, and lower-level employees are using chatbots.

“We’re living through this period where there’s a lot of AI euphoria, and a lot of, ‘Well, it must be right, because it came from AI,’” said Brian Chess, SVP of Technology and AI at Oracle NetSuite. “People are right to be excited. There’s a lot to be excited about.”

But, he added, “It is not a magic bullet.”

Touching on his decades of experience in software development and computer security, Chess discussed with CIO Upside the biggest deployment mistakes that enterprises are making, his advice for companies thinking about AI downsides, and his core principle: engaging with new tech.

This interview has been lightly edited for clarity and length.

What are the biggest mistakes enterprises are making in their AI deployments?

AI is exciting for a number of reasons, but one of the reasons it’s exciting is because most new technologies either come top down or they come bottom up, and AI is doing both. The board of directors is putting pressure on the CEO: “Tell us what your AI strategy is going to be.” Meanwhile, all the employees are bringing their own chatbot and probably using AI whether you said it’s OK or not.

One thing [that] has gone wrong, in at least some companies, is they’ve tried to dictate this top-down strategy without talking to people about where they are already seeing benefits and what their needs are. There needs to be a meet-in-the-middle here where the employer says, “Tell us your experiences. We know you’ve been using it. How has it been going? What do you need? What is going to make good collaboration, both across the organization as well as with the existing systems that people are using?”

What advice could you give to enterprises that want to deploy AI safely and responsibly in their organization?

[When organizations] have realized that there are potential downsides, you need to make it acceptable and safe to do some experimentation. And if you are actually experimenting, that means that not everything goes exactly as planned, but you’ve got to have a way for that to be OK.

One of the things that we’re doing with AI is we say, “AI is subject to the same roles and permission system that users are subject to.” And why do we have roles and permissions for users? It’s because we want to be able to limit their access, because it’s not the right idea to give everybody access to everything. So I’d say the same thing about the AI. In fact, I’d go a little further here and say, “The more sophisticated the AI gets, the more we can treat it the way we might think about human employees, and the systems that we’ve built in order to make sure that the employees do the right thing and not the wrong thing.”
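
To make the roles-and-permissions idea concrete, here is a minimal sketch in Python. It is not NetSuite's implementation: the role names, permission strings, and the `Principal`, `is_allowed`, and `perform` helpers are all hypothetical, chosen only to show an AI agent passing through the same check as a human user.

```python
from dataclasses import dataclass

# Hypothetical role-to-permission mapping; names are illustrative only.
ROLE_PERMISSIONS = {
    "accounts_payable_clerk": {"invoice:read", "invoice:create"},
    "controller": {"invoice:read", "invoice:create", "invoice:approve"},
}


@dataclass(frozen=True)
class Principal:
    """Anyone or anything that acts in the system: a person or an AI agent."""
    name: str
    role: str
    is_ai: bool = False


def is_allowed(principal: Principal, permission: str) -> bool:
    """One check for every principal; being an AI grants nothing extra."""
    return permission in ROLE_PERMISSIONS.get(principal.role, set())


def perform(principal: Principal, permission: str) -> str:
    """Carry out an action only if the principal's role permits it."""
    if not is_allowed(principal, permission):
        raise PermissionError(f"{principal.name} lacks {permission}")
    return f"{principal.name} performed {permission}"


# An AI assistant assigned the clerk role gets the clerk's limits.
assistant = Principal(name="ap-assistant", role="accounts_payable_clerk", is_ai=True)
human = Principal(name="dana", role="controller")
```

In this sketch, `perform(assistant, "invoice:create")` succeeds, while `perform(assistant, "invoice:approve")` raises `PermissionError`, exactly as it would for a human clerk. The `is_ai` flag is never consulted by the check, which is the point: access follows the role, not the kind of actor.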

What should people do to iterate and modify their AI as time goes on?

AI has to fit in with the existing systems. Now let’s talk about an AI disappointment: How long ago did we start talking about autonomous driving? It’s been a while now, right? That’s an example of a system where the stakes are obviously high, where if a car does the wrong thing, people can suffer. It’s also an example of a system where we are unwilling, at least as a society, thus far, to adapt the driving system to make things easier on the AI. In other words, the standard we’re really setting is “AI needs to be better than a human is.”

What’s going to happen in business is we’re actually going to relax that a little bit, and we’re going to say, “You know what, if we’ve got a business process, and we could change that business process a little bit to make it easier for the AI, we’re going to do that,” which means we’re going to see AI in business get adopted faster than we’ve seen autonomous driving get adopted.

When we talk about unique needs, I think about specialization and how much you have to change that AI in order to make it suitable for you. That’s one thing you can do. You can also change the parameters of your problem so that the AI you have can do a meaningful piece of that work.

You’ve been in this industry for a long time, and it changes rapidly by the year and by the day. Is there any one principle that’s been really crucial to you?

Just this idea that we’re engaging with something that both has a lot of promise and some potential peril to it: What do you do there? The one word I want to use there is “engage.” You’ve got to go do it, and you’ve got to do it in a reasonable and controlled way so you’re not taking more risk than you intended to be taking, but you’ve got to engage with it to figure out what really works and what doesn’t work.

JPMorgan Patent Would Double-Check AI-Generated Code

AI can always write for you, but it’s not always right.

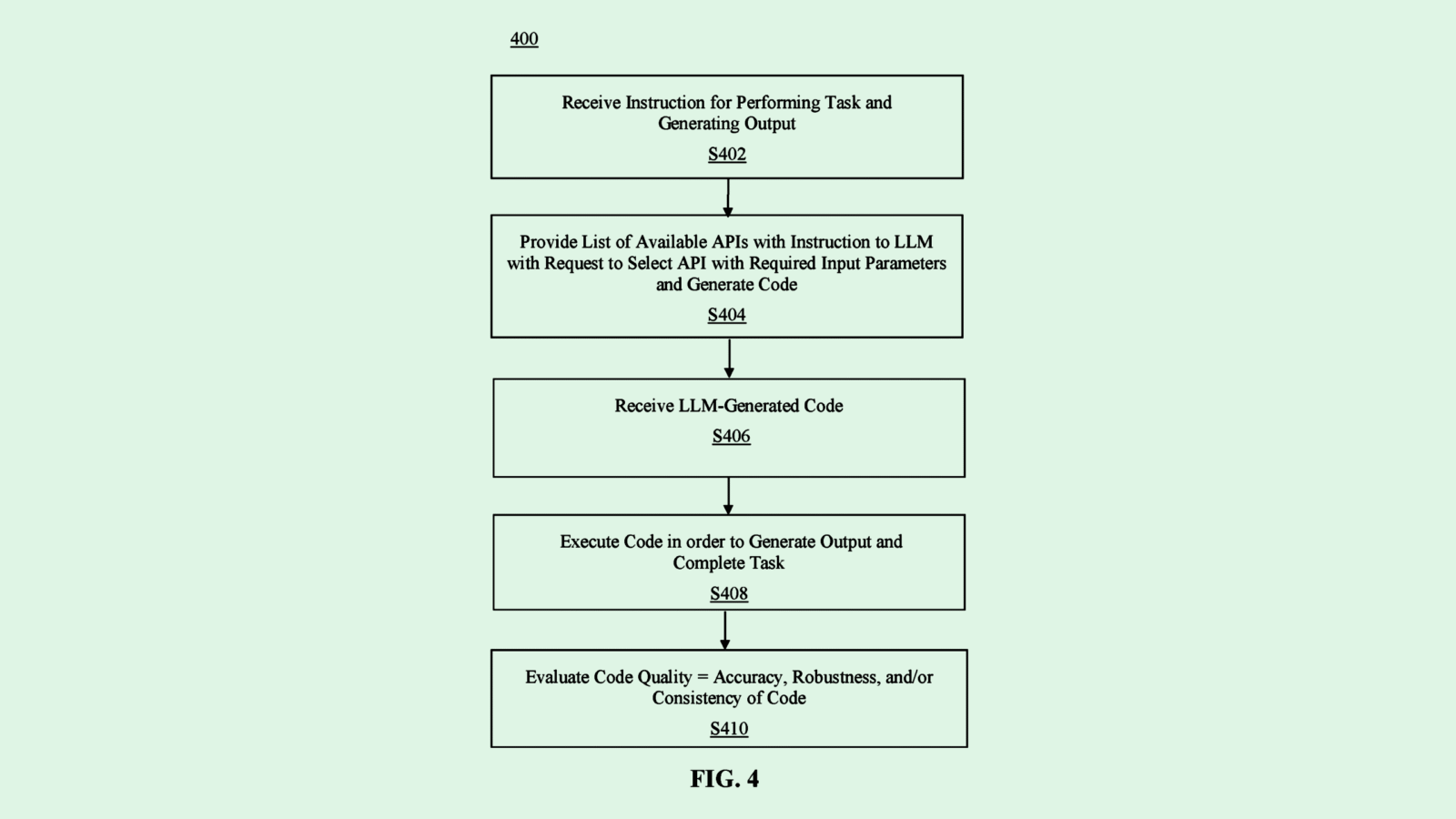

That’s why JPMorgan wants to double-check its LLM’s homework. The company is seeking to patent “a method and a system for obtaining an evaluation of a quality of software code” generated by an LLM.

First, a user gives instructions for a task, including the output they’re looking for. The system sends that information, along with a list of available APIs, to the LLM. It asks the LLM to choose just one API and write the code using it.

Next, the LLM replies with the API it chose, as well as the code it wrote based on the instructions. Then, the system runs that code to see whether the task completes properly and produces the expected result.

At the end of the process, the system checks the code’s quality: its accuracy, its reliability and robustness, and its consistency.
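
The steps described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the method in JPMorgan's filing: `fake_llm` stands in for a real model call, the `evaluate` helper is hypothetical, and the three scores are simple guesses at what accuracy, reliability and consistency might mean in practice, since the filing's actual metrics are not described here.

```python
def fake_llm(task: str, apis: list[str]) -> dict:
    """Stand-in for a real model call: returns one chosen API plus code."""
    return {"api": "math.sqrt", "code": "import math\nresult = math.sqrt(16)"}


def evaluate(task: str, expected, apis: list[str], llm, runs: int = 3) -> dict:
    """Ask the model several times, run each reply, and score the outcomes."""
    results = []  # one (api, ran_ok, value) tuple per run
    for _ in range(runs):
        reply = llm(task, apis)
        scope = {}
        try:
            # Assumption: generated code stores its answer in `result`.
            # exec is NOT sandboxed; a real system would isolate this step.
            exec(reply["code"], scope)
            results.append((reply["api"], True, scope.get("result")))
        except Exception:
            results.append((reply["api"], False, None))

    return {
        # Did the model pick only from the APIs it was offered?
        "api_in_list": all(api in apis for api, _, _ in results),
        # Share of runs that produced the expected output.
        "accuracy": sum(ok and value == expected for _, ok, value in results) / runs,
        # Share of runs that executed without raising an error.
        "reliability": sum(ok for _, ok, _ in results) / runs,
        # Did every run choose the same API and produce the same value?
        "consistency": len({(api, repr(value)) for api, _, value in results}) == 1,
    }


report = evaluate(
    task="Compute the square root of 16",
    expected=4.0,
    apis=["math.sqrt", "math.pow"],
    llm=fake_llm,
)
```

Because the stub always returns the same working code, every score in `report` comes out perfect. Swapping in a real model call is where repeated runs start to matter, since the same prompt can yield different APIs or different code from one request to the next.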

JPMorgan has sought patents to ensure accuracy before. Last year, the largest US bank looked into how machine learning and blockchain can fact-check. And accuracy has always been an issue tech companies are eyeing: Microsoft is also worried about AI code generation issues and wants to target AI bias, and IBM is trying to use AI to fix issues with AI.

Extra Upside

- Under the Curtain: OpenAI told everyone what tools it uses internally, and software companies freaked out.

- Generating Juice: Base Power’s $1B fundraise will bring more batteries to homeowners.

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.