Amazon Doubles Down on AI Processor Bet

Amazon wants to reduce its reliance on Nvidia and, in the process, offer Amazon Web Services clients an alternative.


You can get a party-size bag of potato chips on Amazon for less than $15, but Amazon itself is struggling to get its hands on the computer chips it needs to run its business.

Its solution is very Amazon: The company is doubling down on efforts to become a sizable player in the semiconductor business, according to a Financial Times report, with its latest chip set to roll out soon. The idea is to reduce its reliance on chipmaking giant Nvidia and, in the process, offer Amazon Web Services clients an alternative.

Chip Shot

Amazon read the tea leaves on the chipmaking boom early, acquiring Annapurna Labs, an Israeli chipmaking start-up with operations in Austin, Texas, back in 2015 for $350 million. Since then, the AI boom has created a major crunch for cloud computing providers. Amazon has long collaborated with Nvidia to serve AWS customers who need extra punch for AI tasks. But, like fellow major players in the cloud computing space (Microsoft, Google, Meta), it is prioritizing its own in-house chip infrastructure, too.

This year, Amazon is projected to drop around $75 billion in capital spending, largely on improving its tech infrastructure, and CEO Andy Jassy has suggested that could increase even more in 2025. That spending spree has resulted in Annapurna’s latest product, an AI chip called Trainium 2, which is expected to be formally announced and showcased next month, the FT reports. It could be the first step to chip, chip, chipping away at Nvidia’s market stranglehold:

- The company says building AI chips from the ground up to fit AWS infrastructure could keep costs down. One of Amazon’s current AI chips, Inferentia, is around 40% cheaper to run than Nvidia chips when generating responses from AI models.

- That might come with a performance tradeoff (Amazon doesn’t submit its chips for independent performance benchmarks), but lower performance at a lower cost might be a way to crack the market. Nvidia has around a 90% share of the AI chip market and, in its latest quarter, did around $26 billion in chip sales, close to AWS’ entire revenue for its latest quarter.

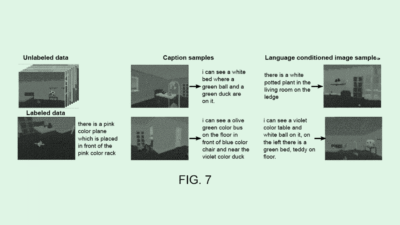

Cheap Seats: There may not need to be a premium on performance, anyhow. OpenAI’s latest model, Orion, has been an internal disappointment, Bloomberg reported, failing to prove itself as a marked improvement on GPT-4. Google and Anthropic have hit similar plateaus as the industry runs short on high-quality human-made training data. Almost makes you feel better about that pile of books you will, for sure, eventually, one day read.