Generative AI-ifying Alexa is Way Harder than Amazon Thought

The company’s struggle gives us a glimpse into how the wider world of voice assistants is trying to keep up with the technology zeitgeist.

Who’d have thought a brain transplant would be so tricky?

According to a Financial Times report published Tuesday, Amazon’s attempt to bring Alexa into the zeitgeist by replacing the voice assistant’s “brain” with generative AI software has traveled an extremely bumpy road. Sources told the FT that weaving generative AI into Alexa’s operating system has been incredibly challenging, and the company’s struggle gives us a glimpse into how the wider world of voice assistants is trying to keep up with the technology du jour.

The Operation Behind the Brains

According to the FT, Amazon has spent the last two years on the project, which aims to make Alexa a far more capable virtual assistant, able to understand and react to a wider range of queries or requests. It’s a logical step for voice assistants: Alexa and Siri have to move with the times just like the rest of us, and while companies like Amazon and Apple don’t exactly have first-mover advantage, they do have the advantage of having already placed the hardware these assistants run on in our homes and pockets.

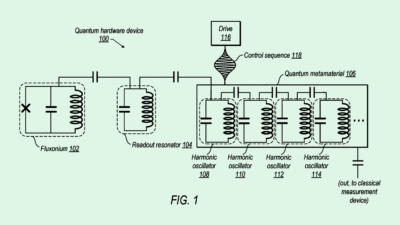

Switching from Alexa’s old software to large language models (LLMs) has been extremely complex, however, and more so than Amazon was expecting, sources told the FT. The company is using more than one LLM, integrating Anthropic’s Claude on top of Amazon’s in-house software, and the technical roadblocks it has encountered include:

- Latency: If you ask Alexa a question, you expect an answer pretty quickly, but doing that with at least two LLMs working behind the scenes is difficult — at least, it is if you’re Amazon and you don’t want to be losing money on all the compute required to make that answer come back fast. “When you’re trying to make reliable actions happen at speed […] you have to be able to do it in a very cost-effective way,” Rohit Prasad, head of the artificial general intelligence (AGI) team at Amazon, told the FT.

- Cost-effectiveness: With regard to Alexa, that’s a sensitive topic for Amazon, as the division that makes the smart speakers which Alexa runs on has historically been a money-loser. At the moment, there’s a pretty fine tightrope between the money-making and money-bleeding powers of generative AI: Earlier this month, OpenAI CEO Sam Altman said that the company’s “Pro” subscription for ChatGPT, which costs $200 per month and gives users unlimited access to an upgraded version of ChatGPT, is losing money.

- Alexa in the Sky With Diamonds: There’s also the risk that a generative AI-ified Alexa might “hallucinate,” as is the habit of generative AI so far. One source who was a former member of the Alexa team told the FT that fabricated answers were a big risk for Alexa’s brand. “At the scale that Amazon operates, that could happen large numbers of times per day,” this person said.