Microsoft Seeks Patent to Limit Hallucinations in AI

Along with mitigating hallucinations, this tech creates an audit trail for more transparency between the model and its users.

Hallucinations in AI models are unavoidable, but tech firms are looking at ways to minimize the damage.

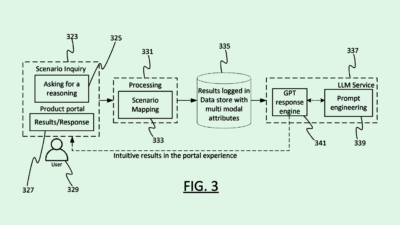

Microsoft is seeking to patent a system for “identifying hallucinations in large language model output.” Rather than trying to stop these models from producing incorrect outputs altogether, Microsoft’s tech aims to monitor their responses and catch errors when they occur.

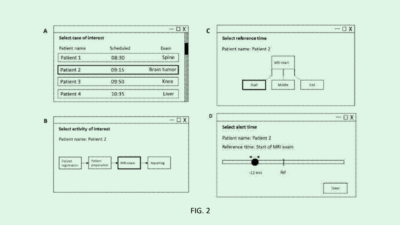

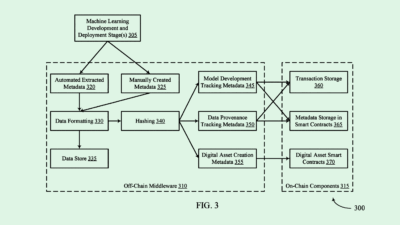

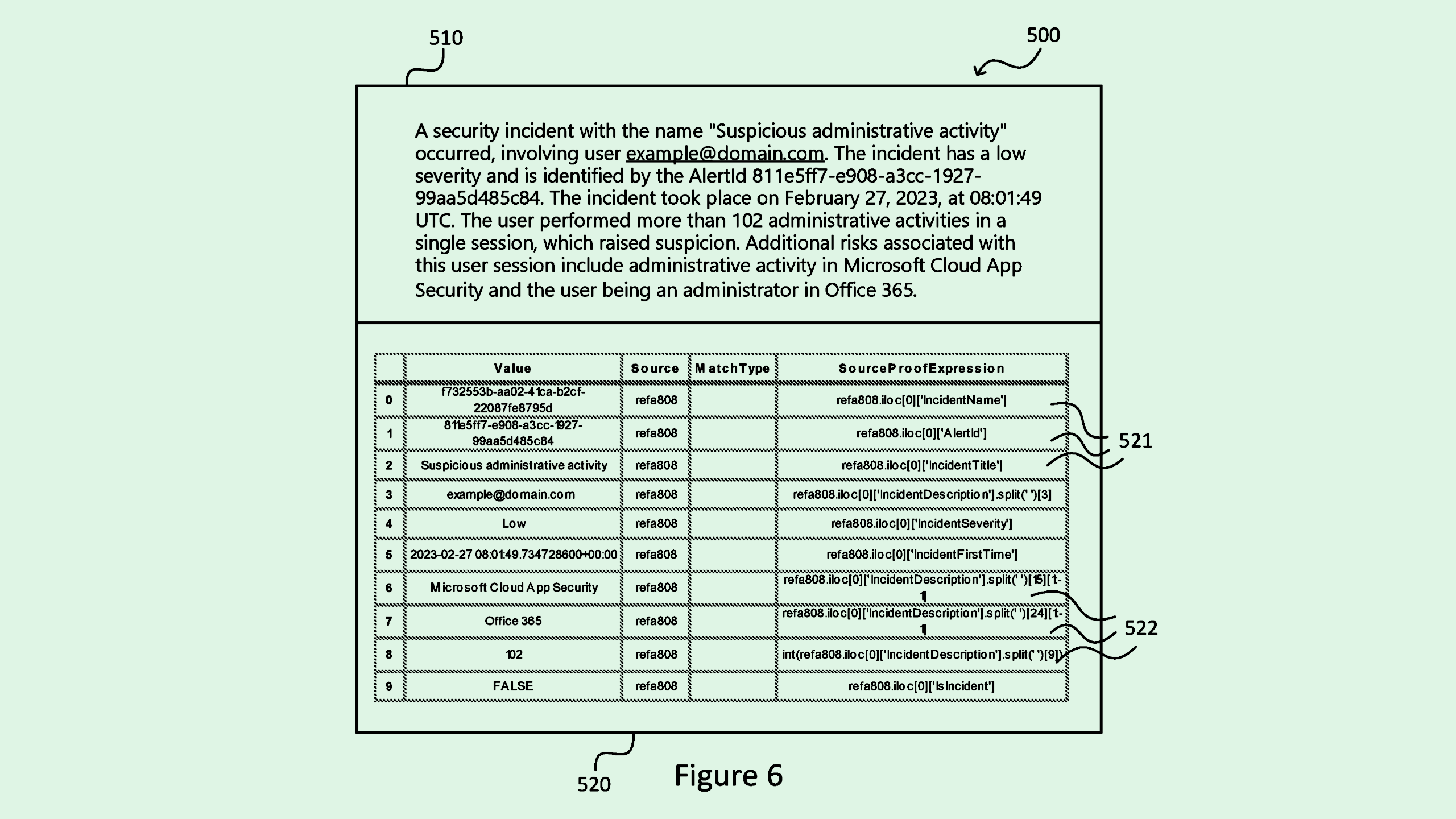

The system relies on the principles of explainable AI, the idea that models should be able to show their work. When a query is submitted to an AI model, Microsoft’s system would submit a verification request alongside it, asking the model to provide data on exactly how it formulated its answer.

A separate system then analyzes the verification data to make sure that the response was “validly derived” from the query, ensuring that the answer not only makes sense but is traceable. Along with mitigating hallucinations, the tech creates an audit trail for more transparency between the model and its users.
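The filing, as described here, doesn’t say how the separate system decides whether a response was “validly derived,” so the Python below is only a sketch of the general flow under stated assumptions, not Microsoft’s method. It assumes the model returns its verification data as a list of claims, each mapped to a quoted source passage, and it swaps in a simple stand-in check: a claim counts as traceable only if the passage it cites actually appears in the source documents. `VERIFICATION_PROMPT`, `VerificationData`, `AuditRecord`, `build_request` and `validate` are hypothetical names.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical instruction sent alongside every query; the wording is an assumption.
VERIFICATION_PROMPT = (
    "For each factual claim in your answer, quote the source passage it was derived from."
)


@dataclass
class VerificationData:
    """What the model returns when asked to show its work (assumed format)."""
    claims: list[str]          # statements the answer makes
    evidence: dict[str, str]   # claim -> passage the model says supports it


@dataclass
class AuditRecord:
    """One entry in the audit trail for a single query/answer pair."""
    query: str
    answer: str
    validly_derived: bool
    unsupported_claims: list[str]
    checked_at: str


def build_request(query: str) -> dict[str, str]:
    """Pair the user's query with a verification request."""
    return {"query": query, "verification_request": VERIFICATION_PROMPT}


def validate(query: str, answer: str, verification: VerificationData,
             sources: list[str]) -> AuditRecord:
    """Stand-in validator: flag any claim whose cited evidence can't be traced to a source."""
    unsupported = []
    for claim in verification.claims:
        passage = verification.evidence.get(claim)
        # Traceable only if the model cited a passage that really occurs in the sources.
        if not passage or not any(passage in doc for doc in sources):
            unsupported.append(claim)
    return AuditRecord(
        query=query,
        answer=answer,
        validly_derived=not unsupported,
        unsupported_claims=unsupported,
        checked_at=datetime.now(timezone.utc).isoformat(),
    )


if __name__ == "__main__":
    audit_log: list[AuditRecord] = []
    sources = ["The Eiffel Tower is in Paris and was completed in 1889."]
    request = build_request("Where is the Eiffel Tower, and how tall is it?")
    # Pretend the model answered and returned this verification data.
    answer = "It is in Paris and is 500 meters tall."
    verification = VerificationData(
        claims=["It is in Paris.", "It is 500 meters tall."],
        evidence={"It is in Paris.": "The Eiffel Tower is in Paris"},
    )
    record = validate(request["query"], answer, verification, sources)
    audit_log.append(record)  # each check becomes a traceable audit entry
    print(record.validly_derived, record.unsupported_claims)
```

Running it prints `False ['It is 500 meters tall.']`: the height claim has no traceable evidence, so it is flagged, and the resulting `AuditRecord` is the kind of entry that would accumulate into an audit trail. A real validator would need looser matching than an exact substring check, since a model’s quotes may not match the source character for character.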

As tech firms make their AI models bigger and more powerful, they have been grappling with hallucinations, which are an inherent part of how these models work. Microsoft has sought similar patents in the past, and last year released a product called “Correction,” which it claimed could catch potential hallucinations by comparing model outputs against source material. Google added a similar grounding feature to its Vertex AI platform in 2024. Experts, however, warned at the time that eliminating hallucinations from AI entirely is practically impossible.

Identifying mistakes as they happen may prove to be the best tool in tech firms’ arsenal for addressing the problem. Ensuring as much accuracy as possible is especially vital as agentic AI, which aims to let humans loosen their grip on the reins, makes its way into enterprises.