Microsoft Wants to Solve Inaccuracy, Hallucination in AI Models

Hallucination in AI is a pervasive, core issue that might not be easily solvable.

Microsoft wants to make AI models more accurate.

The company filed a patent application for “enriching language model input with contextual data.” The approach aims to help Microsoft’s models offer more accurate responses and predictions by feeding them domain-specific data as part of the input.

“Existing [natural language processing]-based technologies are incomplete or inaccurate,” Microsoft said in the filing. “One reason is because existing language models, such as Large Language Models (LLM), often make predictions without adequate contextual data.”

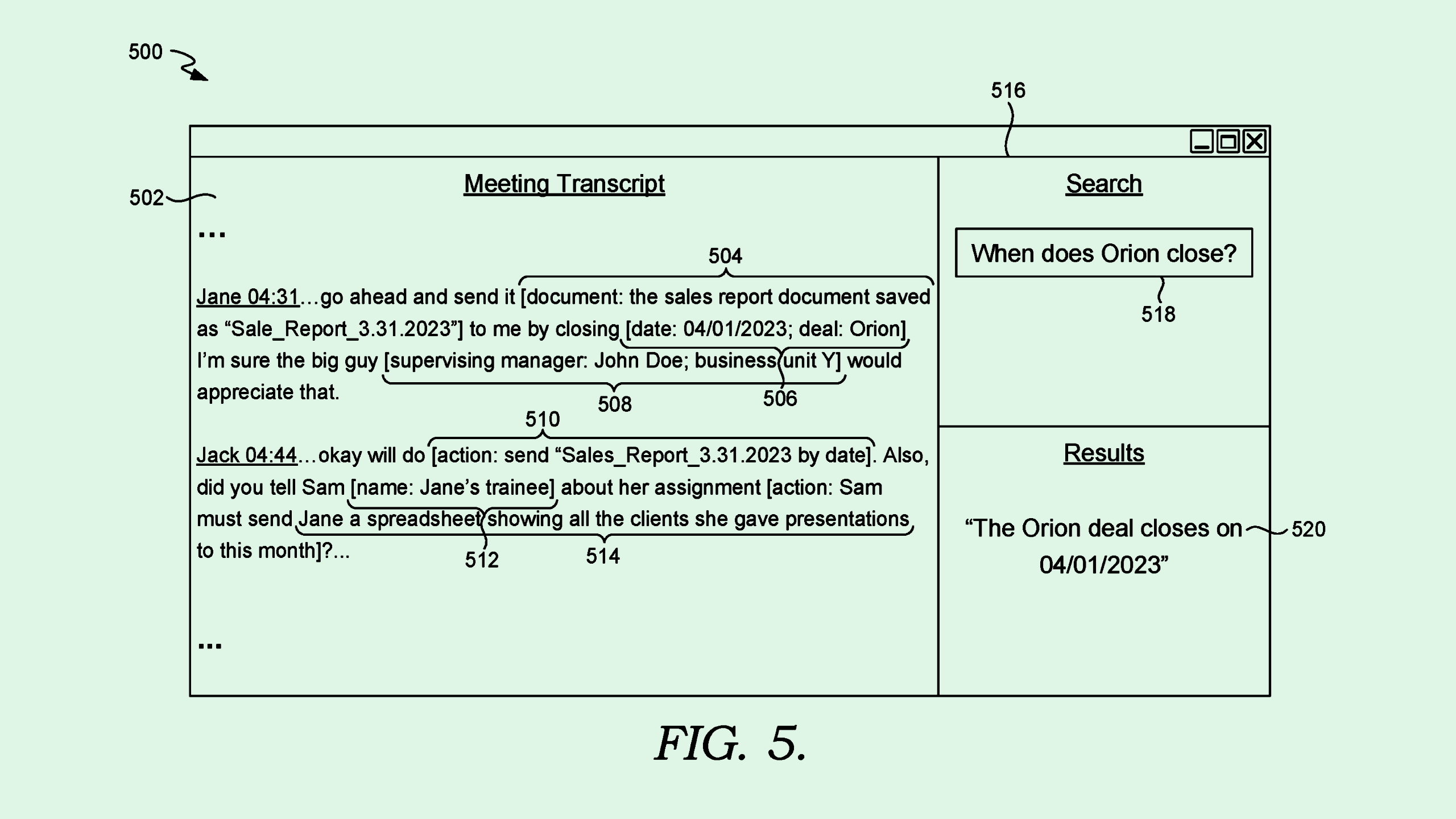

To give its language models a bit more context, Microsoft’s tech introduces “corpus data supplements,” or extra information that’s specific to the needs of the organization, as inputs for the model. These supplements can include metadata or tags that help distinguish general information from specific knowledge.

For example, if a project is called “Red Sea,” and a person asks their AI assistant, “What is the status of Red Sea?” that term may be tagged in Microsoft’s system as “[project name: business unit X],” so that the assistant doesn’t answer with information about the body of water instead. Microsoft noted that this tech makes AI more accurate and wastes fewer computational resources on unnecessary queries.
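The tagging idea can be illustrated with a small sketch. This is not Microsoft's implementation; the filing's actual mechanism isn't detailed here. The glossary `CORPUS_SUPPLEMENTS`, the function `enrich_query`, and the bracketed tag format are all hypothetical names chosen to mirror the “Red Sea” example, assuming a simple lookup of organization-specific terms before the query reaches a model.

```python
import re

# Hypothetical organization glossary mapping a term to its tag.
# The names and tag format are illustrative assumptions, not from the filing.
CORPUS_SUPPLEMENTS = {
    "Red Sea": "project name: business unit X",
}


def enrich_query(query: str, supplements: dict[str, str]) -> str:
    """Append a bracketed tag after each known term found in the query."""
    enriched = query
    for term, tag in supplements.items():
        # Match the term as a whole phrase, ignoring case.
        pattern = re.compile(rf"\b{re.escape(term)}\b", flags=re.IGNORECASE)
        # Keep the user's original wording and add the tag right after it.
        enriched = pattern.sub(
            lambda m, tag=tag: f"{m.group(0)} [{tag}]", enriched
        )
    return enriched


if __name__ == "__main__":
    print(enrich_query("What is the status of Red Sea?", CORPUS_SUPPLEMENTS))
```

In this sketch, the enriched string would be passed to the language model in place of the raw question, so the model sees the organization-specific meaning alongside the term. A query with no glossary terms passes through unchanged.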

With Microsoft’s primary use case for AI being a co-pilot to supercharge productivity, it makes sense that the company would look for ways to make its outputs more relevant and accurate for whatever company or organization is using it. This also isn’t the first time that Microsoft has sought to use context and extra data to make its AI better.

Additionally, the company claims it has found a way to fix its AI models’ missteps when they do occur. Last week, the company unveiled a tool called “correction,” which attempts to catch and fix inaccurate AI-generated text by comparing it to source material. The feature can be used with the output of any large language model.

“Empowering our customers to both understand and take action on ungrounded content and hallucinations is crucial, especially as the demand for reliability and accuracy in AI-generated content continues to rise,” Microsoft said in its announcement.

Microsoft isn’t the only company that has tried to figure out what to do when AI models hallucinate. Google introduced a similar feature earlier this year in Vertex AI, its AI development platform.

The problem, however, is that eliminating hallucination from AI is nearly impossible. Given that Microsoft’s tech uses AI to help fix AI, it’s possible that those models, too, could hallucinate, causing more harm than good. One expert told TechCrunch that hallucination is “an essential component of how the technology works.”