Meta To Try Out AI Business Tools as Enterprise Market Heats Up

A patent for AI chip architecture from Meta could make model development more robust as it builds AI tools for businesses.

Meta is powering up its AI capabilities for business users.

The company is seeking to patent what it calls “chip-to-chip interconnect with a layered communication architecture.” Put simply, this is a hardware patent that could make AI systems more reliable, scalable and efficient at handling complex tasks.

“Large numbers of processing units are oftentimes connected for large-scale computing tasks,” Meta said in the filing. “However, these processing units are oftentimes connected in a manner that does not scale well with network speed growth and/or involves cumbersome communication protocols.”

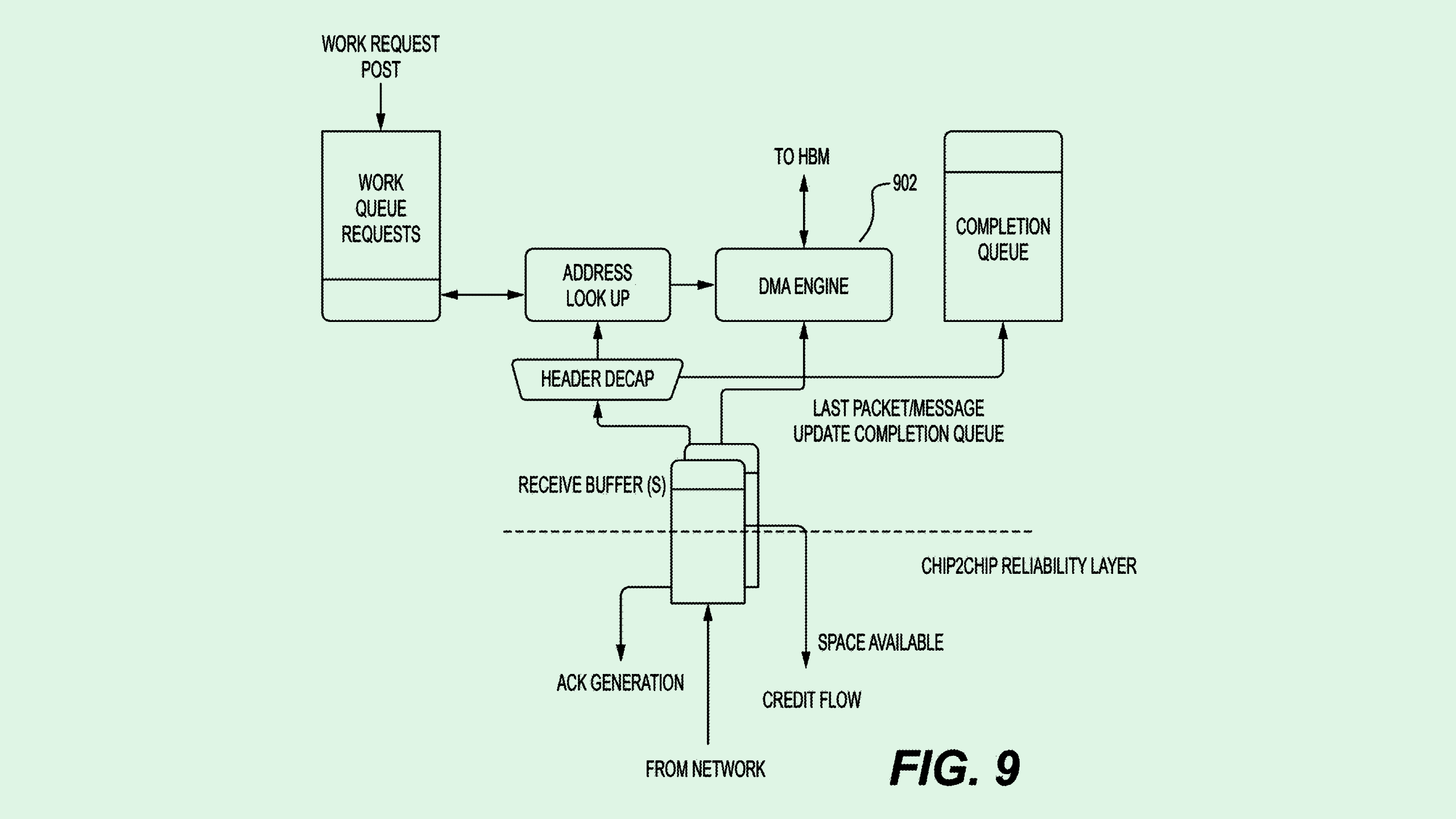

Meta’s patent is quite technical, but to break it down: Several integrated circuit packages each contain AI chips and a “chip-to-chip interconnect communication unit,” which manages the data exchange from one bundle of chips to the next.

This communication relies on “ethernet protocols,” which are known for the reliable, high-speed exchange of data. These protocols are implemented through a “layered communication architecture,” which splits communication into several tiers to make the process more efficient. Each tier is responsible for a different part of the data transfer process: one handles data transmission, another handles data integrity, and another manages final delivery.
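To make the tiered idea concrete, here is a minimal Python sketch of how layers can wrap one another. It is an illustration only, not the design in Meta's filing: the function names, the 4-byte sequence number, and the use of CRC32 as the integrity check are all assumptions made for the example.

```python
import zlib
from typing import Tuple

# Hypothetical layout: [4-byte sequence number][message][4-byte CRC32].
# None of these names or sizes come from the patent filing.

def delivery_wrap(seq: int, message: bytes) -> bytes:
    """Delivery tier: prefix a sequence number so the receiver can order messages."""
    return seq.to_bytes(4, "big") + message

def delivery_unwrap(packet: bytes) -> Tuple[int, bytes]:
    """Delivery tier: split the sequence number from the message."""
    return int.from_bytes(packet[:4], "big"), packet[4:]

def integrity_wrap(packet: bytes) -> bytes:
    """Integrity tier: append a CRC32 checksum of the packet."""
    return packet + zlib.crc32(packet).to_bytes(4, "big")

def integrity_unwrap(frame: bytes) -> bytes:
    """Integrity tier: verify the checksum and strip it, or raise on corruption."""
    packet, checksum = frame[:-4], frame[-4:]
    if zlib.crc32(packet).to_bytes(4, "big") != checksum:
        raise ValueError("corrupted frame")
    return packet

def send(seq: int, message: bytes) -> bytes:
    """Build the bytes that the transmission tier would put on the wire."""
    return integrity_wrap(delivery_wrap(seq, message))

def receive(frame: bytes) -> Tuple[int, bytes]:
    """Undo the tiers in reverse order on the receiving side."""
    return delivery_unwrap(integrity_unwrap(frame))

# Round trip through both tiers.
seq, message = receive(send(7, b"tensor shard"))
```

Because each tier only touches its own header or trailer, one tier can be swapped out (a stronger checksum, for instance) without changing the others, which is the usual argument for layering.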

Finally, Meta’s tech uses what’s called “credit-based flow control,” which makes sure that data is only exchanged when systems have the capacity to receive it. Any data that’s lost or corrupted in the process of being transferred between circuit packages is retransmitted.
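Credit-based flow control is a general networking technique, and a toy Python model shows the basic idea. This is a sketch under stated assumptions, not Meta's implementation: the `Sender` and `Receiver` classes and their methods are hypothetical, one credit stands for one free buffer slot, and retransmission of lost or corrupted data is left out for brevity.

```python
from collections import deque

class Receiver:
    """Hypothetical receiver with a fixed number of buffer slots."""

    def __init__(self, buffer_slots: int):
        self.buffer_slots = buffer_slots
        self.buffer = deque()

    def accept(self, packet: bytes) -> None:
        # With correct credit accounting this never overflows.
        assert len(self.buffer) < self.buffer_slots, "buffer overrun"
        self.buffer.append(packet)

    def drain(self, n: int) -> int:
        """Process up to n buffered packets and return how many slots were freed."""
        freed = 0
        while self.buffer and freed < n:
            self.buffer.popleft()
            freed += 1
        return freed

class Sender:
    """Hypothetical sender that transmits only while it holds credits."""

    def __init__(self, initial_credits: int):
        self.credits = initial_credits
        self.pending = deque()

    def queue(self, packet: bytes) -> None:
        self.pending.append(packet)

    def pump(self, receiver: Receiver) -> int:
        """Send queued packets until credits run out; return the number sent."""
        sent = 0
        while self.pending and self.credits > 0:
            receiver.accept(self.pending.popleft())
            self.credits -= 1  # each packet consumes one credit
            sent += 1
        return sent

    def grant(self, n: int) -> None:
        """The receiver returns credits once it has freed buffer slots."""
        self.credits += n

# The receiver advertises 2 slots, so the sender starts with 2 credits.
receiver = Receiver(buffer_slots=2)
sender = Sender(initial_credits=2)
for i in range(5):
    sender.queue(f"packet-{i}".encode())

sender.pump(receiver)             # sends 2 packets, then stalls with 0 credits
sender.grant(receiver.drain(1))   # receiver frees 1 slot and returns 1 credit
sender.pump(receiver)             # exactly 1 more packet goes out
```

The sender never transmits more than the receiver has room for, so packets are not dropped because of a full buffer; the sender simply waits until credits come back.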

What Meta’s system can do matters more than the tech itself: It makes communication between AI chips more robust so that they can handle increasingly complex and data-intensive tasks.

By creating architecture that promotes seamless communication between GPUs, tech like this could broadly support Meta’s AI efforts, whether it be building out its artificial reality tech or creating a robust foundation for Llama, its group of language models, said Thomas Randall, advisory director at Info-Tech Research Group.

“If you end up with a model where you’re bringing in new chips that can’t communicate with your legacy ones, then you’ve lost a lot there,” said Randall. “[This can] maintain the seamless flow of data as new chips are being created.”

And Meta’s AI work may soon take a turn into enterprise: The company hired Clara Shih, former CEO of AI at Salesforce, to lead its new Business AI unit, which will focus on building AI tools for businesses that use Meta’s apps to reach consumers.

“Our vision for this new product group is to make cutting-edge AI accessible to every business, empowering all to find success and own their future in the AI era,” Shih wrote in a LinkedIn post last week.

It’s clear why Meta may be turning its attention to business tools, said Randall: The company has spent a whole lot of cash building strong foundational models to rival the likes of OpenAI, Google and Anthropic. Now, “they’ve got to show returns in some way,” he said. “The natural next step is to try and commercialize [its models].”

Meta, however, may be a little late to the game. The market is flooded with enterprise AI tools from Big Tech and startups alike. While Meta has the advantage of Llama being an extremely capable language model, Randall said, “they don’t have the first-mover advantage.”

“[Meta] needs to showcase what the unique value proposition is of the Llama model versus OpenAI or Gemini or all these other ones,” said Randall. “It might be better in some senses. But is that marginal, or is that significant enough to make this switch?”