Like the perfect beer pong technique, cutting corners in college is a true liberal art.

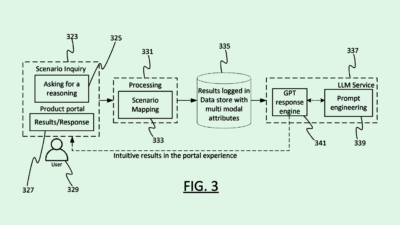

But collegiate rule-breakers have never had a tool quite like ChatGPT, an ultra-smart artificial intelligence program that can write convincing, human-like text with just a few quick prompts. In response, universities across the country are scrambling to safeguard the academic sanctity of term papers.

Paper Cuts

Developed by Microsoft-backed OpenAI, ChatGPT has been heralded as a breakthrough moment for artificial intelligence. After its release in late November, the program quickly amassed over one million users, who can direct it to write jokes and TV episodes or even compose music, like a polymath grad student. Elon Musk, who is also an OpenAI investor, has called ChatGPT “scary good,” while others have criticized its output as inaccurate and lacking in nuance.

For schools and universities around the globe, ChatGPT raises the stakes in the ever-evolving war against plagiarism:

- Annie Chechitelli, chief product officer at anti-plagiarism software maker Turnitin, told the FT the company is developing a tool to help educators determine whether work has “traces” of the AI tool, warning of a coming “arms race” in the battle to detect cheaters.

- Others have warned that automation tools can blunt the development of creative skills. A recent Rutgers study showed that students who relied on Google to answer homework questions performed worse on exams.

Not so Testy: Not everyone views ChatGPT as academic armageddon. Lauren Klein, an associate professor in the Departments of English and Quantitative Theory and Methods at Emory University, is unfazed: “There’s always been this concern that technologies will do away with what people do best, and the reality is that people have had to learn how to use these technologies to enhance what they do best.” In other words, ChatGPT and its AI ilk may not be as smart as they think.