Happy Thursday, and welcome to CIO Upside.

Today: Where could artificial general intelligence fit into your enterprise – and is less really more? Plus: Tech giants seek to bring AI into the physical world; and Wells Fargo wants to sniff out deepfakes.

Let’s jump in.

Big Tech’s AGI Dreams Overshadowed by Smaller, In-Demand AI Advances

Does your model really need to be able to do anything and everything?

Though AI firms have long chased the elusive concept of artificial general intelligence, or a model that’s capable of matching human intelligence in virtually all domains, some may be questioning the validity of that dream: OpenAI CEO Sam Altman told CNBC last week that “AGI” is “not a super useful term,” especially since its definition varies between companies and developers.

“The point of all of this is it doesn’t really matter and it’s just this continuing exponential of model capability that we’ll rely on for more and more things,” Altman told CNBC.

So why is OpenAI seemingly backing away from its long-time mission? Possibly because large language models might not be the way to achieve AGI. Yann LeCun, Meta’s chief AI scientist and widely regarded as one of the godfathers of AI, said in an interview with Big Technology’s Alex Kantrowitz that “human-level AI” won’t be achieved by simply scaling up large language models.

OpenAI, meanwhile, is a “great large language model factory,” said Bob Rogers, chief product and technology officer of Oii.ai and co-founder of BeeKeeper AI. “Their product is LLMs right now. Maybe they’re starting to think that it might be a longer time frame to get to that next set of capabilities, and so they need to be selling what they’ve got.”

As it stands, we’re far off from AGI becoming a reality, said Rogers, with our strongest systems still missing the mark on several metrics. “At least to the extent that we haven’t gotten to AGI yet, it’s a useful term — there’s a bar there,” said Rogers.

- One key capability missing among large language models in particular is consistency in problem solving and decision-making, said Rogers. Because these models are unpredictable, the quality of output can differ wildly, even if input prompts are similar.

- Another is that these models don’t actually know facts, said Rogers, but are instead performing word association to provide the most likely answer. “That leads to the next gap, which is then reasoning, because you can’t really reason on facts until you know facts,” he said.

- Additionally, large language models don’t have a great grasp of human emotion, he said, and often lack an understanding of the “linguistic nuance” of things like sarcasm.

If developers can overcome those challenges, AGI may prove useful to enterprises, said Rogers. Currently, agentic AI is all the rage, with tech firms promising specialized agents to handle tasks throughout operations. But as those agentic use cases start to scale, they can quickly become difficult to manage. In theory, that’s where AGI could come in, acting as a singular, central multitool, Rogers said.

“I think there is a practical consideration that, even if I have 20 agents that can do the 20 things I need to have happen in my organization, that can be harder to manage than having a single system,” he added.

But even setting aside the massive technical barriers AGI still faces, the biggest obstacle for enterprises would likely be cost, said Rogers, especially for small and mid-sized companies that are already struggling with the high price of AI. Most are probably better off using small, fine-tuned models that can run within their own infrastructure.

“Right now, tuned models give a lot of benefits in terms of understanding my context and my documents and my needs,” said Rogers. “Why do I want one giant multitool with a million things sticking out of it that’s heavy and awkward? Where that AGI multitool becomes really valuable is a couple generations away.”

Are Your AI Agents A Data Breach Waiting To Happen?

Teams are deploying AI agents with broad API access and minimal oversight. But what starts as helpful automation can quickly become a security incident.

The problem isn’t the AI: it’s something called the “infrastructure gap.” Most teams simply bolt agents onto existing systems without proper boundaries, audit trails, or access controls. A misconfigured agent can delete vital files, trigger unwarranted financial transactions, or expose sensitive data, all because no one defined what it should or shouldn’t be able to do.

WorkOS addresses this systematically with:

- Machine-to-machine authentication that verifies every agent request.

- Token-based permissions that restrict what agents can access.

- Real-time monitoring that logs and audits agent activity.

Fast-moving teams use WorkOS to build secure agent workflows without having to rebuild security infrastructure.

How AI-Powered Robotics Face Obstacles in Real-World Scenarios

Tech firms want to bring AI into the real world, but AI and the physical world may not be ready for each other just yet.

Nvidia this week announced a host of new models, libraries and infrastructure for robotics developers. One model, called Cosmos Reason, is a 7-billion-parameter vision language model offering “world reasoning” for synthetic data curation, robotic decision-making and real-time video analytics.

“By combining AI reasoning with scalable, physically accurate simulation, we’re enabling developers to build tomorrow’s robots and autonomous vehicles that will transform trillions of dollars in industries,” Rev Lebaredian, vice president of Omniverse and simulation technologies at Nvidia, said in the announcement.

This isn’t the first time Nvidia and other firms have sought to bring powerful AI models into robotics contexts. In June, Google DeepMind introduced Gemini Robotics On-Device, a “vision language action” model that it claims can help a robot adapt to new domains with between 50 and 100 demonstrations. Plus, both Google and Nvidia have filed plenty of patent applications for AI-powered robotics tools, including data simulation, control and safety measures.

Still, deploying AI – particularly powerful, foundational language models – in robotics contexts comes with a number of obstacles in materials science, mechanical engineering and software, said Ariel Seidman, CEO of edge AI firm Bee Maps.

- While it’s possible to teach a robot to do a very specific task, creating a general-purpose robot, controllable by a large language model and capable of handling a wide array of tasks, is a “much more open-ended problem,” said Seidman.

- “There’s almost like an endless number of scenarios that it has to deal with,” said Seidman. That unpredictability can be difficult to account for in training.

“It’s just a totally uncontrolled environment where I don’t think people are going to have the patience for robots that are constantly misbehaving or not meeting expectations,” said Seidman.

Logistics, factories and warehouse environments may be a good place to start for a number of reasons. For one, the environments themselves are often tightly regulated, so the robots operating in them will face less unpredictability. These environments are also a great way to build up a “massive corpus of data” at scale with fewer privacy concerns than in public or personal use cases, said Seidman.

“There’s all the etiquette, combined with the utility that needs to be embedded within a robot,” said Seidman. “You need that kind of data so that robots can understand how to respond to a human in these situations.”

Wells Fargo Patent Highlights Fatal Flaw in Deepfake Detection Models

Can you tell when it’s a bot at the other end of the line?

Wells Fargo wants to help you decipher what’s real and what’s fake. The company filed a patent application for “synthetic voice fraud detection,” tech that attempts to pick up on deepfakes even if they’re based on authentic vocal samples.

“Advances in AI technology have made it possible to create highly convincing synthetic voices that can mimic real individuals,” Wells Fargo said in the filing. “As synthetic voice technology becomes more sophisticated, detecting synthetic voices becomes more difficult.”

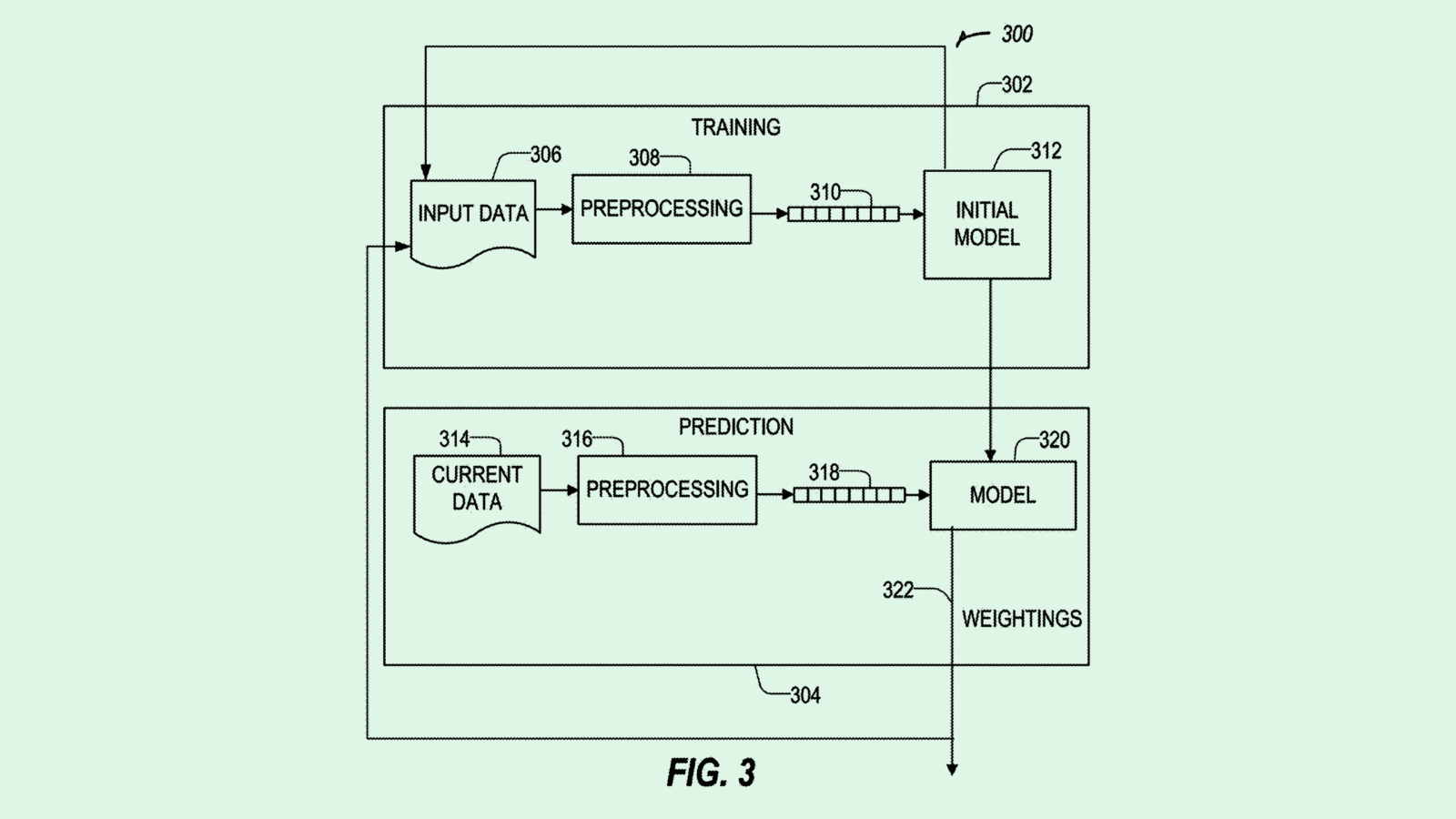

Wells Fargo’s system first collects samples of people’s voices and normalizes them, cutting out background noise and adjusting the volume if necessary. The system then generates an artificial clone of that person’s voice, feeding both to a machine-learning model for training.

From this, the model learns to spot subtle indicators and inconsistencies between the real and synthetic voice clips. This could include detecting audio artifacts from generation algorithms, unnatural variations in pitch or timing, or differences in the patterns of background noises or harmonic structure.
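For readers curious what the normalization step might look like in practice, here is a minimal Python sketch. It assumes audio arrives as a list of floating-point samples between -1.0 and 1.0, and it swaps in two simple, generic techniques: a noise gate as a stand-in for background-noise removal and RMS (root-mean-square) scaling for volume adjustment. The function name and parameter values are hypothetical and are not drawn from Wells Fargo’s filing.

```python
import math

def normalize_clip(samples, target_rms=0.1, gate_threshold=0.01):
    """Crude preprocessing sketch: noise gate, then RMS volume normalization.

    Hypothetical helper with illustrative defaults; not the method in the
    Wells Fargo patent application.
    """
    # Noise gate: treat very quiet samples as background noise and silence them.
    gated = [s if abs(s) >= gate_threshold else 0.0 for s in samples]
    # Root-mean-square level of what remains (0.0 for an empty clip).
    rms = math.sqrt(sum(s * s for s in gated) / len(gated)) if gated else 0.0
    if rms == 0.0:
        return gated  # Only silence left; nothing to scale.
    gain = target_rms / rms
    # Scale toward the target level, clipping to the valid [-1.0, 1.0] range.
    return [max(-1.0, min(1.0, s * gain)) for s in gated]

clip = [0.005, 0.2, -0.2, 0.2, -0.2]
print(normalize_clip(clip))
```

A production system would more likely use spectral noise reduction than a hard gate, since a gate also silences the quiet parts of speech that a detector might need.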

Wells Fargo is far from the first company seeking to tackle deepfakes. Sony filed a patent application that sought to use blockchain for deepfake detection, Google’s patent history includes a “liveness” detector for biometric security, and Intel sought to patent a way to make deepfake detectors more racially inclusive.

The fatal flaw with synthetic content detection systems, however, is one that’s present throughout the cybersecurity industry: When you build a 10-foot wall, threat actors will combat it with a 12-foot ladder. And defenses take far longer to build than they do to break.

This point is especially relevant as AI continues giving threat actors more ammo. With the tech providing the power to create more realistic deepfakes, detection tools can become outdated more quickly than ever. As Joshua McKenty, co-founder and CEO of Polyguard, told CIO Upside last week: “Every tool you build today, you’re putting into the hands of an attacker.”

Extra Upside

- Tracking Chips: U.S. authorities have reportedly embedded trackers in chip shipments to catch diversions to China, according to Reuters.

- Talent Wars: Anthropic has acquired most of the team behind AI management platform Humanloop to strengthen its enterprise strategy.

- Bring Structure to AI Agent Access. WorkOS delivers enterprise-ready access controls, audit logs, and permission limits built to secure AI agent workflows. See how teams limit what agents can access without slowing down their work. Make your agents secure and scalable.*

* Partner

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.