Intel Filing Could Diversify Deepfake Detection Models

The filing joins several patents from tech companies aimed at tackling the deepfake problem as generative AI proliferates.


Intel wants its AI models to identify all manner of deepfakes.

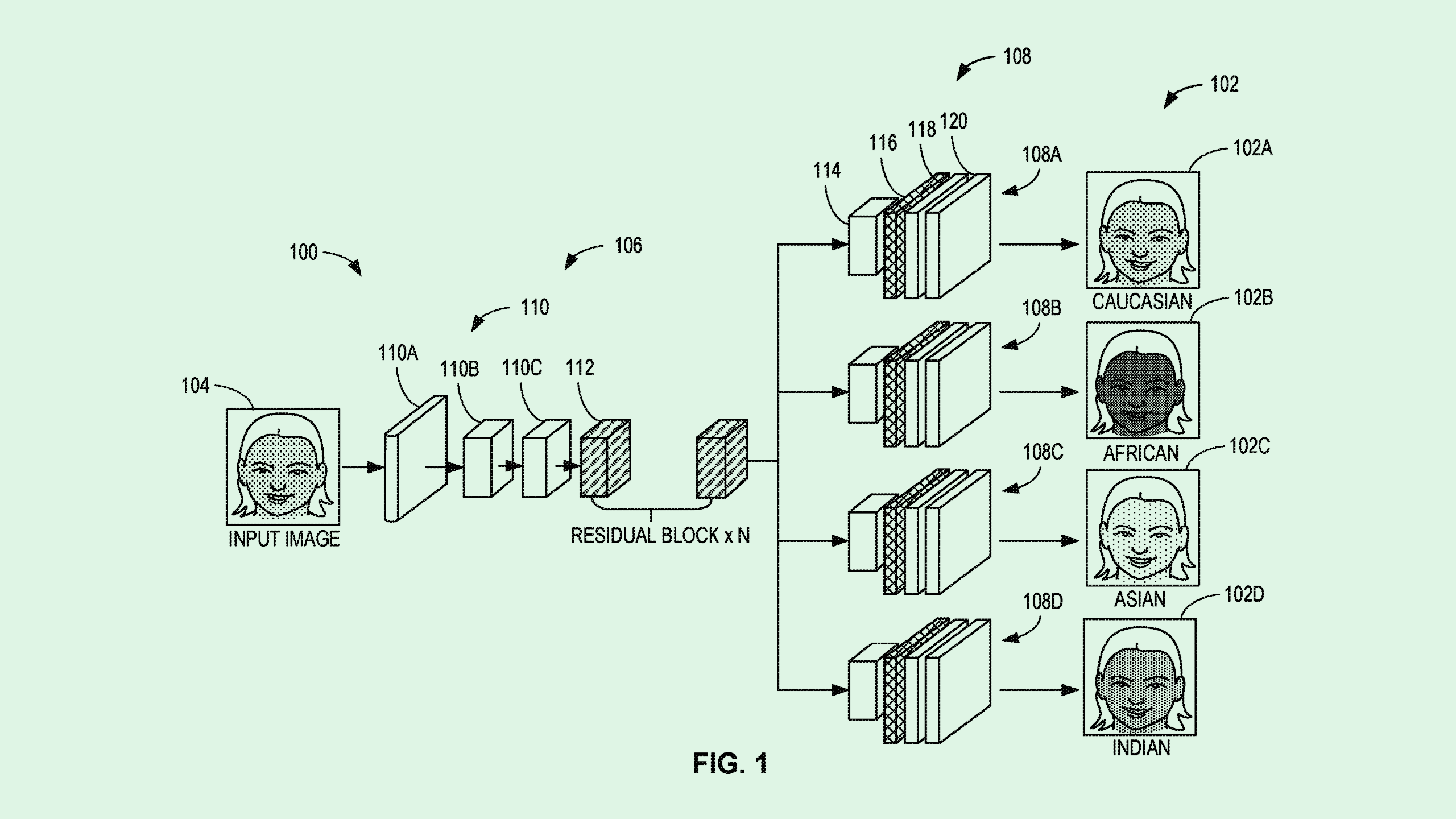

The company filed a patent application for systems to “augment training data” based on diverse sets of synthetic images. This tech uses generative AI to create deepfakes representative of “diverse racial attributes,” aiming to train its models to better detect a wider range of AI-generated imagery.

“Some training datasets include a disproportionate and/or insufficient number of images for one or more racial and/or ethnic domains,” Intel said in the filing. “The resulting deepfake detection algorithm may be biased and/or inaccurate when trained based on the training dataset.”

Intel’s tech relies on a generative AI model to transfer “race-specific” features from a reference image to an input image, creating a synthetic image that’s a hybrid of the two (though Intel doesn’t specify which facial features the tech may modify). Once the synthetic image is created, the system uses what Intel calls a “discriminator network,” which labels the race of the subject in the image and whether or not the image is synthetic.

Those synthetic images are fed back into the initial training dataset, aiming to create a more robust deepfake detection algorithm. “Augmenting the training dataset with the synthetic image(s) … may reduce bias and/or improve accuracy of the deepfake detection algorithm,” Intel notes in the filing.
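To make the augmentation loop more concrete, here is a minimal Python sketch. It is not Intel’s method, and everything in it is a labeled assumption: `Sample`, `augment_to_balance`, `transfer` (a hypothetical stand-in for the generative model that moves features from a reference image onto an input image) and `accept` (a hypothetical stand-in for the discriminator network’s check) are illustrative names, images are represented as plain strings, and “balanced” is assumed to mean every domain reaches the size of the largest one. The filing, as described, does not say how many synthetic images are generated or how the discriminator’s labels are used to filter them.

```python
import random
from collections import Counter
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Sample:
    image: str          # hypothetical stand-in for pixel data
    domain: str         # hypothetical demographic "domain" label
    is_synthetic: bool  # True for generated (fake) images


def augment_to_balance(
    dataset: List[Sample],
    transfer: Callable[[str, str], str],  # hypothetical generator: (input, reference) -> hybrid
    accept: Callable[[str, str], bool],   # hypothetical discriminator check: (image, domain) -> keep?
    seed: int = 0,
    max_attempts_per_domain: int = 1000,
) -> List[Sample]:
    """Add synthetic samples until every domain matches the largest domain's count.

    Assumption: a hybrid built from a reference image in domain D is labeled D,
    and is kept only if the discriminator stand-in agrees.
    """
    rng = random.Random(seed)
    counts = Counter(s.domain for s in dataset)
    if not counts:
        return []
    target = max(counts.values())
    augmented = list(dataset)

    for domain, count in counts.items():
        references = [s for s in dataset if s.domain == domain]
        sources = [s for s in dataset if s.domain != domain]
        needed = target - count
        attempts = 0
        # Generate hybrids for under-represented domains, capping attempts so a
        # discriminator that rejects everything cannot loop forever.
        while needed > 0 and sources and attempts < max_attempts_per_domain:
            attempts += 1
            ref = rng.choice(references)
            src = rng.choice(sources)
            candidate = transfer(src.image, ref.image)
            if accept(candidate, domain):
                # Generated images are fakes, so they enter the detector's
                # training set labeled as synthetic.
                augmented.append(Sample(candidate, domain, True))
                needed -= 1
    return augmented


if __name__ == "__main__":
    data = [Sample(f"a{i}", "A", False) for i in range(5)]
    data += [Sample(f"b{i}", "B", False) for i in range(2)]
    out = augment_to_balance(
        data,
        transfer=lambda src, ref: f"{src}+{ref}",
        accept=lambda img, d: True,
    )
    print(Counter(s.domain for s in out))
```

In the usage example, domain “B” starts with two images and the sketch adds three synthetic ones so both domains end up with five. A real system would replace the lambdas with trained models; the cap on attempts is a design choice here so that a strict discriminator cannot stall the loop.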

With the widespread proliferation of generative AI, plenty of firms have sought patents to take on the deepfake problem. Sony sought to patent a way to use blockchain to trace where synthetic images came from, Google filed an application for voice liveness detection, and a Microsoft patent laid out plans for a forgery detector.

“The technology is becoming so widespread now that it’s hard to know what the future is going to look like,” said Brian P. Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University. “It’s definitely going to be an arms race between generation and detection of them.”

But Intel’s patent highlights an important issue with image-based deepfake detection tech: To have a model that works for all faces, you need a diverse dataset. Facial recognition systems already tend to have a bias problem, often due to a lack of diversity in their training data. If training datasets don’t reflect the range of deepfakes a detector will need to catch, more synthetic images may slip through the cracks as the problem snowballs.

Though the patent seemingly has good intentions, Green noted, the tech includes a system that labels images by race, and race and ethnicity aren’t always easy to determine just by looking at an image. For example, a system like this may fail to identify deepfakes of people who are mixed race, he said. “It’s a simplification of human diversity that could be ethically problematic.”

This also isn’t the first time Intel has sought to patent systems aimed at making AI more reliable and responsible. Though the company’s primary business is making chips, it could be trying to give AI – and, in turn, itself – a good reputation, Green added.

“If AI in general gets this bad name because of deep fakes or other unethical behavior, then that could perhaps cause a backlash that would go all the way back to the chip industry,” Green said.