Sony May Use Blockchain to Track Down Deepfakes

Its latest patent application for “fake video detection” could use crypto’s underlying technology to fight misinformation and establish more trust in AI.

Sony may be looking at new ways to put blockchain technology to good use.

The corporation filed a patent application for “fake video detection using blockchain.” Sony’s tech uses AI image analysis and “image fingerprinting” to determine whether a video has been altered or faked from an original.

“Modern digital image processing, coupled with deep learning algorithms, presents the interesting and entertaining but potentially sinister ability to alter a video image of a person into the image of another person,” Sony said in the filing. The tech aims to address this by determining whether a video is genuine or AI-generated.

Sony’s system uses blockchain as a method of creating “digital fingerprints” of videos. Each block stores a “hash” of a video, which is essentially a digital verifier, along with metadata such as location or timestamp, to prove authenticity.
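To make the fingerprinting idea concrete, here is a minimal sketch of a block that stores a video hash alongside its metadata. The filing as described here does not name a hash function or block layout, so SHA-256, the field names, and the sample metadata are all assumptions for illustration only.

```python
import hashlib
import json


def fingerprint(video_bytes: bytes) -> str:
    """Return a SHA-256 hex digest of the raw video bytes (hash choice is an assumption)."""
    return hashlib.sha256(video_bytes).hexdigest()


def make_block(video_bytes: bytes, metadata: dict, prev_hash: str) -> dict:
    """Build a hypothetical block: video hash, metadata, and a link to the previous block."""
    body = {
        "video_hash": fingerprint(video_bytes),
        "metadata": metadata,
        "prev_hash": prev_hash,
    }
    # The block's own hash covers every field in the body,
    # so changing any field would change the block hash.
    encoded = json.dumps(body, sort_keys=True).encode("utf-8")
    return {**body, "block_hash": hashlib.sha256(encoded).hexdigest()}


def matches(video_bytes: bytes, block: dict) -> bool:
    """Check whether a video's fingerprint equals the one recorded in the block."""
    return fingerprint(video_bytes) == block["video_hash"]


# Placeholder bytes and metadata stand in for a real video file.
original = b"original video bytes"
block = make_block(
    original,
    {"location": "Tokyo", "timestamp": "2024-01-01T00:00:00Z"},
    prev_hash="0" * 64,
)

print(matches(original, block))                # True
print(matches(b"altered video bytes", block))  # False
```

Because even a one-byte change produces a different digest, a copy that has been altered no longer matches the recorded fingerprint.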

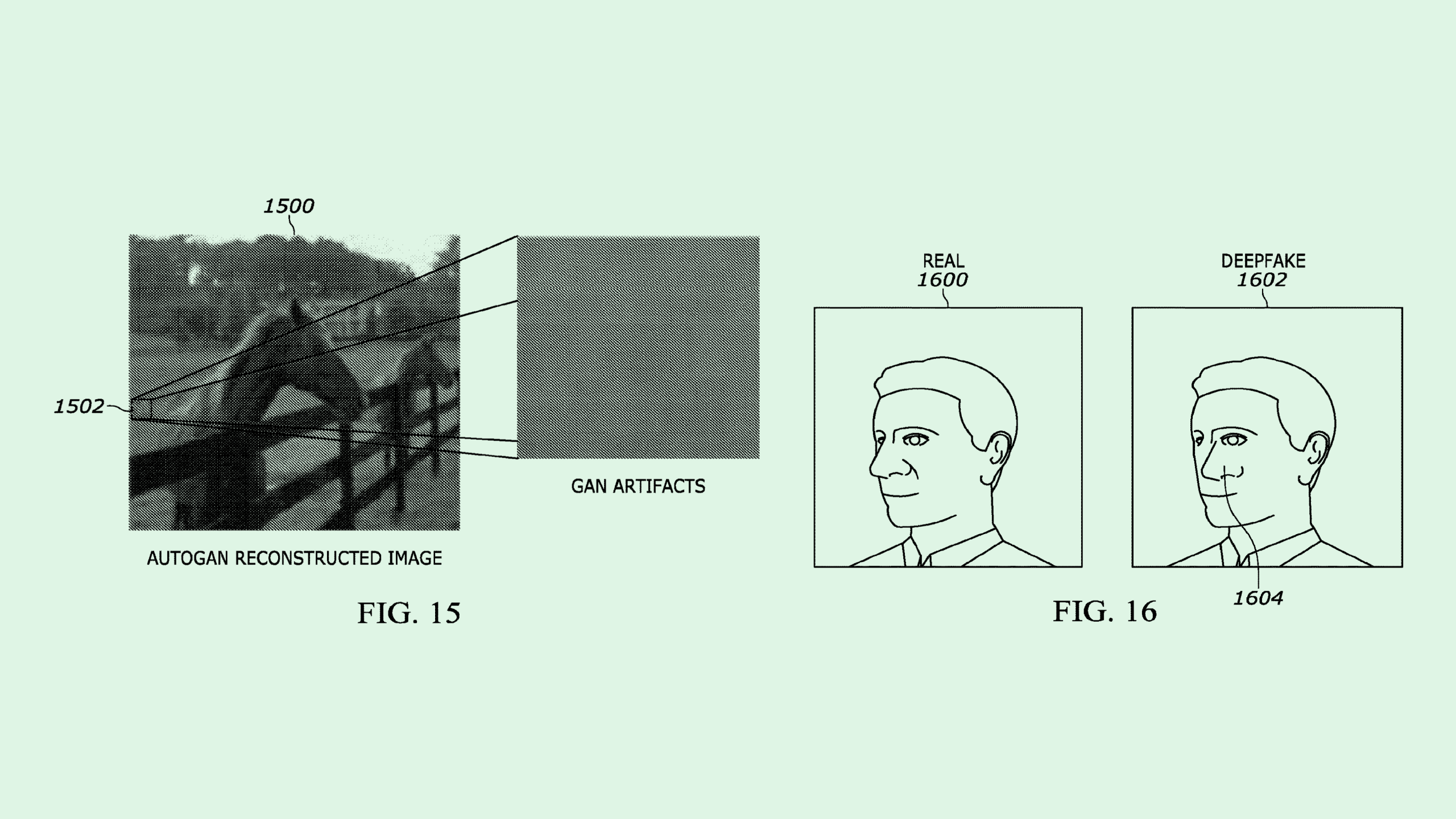

Before a video can get added to this blockchain, Sony’s system puts it through several different kinds of analysis to determine if the subject of the video is a real human being. First, a facial recognition model checks for irregularities in facial texture or between the face and the background, as well as whether the facial movements seem natural.

Next, the system employs a “discrete Fourier transform,” a mathematical technique that can detect irregularities in video brightness. For audio, it may use a neural network to assess whether the voice in the video is representative of natural human speech.
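As a rough illustration of what a discrete Fourier transform can reveal, the sketch below applies one to a series of per-frame average brightness values and measures how much of the variation sits in a single frequency. How Sony’s system actually uses the transform is not spelled out here; the function names, the choice of per-frame averages, and the idea of treating periodic flicker as an irregularity are assumptions.

```python
import cmath
import math


def dft_magnitudes(signal):
    """Magnitude of each discrete Fourier transform bin, computed directly from the definition."""
    n = len(signal)
    return [
        abs(sum(x * cmath.exp(-2j * math.pi * k * t / n) for t, x in enumerate(signal)))
        for k in range(n)
    ]


def dominant_frequency_share(brightness):
    """Share of non-constant spectral energy held by the single strongest frequency bin."""
    mean = sum(brightness) / len(brightness)
    centered = [b - mean for b in brightness]  # remove the constant (DC) component
    mags = dft_magnitudes(centered)
    half = mags[1 : len(mags) // 2 + 1]        # real input: the upper bins mirror these
    energy = sum(m * m for m in half)
    if energy == 0:
        return 0.0                             # perfectly steady brightness
    return max(m * m for m in half) / energy


# Hypothetical per-frame average brightness for 32 frames.
steady = [100.0] * 32
flicker = [100.0 + 10.0 * math.sin(2 * math.pi * 4 * t / 32) for t in range(32)]

steady_share = dominant_frequency_share(steady)    # 0.0, nothing varies
flicker_share = dominant_frequency_share(flicker)  # close to 1.0 for a pure sinusoid
```

A detector built on this idea would still need a threshold tuned on real footage, which is outside the scope of this sketch.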

If the video is determined to be fake, the system will either refuse to add its data to the blockchain entirely, or will add it with an indicator that it’s fake. The fake video may also be reported to the original video distributor or service provider to be taken down.
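The two outcomes for a fake video, rejection or recording with a flag, can be sketched as a small decision routine. The `Ledger` class, its `flag_fakes` switch, and the in-memory lists are hypothetical stand-ins for a real blockchain and reporting channel, and the sketch reports every fake even though the system described above only may do so.

```python
from dataclasses import dataclass, field


@dataclass
class Ledger:
    """Hypothetical stand-in for the blockchain and the reporting channel."""
    flag_fakes: bool = True                       # True: record fakes with a flag; False: reject them
    blocks: list = field(default_factory=list)
    reports: list = field(default_factory=list)

    def submit(self, video_hash: str, is_fake: bool) -> bool:
        """Return True if an entry was added to the ledger, False if it was refused."""
        if is_fake:
            # Assumption: every detected fake is reported to the distributor or provider.
            self.reports.append(video_hash)
            if not self.flag_fakes:
                return False                      # refuse to add the fake's data at all
        self.blocks.append({"video_hash": video_hash, "fake": is_fake})
        return True


flagging = Ledger(flag_fakes=True)
flagging.submit("hash-of-real-video", is_fake=False)
flagging.submit("hash-of-fake-video", is_fake=True)   # stored, marked fake, and reported

strict = Ledger(flag_fakes=False)
strict.submit("hash-of-fake-video", is_fake=True)     # refused, but still reported
```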

As AI-generated content continues to weave its way into everyday life, it’s becoming harder for people to distinguish fake faces from real ones. According to a study released in November by the Australian National University, the majority of test subjects couldn’t tell an AI-generated face from a real one, specifically when that face was white. (Non-white AI-made faces still fell into the so-called “uncanny valley,” likely due to disproportionate training data in the models that made them.)

Viral deepfake images are becoming more and more common, too, whether it be the Pope in a puffer jacket or Taylor Swift peddling Le Creuset cookware. And ahead of the 2024 presidential election in the US, the spread of this kind of content could quickly tip from humor to misinformation.

But Sony’s patent lays out an example of how blockchain could be used to fight it, said Jordan Gutt, Web 3.0 Lead at The Glimpse Group. Two of blockchain’s biggest features are immutability and transparency, said Gutt. Building those tenets into content distribution could help service providers ensure that the content they’re serving comes from actual human beings.

Though it’s unclear exactly how a system like Sony’s would be implemented, “the immutability, transparency and security of it are things that would improve the user experience and trustworthiness across multiple platforms,” he said.

This is just one example of how blockchain and AI may converge, said Gutt. The traceable nature of this tech could be valuable for verifying the authenticity of data in datasets, bringing more integrity to the AI training process, he said. “Blockchain really has these core functionalities that make a database seem antiquated.”

But blockchain adoption faces big consumer trust issues. After crypto’s fall from grace in 2022, blockchain technology gained a bad reputation. Before blockchain can be used to build trust in other technologies, the tech firms that want to use it may need to figure out how to make consumers trust it, too.