Happy Monday, and welcome to CIO Upside.

Today: While giving AI agents more connectivity and context may unlock major benefits, it might also unleash cybersecurity domino effects. Plus: You can’t teach an old codebase new tricks; and Microsoft’s recent patent targets bias in search.

Let’s jump in.

Who’s Who? Identity Questions Undermine Model Context Protocol

![SUQIAN, CHINA - MAY 23: In this photo illustration, the logo of Anthropic is displayed on a smartphone screen on May 23, 2025 in Suqian, Jiangsu Province of China. Anthropic on May 22 said it activated a tighter artificial intelligence control for Claude Opus 4, its latest AI model. (Photo by VCG/VCG)](https://www.thedailyupside.com/wp-content/uploads/2025/09/vcgphotos221514-scaled-1600x1200.jpg)

As much as enterprises want agents and systems to work together, getting them to play nice may be harder than anticipated.

In November 2024, Anthropic introduced a standard called Model Context Protocol, designed to connect AI models to the “systems where data lives.” As talk of agentic AI reached a fever pitch in the tech industry, OpenAI quickly followed in its rival’s footsteps, launching connectors between its AI models and enterprises’ internal systems.

While giving agents more context to work with can help users better reap the benefits of the tech, doing so presents some cybersecurity pitfalls: The more access you give to these agents, the larger the attack surface.

One of the biggest security concerns that these connected agents present is identity, said Alex Salazar, co-founder and CEO of Arcade.dev. When agents are performing actions autonomously or retrieving information on behalf of a person, access controls and identity can easily gum up the works.

- For example, if you ask an agent to pull up salary information related to one executive, it could hallucinate and retrieve data related to someone else that you may not be authorized to see.

- The issue of hallucination becomes “even more dangerous” when you ask an agent to do more than just retrieve information, such as performing and automating tasks like scheduling or responding to emails, said Salazar.

- “If I’m a CIO, I need to be able to have confidence that this agent, on behalf of this user, can perform this action on this resource,” said Salazar. “There cannot be room for hallucination in that question.”
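Salazar’s question (may this agent, acting for this user, perform this action on this resource?) can be answered by ordinary code rather than by the model. Below is a minimal Python sketch under stated assumptions: the policy tables, the `Request` type and the `is_allowed` function are hypothetical, and nothing here reflects Model Context Protocol’s or Arcade.dev’s actual design. The agent can propose a request, but it is approved only if the user holds that permission and has delegated that action to that agent.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Request:
    agent: str     # which agent is asking
    user: str      # the person the agent acts on behalf of
    action: str    # e.g. "read"
    resource: str  # e.g. "salary:exec-42"


# Hypothetical policy data: what each user may do themselves...
USER_PERMISSIONS: dict[str, set[tuple[str, str]]] = {
    "alice": {("read", "salary:exec-42"), ("send", "email:alice")},
}
# ...and which actions each user has delegated to each agent.
DELEGATIONS: dict[tuple[str, str], set[str]] = {
    ("alice", "hr-assistant"): {"read"},
}


def is_allowed(req: Request) -> bool:
    """Approve only if the user holds the permission AND delegated the action to this agent."""
    user_ok = (req.action, req.resource) in USER_PERMISSIONS.get(req.user, set())
    delegated_ok = req.action in DELEGATIONS.get((req.user, req.agent), set())
    return user_ok and delegated_ok
```

If the agent hallucinates a different executive’s record, for instance `Request("hr-assistant", "alice", "read", "salary:exec-7")`, the check returns `False` because Alice was never granted that resource; a request to send email is also refused because Alice never delegated that action. Keeping the decision in deterministic code means an agent can never exceed the overlap between the user’s own rights and what the user chose to hand over.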

The problem is that these agents are seen as a new kind of digital identity rather than as applications. And while OAuth, a standard that lets users grant an application limited access to their accounts without sharing their passwords, exists for applications, Model Context Protocol and other connectors don’t currently support that capability for agents, said Salazar, whose company, Arcade.dev, is working on a solution.
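The core idea behind OAuth, letting software act with a user’s consent without ever holding the user’s password, can be illustrated with a toy token. This sketch is an assumption-laden stand-in, not OAuth itself: real OAuth 2.0 deployments follow standardized authorization flows and often issue JWT access tokens, whereas here a hypothetical authorization server signs a short-lived, scoped token with an HMAC key, and a resource server checks the signature, expiry and scope before honoring a request. All names (`issue_token`, `check_token`, `SERVER_KEY`, the scope strings) are illustrative.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import List, Optional

# Hypothetical demo key held only by the authorization server; a real one
# would come from a secrets store, never from source code.
SERVER_KEY = b"demo-only-signing-key"


def _b64(data: bytes) -> str:
    return base64.urlsafe_b64encode(data).decode().rstrip("=")


def _sign(body: str) -> str:
    return _b64(hmac.new(SERVER_KEY, body.encode(), hashlib.sha256).digest())


def issue_token(user: str, agent: str, scopes: List[str], ttl_s: int = 300) -> str:
    """After the user consents, mint a short-lived token scoped to specific actions."""
    claims = {"sub": user, "client": agent, "scope": scopes, "exp": int(time.time()) + ttl_s}
    body = _b64(json.dumps(claims, sort_keys=True).encode())
    return f"{body}.{_sign(body)}"


def check_token(token: str, required_scope: str) -> Optional[dict]:
    """Verify signature, expiry and scope; return the claims only if all pass."""
    body, _, sig = token.partition(".")
    if not hmac.compare_digest(sig, _sign(body)):
        return None
    claims = json.loads(base64.urlsafe_b64decode(body + "=" * (-len(body) % 4)))
    if claims["exp"] < time.time() or required_scope not in claims["scope"]:
        return None
    return claims
```

For example, a token issued with `["calendar.read"]` passes `check_token(token, "calendar.read")` but is rejected for `"email.send"`, and any edit to its signature invalidates it. The agent only ever holds something narrow and expiring, so a leaked or misused token exposes far less than a shared password would.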

Put simply, identity remains a source of friction that keeps enterprises from getting the most out of AI systems. “Despite how far into agents we are, they still can’t send an email,” Salazar said.

For enterprises looking to more broadly adopt AI, the inability to trust and utilize agents could lead to two outcomes: a cybersecurity domino effect when AI systems go haywire, or a massive investment getting shut down before the agents could even get off the ground, Salazar said.

“If I can’t prove that an AI agent is only going to access my information and adhere to the permissions that I am held to … then I can’t ever really use it in production on a sensitive system or a multi-user system,” he added.

One solution may be thinking smaller: Among the biggest mistakes that enterprises make as they build agents is “overscoping” them, or making them do too much, Salazar said. The more you ask of an agent, the more access it needs to have, and the higher the likelihood it will make a mistake.

“When teams pick a narrow use case, they’re much more likely to succeed,” he said. “When you give an AI more information, it makes everything worse.”

Does Modernization Really Mean Walking Away From What Works?

CIOs face constant pressure: adopt cloud, enable AI, and deliver innovation without risking the stability of the systems the business runs on every day.

The challenge? Too many modernization strategies overlook the value of these proven core systems, creating unnecessary risk and disruption.

Rocket Software helps CIOs take a different path, one that builds on the strength of existing infrastructure while unlocking new capabilities.

Rocket Software delivers:

- Flexible modernization paths that evolve infrastructure, applications, and data while preserving stability.

- Integration with cloud and AI that extends the value of core systems.

- Enterprise-grade security that safeguards mission-critical data and applications throughout the modernization journey.

With Rocket Software, modernization doesn’t mean walking away from your existing infrastructure — it means bringing it forward.

Legacy Code Might Be Holding Your Enterprise Back

Your enterprise might need to look under the hood.

A recent report from Saritasa found that 62% of American companies are running outdated software. About 43% of those surveyed said legacy code represents a major risk.

In banking and finance, for example, 95% of ATM activity in the US and 80% of in-person credit card transactions still depend on code written in COBOL, a programming language that dates back more than 60 years. Financial services are at the forefront of code modernization, driven by legacy footprints, regulatory pressures and the risks involved in operating old code, said Dr. Ranjit Tinaikar, CEO of Ness Digital Engineering.

“Healthcare, travel, hospitality, retail and manufacturing are not far behind the first wave,” he added.

And the market for updating legacy tech could be lucrative, Tinaikar noted, with services in application modernization, mainframe modernization and more potentially valued at more than $100 billion combined by 2030.

As AI adoption starts to take hold, stakeholders are looking to resolve the technical debt of legacy systems, reduce security risks and unlock new capabilities, Tinaikar said. Plus, “modernizing outdated platforms is the only way to fully tap into AI-enabled productivity gains,” he noted.

So how can enterprises brush the dust off their aging code? One answer may be what Tinaikar calls intelligent engineering: optimized development teams that use state-of-the-art technologies, including AI, to modernize legacy systems.

- Rather than being capped by cost constraints or relying on large engineering headcounts, intelligent engineering frameworks embed generative AI, monitoring and automation into every stage of the product lifecycle, from design through testing, deployment and continuous improvement, Tinaikar said.

- AI modernization tools can help developers make sense of legacy code that few people still understand. “Even legacy code with no documentation can now be documented with AI tools,” he explained.

- “By embedding continuous productivity improvement into modernization programs, (intelligent engineering) enables enterprises to update their digital surface and re-architect legacy systems into adaptive, future-ready platforms,” Tinaikar added.

But legacy modernization doesn’t come without security headaches. Risks include logic exposure from reverse-engineering, new configuration errors coupled with vulnerabilities, and supply chain compromises due to the reliance on third-party modernization tools, Nic Adams, co-founder and CEO of 0rcus, told CIO Upside.

“This creates a dual-system (legacy and new) environment with increased attack surfaces, making it a high-risk period for exploitation,” said Adams.

The temptation to rely on AI to handle legacy migration may be irresistible to executives. However, fully automated legacy modernization can backfire.

“Introducing any automated code translation tools brings the risk of inheriting and replicating legacy vulnerabilities, security flaws and even insecure programming patterns in newly generated code,” Adams said.

Microsoft Targets AI’s Bias Problem in Recent Patent

AI models can be easily swayed.

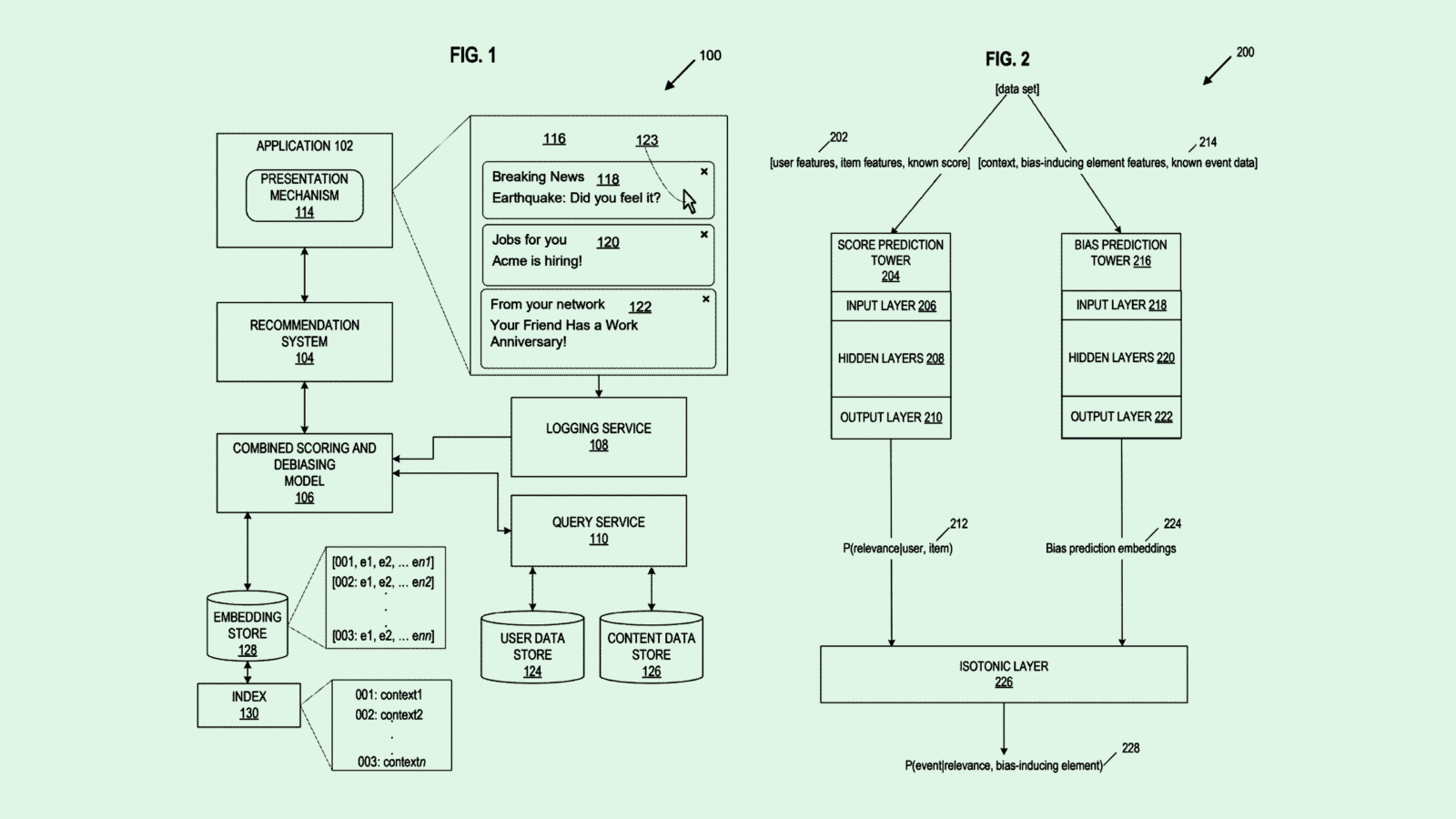

Microsoft may be looking to keep them more objective, with a patent application for a “debiasing framework for deep learning models.” In short, the tech aims to reduce bias in deep learning-powered ranking models, specifically targeting recommendation engines.

Bias is one of the fundamental issues that AI developers face as they build and deploy models. “Low or unstable prediction accuracy can have adverse consequences ranging from the display of irrelevant, inappropriate or biased information to users of an online system, to erroneous control signals or operational decisions of an automated system due to biased predictions,” Microsoft noted in the filing.

Deep learning-based ranking models usually rely on relevance scores to rank responses to queries. However, these scores can easily fall prey to biases, such as the popularity of certain content items or the demographics of the user who submitted the query.

Microsoft’s tech uses a three-part architecture to remove biases from these relevance scores. First, the raw relevance score is produced by the deep learning model. In parallel, a bias prediction module creates “bias embeddings,” or estimates of distortions to the score that may have been caused by biases. The final part is an “isotonic layer,” which combines the raw relevance score with the bias embeddings, creating a “de-biased” output.
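The filing’s exact math isn’t described here, so the following is only a generic sketch of what an isotonic layer can look like, under clearly hypothetical assumptions. “Isotonic” means order-preserving: the layer passes the raw relevance score through a piecewise-linear curve whose slopes are forced to be non-negative, so a higher raw score never yields a lower output. In this sketch, the bias embedding (a one-number stand-in for something like item popularity) shifts those slopes, letting scores in a biased context be scaled down. The bucket boundaries, weights and projections are hand-set for illustration; a trained system would learn them, and Microsoft’s implementation may differ entirely.

```python
from typing import Sequence


def relu(x: float) -> float:
    """Clamp negatives to zero so every slope stays non-negative."""
    return max(0.0, x)


def isotonic_layer(
    raw_score: float,
    bias_embedding: Sequence[float],
    boundaries: Sequence[float],
    base_weights: Sequence[float],
    bias_projections: Sequence[Sequence[float]],
) -> float:
    """Hypothetical isotonic layer: a non-decreasing, piecewise-linear map of raw_score.

    boundaries: strictly increasing bucket edges, len(base_weights) + 1 of them.
    base_weights: per-bucket slope before any bias adjustment.
    bias_projections: per-bucket vectors that turn the bias embedding into a slope shift.
    """
    out = 0.0
    for i, base in enumerate(base_weights):
        lo, hi = boundaries[i], boundaries[i + 1]
        # How much of bucket i lies below the raw score, clipped to [0, 1].
        frac = min(1.0, max(0.0, (raw_score - lo) / (hi - lo)))
        # Bias-dependent slope for this bucket, forced non-negative by ReLU.
        shift = sum(p * b for p, b in zip(bias_projections[i], bias_embedding))
        out += relu(base + shift) * frac
    return out
```

With boundaries `[0.0, 0.5, 1.0]`, base weights `[0.5, 0.5]` and projections `[[-0.2], [-0.2]]`, a raw score of 0.8 comes out at roughly 0.8 when the bias embedding is `[0.0]` but roughly 0.48 when it is `[1.0]`. Clamping each slope with ReLU is what keeps the output monotonic in the raw score for any given bias context, so the layer can correct a score’s level without scrambling the model’s ordering within that context.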

Microsoft isn’t the first tech firm to target AI’s bias problem. Companies including Amazon, Google, Intel, JPMorgan Chase and more have sought solutions in their patent activity. The reason is simple: Biased outputs harm the accuracy, and therefore the usability, of these systems.

For this patent in particular, the goal is to create a more accurate and relevant ranking engine, which could be useful in anything from search engines, like Microsoft-owned Bing, to AI-powered assistants, such as Copilot. And as each company seeks to carve out its place in the frenzied AI market, anything that can make these systems more reliable could give the firms an edge.

Extra Upside

- Suit Settled: Anthropic agreed to pay $1.5 billion to settle a class action copyright lawsuit with a group of authors.

- Big Spender: OpenAI told investors it expects to burn through $115 billion in cash through 2029, about $80 billion more than it previously projected.

- Does Modernization Really Mean Walking Away From What Works? Rocket Software helps CIOs extend the value of proven core systems while enabling AI, cloud, and innovation, without disruption. Keep what works, modernize what’s next. See how to future-proof your infrastructure.*

* Partner

CIO Upside is a publication of The Daily Upside. For any questions or comments, feel free to contact us at team@cio.thedailyupside.com.