Amazon Patent May Help Models Unlearn Biases, Inaccuracies

Amazon wants to help machine learning models unlearn bad data.


Amazon wants its AI models to be able to break bad habits.

The company is seeking to patent “un-learning of training data for machine learning models.” The goal is to essentially remove the influence of specific points of data on a model’s output without having to retrain an entire model from scratch.

Because retraining is often required to remove data influence, “compliance with frequent data removal requests can thus be difficult to satisfy, particularly for smaller companies with limited budgets that rely on large data models,” Amazon said.

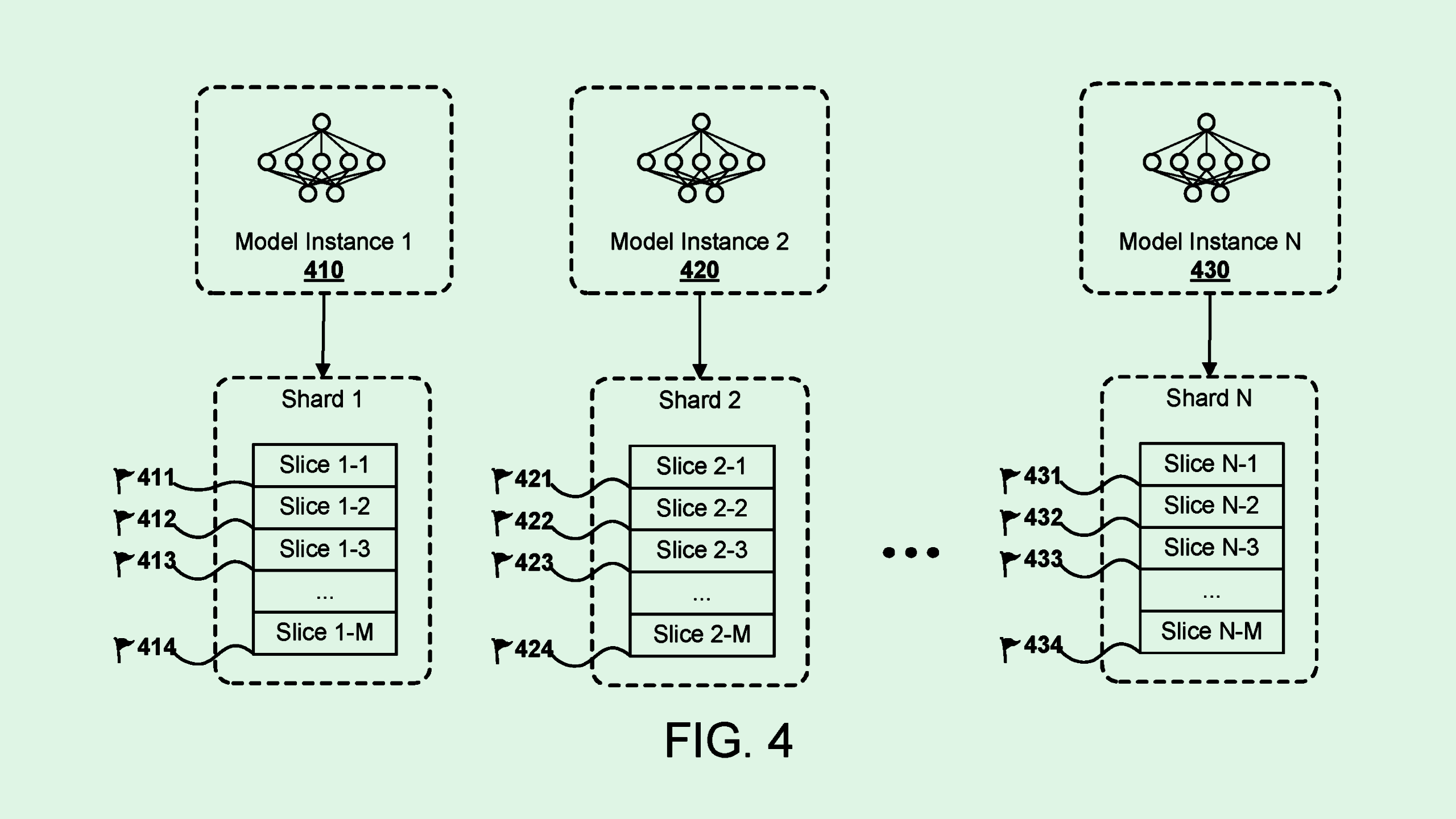

Amazon’s tech does this by dividing AI training datasets into “shards,” or subsets of data, and “slices,” which are smaller subsets of the shards. The model is then trained in stages on each slice of each shard, and its state is saved along the way at points Amazon calls “checkpoints.”

When a request is made to remove specific data points, the system identifies the shard, and the slice within that shard, where the data is stored, and removes those points from the slice. It then rolls the model back to the checkpoint saved before the model was trained on that slice and retrains it from that point on.
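To make the mechanism concrete, here is a minimal Python sketch under stated assumptions; it is an illustration, not Amazon’s implementation. It assumes, as in the SISA (“Sharded, Isolated, Sliced, Aggregated”) approach from academic machine-unlearning research, that each shard trains its own isolated sub-model and their outputs are averaged. The toy “model” is a hypothetical exponential moving average, chosen because its updates depend on order, so a deleted point cannot simply be subtracted out. All names (`ShardedModel`, `train_from`, `unlearn`, `LEARNING_RATE`) are hypothetical.

```python
from dataclasses import dataclass, field

Point = tuple[int, float]  # hypothetical (point_id, value) stand-in for one training example
LEARNING_RATE = 0.1        # hypothetical step size for the toy model


def train_on_slice(state: float, slice_data: list[Point]) -> float:
    """Toy, order-dependent 'training': an exponential moving average.

    Each update depends on the previous state, so a point's influence cannot be
    removed by simple subtraction; the slice has to be replayed without it.
    """
    for _, value in slice_data:
        state = (1 - LEARNING_RATE) * state + LEARNING_RATE * value
    return state


@dataclass
class Shard:
    slices: list[list[Point]]
    # checkpoints[i] is the sub-model state saved *before* training on slice i;
    # checkpoints[-1] is the final state after the last slice.
    checkpoints: list[float] = field(default_factory=lambda: [0.0])

    def train_from(self, start: int) -> int:
        """Restore the checkpoint before slice `start`, retrain from there on,
        and return how many slices were (re)trained."""
        del self.checkpoints[start + 1:]  # discard states that depended on old data
        state = self.checkpoints[start]
        for slice_data in self.slices[start:]:
            state = train_on_slice(state, slice_data)
            self.checkpoints.append(state)
        return len(self.slices) - start


class ShardedModel:
    def __init__(self, data: list[Point], num_shards: int, num_slices: int):
        self.num_shards, self.num_slices = num_shards, num_slices
        buckets = [[[] for _ in range(num_slices)] for _ in range(num_shards)]
        for point in data:
            shard_idx, slice_idx = self.locate(point[0])
            buckets[shard_idx][slice_idx].append(point)
        self.shards = [Shard(slices) for slices in buckets]
        self.slices_trained = sum(shard.train_from(0) for shard in self.shards)

    def locate(self, point_id: int) -> tuple[int, int]:
        # Deterministic routing, so a deletion request maps straight to one slice.
        return point_id % self.num_shards, (point_id // self.num_shards) % self.num_slices

    def predict(self) -> float:
        # Aggregate the isolated sub-models by averaging their final states.
        return sum(s.checkpoints[-1] for s in self.shards) / len(self.shards)

    def unlearn(self, point_id: int) -> int:
        """Delete one point and return the number of slices that had to be retrained."""
        shard_idx, slice_idx = self.locate(point_id)
        shard = self.shards[shard_idx]
        kept = [p for p in shard.slices[slice_idx] if p[0] != point_id]
        if len(kept) == len(shard.slices[slice_idx]):
            raise KeyError(f"point {point_id} is not in the training data")
        shard.slices[slice_idx] = kept
        return shard.train_from(slice_idx)


data = [(pid, float(pid % 7)) for pid in range(40)]
model = ShardedModel(data, num_shards=4, num_slices=5)
redone = model.unlearn(13)  # point 13 lives in shard 1, slice 3
reference = ShardedModel([p for p in data if p[0] != 13], num_shards=4, num_slices=5)
assert model.predict() == reference.predict()  # same result as a full retrain
print(f"retrained {redone} of {model.slices_trained} slices")  # retrained 2 of 20 slices
```

Because retraining from the checkpoint replays exactly the operations a from-scratch run would perform on the remaining data, the result matches a full retrain, while only 2 of the 20 slice-training steps are redone in this toy example.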

Backtracking in this way saves a ton of time and resources that would otherwise be spent retraining the entire model. That could make frequent retraining practical whenever developers discover inaccuracies or security problems.
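How much backtracking saves depends on how the data is split. Under illustrative assumptions, not figures from Amazon’s filing: if each of S shards trains its own isolated sub-model, each shard has R equal-sized slices, and a deleted point is equally likely to sit in any slice, then one deletion redoes on average (R + 1)/2 slice-trainings out of S × R in total. The hypothetical helper below computes that fraction exactly.

```python
from fractions import Fraction


def expected_retrain_fraction(num_shards: int, num_slices: int) -> Fraction:
    """Expected share of the original slice-training work redone for one deletion.

    Assumes isolated per-shard sub-models with equal-sized slices and a deleted
    point equally likely to sit in any slice. A point in slice k (0-based) forces
    retraining of slices k through num_slices - 1 of its own shard only.
    """
    total_slices = num_shards * num_slices
    expected_redone = Fraction(sum(num_slices - k for k in range(num_slices)), num_slices)
    return expected_redone / total_slices  # equals (R + 1) / (2 * S * R)


print(expected_retrain_fraction(4, 5))    # 3/20, i.e. 15% of a full retrain
print(expected_retrain_fraction(10, 10))  # 11/200, i.e. 5.5%
```

More shards and slices shrink the expected cost, though in practice splitting data too finely can hurt the accuracy of each sub-model, so the split is a trade-off.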

Amazon has spent the past year trying to keep up with the rest of tech’s voracious appetite for AI. Along with strengthening the AI within its consumer offerings, such as its reported plans to give Alexa an AI makeover, its last re:Invent conference was filled with AI announcements, including new chips, generative AI tools, and a chatbot. It’s also extended generative AI capabilities to merchants and sellers to assist with ads and listings.

The company’s greater strength, however, is its cloud services dominance. As computing demand continues to rise, so does the demand for cloud computing — an industry in which AWS currently sits at the top. According to CRN, the company held 31% of the global cloud services market share in the first quarter of 2024.

And Amazon has certainly catered to the tech industry’s desires: It launched Amazon Bedrock, its generative AI development suite, last April through AWS. It also invested $4 billion in AI startup Anthropic, a competitor to OpenAI, and made Claude 3.5 Sonnet, the startup’s newest and most powerful AI model yet, generally available to Bedrock customers in mid-June. On a smaller scale, the company also announced a $230 million investment in generative AI startups, with some of the funds going toward its AWS Generative AI Accelerator.

Given all this, it makes sense that Amazon would continue to seek IP in faster and more efficient AI training. The tech in this patent could help models unlearn inaccuracies, biases, and security leaks in a much more resource-efficient fashion. That capability could certainly be a draw to AI developers who don’t have the resources to strip their models down to the screws when they find a mistake.