Happy Thursday and welcome to Patent Drop!

Today, patents from Apple to make its mixed reality headset a little more health-aware could open up a whole new world of possibilities for AR in clinical contexts. Plus: Nvidia and Google want to make AI robots that can react, and Zoom wants to translate accents.

Ahead of today’s Patent Drop, we want to direct you to this free report that walks through the results of a survey on how risk professionals are feeling about fraud in the age of generative AI. Download Persona’s trust and safety report and see how your peers are combating AI fraud today.

And a quick programming note: Patent Drop is taking a brief vacation on Monday, April 1, but will be back on Thursday, April 4.

Let’s jump in.

Apple’s Headcase

Now that Apple’s gotten in your face with the release of the Vision Pro, it may want to dig a little deeper.

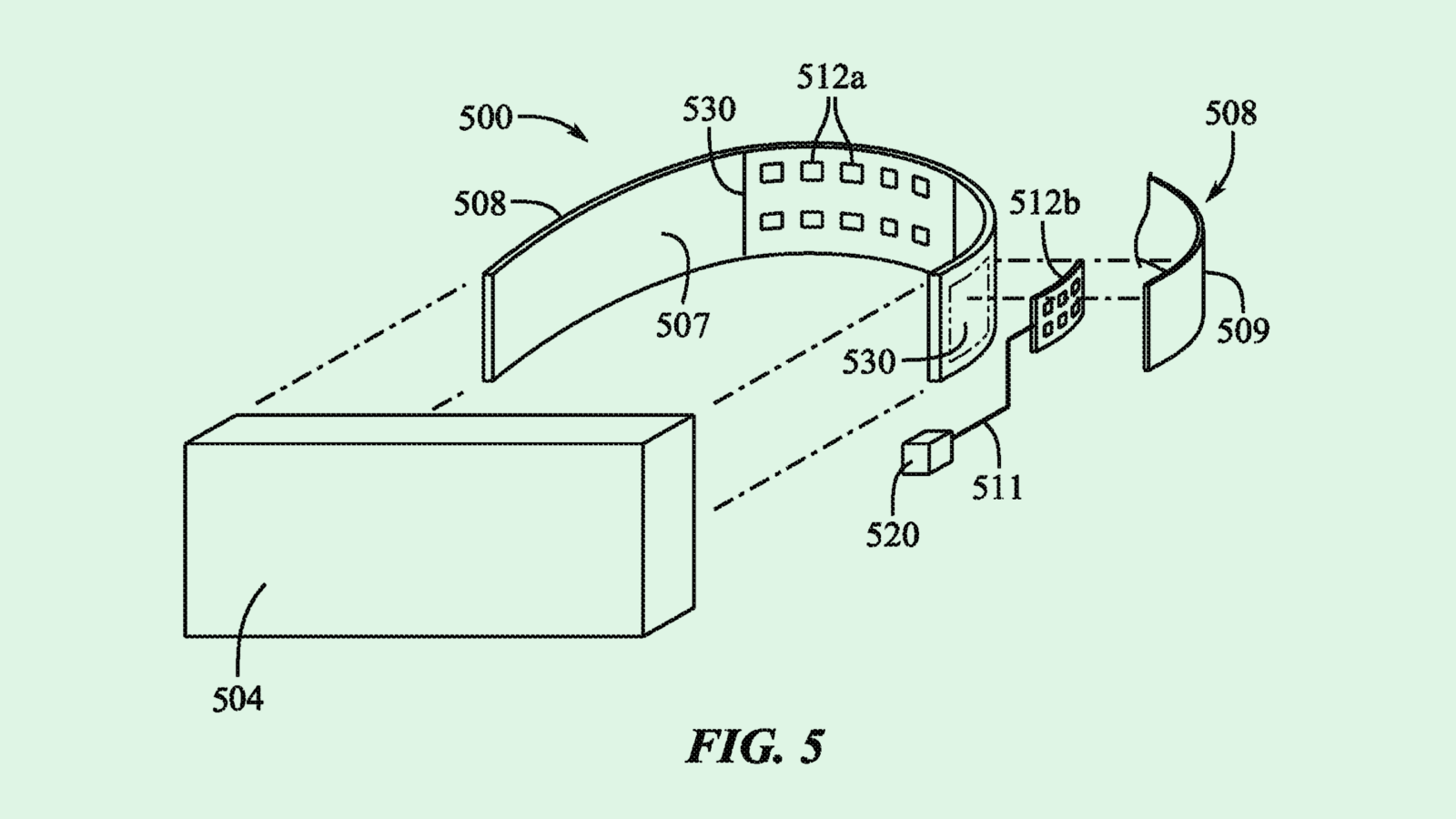

The company is seeking to patent a “health sensing retention band” for a head-mounted device. Apple’s system essentially aims to embed health-tracking sensors into the band that holds its mixed reality headset in place.

“An arrangement of sensors that efficiently utilizes the limited real estate on (a head-mounted device) and which is ideally positioned to detect the intended data is needed,” Apple said in the filing.

Apple’s system would place an array of electrode sensors within the band that straps the device to the wearer’s head, positioned toward the back of the skull. This would allow Apple to collect information about a user’s brain activity and transmit signals to the device based on those biometric readings.

These signals may be used to perform actions through the headset, such as changing visuals or audio. The company said that tracking these brain signals could be used for several different metrics, including user identity, environmental detection, movement, and location.

Beyond triggering specific actions, Apple noted several additional use cases for this tech, including workplace, clinical, educational, training, and therapy settings. It could also allow observation of the “autonomic nervous system,” tracking things like relaxation and stress indicators, mental health, or responses to medical treatment.

Along with its back-of-the-head focus, Apple filed a separate patent for a way to integrate health sensors into the facial interface of the device. This would specifically integrate biometric pressure sensors into the “deformable pad” that sits on the bridge of the wearer’s nose. Apple noted that this could detect pulse, respiration, and facial expressions, as well as chewing and changes in cartilage or blood pressure.

It’s no secret that Apple is the monarch of consumer health tech. Along with the Apple Watch and its embedded health and fitness apps, the company has an ever-growing pile of patents for health-tracking capabilities. This filing, however, could bring Apple’s tech into a more clinical setting with its ability to monitor mental health indicators or reactions to medical treatments.

This isn’t the only sign that Apple wants to help out in hospitals: The company announced earlier this month that it’s working with Siemens Healthineers on a Vision Pro app that creates realistic renderings of human anatomy, aiming to help with surgical planning and medical education. Additionally, a report from The Information in October noted that Apple was exploring more health monitoring capabilities for the Vision Pro.

Given Apple’s track record, its health research is hardly surprising, said D.J. Smith, co-founder and chief creative officer at The Glimpse Group. And while there are always concerns about privacy as it relates to health data, Apple has amassed tons of trust from its user base (particularly when compared to Meta, its main extended reality competitor).

“The underlying thing is that people actually trust Apple,” said Smith. “Apple knows that their ecosystem is built around privacy and security, which makes the healthcare vertical way more applicable to them.”

Apple isn’t the only company that’s attempting to read brain waves. Both Google and Meta have sought patents for ways to read your mind. However, Apple’s filings lay out how this tech could be woven into its existing form factor, whether it be a sleek band with unobtrusive electrodes or a small sensor at the bridge of your nose for tracking pulse and respiration. After all, Apple’s bread and butter is taking existing technology and improving on it, giving it the sleek user experience that has made its devices so popular.

And while brain waves and nose sensors can only give Apple so much data, when coupled with the rest of Apple’s health-tracking device ecosystem, the company can begin to get a sense of the bigger picture, Smith said. “Now you have a much better robust surveying system for what’s happening in your body,” he said. “This could be steps by Apple to secure all of the different sensing locations and the devices to do it.”

Trust and Safety Report: Tamping Down Identity Fraud in the GenAI Age

Everyone knows that genAI is making it easier for bad actors to commit fraud, but how is it impacting the professionals whose jobs are to protect their company?

Persona surveyed fraud, risk, and trust and safety leaders to find out and unearthed a trove of interesting stats, including:

- 39% find third-party fraud and identity theft the most challenging fraud issues to solve for

- 82% believe they address fraud attacks effectively, but 64% think their current fraud tech stack doesn’t help them proactively fight fraud

- 48% prefer building home-grown fraud solutions over buying tools

Download Persona’s survey report for a deeper dive into the numbers, along with commentary from experts on how to solve the challenges posed in the data.

A Tale of Two Robots

Big Tech wants to make sure its machines don’t crash into you: Both Nvidia and Google filed patents for systems to make their robots more environmentally aware.

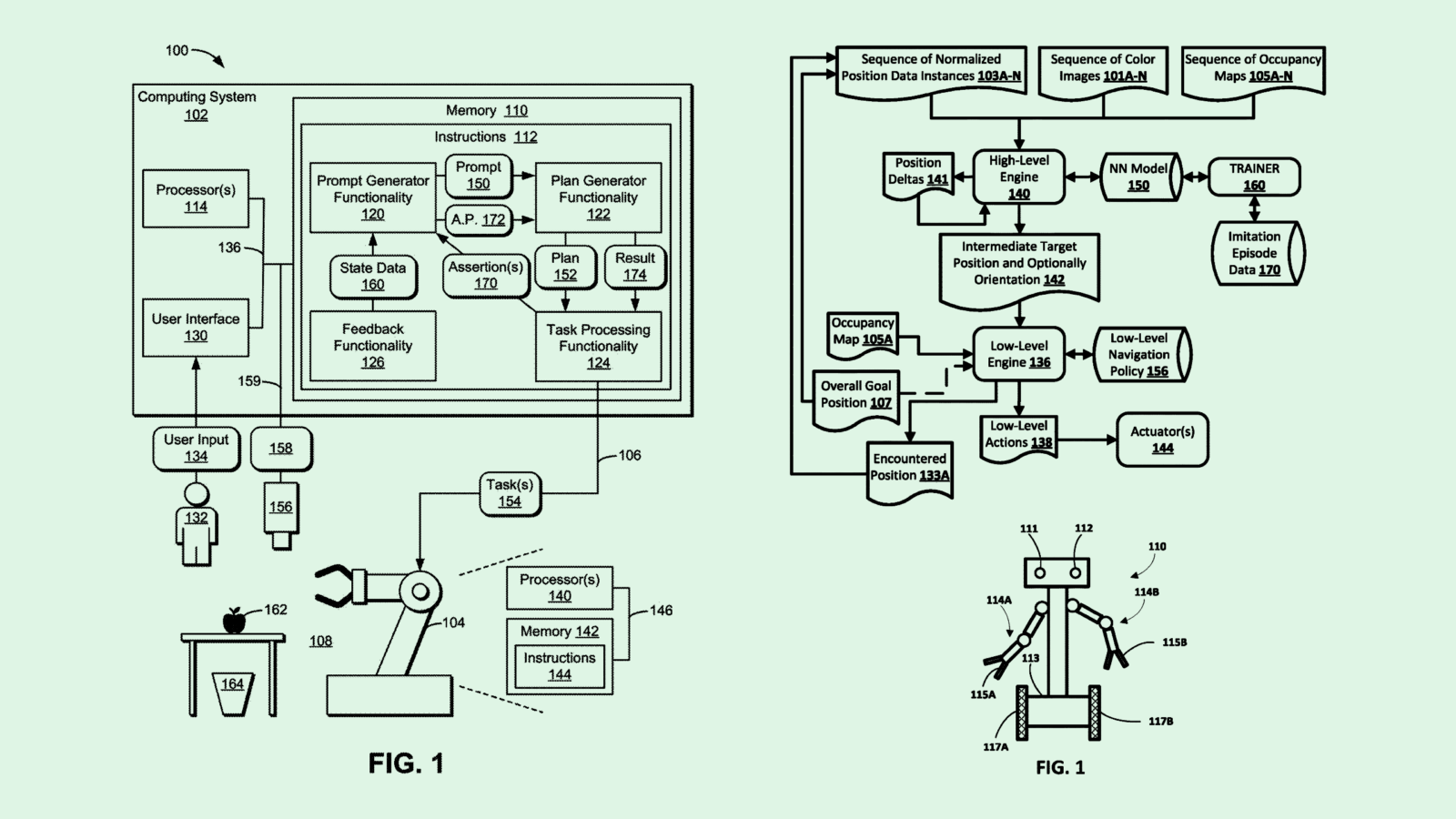

Nvidia is seeking to patent a “prompt generator” for machine learning that takes cues from a machine’s surroundings. Nvidia’s tech essentially gives machine learning models and autonomous machines tasks in real time, aiming to create a more reactive AI.

“Certain circumstances can cause less than optimal performance of task planning based on the environment,” Nvidia said. “This approach may be difficult to scale in environments that include many feasible actions and/or many objects.”

Nvidia’s system would include a large language model that generates prompts for an autonomous machine based on its surroundings. The system then sends those prompts to the machine’s internal task planner to carry out in real time. For example, if the task is to put away a glass in a cupboard, the system would instruct the robot to complete some intermediary tasks to achieve that goal, such as “go to counter,” “pick up glass,” “open cupboard,” and “place glass in cupboard.”
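To make that decomposition concrete, here is a minimal Python sketch of a prompt generator feeding a task planner. It is not Nvidia’s implementation: the large language model is swapped for a hypothetical rule-based stub (`stub_prompt_generator`), and the `Scene` and `TaskPlanner` classes are illustrative assumptions. The only point it demonstrates is that the prompts depend on what the robot currently perceives.

```python
from dataclasses import dataclass, field


@dataclass
class Scene:
    """Hypothetical snapshot of what the robot currently perceives."""
    robot_location: str
    objects: dict[str, str]  # object name -> where that object is
    cupboard_open: bool = False


def stub_prompt_generator(goal_object: str, destination: str, scene: Scene) -> list[str]:
    """Stand-in for the large language model (an assumption, not the patent's
    model): turns a goal plus the current scene into ordered prompts."""
    prompts = []
    source = scene.objects[goal_object]
    if scene.robot_location != source:
        prompts.append(f"go to {source}")
    prompts.append(f"pick up {goal_object}")
    if destination == "cupboard" and not scene.cupboard_open:
        prompts.append("open cupboard")
    prompts.append(f"place {goal_object} in {destination}")
    return prompts


@dataclass
class TaskPlanner:
    """Hypothetical downstream planner that receives prompts one by one."""
    log: list[str] = field(default_factory=list)

    def execute(self, prompt: str) -> None:
        # A real planner would map each prompt to motion primitives;
        # this one only records the order in which prompts arrive.
        self.log.append(prompt)


scene = Scene(robot_location="sink", objects={"glass": "counter"})
planner = TaskPlanner()
for prompt in stub_prompt_generator("glass", "cupboard", scene):
    planner.execute(prompt)
print(planner.log)
# ['go to counter', 'pick up glass', 'open cupboard', 'place glass in cupboard']
```

Because the stub reads the scene, changing the scene changes the plan: if the robot is already at the counter and the cupboard is open, it emits only “pick up glass” and “place glass in cupboard.” That dependence on the environment is the kind of reactivity the filing describes.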

Meanwhile, Google is seeking to patent robot navigation depending on “gesture(s) of human(s)” in its environment. This system creates a sequence of positions that guides a robot to a target location based on the direction in which a human operator gestures.

In response to a certain gesture, such as pointing left or right, Google’s system uses a neural network to create a navigation path based on a series of “position deltas,” which represent how a robot’s position may change based on its surroundings. The system then sends the generated path to the robot’s control system to act on it.
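The position-delta idea boils down to a running sum: each delta nudges the robot from its previous spot, and adding the deltas up in order yields the waypoints of a path. The sketch below assumes a hypothetical `stub_delta_model` in place of Google’s neural network, along with made-up gesture labels and a 0.5-meter step; only the delta-to-path arithmetic reflects the filing’s description.

```python
from itertools import accumulate

Point = tuple[float, float]

# Hypothetical gesture labels mapped to unit direction vectors (x right, y forward).
DIRECTIONS: dict[str, Point] = {
    "point_left": (-1.0, 0.0),
    "point_right": (1.0, 0.0),
    "point_forward": (0.0, 1.0),
}


def stub_delta_model(gesture: str, steps: int = 4, step_size: float = 0.5) -> list[Point]:
    """Stand-in for the neural network: emits a fixed number of equal
    position deltas (in meters) in the gestured direction."""
    dx, dy = DIRECTIONS[gesture]
    return [(dx * step_size, dy * step_size)] * steps


def deltas_to_path(start: Point, deltas: list[Point]) -> list[Point]:
    """Convert relative deltas into absolute waypoints via a running sum.
    The first waypoint is the starting position itself."""
    return list(accumulate(deltas, lambda p, d: (p[0] + d[0], p[1] + d[1]), initial=start))


path = deltas_to_path((0.0, 0.0), stub_delta_model("point_right"))
print(path)
# [(0.0, 0.0), (0.5, 0.0), (1.0, 0.0), (1.5, 0.0), (2.0, 0.0)]
```

Predicting relative deltas rather than absolute coordinates means the same model output can be applied from wherever the robot happens to be standing, which is one plausible reason to frame a path this way.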

This tech aims to overcome the issues of conventional “low-level” navigation methods, which “are not reactive to various behaviors of humans or other dynamic object(s) in the environment,” Google said.

While robots aren’t taking over the world any time soon, tech companies seem intent on creating AI-enabled machines that walk among us. Google and Nvidia’s patents add to several from tech firms that aim to give you mechanical coworkers, many of which are meant for factory and manufacturing settings. For example, Intel sought to patent a robot path planner that senses “occupancy information” of a building, and Amazon filed a patent application for assigning tasks to robots based on how much battery charge they have left.

These efforts add to a broader conversation about what the future of humanoid AI robots could look like. Google, Microsoft, and Tesla are already tinkering with fusing AI models and robotics. Plus, AI robotics startup Figure raised $675 million in Series B funding in late February and announced a collaboration with OpenAI.

Nvidia recently joined the growing list of companies working toward that future, announcing a “moonshot” plan called Project GR00T at its annual GTC conference to build a general-purpose foundation model for humanoid robots.

“The next generation of robotics will likely be humanoid robotics,” Nvidia CEO Jensen Huang said in the conference’s keynote. “We now have the necessary technology to imagine generalized human robotics.”

Widening the range and reactivity of robots could unlock tons of potential and productivity, particularly in labor-intensive industries like manufacturing, supply chain, and logistics. But to broaden robots’ horizons, developers need to ensure that they aren’t going to go haywire and potentially put the safety of their human co-workers at risk.

Zoom’s Accent Coach

Zoom wants to help you roll your R’s.

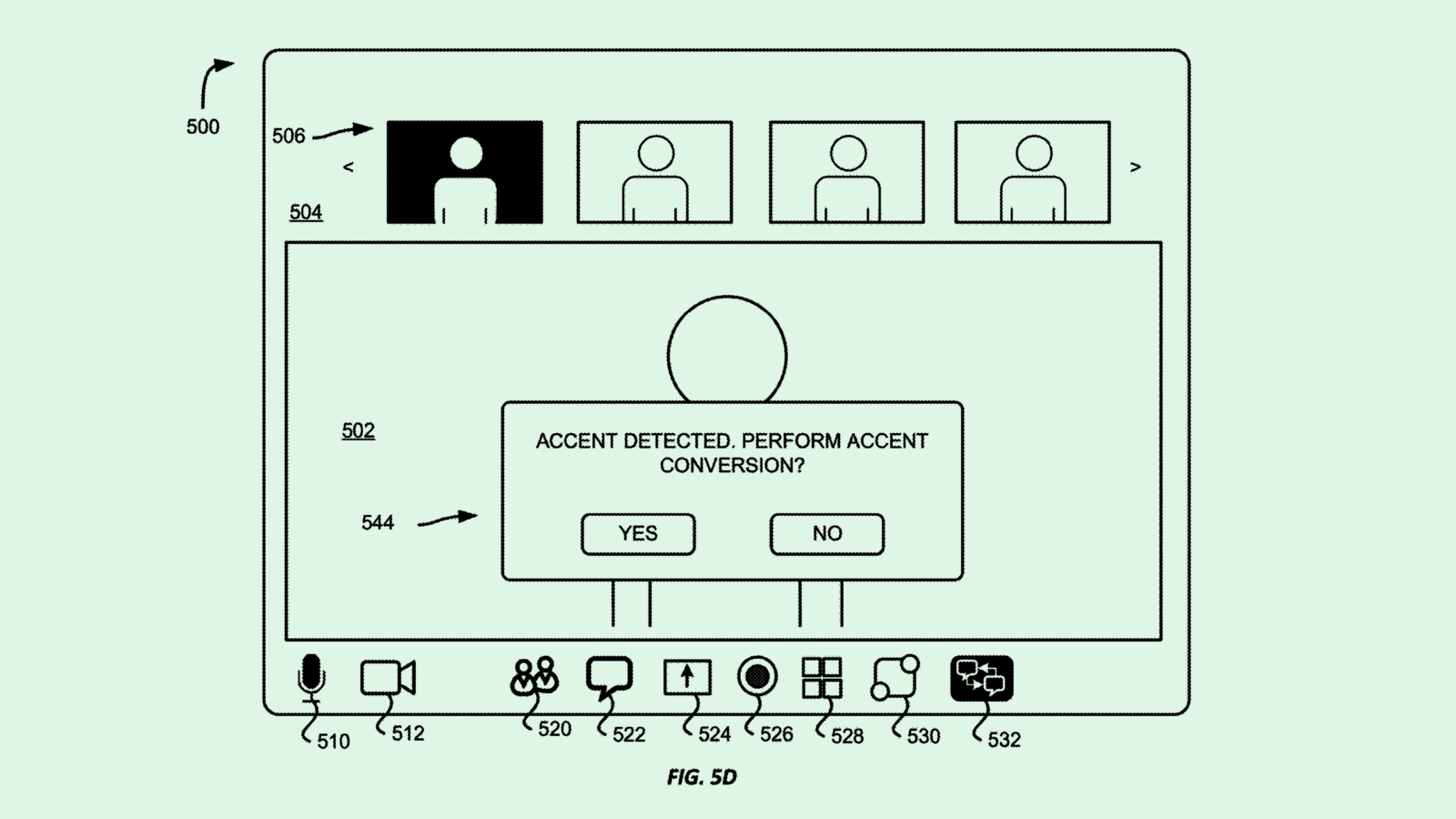

The company is seeking to patent “accent conversion” for speakers in virtual conferences. Zoom’s system uses AI to essentially translate the accent of a speaker so that it sounds “as if it was spoken by the speaker, but with a different accent.”

“The common language can sound markedly different depending on which speaker is talking,” Zoom said in the filing. “Further, different participants may have difficulty understanding the common language when it is spoken with an unfamiliar accent.”

This system relies on a machine learning model trained for what Zoom calls “voice conversion.” The system collects speech samples from people with a bunch of different accents to create training datasets that represent accents from different groups or regions.

Once trained, this system takes in speech in one accent and converts it to another while maintaining both the content and the “identity characteristics of the original speaker’s voice,” such as pitch or timbre. To perform the conversion, Zoom’s model listens for speech patterns in one accent, such as pronunciation, cadence, or rhythm, and changes them to fit the target accent, such as that of a native speaker of the language.

On the user end, if a participant is having trouble understanding someone in the call, they could select an option that converts that speaker’s accent in their own audio feed only, which may “enable participants in a virtual conference to more easily understand each other,” Zoom said. “Multiple participants can each receive a speaker’s speech but with different accents, allowing for better collaboration.”
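Zoom’s per-listener option can be pictured as a routing problem: one speaker’s audio fans out to many listeners, each of whom may have picked a different target accent or none at all. The sketch below is a hypothetical illustration, not Zoom’s design; `stub_convert_accent` stands in for the voice-conversion model and merely relabels the audio, and the accent names are placeholders. It also caches conversions so the model would run once per target accent rather than once per listener.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Utterance:
    speaker: str
    audio: bytes  # placeholder for a raw audio frame
    accent: str   # accent the audio is currently in


def stub_convert_accent(u: Utterance, target_accent: str) -> Utterance:
    """Hypothetical stand-in for the voice-conversion model. A real model would
    re-synthesize the audio while keeping pitch and timbre; this only relabels it."""
    if target_accent == u.accent:
        return u
    return Utterance(u.speaker, u.audio, target_accent)


def route(u: Utterance, listener_prefs: dict[str, Optional[str]]) -> dict[str, Utterance]:
    """Deliver one utterance to every listener. Listeners with no preference get
    the original audio; each distinct target accent is converted only once."""
    cache: dict[str, Utterance] = {}
    delivered: dict[str, Utterance] = {}
    for listener, target in listener_prefs.items():
        if target is None:  # listener has not turned the feature on
            delivered[listener] = u
            continue
        if target not in cache:
            cache[target] = stub_convert_accent(u, target)
        delivered[listener] = cache[target]
    return delivered


speech = Utterance("alice", b"\x00\x01", "accent_a")
feeds = route(speech, {"bob": None, "carol": "accent_b", "dan": "accent_b", "erin": "accent_c"})
for listener, utterance in feeds.items():
    print(listener, utterance.accent)
```

Keeping the original audio as the default for anyone who hasn’t opted in mirrors the filing’s framing of conversion as a per-listener choice rather than a change to what the speaker broadcasts.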

Though this patent seems like a well-intentioned way of easing cross-cultural communication, there are certainly ways that this tech could go awry, said Brian P. Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University.

For starters, depending on the language, there may be bias in how well it translates one accent versus another, said Green. For example, the company’s tech may work far better with American English than with other accents or languages due to data availability.

The source of this accent data itself may also prove to be a privacy issue. Zoom caught heat last summer for data privacy concerns over its controversial terms of service update that allowed it free rein over user data to train its AI products. The company later reversed that decision and gave users the ability to opt out after a major backlash.

But even if this tool is trained with ethical data privacy practices and is bias-free, it could still reinforce “value judgments” for certain accents, said Green.

“In some cases, having an accent does make a person more difficult to understand, but there’s also an aspect to it underlying value judgment here, which is that accents are bad,” he said. “Voice is highly connected to a person’s identity … because it’s very tied to identity, it’s kind of a sensitive topic.”

However, one positive is that this could be a stepping stone to live translation of people’s voices from one language to another, said Green. Though this patent comes with risks, he added, if it leads to people being able to overcome language barriers, it could be a “huge positive for communication.”

Extra Drops

- IBM wants to capture the feeling. The company wants to patent a system for text summarization with “emotion conditioning.”

- Mastercard wants to help you take stock. The company is seeking to patent a system for “creating digital records of physical items.”

- Meta wants to make sure you can see straight. The company is seeking to patent a system for “optical image stabilization” in cases of asymmetric motion.

What Else is New?

- Sam Bankman-Fried now faces a maximum sentence of 110 years in prison for his fraud leading to the collapse of FTX. The judge raised the sentencing guidelines after finding out Bankman-Fried perjured himself.

- Amazon invested another $2.75 billion in AI startup Anthropic, adding to its $1.25 billion in funding from September and completing its planned $4 billion investment.

- Google’s charity wing, Google.org, launched a $20 million accelerator program to help fund nonprofits developing AI.

Patent Drop is written by Nat Rubio-Licht. You can find them on Twitter @natrubio__.

Patent Drop is a publication of The Daily Upside. For any questions or comments, feel free to contact us at patentdrop@thedailyupside.com.