Snap’s Patent Makes Text-to-Speech Emotional

Snap wants to understand what your texts really mean.


Snap wants to give text-to-speech a little more flair.

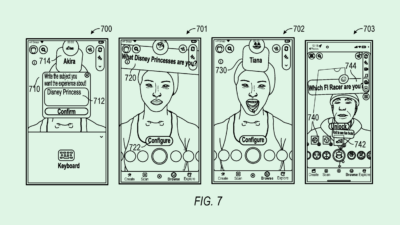

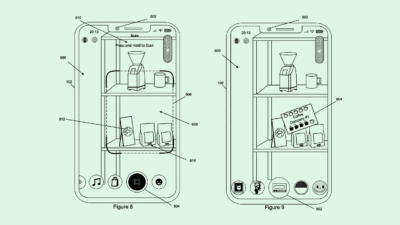

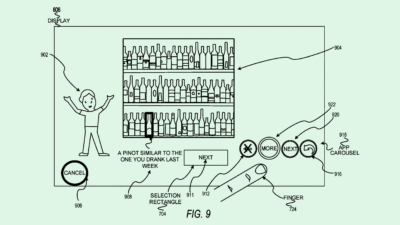

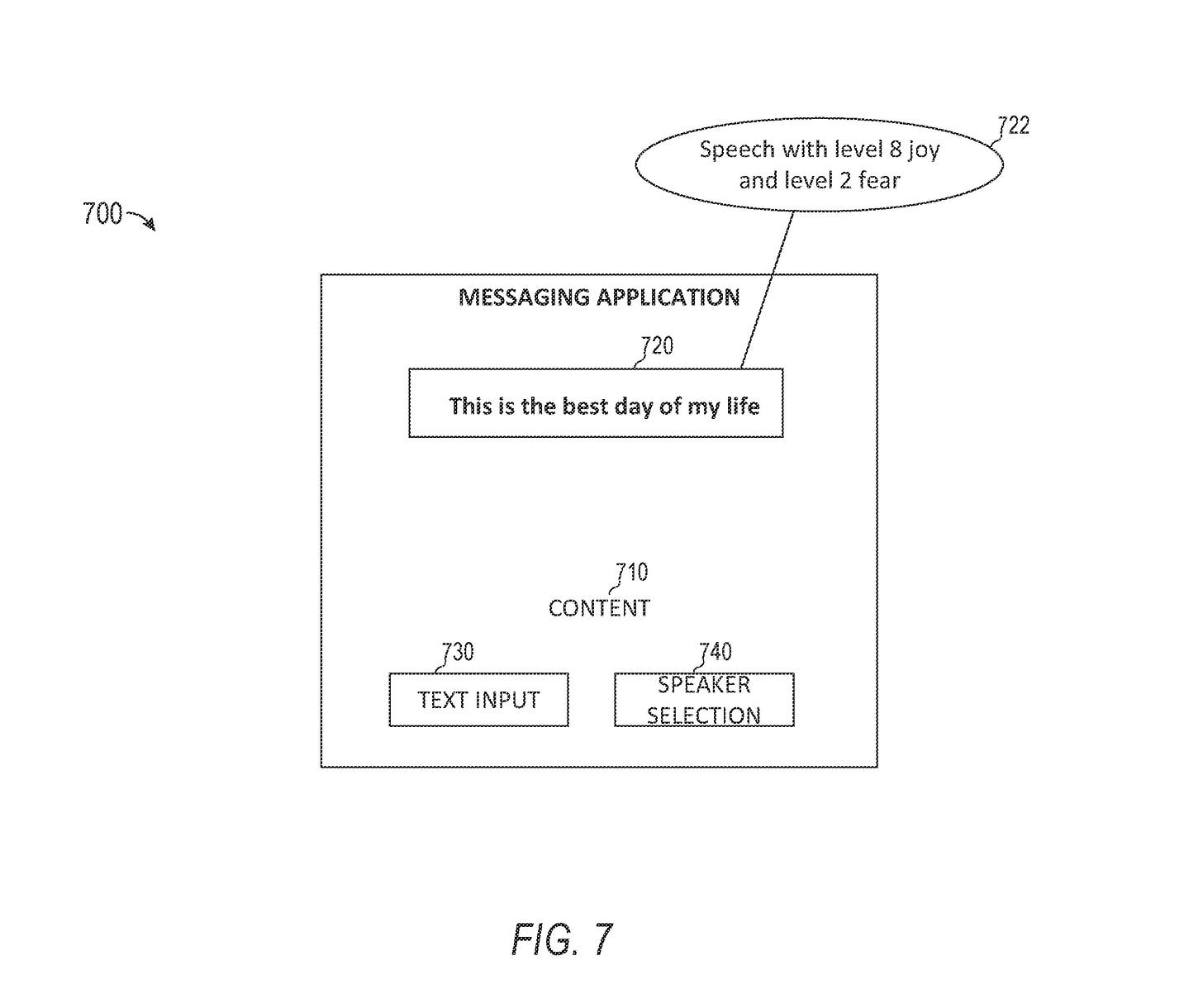

The social media company is seeking to patent a system for “emotion-based text to speech.” Snap’s system uses AI to derive emotions from text, and uses that input to help its text-to-speech systems give more realistic output.

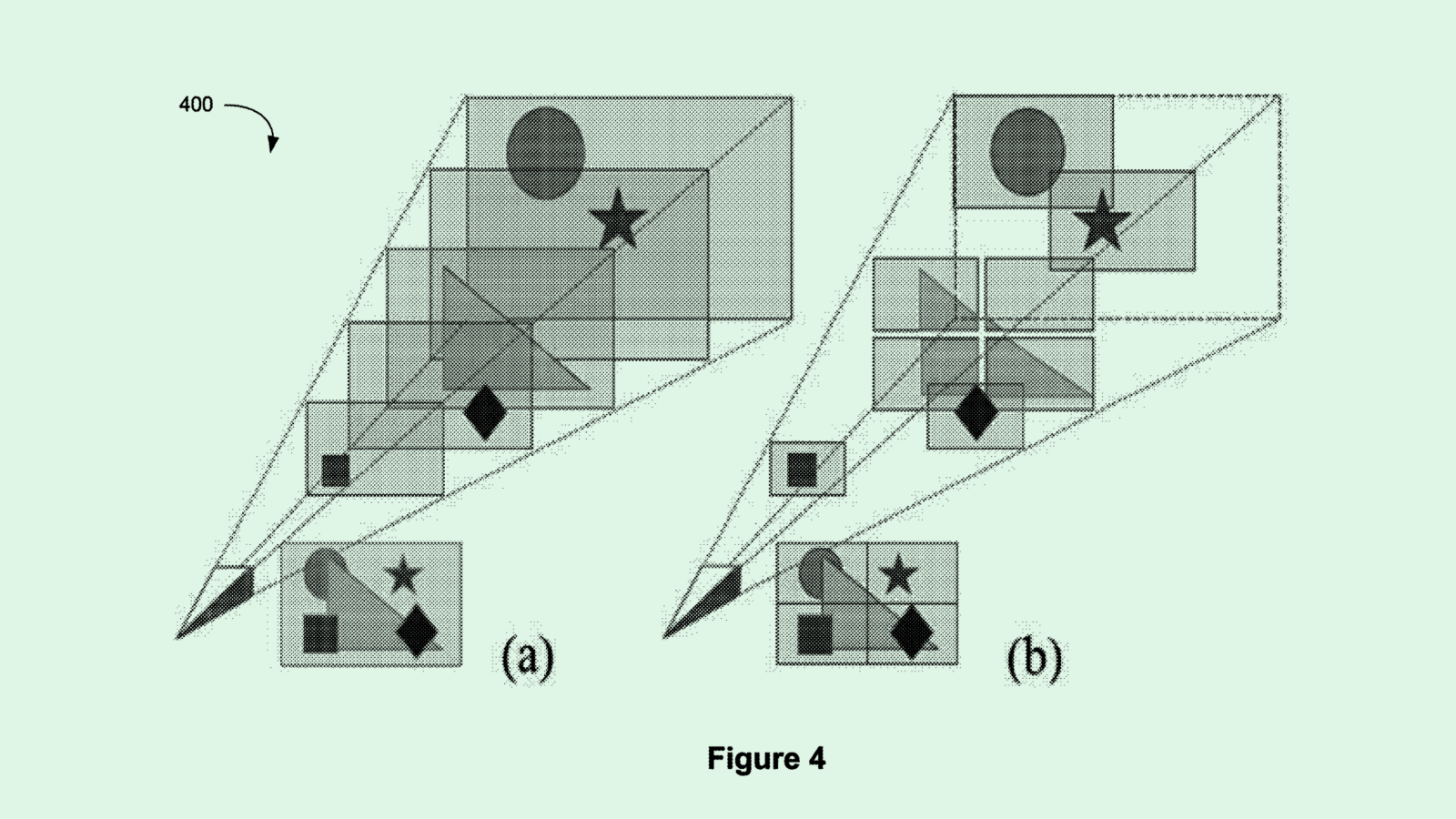

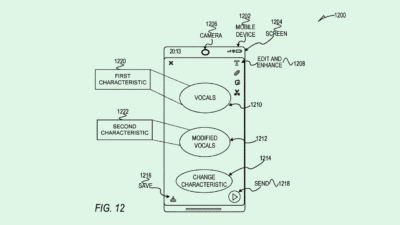

First, the system applies machine learning to the string of text to “compute a level of an emotion” associated with the words in that text string. This calculation helps the text-to-speech system decide how to mix and combine different emotions to get the appropriate output. For example, if the machine learning model decides some words in the sentence are neutral, and some are happy, it’ll meet somewhere in the middle.
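To make the "meet somewhere in the middle" idea concrete, here is a minimal sketch of how per-word emotion levels could be blended into one sentence-level mix. It is an illustration only, not Snap's method: the patent describes a machine learning model, while `TOY_LEXICON`, `word_emotions` and `blend_emotions` are hypothetical names invented here, and simple averaging is an assumed way of combining the scores.

```python
from collections import defaultdict

# Hypothetical per-word scores standing in for the patent's machine learning model.
# Each word maps to (emotion, level in [0, 1]); unknown words count as fully neutral.
TOY_LEXICON = {
    "love": ("happy", 1.0),
    "great": ("happy", 0.5),
    "hate": ("angry", 1.0),
}


def word_emotions(text):
    """Return one (emotion, level) pair per word in the text."""
    words = text.lower().split()
    return [TOY_LEXICON.get(w.strip(".,!?"), ("neutral", 1.0)) for w in words]


def blend_emotions(text):
    """Average per-word levels into a sentence-level emotion mix that sums to 1."""
    pairs = word_emotions(text)
    if not pairs:
        return {"neutral": 1.0}
    totals = defaultdict(float)
    for emotion, level in pairs:
        totals[emotion] += level
        # Whatever share of the word the emotion does not claim is treated as neutral.
        totals["neutral"] += 1.0 - level
    # Assumption: plain averaging across words; a real system may weight words differently.
    return {emotion: total / len(pairs) for emotion, total in totals.items() if total > 0}


if __name__ == "__main__":
    print(blend_emotions("I love this"))
```

In this toy version, a sentence with one fully happy word and two neutral words comes out roughly one-third happy and two-thirds neutral, which is the kind of in-between result the filing describes.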

While in some examples an AI model determines these emotions, in others, Snap notes that users are allowed to give input via an “emotion classification and emotion intensity module.”

Finally, all of this data is fed to an “acoustic generator” and a neural network-based vocoder, which normalize the text and generate the audio. That audio is then played back during the display of an image, video or augmented reality experience.

While text-to-speech systems are far from a new concept, conveying emotion through these systems and creating human-like speech from scratch can be difficult, Snap noted. “These systems can generate audio that speaks text in a neutral emotion which results in a cold, impassive and discouraging experience.”

Emotional understanding is an incredibly difficult barrier for AI to overcome, said Eldad Postan-Koren, co-founder and CEO of Winn.AI. This can be seen in speech recognition contexts, as companies like Amazon research how their smart speakers can better understand user requests by listening for emotion. Snap is essentially trying to solve the reverse, deriving emotion from text by breaking it down word by word, and translating it into a voiceover.

But several roadblocks stand in the way. For one, every person emotes differently, and many people struggle to convey emotion over text generally. (What did that extra exclamation point in your friend’s text really mean?)

Another obstacle is the cultural differences that exist in communication, Postan-Koren said. For example, he said, as someone who lives in Israel, he follows different norms of communication than someone from the US. An AI that can nail all of the minute differences in global communication is practically “science fiction,” he said.

“In order to 100 percent understand you, I need to know,” said Postan-Koren. “I’d need to know the way you communicate, your personal background, your cultural background.”

This barrier may be why Snap’s patent specifies that it could get some direction from its users: In some implementations, the system allows users to pick which emotions they would like to convey in their text-to-speech, rather than making a socially inept machine learning model do it.

One last note: While this patent focuses on text-to-speech, understanding sentiment, whether from text or audio, can be a lucrative metric in advertising. Given that Snap’s revenue sank even lower in Q2, any tech that helps it better understand users could be to the company’s benefit.

Have any comments, tips or suggestions? Drop us a line! Email us at admin@patentdrop.xyz or shoot us a DM on Twitter @patentdrop.