Wells Fargo Patent Highlights Fatal Flaw in Deepfake Detection Models

Synthetic content is becoming more realistic by the day. Can detection tech keep up?


Can you tell when it’s a bot at the other end of the line?

Wells Fargo wants to help you decipher what’s real and what’s fake. The company filed a patent application for “synthetic voice fraud detection,” technology designed to catch deepfakes even when they are built from authentic voice samples.

“Advances in AI technology have made it possible to create highly convincing synthetic voices that can mimic real individuals,” Wells Fargo said in the filing. “As synthetic voice technology becomes more sophisticated, detecting synthetic voices becomes more difficult.”

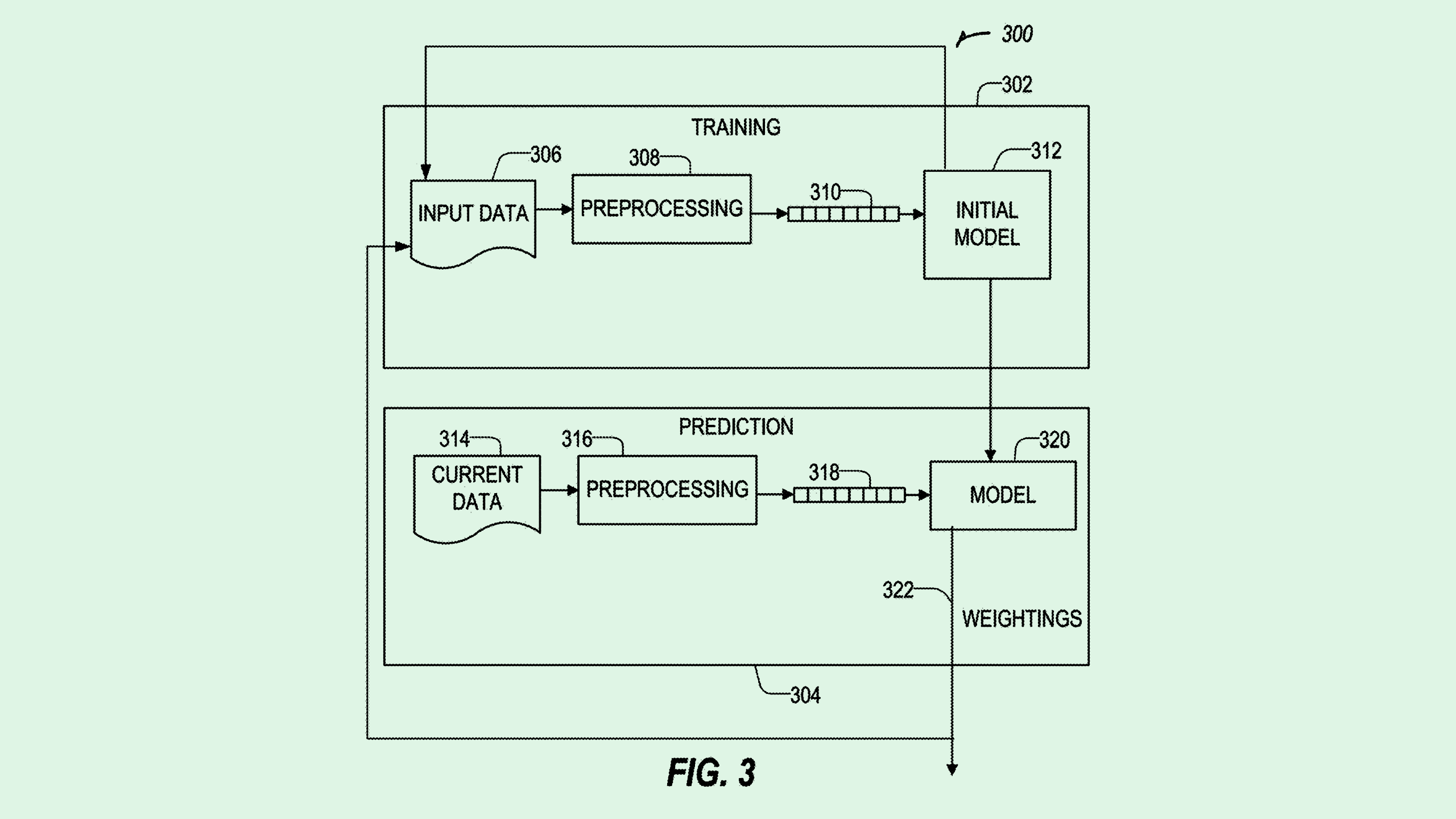

Wells Fargo’s system first collects samples of a person’s voice and normalizes them, removing background noise and adjusting the volume where necessary. It then generates a synthetic clone of that voice and feeds both the authentic and the cloned recordings to a machine-learning model for training.

From this, the model learns to spot subtle indicators and inconsistencies that separate real voice clips from synthetic ones. These could include audio artifacts left by generation algorithms, unnatural variations in pitch or timing, or differences in background-noise patterns or harmonic structure.
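The article doesn't say which features or model the filing actually uses, so the sketch below is only a toy illustration of the pipeline described above, built on our own assumptions: clips are lists of float samples in [-1, 1]; a simple noise gate stands in for background-noise removal; per-frame zero-crossing rate and RMS energy stand in for pitch and loudness; and a nearest-centroid classifier stands in for the machine-learning model. The names `normalize_clip`, `features`, `NearestCentroid`, `train_detector`, and the `clone_voice` callback (a placeholder for a voice-cloning model) are all hypothetical, not Wells Fargo’s method.

```python
# Hypothetical toy sketch of a real-vs-cloned voice detector.
# Not Wells Fargo's patented method; every name here is illustrative.
import math
from statistics import mean, pstdev
from typing import Callable, Dict, List, Sequence

Clip = List[float]


def normalize_clip(samples: Sequence[float], target_peak: float = 0.9,
                   noise_floor: float = 0.02) -> Clip:
    """Crude cleanup: gate out quiet background noise, then rescale volume."""
    # Noise gate (assumed stand-in for noise removal): quiet samples become 0.
    gated = [s if abs(s) >= noise_floor else 0.0 for s in samples]
    peak = max((abs(s) for s in gated), default=0.0)
    if peak == 0.0:
        return gated  # silent clip: nothing to rescale
    # Volume adjustment: scale so the loudest sample reaches target_peak.
    return [s * (target_peak / peak) for s in gated]


def features(samples: Sequence[float], frame_size: int = 256) -> List[float]:
    """Return [mean ZCR, ZCR spread, loudness spread] over fixed-size frames."""
    frames = [samples[i:i + frame_size]
              for i in range(0, len(samples) - frame_size + 1, frame_size)]
    if not frames:
        raise ValueError("clip shorter than one frame")
    # Zero-crossing rate per frame: a rough proxy for pitch.
    zcr = [sum((a >= 0) != (b >= 0) for a, b in zip(f, f[1:])) / (len(f) - 1)
           for f in frames]
    # RMS energy per frame: a rough proxy for loudness.
    rms = [math.sqrt(sum(x * x for x in f) / len(f)) for f in frames]
    # Spreads measure how much pitch and loudness vary across the clip.
    return [mean(zcr), pstdev(zcr), pstdev(rms)]


class NearestCentroid:
    """Toy classifier: label 0 = real voice, 1 = synthetic clone."""

    def fit(self, X: Sequence[Sequence[float]], y: Sequence[int]) -> "NearestCentroid":
        self.centroids: Dict[int, List[float]] = {}
        for label in set(y):
            rows = [x for x, lab in zip(X, y) if lab == label]
            # Centroid = per-feature mean of that class's feature vectors.
            self.centroids[label] = [mean(col) for col in zip(*rows)]
        return self

    def predict(self, x: Sequence[float]) -> int:
        # Choose the class whose centroid is closest in Euclidean distance.
        return min(self.centroids, key=lambda lab: math.dist(x, self.centroids[lab]))


def train_detector(real_clips: Sequence[Sequence[float]],
                   clone_voice: Callable[[Clip], Clip]) -> NearestCentroid:
    """Pair each normalized real clip with a clone of it, then fit the model."""
    X: List[List[float]] = []
    y: List[int] = []
    for raw in real_clips:
        real = normalize_clip(raw)
        fake = normalize_clip(clone_voice(real))  # hypothetical cloning step
        X += [features(real), features(fake)]
        y += [0, 1]
    return NearestCentroid().fit(X, y)
```

A caller would pass recorded clips plus whatever cloning model is available, then score new audio with `detector.predict(features(normalize_clip(clip)))`. The features are left unscaled and the classifier is deliberately simple to keep the sketch short; a practical detector would more plausibly learn from richer spectral inputs.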

Wells Fargo is far from the first company seeking to tackle deepfakes. Sony filed a patent application that sought to use blockchain for deepfake detection, Google’s patent history includes a “liveness” detector for biometric security, and Intel sought to patent a way to make deepfake detectors more racially inclusive.

The fatal flaw with synthetic content detection systems, however, is one that’s present throughout the cybersecurity industry: When you build a 10-foot wall, threat actors will counter it with a 12-foot ladder. And defenses take far longer to build than to break.

This point is especially relevant as AI continues giving threat actors more ammo. Because the technology makes increasingly realistic deepfakes easier to produce, detection tools can become outdated faster than ever. As Joshua McKenty, co-founder and CEO of Polyguard, told CIO Upside last week: “Every tool you build today, you’re putting into the hands of an attacker.”