Oracle Patent Keeps Large Language Models From Spilling Secrets

Oracle’s patent highlights a key issue that AI developers are still reckoning with: data privacy.


What goes into a chatbot eventually comes out.

Oracle wants to make those inputs and outputs a little more confidential. The company is seeking to patent a system providing “entity relationship privacy for large language models” that would protect confidential information in datasets used in AI training.

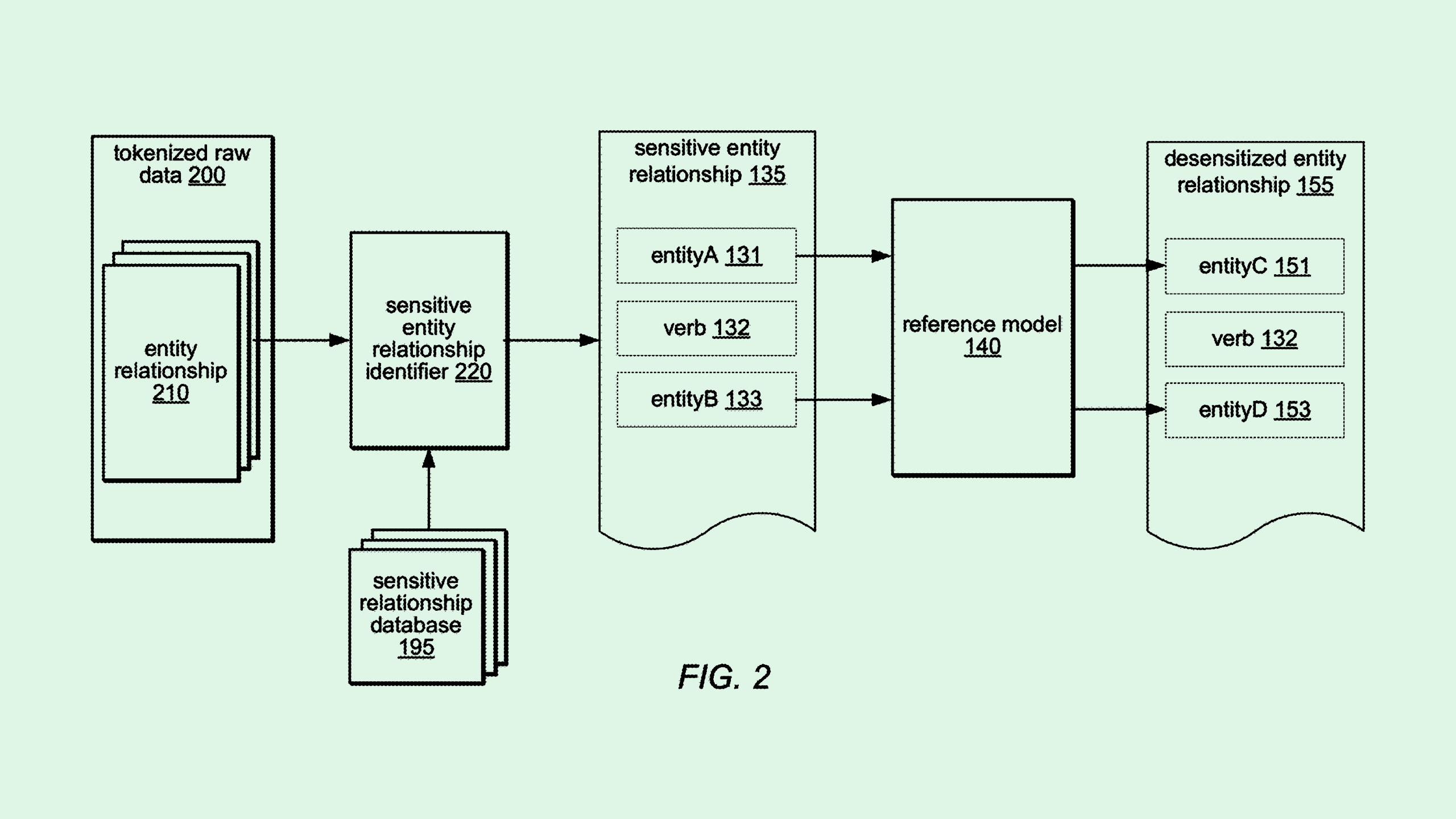

Starting with raw data, Oracle’s tech scans for “sensitive entity relationships.” For example, it may check whether a name is connected to things like medical conditions if the data is healthcare-related, or to transaction information if the data is financial.
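To make the scanning step concrete, here is a minimal Python sketch. It assumes the raw data has already been parsed into (subject, relation, object) triples and flags a triple as sensitive when its relation type appears in a per-domain list. The relation names, the `SENSITIVE_RELATIONS` table and the `find_sensitive_relationships` function are all hypothetical; the filing’s actual detection method is likely more sophisticated than a lookup table.

```python
from dataclasses import dataclass

# Hypothetical relation types treated as sensitive in each domain.
# These categories are illustrative assumptions, not taken from Oracle's filing.
SENSITIVE_RELATIONS = {
    "healthcare": {"has_condition", "prescribed", "treated_for"},
    "financial": {"made_transaction", "holds_account", "owes"},
}


@dataclass(frozen=True)
class Relationship:
    subject: str   # e.g. a person's name
    relation: str  # e.g. "has_condition"
    obj: str       # e.g. "Type 2 diabetes"


def find_sensitive_relationships(records, domain):
    """Return the relationships whose relation type is sensitive in this domain.
    Unknown domains have no sensitive relations, so nothing is flagged."""
    sensitive = SENSITIVE_RELATIONS.get(domain, set())
    return [r for r in records if r.relation in sensitive]


records = [
    Relationship("John Smith", "has_condition", "Type 2 diabetes"),
    Relationship("John Smith", "lives_in", "Austin"),
]
flagged = find_sensitive_relationships(records, "healthcare")
print(flagged)
```

Only the diagnosis link is flagged; the address record passes through because `lives_in` is not on the healthcare list in this sketch.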

For each sensitive link that’s identified, the system will swap out one piece of information for something that isn’t sensitive, with the new information generated by an AI model. For instance, if a person named “John Smith” is connected to the condition “Type 2 diabetes,” it may switch out the person’s name for a fake one, preserving the structure of the data as well as the person’s privacy. That dataset can then be used to fine-tune a large language model without exposing people’s personal information.
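The swap can be sketched the same way. In this minimal Python example, the subject of each sensitive triple is replaced with a surrogate name, and a given real name always maps to the same surrogate, so the shape of the data survives. One deliberate simplification: Oracle’s filing describes replacements generated by an AI model, whereas this sketch draws from a small fixed list of made-up names. `FAKE_NAMES` and `pseudonymize` are hypothetical.

```python
import random

# Hypothetical pool of surrogate names. The filing describes replacements
# generated by an AI model; a fixed list stands in for that model here.
FAKE_NAMES = ["Alex Morgan", "Jordan Lee", "Casey Rivera", "Taylor Brooks"]


def pseudonymize(triples, sensitive_relations, seed=0):
    """Replace the subject of every sensitive (subject, relation, object)
    triple with a surrogate name. A given real name always receives the
    same surrogate, so links between sensitive records are preserved."""
    rng = random.Random(seed)
    pool = FAKE_NAMES[:]
    rng.shuffle(pool)
    mapping = {}
    result = []
    for subject, relation, obj in triples:
        if relation in sensitive_relations:
            if subject not in mapping:
                if not pool:
                    raise ValueError("ran out of surrogate names")
                mapping[subject] = pool.pop()
            subject = mapping[subject]
        result.append((subject, relation, obj))
    return result, mapping


records = [
    ("John Smith", "has_condition", "Type 2 diabetes"),
    ("John Smith", "prescribed", "metformin"),
]
anonymized, mapping = pseudonymize(records, {"has_condition", "prescribed"})
print(anonymized)
```

The consistent mapping is the design choice that matters: both records end up attached to the same fictional person, so a model fine-tuned on them still sees one patient with two linked attributes, just not the real patient.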

Oracle’s patent highlights a key issue that AI developers are still reckoning with: data privacy. “[The] reproduction of training data is also at the heart of privacy concerns in LLMs as LLMs may leak training data at inference time,” Oracle said in the filing.

AI models are like parrots. They don’t actually learn facts; they repeat what they hear and predict what the next word in a given phrase will be. With the right poking and prodding, a threat actor may be able to extract portions of the data a model was trained on.
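A toy example shows why next-word prediction can leak training data. The Python sketch below builds a bigram model, which only counts which word follows which, trains it on two made-up sentences and then completes a short prompt. Real LLMs are neural networks operating on tokens and are far more complex, so treat this strictly as an illustration of memorization, not of how any production model works.

```python
from collections import Counter, defaultdict


def train_bigram(corpus):
    """Count, for each word, which words follow it in the training text."""
    follows = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.split()
        for prev, nxt in zip(words, words[1:]):
            follows[prev][nxt] += 1
    return follows


def complete(follows, prompt, max_words=10):
    """Greedily append the most frequent next word until no continuation exists."""
    words = prompt.split()
    for _ in range(max_words):
        candidates = follows.get(words[-1])  # .get does not create empty entries
        if not candidates:
            break
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


corpus = [
    "patient John Smith was diagnosed with Type 2 diabetes",
    "the clinic opened a new wing",
]
model = train_bigram(corpus)
print(complete(model, "patient John"))
# prints "patient John Smith was diagnosed with Type 2 diabetes"
```

Given only “patient John,” the model reproduces the full training sentence, diagnosis included, which is the kind of leak that swapping in a fake name before training is meant to defuse.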

This presents a major problem for deploying AI in contexts where data regulations are tighter. Healthcare, for example, faces strict data-security protocols related to the Health Insurance Portability and Accountability Act, or HIPAA. Financial firms similarly grapple with strict legal requirements.

Oracle isn’t the only company seeking to crack the data security problem. Microsoft, JPMorgan Chase and IBM have similarly sought patents for ways to redact and limit personal data in training datasets. But while guardrails exist, the pressure to adopt AI quickly means enterprises are often more concerned with speedy innovation and deployment than with data protection.