Meta Stamps Out its Responsible AI Team

Meta has disbanded a team in charge of making sure the company developed AI in an ethical way, according to The Information.


“Quick! While everyone’s looking at OpenAI…”

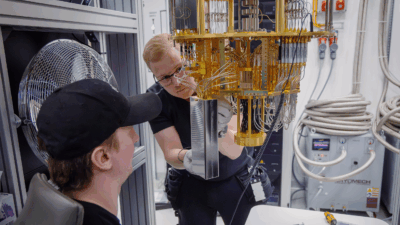

Meta has disbanded a team in charge of making sure the company developed AI in an ethical way, according to a report on Saturday by The Information. Most of the already-weakened team was reportedly folded into Meta’s generative AI workforce. That makes for amusing timing, given that last weekend’s headlines were dominated by OpenAI’s board suddenly jettisoning CEO Sam Altman, frantically trying to get him back, then losing him to OpenAI’s biggest investor, Microsoft. When better to put all your firepower into building rival products, without having to slow down because you might, say, destroy humanity?

Who Needs Ethics Anyway?

While the report signals the death knell of Meta’s Responsible AI (RAI) team, it was reportedly already on life support. Formed in 2020, RAI had seen its headcount halve by last month, according to a Business Insider report. One source told BI that the team’s focus had shifted away from mitigating potential harm caused by AI to compliance, or “trying to make sure we don’t break any laws or get sued again.” Now that’s responsible.

This phenomenon isn’t restricted to Meta: Earlier this year, following the grim realization that they were no longer financially bulletproof, many Big Tech companies either shrank or cut their AI ethics teams, a revealing moment if ever there was one. For Meta, however, this is very much elective surgery:

- In October, Meta reported its most profitable quarter in two years. Mark Zuckerberg’s “year of efficiency,” during which the company laid off over 21,000 workers, should be drawing to a close soon.

- The RAI shutdown also comes as lawmakers around the world wrestle with how to legislate AI, and going without staffers focused on harm (not to mention compliance) looks like an added risk.

So Wrong It’s Copyright: Not everyone is shrugging off ethics. Stability AI, the company behind generative AI image tool Stable Diffusion, saw an executive depart on Friday over an ethical spat. Ed Newton-Rex, Stability’s now-former head of audio, told the BBC he left because Stability believes it should be able to use copyrighted material in its training data without asking the copyright holder’s permission. Stability is being sued by Getty Images for that exact reason, although Getty also recently announced its own AI image-generating tool and said it would front the legal costs for any user who was sued over an image they made with it. Seems blurry to us.