Disney’s safe robots, Microsoft’s visual search engine & Facebook’s live sports highlights

1. Disney – robots that are safe around humans

Disney’s latest filing looks to reduce the ballistic risk that robots with rapidly moving components pose to nearby humans.

There are a number of situations where robots work in close collaboration with humans. For instance, in factories, robots are often performing tasks with humans working nearby. In theme parks, animatronic devices are often entertaining visitors on certain rides. In the future, we may have robots in our homes providing care to the elderly, to our children, as well as performing domestic tasks.

As robots and humans increasingly operate in close proximity, there are certain risks that arise. For instance, imagine if a robot mimics a human by waving its hand. If there were to be a mechanical failure, such as a joint breaking, the robot’s hand could break off and become a projectile. Given the speed and weight of these components, this presents a huge safety risk.

Instead of limiting robot-human interaction, Disney is thinking about creating a ‘safety retention suit’ that covers parts of the robot. This material is optimised to be both flexible and durable, so that it enables the robot to move relatively freely, but retains any component if it were to break off in a mechanical failure.

In the filing, Disney describes the suit as being made with high-tenacity nylon or a synthetic fiber with a tensile strength greater than nylon, such as Kevlar.

Because today’s robots are mostly static and hardly interactive, ballistic safety is a problem that hasn’t required much thought. Disney’s patent filing pre-empts it, especially for companies that are working on the cutting edge of robotics.

This filing is particularly interesting because it shows how Disney’s focus on building emotional connections between characters and humans means that the company has an edge when it comes to future industries such as robotics or AR/VR based entertainment.

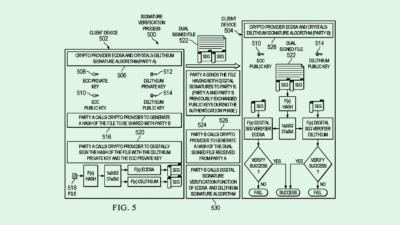

2. Microsoft – interactive visual search engine

Microsoft is working on a new type of visual search engine that acts upon information from users in a multi-step interaction via a chat bot.

The search engine will include a “visual intent module” that essentially identifies topics that correspond to different objects found in an image. If a user has submitted text input too, the search engine will look for keywords in the text and match them against the objects identified in the image.

For example, if a user submits an image of a flower and asks “what time of year does this flower bloom?”, Microsoft’s intent module will infer that the flower identified in the image relates to the question being asked. So if the flower in the image is identified as a tulip, Microsoft’s chat bot will then search for “what time of year do tulips bloom?” and provide the results back to the user.

With this chat bot, there can be a number of back-and-forth interactions so that the intent can be identified with sufficiently high confidence. For instance, if the image that a user has taken is too blurred, the chat bot could request the user to take another image.
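As a rough illustration of the flow described above, here is a minimal Python sketch of a single chat turn. Everything in it is an assumption made for illustration rather than something taken from the filing: the `Detection` class, the `next_turn` function, the 0.8 confidence threshold and the simple "this <category>" substitution are all hypothetical, and a real system would use a vision model and proper language understanding instead of string matching.

```python
import re
from dataclasses import dataclass
from typing import List


@dataclass
class Detection:
    """One object found in the image (hypothetical structure)."""
    label: str         # specific name, e.g. "tulip"
    category: str      # broader type, e.g. "flower"
    confidence: float  # 0.0 to 1.0


# Assumed threshold; the filing only says confidence must be "sufficiently high".
CONFIDENCE_THRESHOLD = 0.8


def next_turn(question: str, detections: List[Detection]) -> dict:
    """Return either a rewritten search query or a follow-up request to the user."""
    lowered = question.lower()
    # Keep only detections that the question refers to, e.g. "this flower".
    matches = [d for d in detections if f"this {d.category}" in lowered]
    if not matches:
        return {"action": "ask_user",
                "message": "Which object in the image do you mean?"}

    best = max(matches, key=lambda d: d.confidence)
    if best.confidence < CONFIDENCE_THRESHOLD:
        # Too uncertain (for example a blurred photo): ask for another image.
        return {"action": "ask_user",
                "message": "The image is unclear. Could you take another photo?"}

    # Swap the vague reference for the identified object.
    query = re.sub(rf"this {re.escape(best.category)}", f"the {best.label}",
                   question, flags=re.IGNORECASE)
    return {"action": "search", "query": query}


clear = next_turn("What time of year does this flower bloom?",
                  [Detection("tulip", "flower", 0.93)])
blurry = next_turn("What time of year does this flower bloom?",
                   [Detection("tulip", "flower", 0.40)])
print(clear)
print(blurry)
```

The first call produces a search action with the flower name substituted in, while the second falls back to asking the user for a better image, which mirrors the back-and-forth the filing describes.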

This filing is particularly interesting because it looks like Microsoft is looking to use a chat interface as a new angle of attack when it comes to search. Imagine if Microsoft’s visual search engine were accessible to everyone via WhatsApp. Or if Microsoft provided its visual search engine as an API for websites and apps to deploy inside their own products.

Moreover, Microsoft seems to be building an interesting product flywheel with its deep-tech work in natural language processing and computer vision being deployed for enterprise (e.g. in Microsoft Teams) and now consumer.

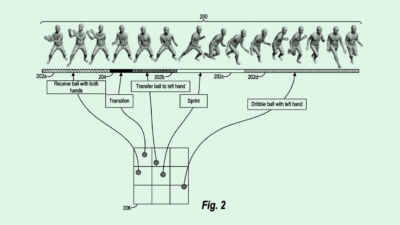

3. Facebook – automatic digital representations of sports events

Facebook is thinking about automatically creating event recaps, especially for sports events.

If someone misses a sports game, just seeing the final score fails to convey the drama and emotion that may have been experienced during the game. Facebook’s patent filing looks to identify the emotions experienced by the live viewers and convey them in the recap.

To determine the emotions felt during an event, Facebook will look at the following signals:

number of comments posted during the various portions of the event

how the scores change over time

the loudness of a crowd watching the event

the speed and quality of speech of the event announcers

With this, Facebook will look to create digital / visual representations of key emotional moments of the event, instead of just showing the final score.

Why is this interesting?

By having social features embedded into the live streaming experience, Facebook is able to capture a lot of signals around which parts of the video are the most engaging. Moreover, with a huge userbase, Facebook is able to develop and rapidly iterate its models so that it can effectively capture the most engaging moments in live events. In other words, Facebook has a learning engine for creating recaps of the most engaging moments.

By summarising live events down to their most engaging moments, Facebook may be looking to do a better job of showcasing live sports post-event. When Facebook does bid for the rights to host live sports, being able to display the content post-event, automatically and in a way that’s engaging, contributes to viewership and potential monetisation.
