Facebook Uses Your Voice (plus more from Netflix)

voice avatars, automated trailers & shared digital world space

1. Facebook – voice models of users

So this is one of those patent applications that is as creepy as it is fascinating.

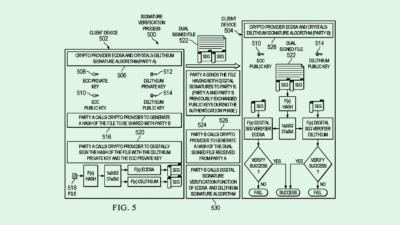

Facebook is thinking about generating voice models for users that are designed to resemble their actual voice.

In the filing, they describe how voice models could be useful in the context of messaging. For example, if you’re driving in a car and I send you a text message, Facebook could use my voice model to read out the text message using my voice.

Hearing the message in the sender’s digital voice would help the recipient figure out who it’s from without diverting their attention away from their current activity – e.g. driving.

This could also be particularly useful in group chats with a number of participants. Using personalised voice models to read out the messages removes the need for a system-generated preamble explaining who sent each message.

To generate the voice models, Facebook would ask users to provide audio samples of them reading out certain phrases. Neural networks would then be used to train voice models of the users.

Now, I’m pretty unconvinced of the utility of personalised voice models for reading out messages while driving a car. But one context where personalised voice models could become interesting is in helping people achieve digital immortality.

Digital immortality is when someone’s body dies, but they live on as a digital avatar that people can continue to interact with. In theory, we could fine-tune a text-generation model such as GPT-3 on everything someone has written online. Then, with a personalised voice model, we could turn the AI-generated text into speech. Put those together, and people could meaningfully interact with avatars of people who have passed away.

I doubt that Facebook are necessarily thinking too much about this idea of digital immortality. But they are thinking about the metaverse, VR and avatars. At some point, all of these things will converge.

2. Netflix – automated trailers

When you land on the Netflix homepage, the auto-playing trailers of content that appear when you hover over a video are extremely important in influencing your decision on which movie / series to invest time in.

In this patent filing, Netflix describes the current process of creating these trailers as pretty labour intensive. Firstly, an editorial assistant will watch the entire piece of content. They’ll then identify portions of content that would be interesting to present as trailers. An editor will then edit the interesting portions into a trailer. And assuming Netflix is A/B testing different trailers for each piece of content, there are probably many trailers being created for each piece of content.

Besides being labour intensive, another disadvantage of this manual approach is the potential for human biases. For example, if an editorial assistant doesn’t like the look of one actor, they may subconsciously minimise the amount of screen time that actor has in the trailer. This may not align with the preferences of a typical user.

Moreover, human curation of these trailers doesn’t allow for deep personalisation. For example, as a British Asian, I may appreciate seeing characters that reflect my upbringing. A movie might have an Asian character in a very small part. A trailer personalised for me could highlight that character in a bid to make the movie more appealing to me.

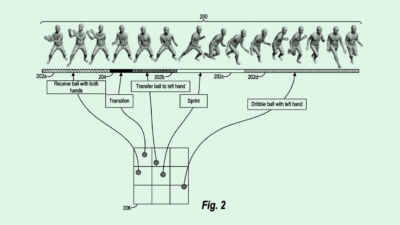

Netflix’s solution is to use facial recognition to determine the appearance of target characters. Then different sequences of shots from the content will be analysed to calculate the percentage of frames within that sequence that include the target characters. Finally, based on a specified heuristic of target frame percentages for different characters – “a clip recipe” – clips that fit the target percentages will be stitched together to create a sequence to feature in a trailer.
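To make the “clip recipe” idea concrete, here is a minimal sketch in Python. It assumes a face recogniser has already labelled each frame with the characters it contains. The names `ShotSequence`, `character_share`, `pick_clips` and the `tolerance` parameter are hypothetical illustrations, not terms from the filing, and the matching rule is just one plausible reading of “fits the target percentages”.

```python
from dataclasses import dataclass


@dataclass
class ShotSequence:
    name: str
    # One set per frame: the characters a (hypothetical) face
    # recogniser detected in that frame.
    frames: list[set[str]]


def character_share(seq: ShotSequence, character: str) -> float:
    """Fraction of frames in the sequence that include the character."""
    if not seq.frames:
        return 0.0
    hits = sum(1 for faces in seq.frames if character in faces)
    return hits / len(seq.frames)


def matches_recipe(seq: ShotSequence, recipe: dict[str, float],
                   tolerance: float = 0.1) -> bool:
    """True if every character's share is within `tolerance` of its target."""
    return all(
        abs(character_share(seq, character) - target) <= tolerance
        for character, target in recipe.items()
    )


def pick_clips(sequences: list[ShotSequence], recipe: dict[str, float],
               tolerance: float = 0.1) -> list[str]:
    """Names of the sequences that fit the recipe, in their original order."""
    return [s.name for s in sequences if matches_recipe(s, recipe, tolerance)]


# Made-up example data: three short sequences of four frames each.
sequences = [
    ShotSequence("opening", [{"lead"}, {"lead", "sidekick"}, {"lead"}, set()]),
    ShotSequence("chase", [{"sidekick"}] * 4),
    ShotSequence("finale", [{"lead", "sidekick"}, {"lead"}, {"lead"}, {"lead"}]),
]

# Assumed recipe: the lead in roughly 80% of frames, the sidekick in roughly 25%.
recipe = {"lead": 0.8, "sidekick": 0.25}

print(pick_clips(sequences, recipe))
```

A personalised trailer would then amount to swapping in a different recipe per viewer, for example one that raises the target share for a character the viewer is likely to relate to.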

Given how much money Netflix spends on building its content library and how important content consumption is for minimising subscriber churn, deeply personalised trailers that make any given piece of content relevant to an individual could be an interesting way of maximising value from its content library.

3. Facebook – shared artificial reality environments

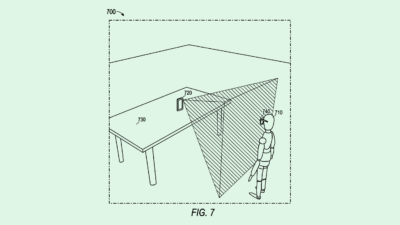

This patent application from Facebook looks at how multiple people in the same location could interact in a shared artificial reality environment when wearing VR headsets.

Essentially, multiple VR headsets in a shared space will cooperate to share data on the pose and the body positions of each of the participants. Within each person’s headset, the shared data will be used to render the positions of all of the other participants.

The advantage of this system is that it doesn’t require external cameras or sensors to determine the positions of each participant. Therefore, creating shared VR experiences could work in a simpler and cheaper way than existing systems.
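The cooperative sharing described above can be sketched roughly as follows. This is an illustration under stated assumptions, not the filing’s design: the `Pose` fields, the `Headset` class and the `share_poses` function are all hypothetical, and a direct function call stands in for whatever wireless transport real headsets would use.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Pose:
    # Assumed representation: position in metres plus a heading angle.
    x: float
    y: float
    z: float
    yaw_degrees: float


@dataclass
class Headset:
    user: str
    own_pose: Pose
    # Latest pose received from each other participant.
    peer_poses: dict[str, Pose] = field(default_factory=dict)

    def receive(self, sender: str, pose: Pose) -> None:
        """Store a pose shared by another headset, ignoring our own."""
        if sender != self.user:
            self.peer_poses[sender] = pose

    def participants_to_render(self) -> dict[str, Pose]:
        """Everyone this headset should draw in its wearer's view."""
        return dict(self.peer_poses)


def share_poses(headsets: list[Headset]) -> None:
    """Each headset sends its own pose to every headset in the room.

    No external camera or sensor is involved: the only inputs are the
    poses the headsets report about their own wearers.
    """
    for sender in headsets:
        for receiver in headsets:
            receiver.receive(sender.user, sender.own_pose)


# Made-up example: three people in the same room.
headsets = [
    Headset("ana", Pose(0.0, 0.0, 1.6, 0.0)),
    Headset("ben", Pose(1.5, 0.0, 1.7, 90.0)),
    Headset("cara", Pose(0.0, 2.0, 1.5, 180.0)),
]
share_poses(headsets)

for h in headsets:
    print(h.user, "renders", sorted(h.participants_to_render()))
```

In a real system this exchange would repeat many times per second as people move, but the principle is the same: every headset contributes what it knows, and every headset renders from the pooled result.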

Why is this interesting?

A common fear about virtual reality is that it shuts people out of the world around them and encourages isolation. This filing hints at the ambition for virtual reality to be a shared, multi-player experience that can be enjoyed by people in the same location. Specifically, the filing mentions that this system could be used in multiple applications, such as teleconferencing, gaming, education, training or simulations.

If there’s one thread that ties together Facebook and Snap’s ambitions, it’s that they both see the future as people enjoying a shared digital world space.