
Plus: Mastercard’s small business chatbot; Adobe’s Waldo-finder

Happy Thursday and welcome to Patent Drop!

Today’s patents are (surprise, surprise!) all AI-based. Google wants to patent tech to make its AI a little more humble; Mastercard wants to help small businesses understand themselves better; and Adobe is breaking down the bigger picture.

But before we get into that, a quick word from today’s Sponsor, ButcherBox. As a Patent Drop reader, you make sure to source your news from only the most high-quality, dependable, and witty of sources (that’s us). But what about when it comes to sourcing your food?

Like your brain, your body needs high-quality fuel – and that means it’s time to stop settling for gas station snacks and Subway runs and start opting for ButcherBox. ButcherBox delivers the highest-quality beef, chicken, pork, and seafood directly to your doorstep. We’re talking the full-blown, grass-fed, free-range, wild-caught good stuff, complete with recipes to make the most of every piece (think brown butter scallops, grilled steak bruschetta, and citrusy herbed lamb).

Ready to up your meat game? New customers can get $100 off with code MARCH100 plus free chicken nuggets in every box for 1 year when they sign up right here.

Anyways, let’s take a peek.

#1. Google’s course corrector

Sometimes AI can be like that one guy in your freshman year politics class who always had something to say: overconfident in responses that weren’t entirely accurate. Google wants to program a little self-doubt.

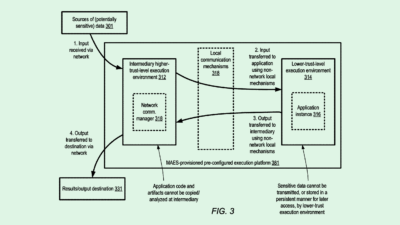

The company filed a patent application for regularizing machine learning models, a technique meant to improve the performance of a trained neural network. With it, Google is tackling the longstanding issue of “overfitting,” the term for when a neural network latches onto its training data so closely that it becomes overconfident and ends up generating poor outcomes for things that aren’t covered in that data.

Overfitting is a fundamental problem in AI development, but if you didn’t take Machine Learning 101 in undergrad, think of it like this: An AI is trained to identify birds by looking at thousands upon thousands of images of birds flying in the sky. If it’s then shown a photo of a plane in the sky, it might classify that as a bird.

Google’s tech tackles this issue by using a “regularizing training data set,” meaning one with a predetermined amount of “noise,” or meaningless data. These sets are created by modifying the labels in its datasets, for example, changing a label that “correctly describes the training item to a label that incorrectly describes the training item.” This way, the neural network is trained not to be overly reliant on its training data, which should help it come up with more accurate answers on data it hasn’t seen.
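For the curious, here’s a minimal sketch of the label-swapping idea in Python. It is not Google’s implementation: the function name `corrupt_labels`, the `noise_rate` parameter, and the choice to pick a wrong class uniformly at random are all assumptions made for illustration. It simply copies a list of integer class labels and deliberately mislabels a fixed fraction of them.

```python
import random

def corrupt_labels(labels, num_classes, noise_rate, seed=0):
    """Hypothetical helper: return a copy of `labels` in which a fixed
    fraction of entries is swapped for a deliberately incorrect class."""
    if not 0.0 <= noise_rate <= 1.0:
        raise ValueError("noise_rate must be between 0 and 1")
    if num_classes < 2:
        raise ValueError("need at least two classes to pick a wrong label")
    rng = random.Random(seed)  # seeded so the noisy set is reproducible
    noisy = list(labels)       # never mutate the caller's clean labels
    # Pick exactly round(noise_rate * n) distinct items to mislabel.
    n_noisy = round(noise_rate * len(labels))
    for i in rng.sample(range(len(labels)), n_noisy):
        # Assumption: choose uniformly among classes that are NOT the true one.
        wrong = [c for c in range(num_classes) if c != labels[i]]
        noisy[i] = rng.choice(wrong)
    return noisy
```

Calling `corrupt_labels(labels, num_classes=3, noise_rate=0.1)` on 20 labels flips exactly two of them. The model would then be trained on the noisy copy, so it can’t become certain that every label it sees is correct.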

“Overfitting may be described as the neural network becoming overly confident in view of a particular set of training data,” Google said in its filing. “When a neural network is overfitted, it may begin to make poor generalizations with respect to items that are not in the training data.”

Overfitting is not a new problem. Engineers have faced it practically since the dawn of AI, and Google’s tech is just one way to solve it, said Swabha Swayamdipta, an assistant professor of computer science at the University of Southern California. Overfitting is a “subclass” of the larger problem of generalization, or how well an AI performs on data different from what it was trained on. For instance, Swayamdipta said, “If you train an AI model on language in newspapers, how will that same model do with language on Twitter?”

“The overfitting issue is like something I believe everyone who has done machine learning research has seen along the development process,” Swayamdipta told me. “But more or less, we know how to deal with it. I was a little surprised to see a patent like this late in the game.”

What might be more interesting about this patent, however, is its timing. As the overfitting problem is age-old, Google has likely been implementing the tech outlined in the patent for a while now. By seeking to patent this tech more recently – filed in November – the company may want to claim this specific type of problem-solving for its own as more developers jump into the AI frenzy.

Though overfitting has largely been solved, working out problems of generalization is crucial as Google continues to push forward on its AI journey. The company opened up its waitlist for Bard AI, its ChatGPT competitor, last week, and hinted at a slew of AI-powered features for its search engine in a blog post in February. “We’re reinventing what it means to search and the best is yet to come,” Prabhakar Raghavan, an SVP at Google, said at an event in February.

Google runs one of the biggest search engines in the world, so getting AI right in search is critical to keep it from unintentionally spreading misinformation on a mass scale.

#2. Mastercard’s small business guru

Mastercard wants to give small merchants their own personal AI mentor.

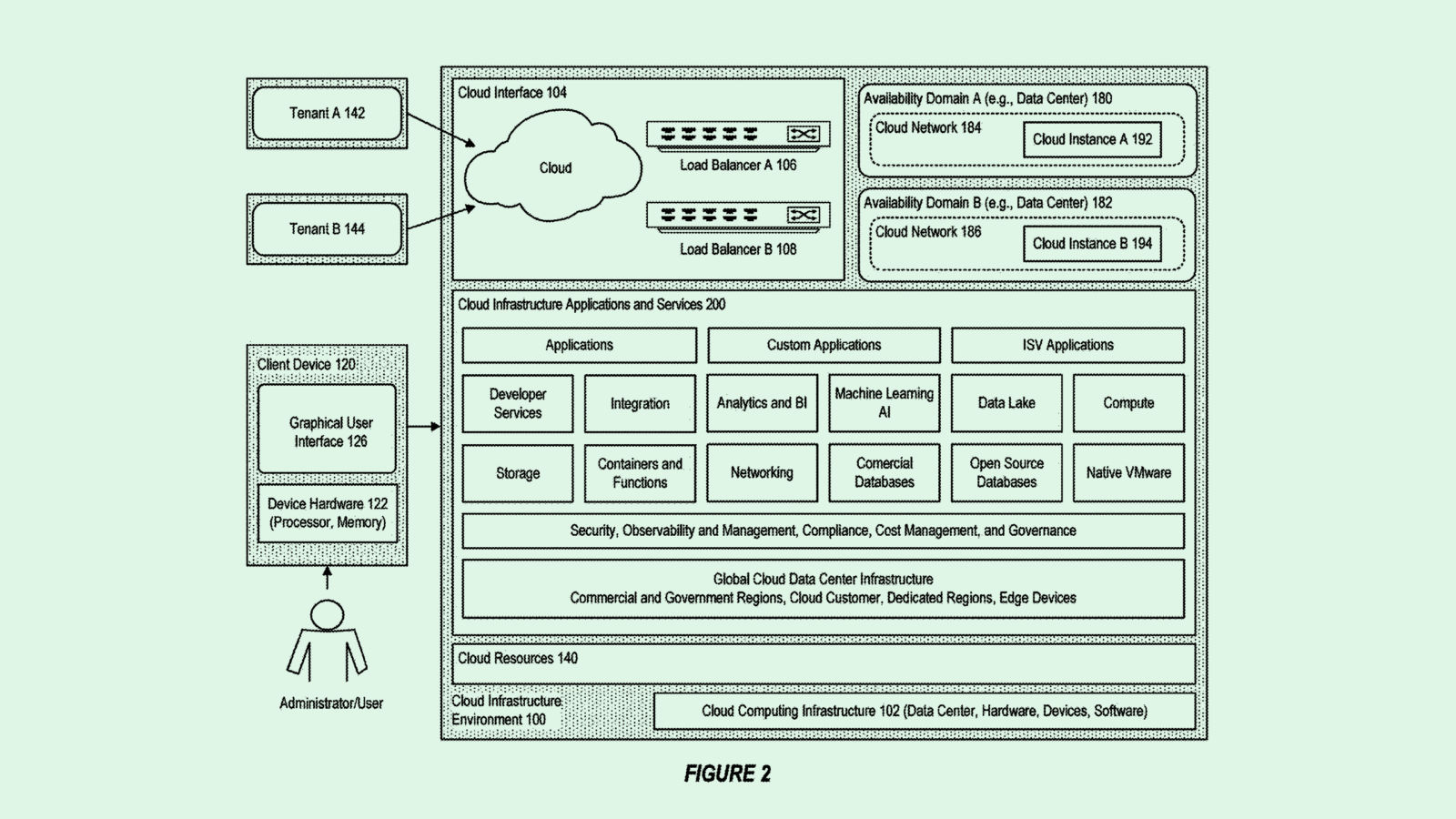

The company filed a patent application for tech that helps merchants and small businesses get data on performance metrics from “intelligence crowd-sourced options.” To break it down: This system gives merchants a chat interface and allows them to ask questions about their customer base and business performance. Using natural language processing, it then pulls answers from data such as transactions, customer demographics, and similar businesses, and breaks them down into comprehensive analytics.

A merchant can compare their business’s performance to others in similar categories without revealing the identity of the other business entities, and ask questions about how to improve their business’s performance.

Think of it as a personalized version of ChatGPT for questions about your business and its audience. For instance, say a merchant asks, “How were sales this month compared to the competition?” The system may say, “Sales were on par with other businesses in your category,” and offer some performance metrics.
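The filing doesn’t spell out how the peer comparison is computed, but a back-of-the-napkin version might look like the Python sketch below, minus the chat interface. Everything here is an assumption for illustration: the `MerchantMonth` record format, the `benchmark` function, the rule of refusing to answer when a category has fewer than five peers (so an aggregate can’t expose a single business), and the ±10% band that counts as “on par.”

```python
from dataclasses import dataclass
from statistics import median

@dataclass
class MerchantMonth:
    merchant_id: str  # hypothetical identifier
    category: str     # e.g. "cafe"
    sales: float      # total card sales for the month

def benchmark(merchant_id, records, min_peers=5, band=0.10):
    """Compare one merchant's monthly sales with anonymous peers in its category.

    Only aggregates are returned; peer IDs never leave this function.
    Returns None when there are too few peers to report safely.
    """
    me = next((r for r in records if r.merchant_id == merchant_id), None)
    if me is None:
        raise KeyError(merchant_id)
    peers = [r.sales for r in records
             if r.category == me.category and r.merchant_id != merchant_id]
    if len(peers) < min_peers:
        return None  # assumed privacy threshold: too few peers to aggregate
    peer_median = median(peers)
    # Share of peers whose sales fall below this merchant's.
    percentile = 100 * sum(s < me.sales for s in peers) / len(peers)
    if me.sales > peer_median * (1 + band):
        verdict = "above"
    elif me.sales < peer_median * (1 - band):
        verdict = "below"
    else:
        verdict = "on par with"
    return {
        "your_sales": me.sales,
        "peer_median": peer_median,
        "percentile": round(percentile),
        "summary": f"Sales were {verdict} the median for businesses in your category.",
    }
```

A cafe with $1,000 in monthly sales, compared against five other cafes whose median is also $1,000, would get back “on par” plus a percentile rank, without ever seeing which cafes it was measured against.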

“Although this corpus of information is being generated by a typical small business, the resources are not ordinarily available for the small business to analyze this information to derive useful information the small business can use to improve their performance,” Mastercard said in its filing.

Having access to performance data and analytics can give a big leg up in growing a small business, said Adam Niec, founder of Rate Tracker, a startup that helps companies track credit card processing fees. Knowing your demographics, performance metrics, and how you stack up in your sector makes it much easier to figure out what works and what doesn’t. Payment data specifically “tells the story” of what resonates, he said.

For example, Niec said that when he sold credit card processing services for another company before launching his own business, “business owners that bought analytics packages were always more successful than the ones that didn’t.”

“Numbers are powerful, and knowing your numbers is a huge strength,” said Niec. “If you don’t, as a business owner, it’s a recipe for disaster in my opinion.”

Mastercard already offers a number of services for businesses, including checkout solutions, partnerships with point-of-sale platforms, and business credit cards. Adding performance analytics could be another value-add to its arsenal of small business and merchant tech to help lure lucrative enterprise customers away from its biggest competitor, Visa.

And with the amount of data that a company like Mastercard has, processing $8.2 trillion in transactions in 2022 from millions of users, it’s no surprise that the company is well positioned to offer some pretty in-depth insights to businesses about themselves and their competition.

As we’ve discussed in Patent Drop before, AI is transforming fintech in a major way, from things like fraud detection to customer retention and sales. With this patent application, Mastercard is putting its stockpile of data in the hands of the merchants themselves, and using AI to help them actually understand it and put it to use.

SPONSORED BY BUTCHERBOX

The Secret To A High-Quality Life?

High-quality food, like the kind provided by ButcherBox.

Let’s face it – you’re never gonna feel your best when you’re filling your body up with empty carbs, fake flavorings, and sugary nothingness. But replace those nasty snacks with high-quality cuts of meat, and tell us you don’t notice a difference.

(Hint: you will, almost immediately).

That’s why we love ButcherBox. Their grass-fed beef, free-range chicken, wild-caught seafood, and other delicious options make it easy to take your meals to the next level.

Use code MARCH100 to get $100 OFF *and* free chicken nuggets for a whole year when you sign up today (new customers only).

#3. Adobe breaks it down

A recent patent by Adobe might be able to help you find Waldo… finally.

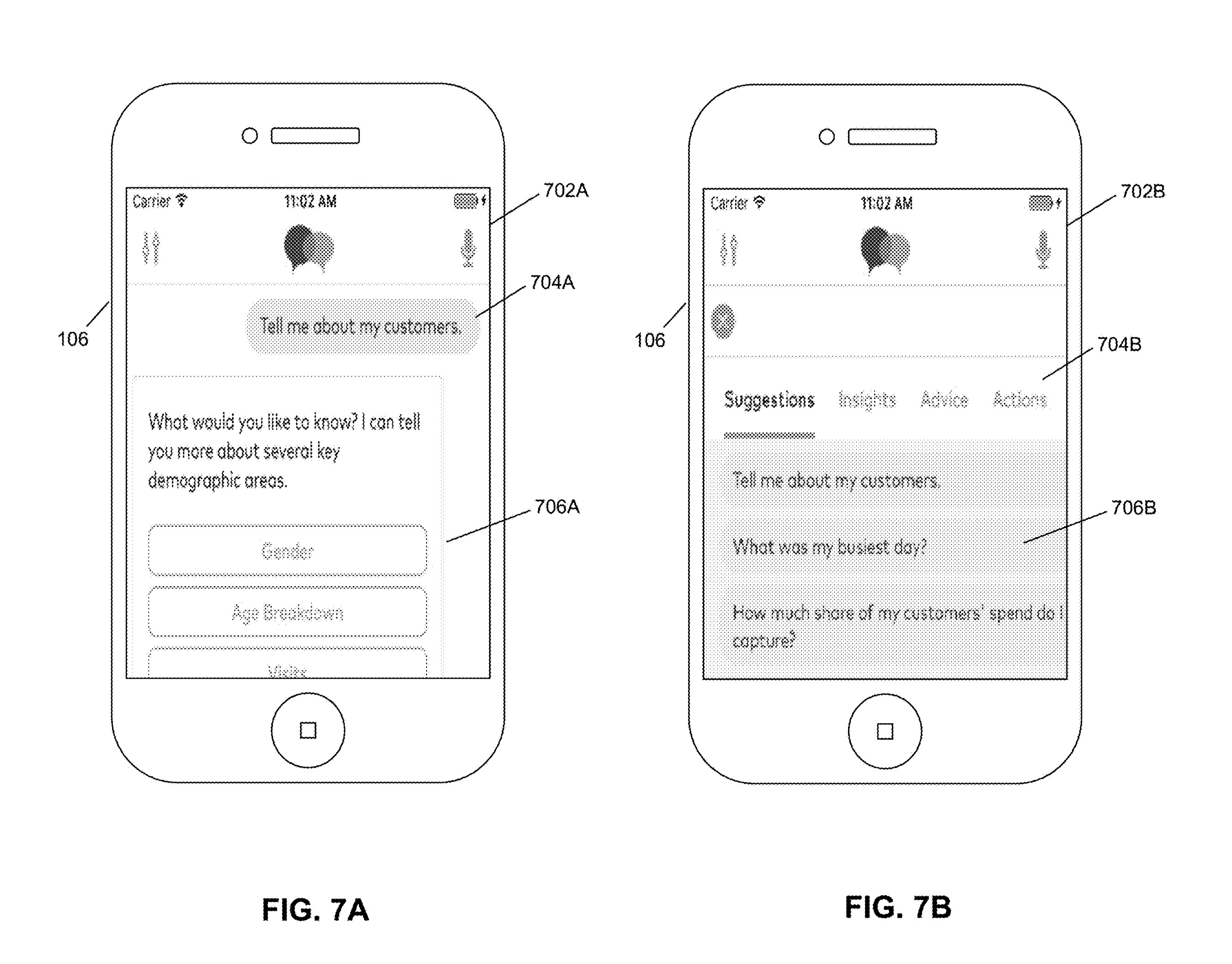

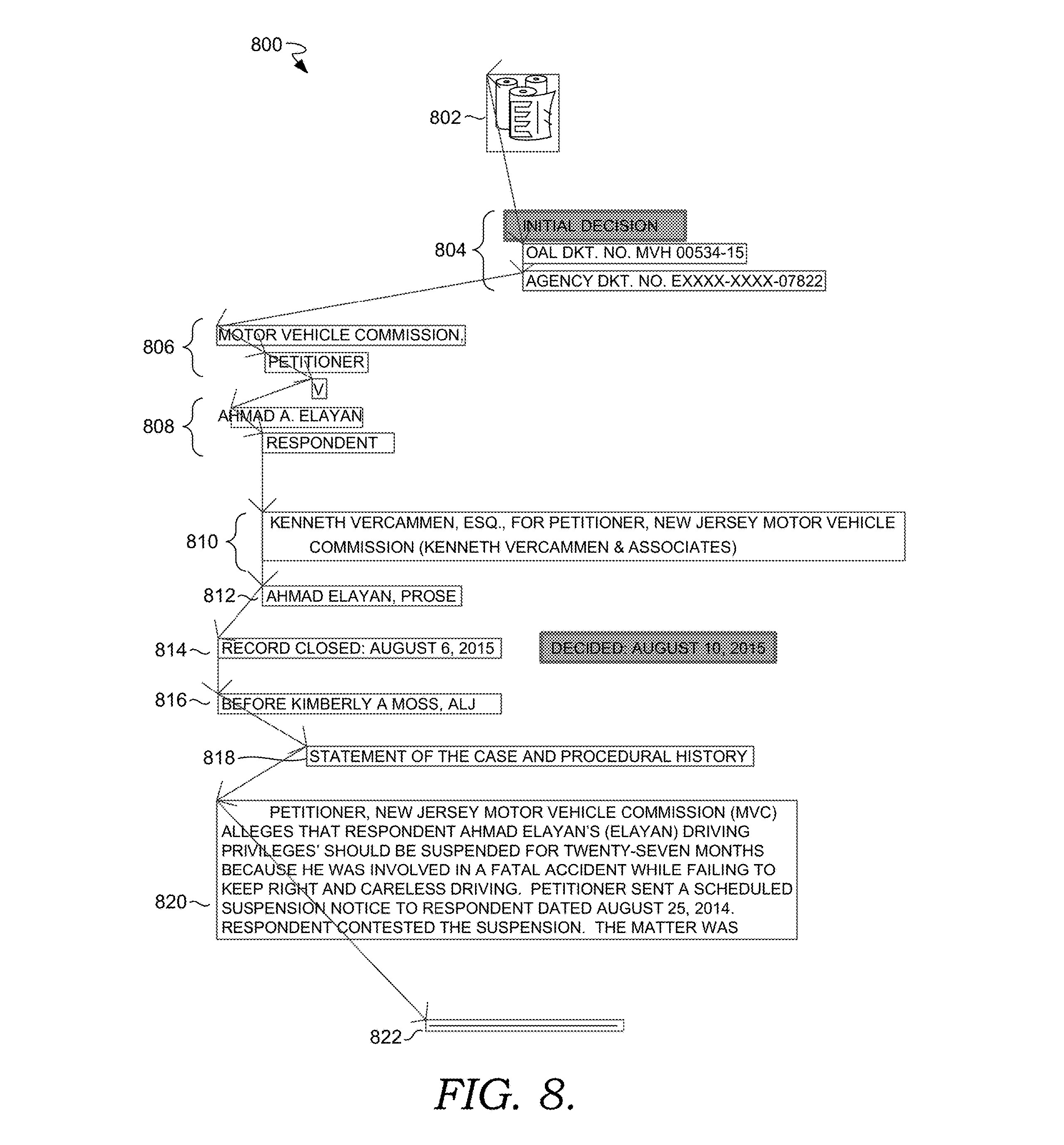

The company is seeking to patent tech that improves machine learning for prediction and document rendering. Essentially, Adobe’s machine learning models would create “content order values or scores” to help it break down complicated documents or images by organizing them “according to the position or rank of objects.”

This tech also makes its system “less likely to misclassify or incorrectly detect instances or the ordering between predicted instances,” meaning it could identify objects or lines of text in a document, and the order they belong in, with far fewer mistakes than traditional algorithms.

For example, if given a complicated image, Adobe’s system would be able to correctly classify whether an object in an image is in the foreground versus the background, and pick out individual objects in a scene. Theoretically, it could do so without missing smaller features in the image – catching details like a blurry cousin in the background of a family picture or a bird on the horizon in a nature panorama – and with fewer mistakes. (Yes, we’re back to birds.)

For a text document with several paragraphs or lines of text, this tech would rank the sentences in those paragraphs and break them down into the order in which they should be read, as well as color code them based on content.
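The hard part of Adobe’s filing is the model that produces the “content order values or scores.” Once those scores exist, though, turning them into a reading order is basically a sort. The Python sketch below assumes the model has already done its job; the `Detection` record, the convention that a lower score means “read earlier,” and the color per content type are all hypothetical, not taken from the filing.

```python
from dataclasses import dataclass

@dataclass
class Detection:
    text: str
    content_type: str   # e.g. "heading", "body", "caption"
    order_score: float  # hypothetical model output: lower = read earlier

# Hypothetical color coding per content type; unknown types fall back to gray.
COLORS = {"heading": "red", "body": "blue", "caption": "green"}

def reading_order(detections):
    """Sort detected blocks by predicted order score and tag each with a color."""
    ordered = sorted(detections, key=lambda d: d.order_score)
    return [(d.text, COLORS.get(d.content_type, "gray")) for d in ordered]
```

Fed a body paragraph scored 0.7, a title scored 0.1, and a caption scored 0.9, it returns the title first, then the body, then the caption, each paired with its color.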

“Machine learning systems and other vision-based systems suffer from a number of disadvantages, particularly in terms of their accuracy,” Adobe said in its filing. “Consequently, machine learning models often misclassify or wrongly detect objects within documents.”

While Adobe’s tech doesn’t sound like that big of a revelation, it’s yet another example of AI being implemented in ways that make day-to-day life easier, automating tedious tasks like object detection in imagery or content organization in documents.

It’s also a sign that AI is quickly replacing older systems by improving efficiency and speed: Adobe noted in the application that this tech improves upon previous object detection tech, using machine learning algorithms to replace “heuristics-based algorithms,” meaning hand-coded rules rather than a model trained on a data set.
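To make “heuristics-based” concrete, here’s a hypothetical example of the kind of hand-coded reading-order rule a learned model would replace. It is not Adobe’s baseline: the `(text, x, y)` box format and the 10-pixel `line_tolerance` are assumptions. The rule groups boxes into rows by vertical position, then reads rows top to bottom and boxes left to right.

```python
def heuristic_reading_order(boxes, line_tolerance=10):
    """Hand-coded reading order for (text, x, y) boxes, with y growing downward.

    Boxes whose top edges sit within `line_tolerance` pixels of a row's
    first box join that row; rows are read top to bottom, left to right.
    """
    rows = []
    for box in sorted(boxes, key=lambda b: b[2]):
        # Join the current row if close enough vertically, else start a new one.
        if rows and abs(box[2] - rows[-1][0][2]) <= line_tolerance:
            rows[-1].append(box)
        else:
            rows.append([box])
    return [text for row in rows for text, _, _ in sorted(row, key=lambda b: b[1])]
```

It works fine on a simple page, but on a two-column layout it reads straight across both columns and interleaves them. Fixing that means someone writing yet another special case by hand, which is the “manually-driven and tedious” work the filing is trying to get rid of.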

These algorithms, Adobe said in its filing, “typically require subject matter experts to help manually define variables … (and) are manually-driven and tedious to write.” AI models, meanwhile, can more easily operate at scale, learn and improve from their mistakes, and run much faster.

It’s no secret that AI has a long way to go and several hurdles to overcome as it develops. But as systems consistently implement better AI in place of aging algorithms – and as the market for this tech continues to swell by billions – improvements like Adobe’s will become more and more commonplace.

Extra Drops

A few other cool ones before you go.

Microsoft wants to whisper in your ear. The company wants to patent “botcasts,” or AI-generated “personalized podcasts,” which basically cut and paste together podcasts based on “contextual relevance” to the user.

Ford wants you to break out of your own car – the safe way. The manufacturer seeks to patent a “window breaker tool” which does exactly what the name says in the case of an emergency.

Apple wants to make a scene. The company is looking to patent tech which essentially creates an extended-reality scene within a physical environment using AR, y’know, in case watching TV was getting a little boring.

What else is new?

Over 1,000 tech leaders signed an open letter calling for companies to pause training of AI models “more powerful” than GPT-4 for six months. Tech companies, meanwhile, have gutted their AI ethics teams.

OpenAI is facing an FTC complaint filed by the Center for AI and Digital Policy. The group claims that the company’s large language models are “biased, deceptive, and a risk to privacy and public safety.”

Alibaba’s logistics unit has started preparing for an IPO, according to Bloomberg. The unit, called Cainiao Network Technology, is worth $20 billion.

Have any comments, tips or suggestions? Drop us a line! Email at admin@patentdrop.xyz or shoot us a DM on Twitter @patentdrop.