TikTok to Start Labeling AI-Created Content

The platform says it plans to identify and label content created by other AI tools, like Adobe’s Firefly and OpenAI’s Dall-E.


AI-enhanced content will come with a warning label on TikTok.

On Thursday, the popular, perhaps soon-to-be-banned short-form video giant announced it would expand its AI labels beyond content made with its own generative AI tools to cover content created with third-party tools as well. It’s a sign that the burgeoning AI world may be evolving toward industry standards.

Muddying the Watermarks

For all the hype surrounding generative AI, it’s a massive problem for digital media platforms and their users: it’s never been easier to flood the zone with quickly made, and often-spammy, content. Google, for instance, has been at war with spammy AI-generated web pages designed to rocket to the top of search results — and may even start charging users for better-quality results. Meanwhile, there’s the backdrop of — gulp — the human race’s biggest election year in history, with political contests all but guaranteed to traffic in AI-generated misinformation and deepfakes.

And so TikTok, perhaps because of constant questions about its central role in young Americans’ media diets, is getting proactive. The platform already labels content created with its own AI tools, but now TikTok says it plans to identify and label content created by other AI tools, like Adobe’s Firefly and OpenAI’s Dall-E. That puts it ahead of the curve among its social media peers, but only slightly:

- TikTok will use Content Credentials, a digital watermark system developed by the Coalition for Content Provenance and Authenticity (an industry group created by Adobe, Microsoft, and others), which embeds a “made with AI” marker in the metadata of AI-generated content. TikTok’s system will automatically identify and label content that carries the Content Credentials mark.

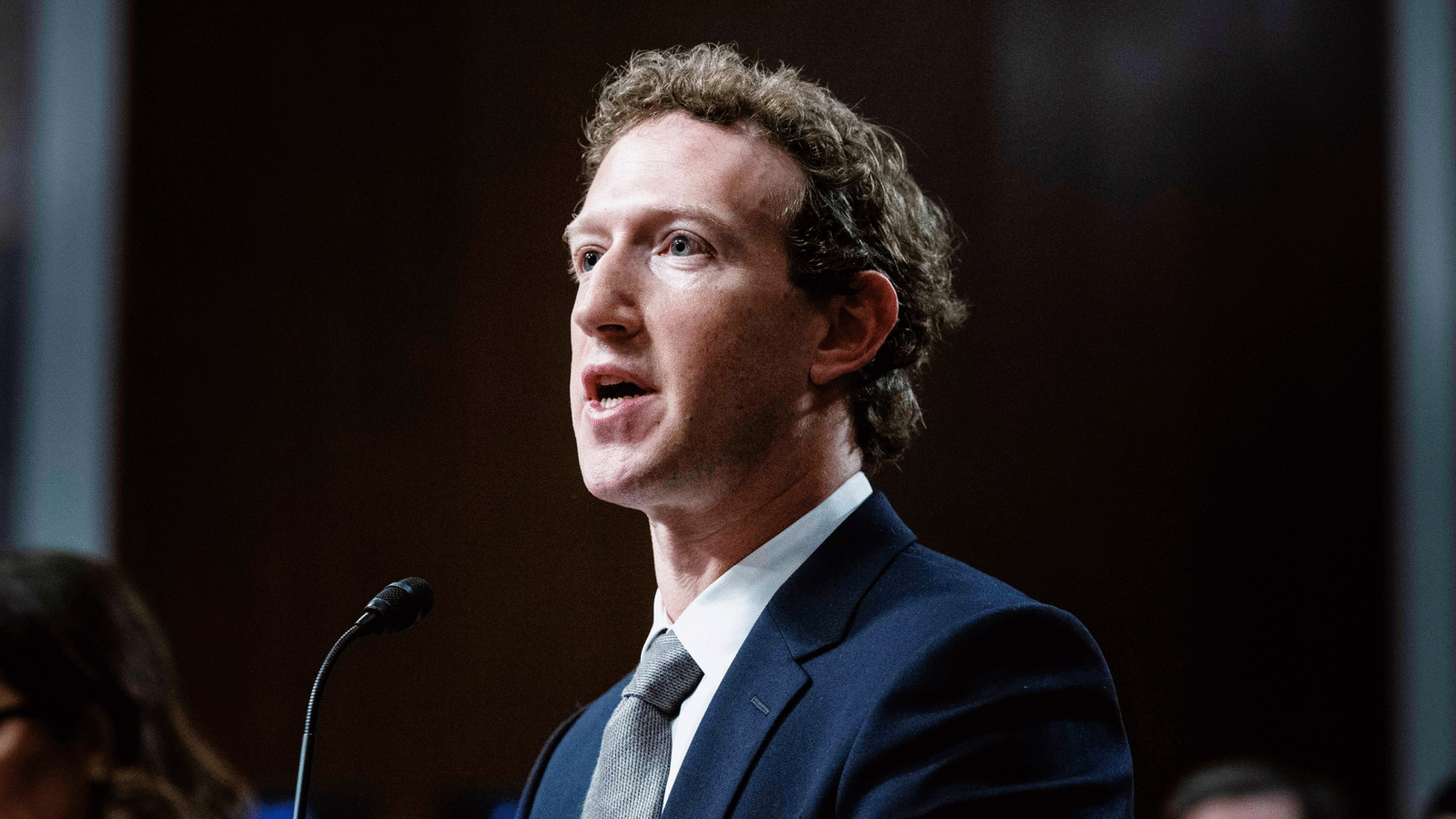

- OpenAI said earlier this week it would start including the Content Credentials mark in the metadata of content its tools create. YouTube and Meta have said they plan to adopt the technology eventually.

The Limit Does Exist: The system has its limitations. First and foremost, it works only if the makers of generative AI tools build the Content Credentials watermark into the metadata of their products. So while OpenAI has opted in, others may not. In March, NBC News reported how easily bad actors can sidestep or remove the watermarks; one obvious method is simply to record an AI-generated video, crop or re-edit it to remove the watermark, and re-upload it. Meanwhile, educators probably can’t wait until watermarks start popping up in essays and homework assignments.