IBM Patent Introduces Context to Fix Hallucinations

“By introducing such context, there is a greater confidence that the output of the artificial intelligence model is more accurate.”

When it comes to AI models, more context is always better.

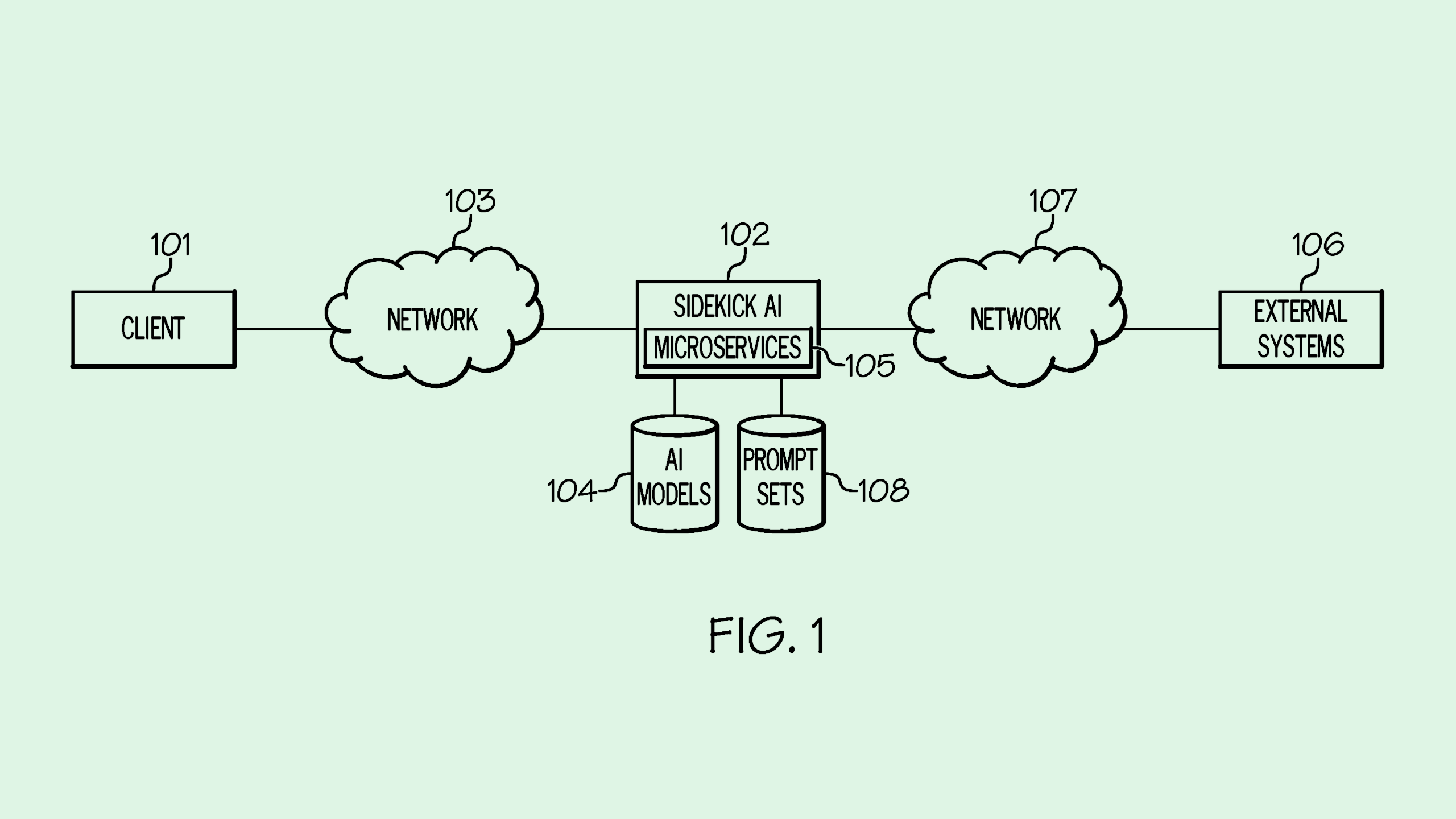

IBM may have found a way to give its agents a hand: The company is seeking to patent a system for “enhancing accuracy and reducing hallucinations” in generative AI outputs using context integration and “multi-AI-model” interaction. As the lengthy title of the patent application implies, the system basically gives AI models additional data to work with as a means of making their outputs more precise and relevant.

“AI hallucinations may occur due to various factors, including overfitting, training data bias/inaccuracy, and high model complexity,” IBM said in the filing. “Unfortunately, there is not currently a means for effectively limiting such misrepresentations.”

When a user interacts with an AI model, the model leverages “context,” which in this case doesn’t simply mean more user-specific data. IBM’s system may leverage other AI model outputs, task-specific agents, documents, previous conversations or an “intermediary” system, such as the user’s personal virtual assistant, to help make responses more accurate.

“By introducing such context, there is a greater confidence that the output of the artificial intelligence model is more accurate,” IBM noted.
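To make the idea concrete, here is a minimal Python sketch of context integration with a second model acting as a reviewer. It is an illustration only, not the method described in IBM's filing: the names `ContextItem`, `build_prompt`, `answer_with_context` and `FALLBACK` are hypothetical, and the two "models" are assumed to be plain functions that take a prompt string and return a string.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical fallback message, used when the reviewer rejects the draft.
FALLBACK = "Unable to verify an answer from the available context."


@dataclass
class ContextItem:
    source: str  # e.g. "document", "prior_conversation", "agent", "other_model"
    text: str


def build_prompt(question: str, context: list[ContextItem], max_chars: int = 2000) -> str:
    """Prepend labeled context snippets to the user's question."""
    lines = []
    used = 0
    for item in context:
        snippet = f"[{item.source}] {item.text}"
        # Stop adding snippets once the (assumed) character budget is spent.
        if used + len(snippet) > max_chars:
            break
        lines.append(snippet)
        used += len(snippet)
    context_block = "\n".join(lines) if lines else "(no context available)"
    return (
        f"Context:\n{context_block}\n\n"
        f"Question: {question}\n"
        "Answer using only the context above."
    )


def answer_with_context(
    question: str,
    context: list[ContextItem],
    primary: Callable[[str], str],
    reviewer: Callable[[str], str],
) -> str:
    """Ask one model for a draft, then ask a second model to check it."""
    prompt = build_prompt(question, context)
    draft = primary(prompt)
    verdict = reviewer(
        f"{prompt}\n\nDraft answer: {draft}\n"
        "Is the draft supported by the context? Reply yes or no."
    )
    # Return the draft only if the reviewer's reply starts with "yes".
    if verdict.strip().lower().startswith("yes"):
        return draft
    return FALLBACK
```

In this sketch, every snippet is tagged with its source so the model can tell a document from a prior conversation, and the draft is returned only when the reviewing model confirms it is supported. A production system would need real model calls and a more robust check than a yes/no reply.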

Though hallucination rates have been on the decline as AI models get more powerful, these inaccuracies still occur, and they can still have major consequences depending on the setting in which the models are used. One such incident occurred with Anthropic’s model Claude in recent weeks, when the firm’s lawyers admitted to using an erroneous citation hallucinated by the chatbot.

IBM isn’t the first tech firm trying to wrap its head around hallucination. Several tech giants have filed patents seeking to limit these slip-ups, including Amazon, Microsoft, and Adobe. However, while it’s possible to mitigate such incidents, it’s likely that they’ll never be fully eliminated. That makes monitoring and additional context even more vital as AI gains more autonomy with the tech industry’s push towards an agentic future.