Adobe Wants to Fix When AI Starts to Drift

Adobe wants to stop AI models from losing their trains of thought.

Adobe wants to know when its models are off-base.

The company filed a patent application for “hallucination prevention for natural language insights.” Adobe’s tech aims to keep hallucinations out of a language model’s output by verifying that responses match the underlying data.

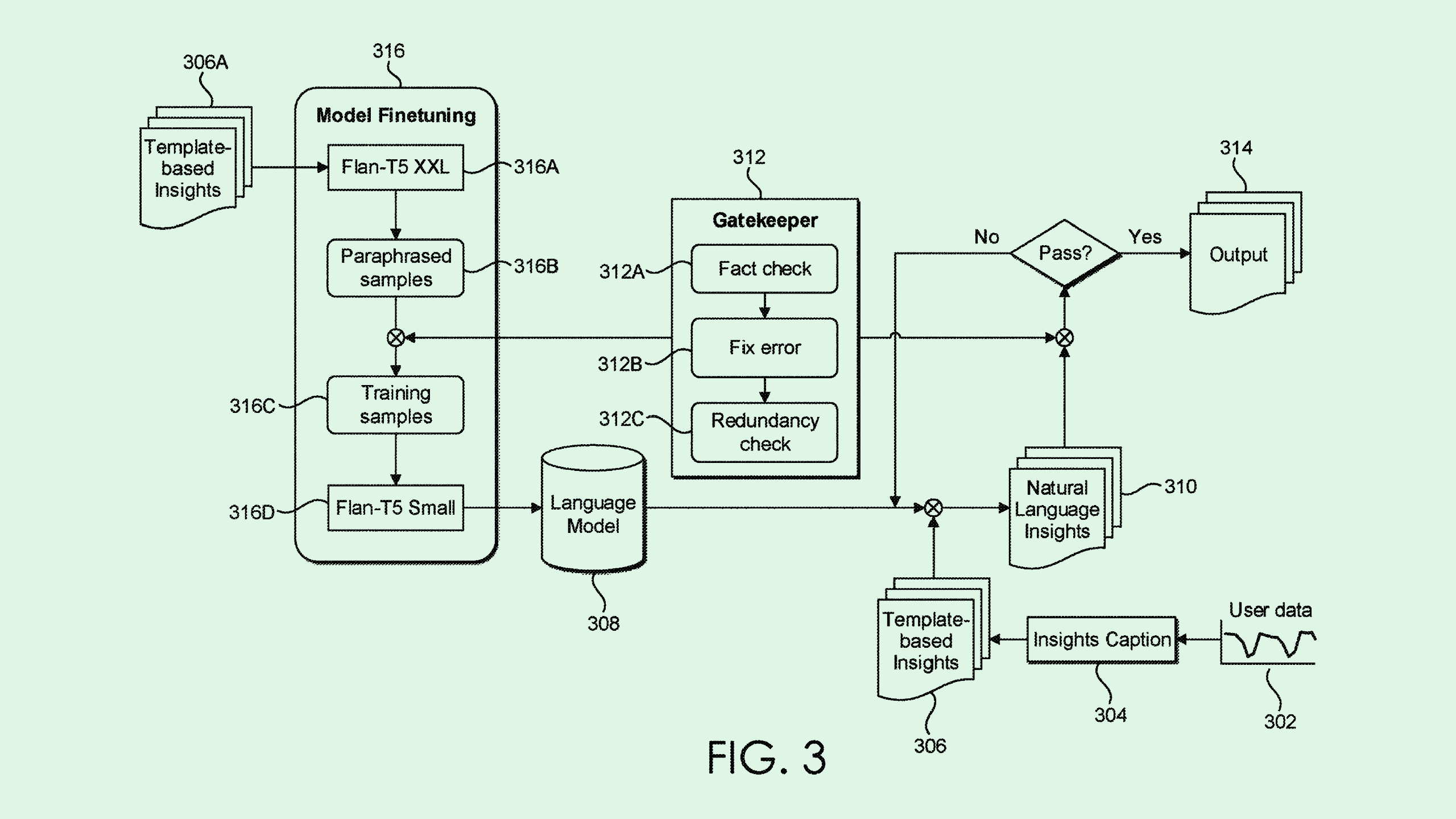

Adobe’s system implements a “hallucination gatekeeper engine,” which puts an extra set of eyes on a generative AI model’s output.

“Hallucination generation by language models is a significant problem in conventional implementations as hallucinations can occur at a nontrivial error rate, thereby decreasing the reliability of conventional language models,” Adobe said in the filing.

Adobe’s tech would likely be put to use in an enterprise context, allowing the system to draw on “stored data regarding the business” to provide an “understanding of the reasons behind various data events, predicting future trends, and recommending possible actions for optimizing outcomes,” the filing notes.

After a user sends in a query, the system would draw on that stored data to generate what it calls “template-based insights,” which are then fed to a language model to be translated into natural language insights.

Finally, to ensure that nothing was lost in translation, the hallucination gatekeeper engine specifically checks that the facts, names, and numerical data mirror those in the template-based insights. If something is amiss, the system prompts the engine to regenerate the output.
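
The check-and-regenerate loop described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the method in Adobe's filing: the `facts` dictionary standing in for a template-based insight, the `rewrite` callable standing in for the language model, and the function names are all hypothetical, and the comparison is a simple string and number match.

```python
import re
from typing import Callable, Optional

# Matches integers, decimals, and percentages such as "12", "3.5", or "12%".
NUMBER = re.compile(r"\d+(?:\.\d+)?%?")


def numbers_in(text: str) -> set[str]:
    """Return every numeric token found in the text."""
    return set(NUMBER.findall(text))


def passes_gatekeeper(facts: dict[str, str], output: str) -> bool:
    """Hypothetical gatekeeper: compare the rewritten text to the template facts."""
    lowered = output.lower()
    # Every fact value from the template must survive in the rewritten text.
    if any(value.lower() not in lowered for value in facts.values()):
        return False
    # The rewritten text must not introduce numbers the template never had.
    allowed: set[str] = set()
    for value in facts.values():
        allowed |= numbers_in(value)
    return numbers_in(output) <= allowed


def generate_checked(
    facts: dict[str, str],
    rewrite: Callable[[dict[str, str], int], str],
    max_attempts: int = 3,
) -> Optional[str]:
    """Ask the (assumed) language model to rewrite until the check passes."""
    for attempt in range(max_attempts):
        candidate = rewrite(facts, attempt)
        if passes_gatekeeper(facts, candidate):
            return candidate
    return None  # No attempt passed the check.


# Stand-in for a language model: the first draft gets the number wrong.
facts = {"entity": "Acme", "change": "12%", "period": "March"}
drafts = [
    "Acme's revenue climbed 21% in March.",
    "Acme's revenue climbed 12% in March.",
]
result = generate_checked(facts, lambda f, attempt: drafts[attempt])
```

Here the first draft is rejected because it changes the figure, and the second is accepted. A production system would need a far more tolerant comparison (paraphrased names, reformatted numbers), which this sketch does not attempt.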

Adobe isn’t the first tech firm to seek a fix for hallucination. Microsoft announced a tool in September called Correction, which attempts to catch and fix hallucinations based on source material. Google introduced similar grounding features earlier this year in Vertex AI, its cloud AI platform.

It makes sense that Adobe would want to target hallucination, especially in enterprise contexts, as this patent suggests. The company’s AI-based creative tools largely target businesses and professional use cases, and it claims that its generative model Firefly is safe for commercial use.

Its patent history tells a similar story, with filings covering AI data visualization, marketing campaign development, and generative image editing tools for professional use. When using AI tools in enterprise operations, it’s vital to ensure that users can actually trust the outputs.

Hallucination, however, is a natural part of any AI model. While it may be reduced with consistent monitoring and emerging techniques, it likely can’t be eliminated entirely. That raises the question of where businesses should draw the line with AI integration, and whether tools like Adobe’s may become more vital as AI deployment continues.