Apple Patent Highlights Upcoming AI Integrations for Vision Pro

Apple Intelligence integrations are reportedly in the works for the company’s debut headset


Apple may use AI to help the Vision Pro eat up less power.

The company is seeking to patent a system that uses machine learning to determine a user’s location in artificial reality based on “object interactions.” Apple’s filing claims that its method uses less computational power than other artificial reality tracking processes by monitoring a user in relation to their environment.

“Existing systems may require utilization of a relatively large amount of processing and/or power resources to detect a user location,” Apple said. “Such systems may require constructing a map of an unknown environment using computationally-intensive techniques while simultaneously tracking a user location.”

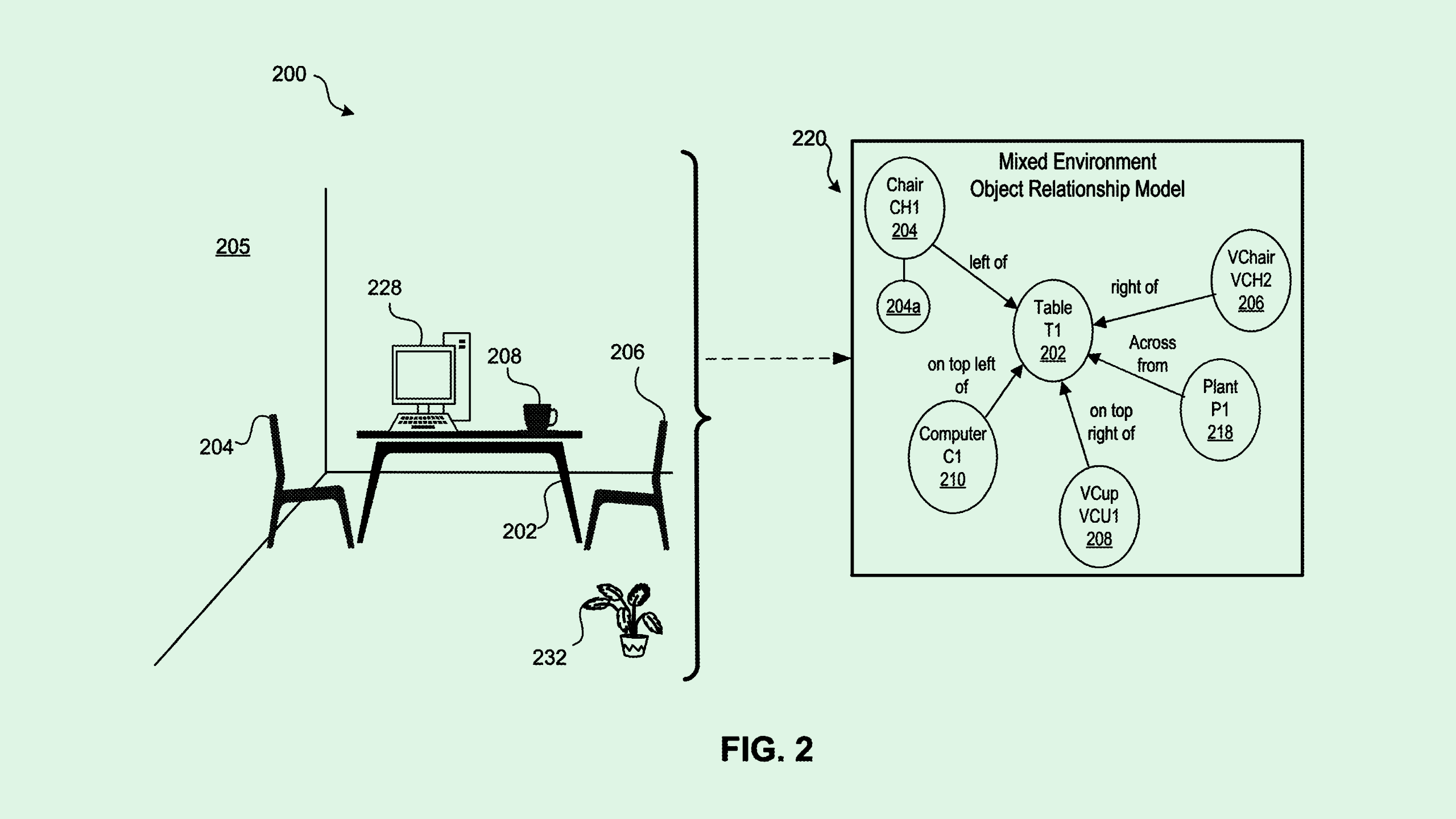

Instead, this tech uses a machine learning algorithm to create an “object relationship model,” which represents the “positional relationship” between objects in an environment. To create this object relationship model, the system collects sensor data to feed to the machine learning algorithm from audio, image and motion sensors embedded within the device.

As the user interacts with the objects in their environment, the device begins to understand their movements, such as orientation, object contact, or gaze direction. Finally, the machine learning algorithm determines a user’s location in relation to the objects in their surroundings.
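To make the idea concrete, here is a minimal sketch in Python of locating a user relative to known objects rather than mapping a whole room. It is not Apple's method: the filing describes a machine learning model, while this stand-in simply averages position estimates. All names (`Interaction`, `build_relationship_model`, `estimate_user_location`) and the premise that sensors yield a user-to-object offset for each interaction are assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

Vec = Tuple[float, float]  # 2D position or displacement, in metres


@dataclass(frozen=True)
class Interaction:
    """One observed interaction (a touch, a gaze fix) with a named object.

    offset_to_object is the user-to-object displacement, which this sketch
    assumes the headset's sensors can supply. That is a hypothetical input.
    """
    object_name: str
    offset_to_object: Vec


def build_relationship_model(positions: Dict[str, Vec], anchor: str) -> Dict[str, Vec]:
    """Express every object's position relative to one anchor object."""
    ax, ay = positions[anchor]
    return {name: (x - ax, y - ay) for name, (x, y) in positions.items()}


def estimate_user_location(model: Dict[str, Vec], interactions: List[Interaction]) -> Vec:
    """Average the user positions implied by each interaction.

    Each interaction implies: user = object position - offset to object.
    A learned model would replace this plain average.
    """
    if not interactions:
        raise ValueError("need at least one interaction")
    estimates = []
    for it in interactions:
        ox, oy = model[it.object_name]
        dx, dy = it.offset_to_object
        estimates.append((ox - dx, oy - dy))
    n = len(estimates)
    return (sum(x for x, _ in estimates) / n, sum(y for _, y in estimates) / n)


# Example: three objects in a room, positions relative to the desk.
model = build_relationship_model(
    {"desk": (2.0, 1.0), "lamp": (3.0, 1.0), "door": (0.0, 4.0)}, anchor="desk"
)
location = estimate_user_location(
    model,
    [Interaction("desk", (-0.5, 1.0)), Interaction("lamp", (0.5, 1.0))],
)
```

The point of the sketch is the cost profile: once the relationships between objects are known, each new interaction is a lookup and a subtraction, with no need to rebuild a map of the environment.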

Understanding a user’s location and surroundings in this manner “can aid in the processing efficiency of displaying an XR scene,” Apple said.

After more than a year of remaining relatively quiet on its AI plans, Apple jumped headfirst into AI at its Worldwide Developers Conference in June, debuting “Apple Intelligence,” its personal AI, for iPhone, iPad, and Mac. It also announced a major partnership with OpenAI to integrate ChatGPT directly into Siri.

AI integrations for the Vision Pro were not included in the slew of announcements this year, but the headset may not be left out of the company’s AI roadmap. Bloomberg reported last week that the company plans to bring Apple Intelligence to the Vision Pro, but is facing the challenge of ensuring that the user interface looks seamless in a mixed reality environment. Patents like this one reveal how machine learning may be integrated into the inner workings of Apple’s mixed reality hardware, too.

However, roughly six months after the company’s debut headset hit shelves, the market’s reaction has been, as expected, “lukewarm,” said Tejas Dessai, research analyst at Global X ETFs. The current version is just a start, setting the stage for a “more comprehensive entry into the post-smartphone ambient computing landscape,” he said. “Overall, we are at least five years away from mixed reality being fully ready for mass-market adoption.”

A lot needs to happen to get to that point, he said, including a broader application ecosystem, cheaper components, and a better form factor. But adding AI into the mix could play a major role in getting consumers to adopt these devices.

“Device-based intelligence will become increasingly important as consumers interact with devices in the coming years, driving permanent behavioral changes in how users engage with applications,” he said.