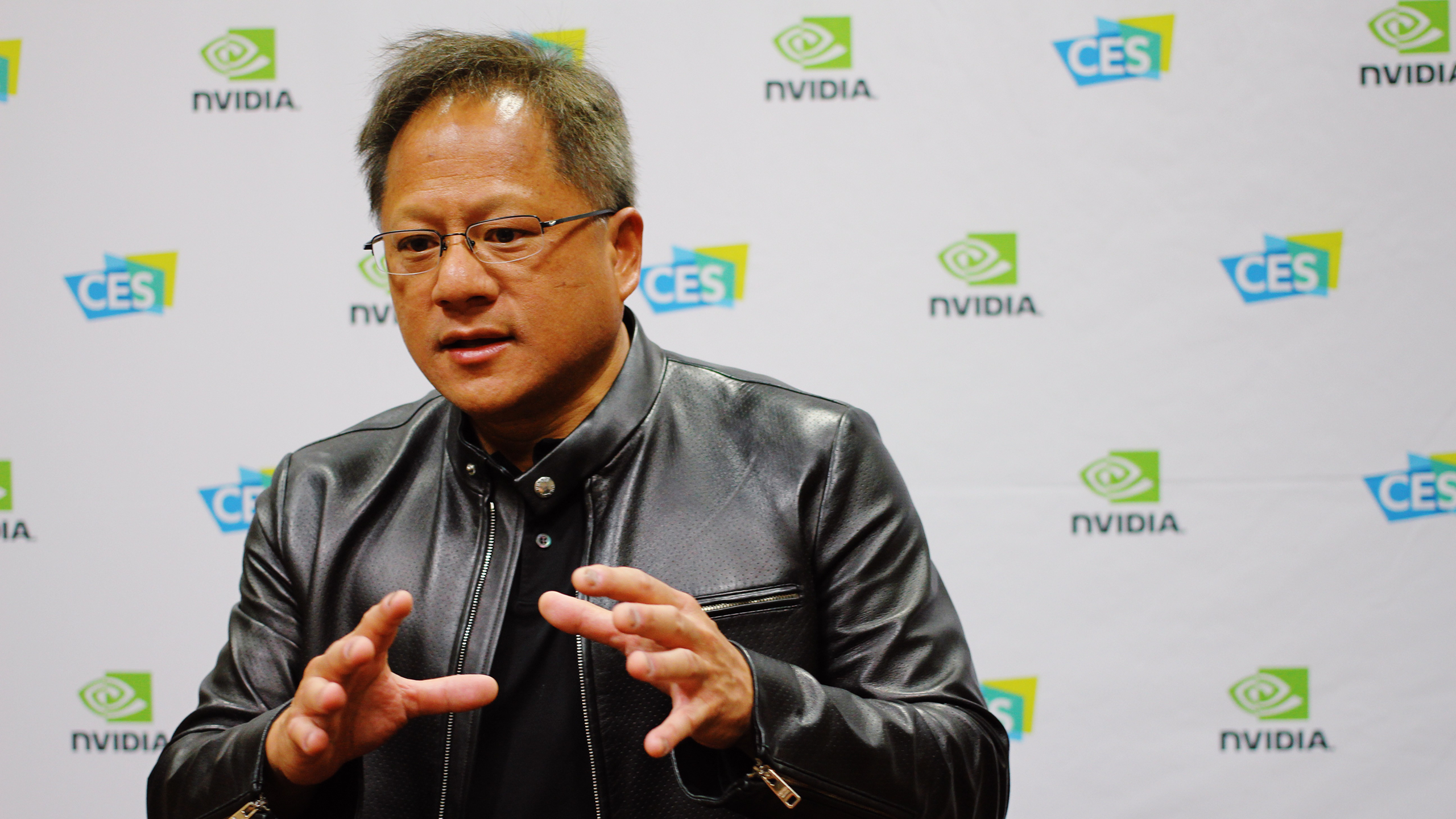

Nvidia CEO Expects AI to Get Cheaper as Computing Power Expands

Jensen Huang seems to think that Sam Altman’s quest for $7 trillion to invest in AI chips is maybe a little overkill.

What’s $6 trillion between friends?

Jensen Huang, CEO of chipmaker Nvidia, told a conference on Monday that the costs of building out AI will fall as computers get smarter and faster. His comments were in response to a Wall Street Journal report that OpenAI’s Sam Altman is on the hunt for $7 trillion (yes, with a “t”) to pour into the semiconductor industry — the ocean in which the generative AI algae bloom is currently taking place.

Chipping Away

ChatGPT may seem to pull sentences out of thin air like a digital Rumpelstiltskin, but it takes a heck of a lot of computing power to a) train the large language models that underpin it, and b) keep the thing running. As popular as ChatGPT and its brethren are, they have not turned into instant money-makers for the companies that build and operate them.

A major part of the cost equation for generative AI is semiconductors, which are pricey bits of hardware. Nvidia’s role as a chipmaker has made it one of the biggest winners of the AI boom, so much so that its market capitalization overtook Amazon’s on Monday for the first time in two decades. Sources told the WSJ that Altman is on a mission to increase global chipmaking capacity, pushing supply up and costs down. Huang, however, said he doesn’t see the need for a massive increase in hardware:

- “You can’t assume just that you will buy more computers. You have to also assume that the computers are going to become faster and therefore the total amount that you need is not as much,” Huang said at the World Government Summit in Dubai, as reported by Bloomberg.

- While Huang may not buy into the idea that Altman needs to spend $7 trillion bulking up the industry, he does think the near term will see perhaps another $1 trillion spent on growing AI infrastructure. “There’s about a trillion dollars’ worth of installed base of data centers. Over the course of the next four or five years, we’ll have $2 trillion worth of data centers that will be powering software around the world,” said Huang.

As Is Our Custom: For Nvidia, which is far and away the chipmaker that has benefited the most from the generative AI boom, there are plenty of new deals to go around. Reuters reported last week that Nvidia will start making custom chips for other firms. Nvidia’s customers include OpenAI and Google, so working with Big Tech companies to inveigle itself deeper into their infrastructure is a smart move. After all, its dominant market position could be eroded if the Altmans of the world turn on the money hose to create new chipmakers.