Oracle’s Patent Could Break AI’s Black Box

The data services company wants to patent a way for neural networks to explain themselves. The tech signals a vital need for AI adoption.


Oracle wants its neural networks to show their work.

The data company filed a patent application for “human-understandable insights” for neural network predictions. Basically, Oracle’s system aims to help users of AI models understand how those models arrived at their answers.

Oracle says current AI models don’t offer users an adequate explanation of how they make their predictions, even if the probability of a correct answer is very high. “A customer may not trust the results generated by the neural network if the customer does not understand what factors the neural network is considering when making a particular prediction,” Oracle noted.

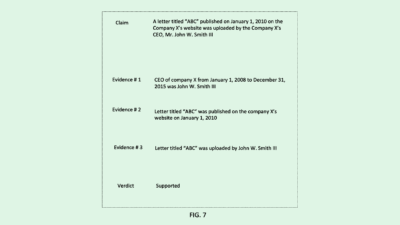

To break it down: Oracle’s system identifies what it calls “human-understandable attributes” that contributed to a neural network’s predictions. It does this by mapping each attribute that goes into an AI model’s decision-making process and assigning each one a “confidence score” based on whether an average person could understand it.
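The patent description summarized here doesn't spell out how contributions or confidence scores are computed, so the following is only a rough sketch of the two ingredients: how much each attribute moved a prediction, and how understandable that attribute is to a layperson. Everything in it is an assumption made for illustration: the toy logistic "fraud model," its weights, the hand-assigned understandability values, and the leave-one-out (occlusion) attribution method, which is a generic explainability technique swapped in here, not Oracle's patented method.

```python
import math
from dataclasses import dataclass

# Hypothetical toy fraud model: a logistic function over four features.
# Weights are invented for illustration; they are not from Oracle's patent.
WEIGHTS = {
    "amount_usd": 0.002,
    "hour_of_day": -0.05,
    "new_recipient": 1.5,
    "embedding_dim_17": 0.8,  # an opaque, learned feature
}
BIAS = -3.0

# Hypothetical "understandability" confidence in [0, 1] for each attribute:
# roughly, how likely an average person is to understand it.
UNDERSTANDABILITY = {
    "amount_usd": 0.95,
    "hour_of_day": 0.90,
    "new_recipient": 0.85,
    "embedding_dim_17": 0.05,
}


def fraud_probability(x: dict[str, float]) -> float:
    """Logistic score of a transaction under the toy model."""
    z = BIAS + sum(WEIGHTS[name] * value for name, value in x.items())
    return 1.0 / (1.0 + math.exp(-z))


@dataclass
class Attribute:
    name: str
    contribution: float       # drop in probability when the feature is reset to baseline
    understandability: float  # hypothetical layperson-understandability score


def explain(x: dict[str, float], baseline: dict[str, float]) -> list[Attribute]:
    """Leave-one-out attribution: reset one feature at a time to a baseline
    value and record how much the predicted probability falls."""
    p = fraud_probability(x)
    attributes = []
    for name in x:
        perturbed = dict(x)
        perturbed[name] = baseline[name]
        contribution = p - fraud_probability(perturbed)
        attributes.append(Attribute(name, contribution, UNDERSTANDABILITY[name]))
    return attributes


# Usage with an illustrative transaction and an illustrative "typical" baseline.
tx = {"amount_usd": 900.0, "hour_of_day": 3.0, "new_recipient": 1.0, "embedding_dim_17": 0.4}
baseline = {"amount_usd": 50.0, "hour_of_day": 14.0, "new_recipient": 0.0, "embedding_dim_17": 0.0}

for attr in explain(tx, baseline):
    print(f"{attr.name:18s} contribution={attr.contribution:+.3f} "
          f"understandability={attr.understandability:.2f}")
```

Keeping the two numbers separate matters because an attribute can weigh heavily on a prediction while meaning almost nothing to a non-expert, as the opaque `embedding_dim_17` feature does in this toy setup.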

For example, an average user wouldn’t typically understand the mathematical formulas an AI model uses to decide whether a credit card transaction is fraudulent. Oracle’s system, however, weeds out the more technical aspects of the model’s decision-making when explaining its answers, leaving behind the attributes a human would understand, such as the source, dollar amount, timing, or recipient of a transaction.
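The filtering step in the fraud example can be sketched in a few lines of Python. This is a hypothetical illustration, not Oracle's implementation: the `Attribution` record, the 0.7 threshold, the top-3 cutoff, and every number in `example` are invented for demonstration, and the understandability scores are simply assumed to have been assigned already.

```python
from typing import NamedTuple


class Attribution(NamedTuple):
    name: str                 # attribute the model used
    contribution: float       # signed effect on the fraud score (hypothetical units)
    understandability: float  # hypothetical 0-1 confidence that a layperson understands it


def human_readable_explanation(
    attributions: list[Attribution],
    threshold: float = 0.7,
    top_k: int = 3,
) -> list[str]:
    # Drop attributes a typical user is unlikely to understand.
    readable = [a for a in attributions if a.understandability >= threshold]
    # Rank the remaining attributes by how strongly they moved the prediction.
    readable.sort(key=lambda a: abs(a.contribution), reverse=True)
    return [
        f"{a.name} {'raised' if a.contribution > 0 else 'lowered'} the fraud score"
        for a in readable[:top_k]
    ]


# Hypothetical attributions for one flagged transaction (illustrative values only).
example = [
    Attribution("transaction amount", 0.31, 0.95),
    Attribution("recipient", 0.22, 0.90),
    Attribution("time of day", 0.08, 0.90),
    Attribution("source account", -0.04, 0.85),
    Attribution("latent feature #17", 0.40, 0.05),
]

for line in human_readable_explanation(example):
    print(line)
# prints:
# transaction amount raised the fraud score
# recipient raised the fraud score
# time of day raised the fraud score
```

Note that the opaque "latent feature #17" is dropped even though it has the largest contribution; that trade-off between faithfulness and readability is inherent to any filter of this kind.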

This process, Oracle noted, essentially breaks down the “black box” of typical machine learning models and neural networks, which “suffer from a lack of explainability.”

As AI weaves itself into every facet of our lives, being able to trust the answers AI models produce is extremely important, said Brian P. Green, director of technology ethics at the Markkula Center for Applied Ethics at Santa Clara University. An AI that can explain itself not only helps its users trust it more but also helps its operators tell when it starts to go haywire.

“If we’re assigning a tool that we don’t fully understand to a job that humans aren’t particularly good at, then we really need to be able to trust it, to make sure we’re … not getting something that is contaminated with bias or is otherwise not as accurate as we would like it to be,” said Green.

But as AI adoption grows, the models themselves keep getting more complex, Green noted. Even as more explainability tools like Oracle’s come to fruition, those increasingly complex models become far harder to explain, even to people who understand the technology, let alone the average, non-technical user.

“It’s kind of an open question, how explainability is going to really translate to normal human beings, when the complexity of the system itself is going to get more and more difficult,” said Green. “Who knows, are even our best machine learning people going to be able to understand this? Or are they eventually even going to be left behind?”

Oracle’s patent also represents just one of many options for explainable AI. Plenty of other toolkits like this are available, said Green: IBM offers its AI Explainability 360 toolkit, several free tools exist on GitHub, and PayPal is even seeking to patent its own AI explainability tool. Even if Oracle does secure this patent, the market is only getting more crowded, which doesn’t bode well for a company that continues to fall behind in the AI race.