Palantir Patent Highlights Need for Zero-Trust Security in AI

A patent from Palantir highlights the importance of data security when AI is used in government and defense contexts.

Palantir wants to make sure its data is in the right hands.

The company is seeking to patent a system for “security-aware large language model training and model management.” Palantir’s tech aims to gatekeep a model’s outputs based on whether or not a user has permission to access them.

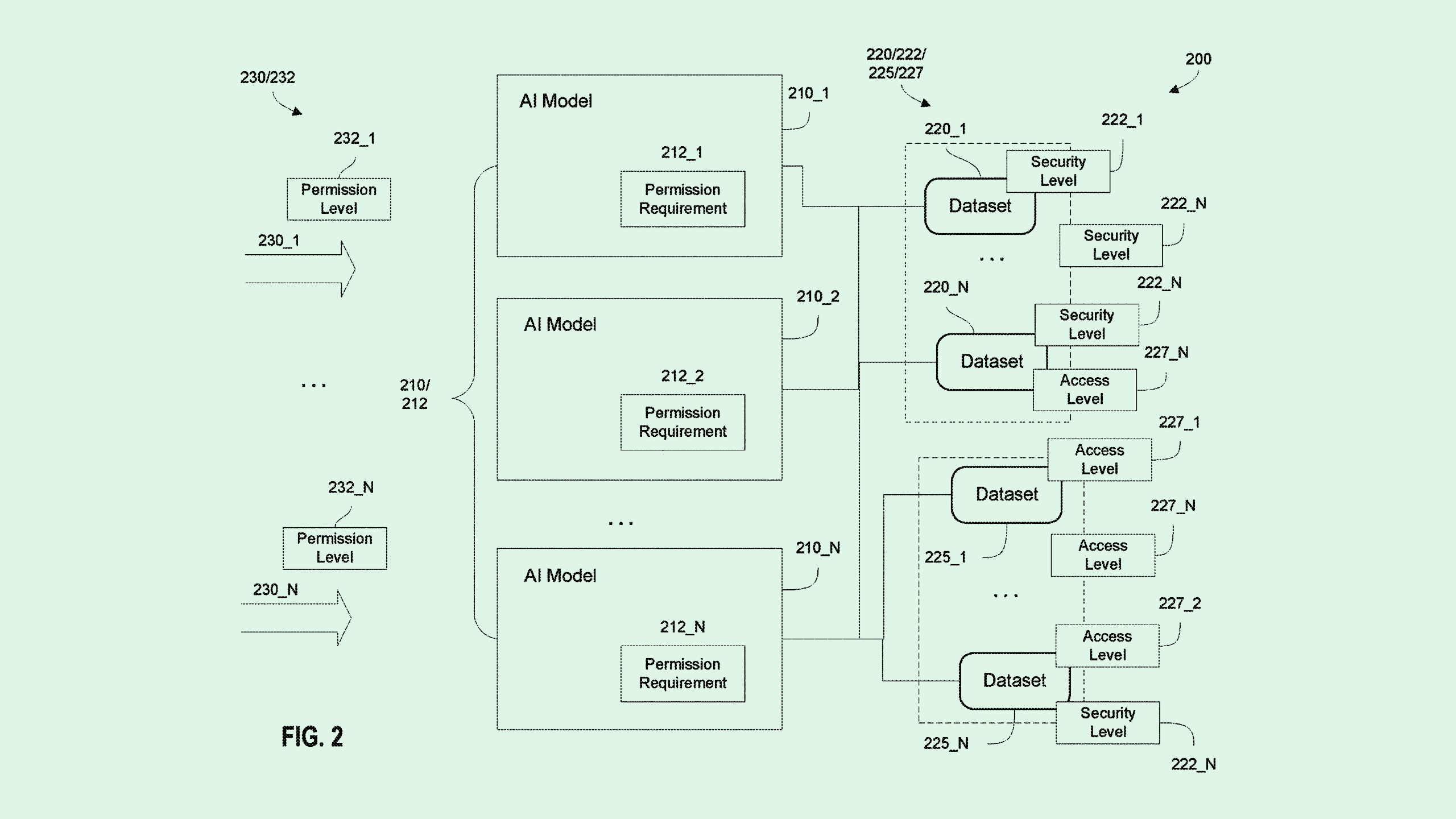

Because machine learning models often require data from lots of sources to learn niche tasks, in some instances, “multiple data sources for the ML model training may have different access and/or use requirements,” the company said in the filing. Given that these models still face data security risks, the use of restricted data may present issues.

To overcome this, when a large language model is trained on data that has certain access permissions, Palantir’s system links those permissions to the model post-training. The training data is segmented into different parts based on those permissions. For example, half of it may be public, and the other half may be restricted to a certain group.

Then, when someone uses the model, the outputs vary based on that user’s specific permissions. For example, if a user was given access to the data or information the model was trained on, they may receive more in-depth outputs than someone who doesn’t have that access. In some cases, the user may not be allowed access at all.
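To make the idea concrete, here is a minimal sketch of permission-gated outputs. It is not Palantir’s implementation, and the filing is not quoted here: every name in it (`Segment`, `ManagedModel`, `access_level`, the permission labels) is hypothetical, and the assumption that access falls into three tiers is ours.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Segment:
    """A slice of training data and the permission needed to use it (hypothetical)."""
    name: str
    required_permission: str


@dataclass(frozen=True)
class ManagedModel:
    """A trained model with the permissions of its training data linked to it."""
    name: str
    segments: tuple


def access_level(model, user_permissions):
    """Compare the user's permissions with those linked to the model.

    Returns "full" if the user may access every training segment,
    "partial" if only some of them, and "none" otherwise.
    """
    allowed = [s for s in model.segments if s.required_permission in user_permissions]
    if len(allowed) == len(model.segments):
        return "full"
    if allowed:
        return "partial"
    return "none"


def respond(model, user_permissions, detailed_answer, summary_answer):
    """Pick which output, if any, a user receives. The answers are passed in
    as plain strings so the sketch stays independent of any real model API."""
    level = access_level(model, user_permissions)
    if level == "full":
        return detailed_answer
    if level == "partial":
        return summary_answer
    return None  # the user is not allowed to use the model at all


# Assumed example: half the training data is public, half is limited to one group.
model = ManagedModel(
    name="demo-model",
    segments=(Segment("open_reports", "public"), Segment("field_notes", "group_a")),
)
```

In this sketch, a user holding both `public` and `group_a` gets the detailed answer, a user holding only `public` gets the summary, and a user holding neither gets nothing.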

It makes sense that Palantir wants to make sure its models are saying the right things to the right people. The company’s primary moneymaker is government and defense contracting. In September, it won a $100 million contract to expand access to its AI targeting tech to more military personnel, adding to the $480 million defense contract it scored in May.

In those contexts, it’s vital to make sure information is in proper hands, said Bob Rogers, Ph.D., the co-founder of BeeKeeperAI and CEO of Oii.ai. “You can definitely see where the [Department of Defense] would want that type of control,” said Rogers. “Just because Bob the Janitor has a login, that login should not give you access to the nuke codes.”

Since AI models often suffer data security slip-ups, tech like this emphasizes the need for “zero-trust” security measures, or those that assume no device or user is trustworthy, said Rogers. This kind of security aims to make the impact of a breach or leak “as small as possible,” he said.

“Think of all the different places that different accesses are going to pop up, and how much information is going to be mingling around from different AIs,” Rogers added. “If they can’t control who has access to what, it’s going to be really tricky.”

Because Palantir’s tech involves the AI model itself “managing its own security model,” Rogers said, it may provide a much more efficient means of monitoring what information goes where without having to keep an eye on every output.

And with the sheer number of AI integrations being pushed in the tech industry and beyond, more innovations like this are likely to come. “It’s very appropriate for companies to be thinking about how to create AI that’s self-regulating,” Rogers said.